Structure (mathematical logic)

In universal algebra and in model theory, a structure consists of a set along with a collection of finitary operations and relations which are defined on it.

Universal algebra studies structures that generalize the algebraic structures such as groups, rings, fields, vector spaces and lattices. The term universal algebra is used for structures with no relation symbols.[1]

Model theory has a different scope that encompasses more arbitrary theories, including foundational structures such as models of set theory. From the model-theoretic point of view, structures are the objects used to define the semantics of first-order logic. In model theory a structure is often called just a model, although it is sometimes disambiguated as a semantic model when one discusses the notion in the more general setting of mathematical models. Logicians sometimes refer to structures as interpretations.[2]

In database theory, structures with no functions are studied as models for relational databases, in the form of relational models.


Definition

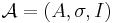

Formally, a structure can be defined as a triple $\mathcal{A} = (A, \sigma, I)$ consisting of a domain $A$, a signature $\sigma$, and an interpretation function $I$ that indicates how the signature is to be interpreted on the domain. To indicate that a structure has a particular signature σ one can refer to it as a σ-structure.
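For finite structures, the triple can be written down directly as data. The following is only a minimal sketch: the class name `Structure`, its field names, and the choice of a dictionary from symbols to arities for the signature are illustrative assumptions, not notation from the text.

```python
from dataclasses import dataclass
from typing import Any, Dict, FrozenSet


@dataclass(frozen=True)
class Structure:
    """A finite structure (A, sigma, I); all names are illustrative."""
    domain: FrozenSet[Any]           # the domain A
    signature: Dict[str, int]        # sigma: symbol -> arity
    interpretation: Dict[str, Any]   # I: symbol -> function or relation

    def is_sigma_structure(self, sigma: Dict[str, int]) -> bool:
        """True if this structure has exactly the signature sigma."""
        return self.signature == sigma


# The set {0, 1} with a single binary operation named "and".
sigma = {"and": 2}
two = Structure(
    domain=frozenset({0, 1}),
    signature=sigma,
    interpretation={"and": lambda x, y: x & y},
)
```

Here `two.is_sigma_structure(sigma)` holds, so `two` would be called a σ-structure for this particular σ.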

Domain

The domain of a structure is an arbitrary set; it is also called the underlying set of the structure, its carrier (especially in universal algebra), or its universe (especially in model theory). In classical first-order logic, the definition of a structure prohibits the empty domain.[3]

Sometimes the notation $\operatorname{dom}(\mathcal{A})$ or $|\mathcal{A}|$ is used for the domain of $\mathcal{A}$, but often no notational distinction is made between a structure and its domain. (I.e. the same symbol $\mathcal{A}$ refers both to the structure and its domain.)[4]

Signature

The signature of a structure consists of a set of function symbols and relation symbols along with a function that ascribes to each symbol s a natural number $n = \operatorname{ar}(s)$, which is called the arity of s because it is the arity of the interpretation of s.

Since the signatures that arise in algebra often contain only function symbols, a signature with no relation symbols is called an algebraic signature. A structure with such a signature is also called an algebra; this should not be confused with the notion of an algebra over a field.

Interpretation function

The interpretation function I of $\mathcal{A}$ assigns functions and relations to the symbols of the signature. Each function symbol f of arity n is assigned an n-ary function $f^{\mathcal{A}} = I(f)$ on the domain. Each relation symbol R of arity n is assigned an n-ary relation $R^{\mathcal{A}} = I(R) \subseteq A^{n}$ on the domain. A nullary function symbol c is called a constant symbol, because its interpretation I(c) can be identified with a constant element of the domain.

When a structure (and hence an interpretation function) is given by context, no notational distinction is made between a symbol s and its interpretation I(s). For example, if f is a binary function symbol of $\mathcal{A}$, one simply writes $f: \mathcal{A}^2 \to \mathcal{A}$ rather than $f^{\mathcal{A}}: |\mathcal{A}|^2 \to |\mathcal{A}|$.
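As a rough illustration of what the interpretation function must provide, the sketch below checks, for a finite domain, that every function symbol is interpreted by a map from $A^n$ into $A$ and every relation symbol by a subset of $A^n$. The function name `check_interpretation` and the symbols `succ`, `c` and `lt` are made up for the example.

```python
from itertools import product


def check_interpretation(domain, func_arity, rel_arity, interp):
    """Check that interp gives each symbol an object of the declared arity."""
    for f, n in func_arity.items():
        for args in product(domain, repeat=n):
            if interp[f](*args) not in domain:    # must map A^n into A
                return False
    for r, n in rel_arity.items():
        for tup in interp[r]:
            if len(tup) != n or any(x not in domain for x in tup):
                return False                       # must be a subset of A^n
    return True


domain = {0, 1, 2}
func_arity = {"succ": 1, "c": 0}      # c is a constant (nullary) symbol
rel_arity = {"lt": 2}
interp = {
    "succ": lambda x: (x + 1) % 3,
    "c": lambda: 0,                    # I(c) is identified with the element 0
    "lt": {(0, 1), (0, 2), (1, 2)},
}
```

Note that a nullary function takes the single empty tuple as input, which is why its interpretation can be identified with one element of the domain.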

Examples

The standard signature σf for fields consists of two binary function symbols + and ×, a unary function symbol −, and the two constant symbols 0 and 1. Thus a structure (algebra) for this signature consists of a set of elements A together with two binary functions, a unary function, and two distinguished elements; but there is no requirement that it satisfy any of the field axioms. The rational numbers Q, the real numbers R and the complex numbers C, like any other field, can be regarded as σf-structures in an obvious way:

$\mathcal{Q} = (Q, \sigma_f, I_{\mathcal{Q}})$, $\mathcal{R} = (R, \sigma_f, I_{\mathcal{R}})$, $\mathcal{C} = (C, \sigma_f, I_{\mathcal{C}})$,

where

- $I_{\mathcal{Q}}(+): Q \times Q \to Q$ is addition of rational numbers,
- $I_{\mathcal{Q}}(\times): Q \times Q \to Q$ is multiplication of rational numbers,
- $I_{\mathcal{Q}}(-): Q \to Q$ is the function that takes each rational number x to −x,
- $I_{\mathcal{Q}}(0) \in Q$ is the number 0, and
- $I_{\mathcal{Q}}(1) \in Q$ is the number 1;

and $I_{\mathcal{R}}$ and $I_{\mathcal{C}}$ are similarly defined.

But the ring Z of integers, which is not a field, is also a σf-structure in the same way. In fact, there is no requirement that any of the field axioms hold in a σf-structure.
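The same point can be checked mechanically on finite examples. The sketch below swaps the infinite ring Z for the integers modulo n (an assumption made so that the check terminates) and tests one field axiom, the existence of multiplicative inverses. The helper names `zn_structure` and `has_inverses` are hypothetical.

```python
def zn_structure(n):
    """Integers modulo n as a structure for the signature {+, *, -, 0, 1}."""
    return {
        "domain": set(range(n)),
        "+": lambda x, y: (x + y) % n,
        "*": lambda x, y: (x * y) % n,
        "-": lambda x: (-x) % n,
        "0": 0,
        "1": 1 % n,
    }


def has_inverses(s):
    """Field axiom: every nonzero element has a multiplicative inverse."""
    dom, mul, zero, one = s["domain"], s["*"], s["0"], s["1"]
    return all(any(mul(x, y) == one for y in dom) for x in dom if x != zero)
```

Both `zn_structure(5)` and `zn_structure(6)` are structures for the field signature, but only the first satisfies this axiom, since 2 has no inverse modulo 6.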

A signature for ordered fields needs an additional binary relation such as < or ≤, and therefore structures for such a signature are not algebras, even though they are of course algebraic structures in the usual, loose sense of the word.

The ordinary signature for set theory includes a single binary relation ∈. A structure for this signature consists of a set of elements and an interpretation of the ∈ relation as a binary relation on these elements.

Induced substructures and closed subsets

$\mathcal{A}$ is called an (induced) substructure of $\mathcal{B}$ if

- $\mathcal{A}$ and $\mathcal{B}$ have the same signature $\sigma(\mathcal{A}) = \sigma(\mathcal{B})$;
- the domain of $\mathcal{A}$ is contained in the domain of $\mathcal{B}$: $|\mathcal{A}| \subseteq |\mathcal{B}|$; and
- the interpretations of all function and relation symbols agree on $|\mathcal{A}|$.

The usual notation for this relation is $\mathcal{A} \subseteq \mathcal{B}$.
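For finite structures the three conditions can be tested directly. This is a sketch under an assumed encoding: a structure is a dictionary with a `domain`, a `funcs` table mapping each function symbol to an (arity, callable) pair, and a `rels` table mapping each relation symbol to an (arity, set of tuples) pair.

```python
from itertools import product


def is_induced_substructure(a, b):
    fa, fb, ra, rb = a["funcs"], b["funcs"], a["rels"], b["rels"]
    # Same signature: same symbols with the same arities.
    if {s: n for s, (n, _) in fa.items()} != {s: n for s, (n, _) in fb.items()}:
        return False
    if {s: n for s, (n, _) in ra.items()} != {s: n for s, (n, _) in rb.items()}:
        return False
    # Domain of a is contained in the domain of b.
    if not a["domain"] <= b["domain"]:
        return False
    # Functions agree on the domain of a.
    for s, (n, f) in fa.items():
        g = fb[s][1]
        if any(f(*t) != g(*t) for t in product(a["domain"], repeat=n)):
            return False
    # Each relation of a is the restriction of the relation of b.
    for s, (n, rel) in ra.items():
        restricted = {t for t in rb[s][1] if set(t) <= a["domain"]}
        if rel != restricted:
            return False
    return True


add4 = lambda x, y: (x + y) % 4
big = {"domain": {0, 1, 2, 3}, "funcs": {"+": (2, add4)},
       "rels": {"<": (2, {(x, y) for x in range(4) for y in range(4) if x < y})}}
small = {"domain": {0, 2}, "funcs": {"+": (2, add4)},
         "rels": {"<": (2, {(0, 2)})}}
```

With these definitions `small` is an induced substructure of `big`; dropping the pair (0, 2) from its relation would break the third condition.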

A subset $B \subseteq |\mathcal{A}|$ of the domain of a structure $\mathcal{A}$ is called closed if it is closed under the functions of $\mathcal{A}$, i.e. if the following condition is satisfied: for every natural number n, every n-ary function symbol f (in the signature of $\mathcal{A}$) and all elements $b_1, b_2, \dots, b_n \in B$, the result of applying f to the n-tuple $b_1 b_2 \dots b_n$ is again an element of B: $f(b_1, b_2, \dots, b_n) \in B$.

For every subset $B \subseteq |\mathcal{A}|$ there is a smallest closed subset of $|\mathcal{A}|$ that contains B. It is called the closed subset generated by B, or the hull of B, and denoted by $\langle B \rangle$ or $\langle B \rangle_{\mathcal{A}}$. The operator $\langle\,\rangle$ is a finitary closure operator on the set of subsets of $|\mathcal{A}|$.
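In a finite structure the hull can be computed by repeatedly applying all functions until nothing new appears. The sketch below assumes functions are given as a dictionary from symbol to (arity, callable); the name `hull` and the examples over the integers modulo 12 are illustrative.

```python
from itertools import product


def hull(generators, funcs):
    """Smallest superset of `generators` closed under all functions.

    funcs: dict name -> (arity, callable). Terminates when the ambient
    domain is finite.
    """
    closed = set(generators)
    while True:
        new = {f(*args)
               for (n, f) in funcs.values()
               for args in product(closed, repeat=n)} - closed
        if not new:
            return closed
        closed |= new


add12 = lambda x, y: (x + y) % 12
with_zero = {"+": (2, add12), "0": (0, lambda: 0)}
with_one = {"+": (2, add12), "0": (0, lambda: 0), "1": (0, lambda: 1)}
```

Constants are nullary functions, so they enter the hull even when the generating set is empty: with the constant 1 in the signature, the hull of the empty set is the whole of the integers modulo 12.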

If $\mathcal{A} = (A, \sigma, I)$ and $B \subseteq A$ is a closed subset, then $(B, \sigma, I')$ is an induced substructure of $\mathcal{A}$, where $I'$ assigns to every symbol of σ the restriction to B of its interpretation in $\mathcal{A}$. Conversely, the domain of an induced substructure is a closed subset.

The closed subsets (or induced substructures) of a structure form a lattice. The meet of two subsets is their intersection. The join of two subsets is the closed subset generated by their union. Universal algebra studies the lattice of substructures of a structure in detail.

Examples

Let σ = {+, ×, −, 0, 1} again be the standard signature for fields. When regarded as σ-structures in the natural way, the rational numbers form a substructure of the real numbers, and the real numbers form a substructure of the complex numbers. The rational numbers are the smallest substructure of the real (or complex) numbers that also satisfies the field axioms.

The set of integers gives an even smaller substructure of the real numbers which is not a field. Indeed, the integers are the substructure of the real numbers generated by the empty set, using this signature. The notion in abstract algebra that corresponds to a substructure of a field, in this signature, is that of a subring, rather than that of a subfield.

The most obvious way to define a graph is a structure with a signature σ consisting of a single binary relation symbol E. The vertices of the graph form the domain of the structure, and for two vertices a and b, $(a, b) \in E$ means that a and b are connected by an edge. In this encoding, the notion of induced substructure is more restrictive than the notion of subgraph. For example, let G be a graph consisting of two vertices connected by an edge, and let H be the graph consisting of the same vertices but no edges. H is a subgraph of G, but not an induced substructure. The notion in graph theory that corresponds to induced substructures is that of induced subgraphs.
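The example with G and H can be replayed in a few lines. The sketch assumes an undirected edge is stored as a symmetric pair of ordered pairs; the vertex names `u` and `v` and both function names are made up.

```python
def is_subgraph(h_vertices, h_edges, g_vertices, g_edges):
    """H is a subgraph of G: fewer (or equal) vertices and edges."""
    return h_vertices <= g_vertices and h_edges <= g_edges


def is_induced(h_vertices, h_edges, g_vertices, g_edges):
    """E in H must equal E in G restricted to the vertices of H."""
    restricted = {(a, b) for (a, b) in g_edges
                  if a in h_vertices and b in h_vertices}
    return h_vertices <= g_vertices and h_edges == restricted


V = {"u", "v"}
G_edges = {("u", "v"), ("v", "u")}   # one undirected edge, stored symmetrically
H_edges = set()                      # same vertices, no edges
```

Here `is_subgraph(V, H_edges, V, G_edges)` holds while `is_induced(V, H_edges, V, G_edges)` does not, matching the distinction drawn above.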

Homomorphisms and embeddings

Homomorphisms

Given two structures $\mathcal{A}$ and $\mathcal{B}$ of the same signature σ, a (σ-)homomorphism from $\mathcal{A}$ to $\mathcal{B}$ is a map $h: |\mathcal{A}| \to |\mathcal{B}|$ which preserves the functions and relations. More precisely:

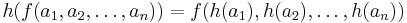

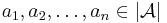

- For every n-ary function symbol f of σ and any elements

, the following equation holds:

, the following equation holds:

-

.

.

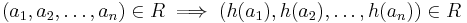

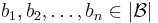

- For every n-ary relation symbol R of σ and any elements

, the following implication holds:

, the following implication holds:

-

.

.

The notation for a homomorphism h from $\mathcal{A}$ to $\mathcal{B}$ is $h: \mathcal{A} \to \mathcal{B}$.
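Both preservation conditions can be checked exhaustively when the structures are finite. In this sketch a map is a dictionary, functions are stored as (arity, callable) pairs and relations as sets of tuples; these encodings and the example structures are assumptions made for illustration.

```python
from itertools import product


def is_homomorphism(h, a, b):
    """h: dict from the domain of a into the domain of b."""
    if set(h) != a["domain"] or not set(h.values()) <= b["domain"]:
        return False
    # h(f^A(a1..an)) == f^B(h(a1)..h(an)) for every function symbol f.
    for name, (n, fa) in a["funcs"].items():
        fb = b["funcs"][name][1]
        for args in product(a["domain"], repeat=n):
            if h[fa(*args)] != fb(*(h[x] for x in args)):
                return False
    # (a1..an) in R^A implies (h(a1)..h(an)) in R^B for every relation symbol R.
    for name, ra in a["rels"].items():
        rb = b["rels"][name]
        if any(tuple(h[x] for x in t) not in rb for t in ra):
            return False
    return True


z4 = {"domain": {0, 1, 2, 3},
      "funcs": {"+": (2, lambda x, y: (x + y) % 4)},
      "rels": {"E": {(0, 1), (1, 2), (2, 3), (3, 0)}}}
z2 = {"domain": {0, 1},
      "funcs": {"+": (2, lambda x, y: (x + y) % 2)},
      "rels": {"E": {(0, 1), (1, 0)}}}
mod2 = {x: x % 2 for x in range(4)}
const0 = {x: 0 for x in range(4)}
```

Reduction modulo 2 preserves both addition and the relation E. The constant map preserves addition but sends the pair (0, 1) to (0, 0), which is not in E, so it is not a homomorphism for this signature.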

For every signature σ there is a concrete category σ-Hom which has σ-structures as objects and σ-homomorphisms as morphisms.

A homomorphism $h: \mathcal{A} \to \mathcal{B}$ is sometimes called strong if for every n-ary relation symbol R and any elements $b_1, b_2, \dots, b_n \in h(|\mathcal{A}|)$ such that $(b_1, b_2, \dots, b_n) \in R^{\mathcal{B}}$, there are $a_1, a_2, \dots, a_n \in |\mathcal{A}|$ such that $(a_1, a_2, \dots, a_n) \in R^{\mathcal{A}}$ and $b_1 = h(a_1), b_2 = h(a_2), \dots, b_n = h(a_n)$. The strong homomorphisms give rise to a subcategory of σ-Hom.
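A possible check for strongness on finite relational structures is sketched below. It assumes the reading in which witnesses are required only for tuples whose entries all lie in the image of h, and it assumes h has already been verified to be a homomorphism. The function name and examples are illustrative.

```python
def is_strong(h, rels_a, rels_b):
    """rels_a, rels_b: dict from relation symbol to a set of tuples."""
    image = set(h.values())
    for name, rb in rels_b.items():
        # All tuples of B that arise as images of tuples related in A.
        images_of_ra = {tuple(h[x] for x in t) for t in rels_a[name]}
        for t in rb:
            if set(t) <= image and t not in images_of_ra:
                return False   # related in B, but no related preimage in A
    return True


# Reduction mod 2 from a directed 4-cycle onto a directed 2-cycle.
mod2 = {x: x % 2 for x in range(4)}
cycle4 = {"E": {(0, 1), (1, 2), (2, 3), (3, 0)}}
cycle2 = {"E": {(0, 1), (1, 0)}}

# Identity map from an edgeless graph H into a one-edge graph G.
ident = {"u": "u", "v": "v"}
H = {"E": set()}
G = {"E": {("u", "v"), ("v", "u")}}
```

The map `mod2` is strong without being one-to-one, while the identity from H to G is one-to-one without being strong.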

Embeddings

A (σ-)homomorphism $h: \mathcal{A} \to \mathcal{B}$ is called a (σ-)embedding if it is one-to-one and

- for every n-ary relation symbol R of σ and any elements $a_1, a_2, \dots, a_n \in |\mathcal{A}|$, the following equivalence holds: $(a_1, a_2, \dots, a_n) \in R^{\mathcal{A}} \iff (h(a_1), h(a_2), \dots, h(a_n)) \in R^{\mathcal{B}}$.

Thus an embedding is the same thing as a strong homomorphism which is one-to-one. The category σ-Emb of σ-structures and σ-embeddings is a concrete subcategory of σ-Hom.
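For a finite relational signature, the embedding condition can be tested by running over all tuples of the source domain. This sketch assumes the map is a dictionary and that an `arities` table lists each relation symbol with its arity; function symbols are left out for brevity.

```python
from itertools import product


def is_embedding(h, arities, rels_a, rels_b):
    """One-to-one, and each relation is both preserved and reflected."""
    if len(set(h.values())) != len(h):             # not one-to-one
        return False
    for name, n in arities.items():
        for t in product(h, repeat=n):             # all n-tuples over the source
            image = tuple(h[x] for x in t)
            if (t in rels_a[name]) != (image in rels_b[name]):
                return False
    return True


arities = {"E": 2}
edge = {"E": {(0, 1), (1, 0)}}
triangle = {"E": {(p, q) for p in "xyz" for q in "xyz" if p != q}}
into_triangle = {0: "x", 1: "y"}

edgeless = {"E": set()}
one_edge = {"E": {("u", "v"), ("v", "u")}}
ident = {"u": "u", "v": "v"}
```

Mapping a single edge onto one side of a triangle is an embedding. The identity from the edgeless graph into the one-edge graph is one-to-one and a homomorphism, but it fails the right-to-left direction of the equivalence.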

Induced substructures correspond to subobjects in σ-Emb. If σ has only function symbols, σ-Emb is the subcategory of monomorphisms of σ-Hom. In this case induced substructures also correspond to subobjects in σ-Hom.

Example

As seen above, in the standard encoding of graphs as structures the induced substructures are precisely the induced subgraphs. However, a homomorphism between graphs is the same thing as a homomorphism between the two structures coding the graph. In the example of the previous section, even though the subgraph H of G is not induced, the identity map id: H → G is a homomorphism. This map is in fact a monomorphism in the category σ-Hom, and therefore H is a subobject of G which is not an induced substructure.

Homomorphism problem

The following problem is known as the homomorphism problem:

- Given two finite structures $\mathcal{A}$ and $\mathcal{B}$ of a finite relational signature, find a homomorphism $h: \mathcal{A} \to \mathcal{B}$ or show that no such homomorphism exists.

Every constraint satisfaction problem (CSP) has a translation into the homomorphism problem.[5] Therefore the complexity of CSP can be studied using the methods of finite model theory.
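A familiar instance of this translation is graph colouring: a k-colouring of a graph is a homomorphism into the complete graph on k vertices. The sketch below solves the homomorphism problem by brute force, which takes time exponential in the size of the first structure and is meant only to make the problem statement concrete. All names are illustrative.

```python
from itertools import product


def find_homomorphism(dom_a, rels_a, dom_b, rels_b):
    """Return some homomorphism as a dict, or None if there is none."""
    elems_a = sorted(dom_a)
    for values in product(sorted(dom_b), repeat=len(elems_a)):
        h = dict(zip(elems_a, values))
        if all(tuple(h[x] for x in t) in rels_b[name]
               for name, ra in rels_a.items() for t in ra):
            return h
    return None


def complete_graph(k):
    """Domain and edge relation of the complete graph on k vertices."""
    dom = set(range(k))
    return dom, {"E": {(p, q) for p in dom for q in dom if p != q}}


c5_dom = set(range(5))
c5 = {"E": {(i, (i + 1) % 5) for i in range(5)}}   # a cycle of length 5
k2_dom, k2 = complete_graph(2)
k3_dom, k3 = complete_graph(3)
```

The 5-cycle admits no homomorphism into `k2` (an odd cycle is not 2-colourable) but does admit one into `k3`.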

Another application is in database theory, where a relational model of a database is essentially the same thing as a relational structure. It turns out that a conjunctive query on a database can be described by another structure in the same signature as the database model. A homomorphism from the structure representing the query to the relational model is the same thing as a solution to the query. This shows that the conjunctive query problem is also equivalent to the homomorphism problem.
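To make the correspondence concrete, the sketch below treats a conjunctive query as a structure whose elements are the query variables and whose relations are the query atoms, then lists every map from it into the database that preserves all relations. The relation name `Parent`, the data, and the function name are invented for the example.

```python
from itertools import product


def homomorphisms(dom_q, rels_q, dom_db, rels_db):
    """Yield every relation-preserving map from the query into the database."""
    variables = sorted(dom_q)
    for values in product(sorted(dom_db), repeat=len(variables)):
        h = dict(zip(variables, values))
        if all(tuple(h[v] for v in atom) in rels_db[name]
               for name, atoms in rels_q.items() for atom in atoms):
            yield h


# Query: find x such that Parent(x, y) and Parent(y, z) for some y, z.
query_dom = {"x", "y", "z"}
query = {"Parent": {("x", "y"), ("y", "z")}}

db_dom = {"ann", "bob", "cat", "dan"}
db = {"Parent": {("ann", "bob"), ("bob", "cat"), ("bob", "dan")}}

solutions = list(homomorphisms(query_dom, query, db_dom, db))
grandparents = {h["x"] for h in solutions}
```

Each element of `solutions` assigns database values to the query variables, and projecting onto `x` gives the answer set of the query.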

Structures and first-order logic

Structures are sometimes referred to as "first-order structures". This is misleading, as nothing in their definition ties them to any specific logic, and in fact they are suitable as semantic objects both for very restricted fragments of first-order logic such as that used in universal algebra, and for second-order logic. In connection with first-order logic and model theory, structures are often called models, even when the question "models of what?" has no obvious answer.

Satisfaction relation

Each first-order structure $\mathcal{M} = (M, \sigma, I)$ has a satisfaction relation $\mathcal{M} \vDash \phi$ defined for all formulas $\phi$ in the language consisting of the language of $\mathcal{M}$ together with a constant symbol for each element of M, which is interpreted as that element. This relation is defined inductively using Tarski's T-schema.

A structure $\mathcal{M}$ is said to be a model of a theory T if the language of $\mathcal{M}$ is the same as the language of T and every sentence in T is satisfied by $\mathcal{M}$. Thus, for example, a "ring" is a structure for the language of rings that satisfies each of the ring axioms, and a model of ZFC set theory is a structure in the language of set theory that satisfies each of the ZFC axioms.
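For a finite relational structure the inductive definition of satisfaction can be run as a recursive evaluator. The sketch below uses an assumed encoding of formulas as nested tuples and supports only a few connectives; it is a toy illustration, not a general-purpose model checker.

```python
def satisfies(struct, formula, env=None):
    """Decide whether struct satisfies formula under the assignment env."""
    env = env or {}
    op = formula[0]
    if op == "rel":                      # ("rel", name, (var, ...))
        _, name, variables = formula
        return tuple(env[v] for v in variables) in struct["rels"][name]
    if op == "not":                      # ("not", body)
        return not satisfies(struct, formula[1], env)
    if op == "or":                       # ("or", left, right)
        return (satisfies(struct, formula[1], env)
                or satisfies(struct, formula[2], env))
    if op == "forall":                   # ("forall", var, body)
        _, var, body = formula
        return all(satisfies(struct, body, {**env, var: a})
                   for a in struct["domain"])
    if op == "exists":                   # ("exists", var, body)
        _, var, body = formula
        return any(satisfies(struct, body, {**env, var: a})
                   for a in struct["domain"])
    raise ValueError(f"unknown connective: {op}")


def is_model(struct, theory):
    """A structure is a model of a theory if it satisfies every sentence."""
    return all(satisfies(struct, sentence) for sentence in theory)


# Theory of undirected loop-free graphs in the signature {E}.
irreflexive = ("forall", "x", ("not", ("rel", "E", ("x", "x"))))
symmetric = ("forall", "x", ("forall", "y",
             ("or", ("not", ("rel", "E", ("x", "y"))),
                    ("rel", "E", ("y", "x")))))
theory = [irreflexive, symmetric]

triangle = {"domain": {0, 1, 2},
            "rels": {"E": {(a, b) for a in range(3) for b in range(3) if a != b}}}
loop = {"domain": {0}, "rels": {"E": {(0, 0)}}}
```

The triangle is a model of this small theory, while the one-vertex graph with a loop is a structure for the same language that is not a model.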

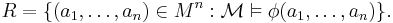

Definable relations

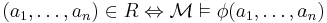

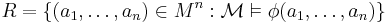

An n-ary relation R on the universe M of a structure  is said to be definable (or explicitly definable, or

is said to be definable (or explicitly definable, or  -definable) if there is a formula φ(x1,...,xn) such that

-definable) if there is a formula φ(x1,...,xn) such that

In other words, R is definable if and only if there is a formula φ such that

is correct.

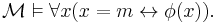

An important special case is the definability of specific elements. An element m of M is definable in  if and only if there is a formula φ(x) such that

if and only if there is a formula φ(x) such that
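On a finite universe, the relation defined by a formula can simply be enumerated. In the sketch below a Python predicate stands in for the formula φ; this is an assumption made for brevity, and the predicate should only use the functions of the structure and quantification over M. The structure is the integers modulo 5 with addition.

```python
from itertools import product


def defined_relation(universe, n, phi):
    """Return the set of n-tuples over the universe that satisfy phi."""
    return {t for t in product(universe, repeat=n) if phi(*t)}


M = set(range(5))
add = lambda x, y: (x + y) % 5

# phi(x): x + x = x. This defines the single element 0.
zero = defined_relation(M, 1, lambda x: add(x, x) == x)

# phi(x, y): x + x = y. This defines the graph of doubling.
doubling = defined_relation(M, 2, lambda x, y: add(x, x) == y)

# phi(x): there exists y with y + y = x. Here every element qualifies.
halves_exist = defined_relation(M, 1, lambda x: any(add(y, y) == x for y in M))
```

The first formula shows that the element 0 is definable in this structure without parameters.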

Definability with parameters

A relation R is said to be definable with parameters (or $|\mathcal{M}|$-definable) if there is a formula φ with parameters from $\mathcal{M}$ such that R is definable using φ. Every element of a structure is definable using the element itself as a parameter.

Implicit definability

Recall from above that an n-ary relation R on the universe M of a structure $\mathcal{M}$ is explicitly definable if there is a formula φ(x1,...,xn) such that

$R = \{(a_1, \dots, a_n) \in M^n : \mathcal{M} \vDash \varphi(a_1, \dots, a_n)\}.$

Here the formula φ used to define a relation R must be over the signature of $\mathcal{M}$ and so φ may not mention R itself, since R is not in the signature of $\mathcal{M}$. If there is a formula φ in the extended language containing the language of $\mathcal{M}$ and a new symbol R, and the relation R is the only relation on $\mathcal{M}$ such that $\mathcal{M} \vDash \varphi$, then R is said to be implicitly definable over $\mathcal{M}$.

There are many examples of implicitly definable relations that are not explicitly definable.

Many-sorted structures

Structures as defined above are sometimes called one-sorted structures to distinguish them from the more general many-sorted structures. A many-sorted structure can have an arbitrary number of domains. The sorts are part of the signature, and they play the role of names for the different domains. Many-sorted signatures also prescribe on which sorts the functions and relations of a many-sorted structure are defined. Therefore the arities of function symbols or relation symbols must be more complicated objects such as tuples of sorts rather than natural numbers.

Vector spaces, for example, can be regarded as two-sorted structures in the following way. The two-sorted signature of vector spaces consists of two sorts V (for vectors) and S (for scalars) and the following function symbols:

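- $+_S$ and $\times_S$ of arity (S, S; S), $-_S$ of arity (S; S), and $0_S$ and $1_S$ of arity (S);
- $+_V$ of arity (V, V; V), $-_V$ of arity (V; V), and $0_V$ of arity (V);
- $\times$ of arity (S, V; V).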

If V is a vector space over a field F, the corresponding two-sorted structure $\mathcal{V}$ consists of the vector domain $|\mathcal{V}|_V = V$, the scalar domain $|\mathcal{V}|_S = F$, and the obvious functions, such as the vector zero $0_V^{\mathcal{V}} = 0 \in |\mathcal{V}|_V$, the scalar zero $0_S^{\mathcal{V}} = 0 \in |\mathcal{V}|_S$, or scalar multiplication $\times^{\mathcal{V}}: |\mathcal{V}|_S \times |\mathcal{V}|_V \to |\mathcal{V}|_V$.
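A small finite instance may help to see how arities become tuples of sorts. The sketch below takes the two-element field as scalars and pairs of scalars as vectors, and records for each function symbol its input sorts and output sort. Only part of the signature is included, and the symbol names (`add_S`, `scale`, and so on) are made up.

```python
from itertools import product

S = {0, 1}                           # scalar domain: the two-element field
V = set(product(S, repeat=2))        # vector domain: pairs of scalars

sorts = {"S": S, "V": V}

# Arity of each function symbol: (tuple of input sorts, output sort).
arity = {
    "add_S": (("S", "S"), "S"),
    "add_V": (("V", "V"), "V"),
    "scale": (("S", "V"), "V"),
    "zero_S": ((), "S"),
    "zero_V": ((), "V"),
}

interp = {
    "add_S": lambda a, b: (a + b) % 2,
    "add_V": lambda u, v: tuple((x + y) % 2 for x, y in zip(u, v)),
    "scale": lambda a, v: tuple((a * x) % 2 for x in v),
    "zero_S": lambda: 0,
    "zero_V": lambda: (0, 0),
}


def well_sorted():
    """Every function maps tuples of its input sorts into its output sort."""
    return all(
        interp[f](*args) in sorts[out]
        for f, (ins, out) in arity.items()
        for args in product(*(sorts[s] for s in ins))
    )
```

The check in `well_sorted` is the many-sorted counterpart of requiring that an n-ary function map $A^n$ into $A$.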

Many-sorted structures are often used as a convenient tool even when they could be avoided with a little effort. But they are rarely defined in a rigorous way, because it is straightforward and tedious (hence unrewarding) to carry out the generalization explicitly.

In most mathematical endeavours, not much attention is paid to the sorts. A many-sorted logic however naturally leads to a type theory. As Bart Jacobs puts it: "A logic is always a logic over a type theory." This emphasis in turn leads to categorical logic because a logic over a type theory categorically corresponds to one ("total") category, capturing the logic, being fibred over another ("base") category, capturing the type theory.[6]

Other generalizations

Partial algebras

Both universal algebra and model theory study classes of (structures or) algebras that are defined by a signature and a set of axioms. In the case of model theory these axioms have the form of first-order sentences. The formalism of universal algebra is much more restrictive; essentially it only allows first-order sentences that have the form of universally quantified equations between terms, e.g. $\forall x\, \forall y\, (x + y = y + x)$. One consequence is that the choice of a signature is more significant in universal algebra than it is in model theory. For example the class of groups, in the signature consisting of the binary function symbol × and the constant symbol 1, is an elementary class, but it is not a variety. Universal algebra solves this problem by adding a unary function symbol $^{-1}$.

In the case of fields this strategy works only for addition. For multiplication it fails because 0 does not have a multiplicative inverse. An ad hoc attempt to deal with this would be to define $0^{-1} = 0$. (This attempt fails, essentially because with this definition $0 \times 0^{-1} = 1$ is not true.) Therefore one is naturally led to allow partial functions, i.e., functions which are defined only on a subset of their domain. However, there are several obvious ways to generalize notions such as substructure, homomorphism and identity.
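The failure of the ad hoc convention is easy to see in a small field. The sketch below works in the integers modulo 5 (chosen only for illustration) and compares a total inverse using the convention that the inverse of 0 is 0 with a partial inverse that is undefined at 0, represented here by `None`.

```python
from typing import Optional

P = 5  # work in the field of integers modulo 5


def inv_total(x: int) -> int:
    """Ad hoc total inverse with the convention that 0 is its own inverse."""
    # For x != 0, x^(P-2) is the inverse of x modulo the prime P; for x = 0 it is 0.
    return pow(x, P - 2, P)


def inv_partial(x: int) -> Optional[int]:
    """Partial inverse: undefined (None) at 0."""
    return None if x == 0 else pow(x, P - 2, P)


# Elements where the equation x * x^{-1} = 1 fails under the total convention.
counterexamples = [x for x in range(P) if (x * inv_total(x)) % P != 1]
```

The list `counterexamples` contains exactly the element 0, which is why the equation cannot be imposed as a universally quantified axiom for the total operation.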

Structures for typed languages

In type theory, there are many sorts of variables, each of which has a type. Types are inductively defined; given two types δ and σ there is also a type σ → δ that represents functions from objects of type σ to objects of type δ. A structure for a typed language (in the ordinary first-order semantics) must include a separate set of objects of each type, and for a function type the structure must have complete information about the function represented by each object of that type.

Higher-order languages

There is more than one possible semantics for higher-order logic, as discussed in the article on second-order logic. When using full higher-order semantics, a structure need only have a universe for objects of type 0, and the T-schema is extended so that a quantifier over a higher-order type is satisfied by the model if and only if it is disquotationally true. When using first-order semantics, an additional sort is added for each higher-order type, as in the case of a many-sorted first-order language.

Structures that are proper classes

In the study of set theory and category theory, it is sometimes useful to consider structures in which the domain of discourse is a proper class instead of a set. These structures are sometimes called class models to distinguish them from the "set models" discussed above. When the domain is a proper class, each function and relation symbol may also be represented by a proper class.

In Bertrand Russell's Principia Mathematica, structures were also allowed to have a proper class as their domain.

Notes

- ^ Some authors refer to structures as "algebras" when generalizing universal algebra to allow relations as well as functions.

- ^ Wilfrid Hodges (2009). "Functional Modelling and Mathematical Models". In Anthonie Meijers. Philosophy of technology and engineering sciences. Handbook of the Philosophy of Science. 9. Elsevier. ISBN 9780444516671.

- ^ This is similar to the definition of a prime number in elementary number theory, which has been carefully chosen so that the irreducible number 1 is not considered prime. The convention that the domain of a structure may not be empty is particularly important in logic, because several common inference rules, notably, universal instantiation, are not sound when empty structures are permitted. A logical system that allows the empty domain is known as a free logic.

- ^ As a consequence of these conventions, the notation $|\mathcal{A}|$ may also be used to refer to the cardinality of the domain of $\mathcal{A}$. In practice this never leads to confusion.

- ^ Jeavons, Peter; David Cohen; Justin Pearson (1998), "Constraints and universal algebra", Annals of Mathematics and Artificial Intelligence 24: 51–67, doi:10.1023/A:1018941030227.

- ^ Jacobs, Bart (1999), Categorical Logic and Type Theory, Elsevier, pp. 1–4

References

- Chang, Chen Chung; Keisler, H. Jerome (1989) [1973], Model Theory, Elsevier, ISBN 978-0-7204-0692-4

- Diestel, Reinhard (2005) [1997], Graph Theory, Graduate Texts in Mathematics, 173 (3rd ed.), Berlin, New York: Springer-Verlag, ISBN 978-3-540-26183-4, http://www.math.uni-hamburg.de/home/diestel/books/graph.theory/

- Ebbinghaus, Heinz-Dieter; Flum, Jörg; Thomas, Wolfgang (1994), Mathematical Logic (2nd ed.), New York: Springer, ISBN 978-0-387-94258-2

- Hinman, P. (2005), Fundamentals of Mathematical Logic, A K Peters, ISBN 978-1-56881-262-5

- Hodges, Wilfrid (1993), Model theory, Cambridge: Cambridge University Press, ISBN 978-0-521-30442-9

- Hodges, Wilfrid (1997), A shorter model theory, Cambridge: Cambridge University Press, ISBN 978-0-521-58713-6

- Marker, David (2002), Model Theory: An Introduction, Berlin, New York: Springer-Verlag, ISBN 978-0-387-98760-6

- Poizat, Bruno (2000), A Course in Model Theory: An Introduction to Contemporary Mathematical Logic, Berlin, New York: Springer-Verlag, ISBN 978-0-387-98655-5

- Rautenberg, Wolfgang (2010), A Concise Introduction to Mathematical Logic (3rd ed.), New York: Springer Science+Business Media, doi:10.1007/978-1-4419-1221-3, ISBN 978-1-4419-1220-6, http://www.springerlink.com/content/978-1-4419-1220-6/

- Rothmaler, Philipp (2000), Introduction to Model Theory, London: CRC Press, ISBN 9789056993139

External links

- Semantics section in Classical Logic (an entry of Stanford Encyclopedia of Philosophy)