Statistical significance

In statistics, a result is called "statistically significant" if it is unlikely to have occurred by chance. The phrase test of significance was coined by Ronald Fisher.[1] As used in statistics, significant does not mean important or meaningful, as it does in everyday speech. Research analysts who focus solely on significant results may miss important response patterns which individually may fall under the threshold set for tests of significance. Many researchers urge that tests of significance should always be accompanied by effect-size statistics, which approximate the size and thus the practical importance of the difference.

The amount of evidence required to accept that an event is unlikely to have arisen by chance is known as the significance level or critical p-value. In traditional Fisherian statistical hypothesis testing, the p-value is the probability of observing data at least as extreme as that observed, given that the null hypothesis is true. If the obtained p-value is small, then either the null hypothesis is false or an unusual event has occurred. P-values do not have any repeat sampling interpretation.
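As an illustration of "data at least as extreme as that observed", the following minimal sketch computes a one-sided p-value for a coin-flip experiment. The scenario (a fair-coin null hypothesis, 9 heads in 10 flips) and the function name `binomial_p_value` are assumptions chosen for the example, not part of the text above.

```python
from math import comb

def binomial_p_value(successes: int, trials: int, p_null: float = 0.5) -> float:
    """One-sided p-value: P(X >= successes) when X ~ Binomial(trials, p_null)."""
    return sum(
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )

# Hypothetical data: 9 heads in 10 flips, null hypothesis "the coin is fair".
p = binomial_p_value(9, 10)
print(f"{p:.4f}")  # 11/1024, prints 0.0107
```

Here "at least as extreme" means 9 or 10 heads, so the p-value is the sum of those two probabilities under the null hypothesis.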

An alternative statistical hypothesis testing framework is the Neyman–Pearson frequentist school, which requires both a null and an alternative hypothesis to be defined and investigates the repeat sampling properties of the procedure. These are the probability that a decision to reject the null hypothesis will be made when it is in fact true and should not have been rejected (a "false positive" or Type I error) and the probability that a decision will be made to accept the null hypothesis when it is in fact false (Type II error). Fisherian p-values are philosophically different from Neyman–Pearson Type I errors. The two are nevertheless often confused, and this confusion is unfortunately propagated by many statistics textbooks.[2]


Use in practice

The significance level is usually denoted by the Greek symbol α (lowercase alpha). Popular levels of significance are 10% (0.1), 5% (0.05), 1% (0.01), 0.5% (0.005), and 0.1% (0.001). If a test of significance gives a p-value lower than the significance level α, the null hypothesis is rejected. Such results are informally referred to as 'statistically significant'. For example, if someone argues that "there's only one chance in a thousand this could have happened by coincidence," a 0.001 level of statistical significance is being implied. The lower the significance level, the stronger the evidence required. Choosing a level of significance is a somewhat arbitrary task, but for many applications a level of 5% is chosen, for no better reason than that it is conventional.[3][4]
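The decision rule can be sketched in a few lines. The p-value used here (0.0107) is a made-up number for illustration, and `is_significant` is a hypothetical helper name.

```python
def is_significant(p_value: float, alpha: float) -> bool:
    """Reject the null hypothesis when the p-value is lower than alpha."""
    return p_value < alpha

p_value = 0.0107  # hypothetical result of some test

# The same p-value judged against the popular levels listed above.
for alpha in (0.1, 0.05, 0.01, 0.005, 0.001):
    verdict = "significant" if is_significant(p_value, alpha) else "not significant"
    print(f"alpha = {alpha}: {verdict}")
```

With this p-value the result counts as significant at the 10% and 5% levels but not at 1%, 0.5% or 0.1%, which shows that the verdict depends on the level chosen and not on the data alone.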

In some situations it is convenient to express the statistical significance as 1 − α. In general, when interpreting a stated significance, one must be careful to note what, precisely, is being tested statistically.

Different levels of α trade off countervailing effects. Smaller levels of α increase confidence in the determination of significance, but run an increased risk of failing to reject a false null hypothesis (a Type II error, or "false negative determination"), and so have less statistical power. The selection of the level α thus inevitably involves a compromise between significance and power, and consequently between the Type I error and the Type II error. More powerful experiments – usually experiments with more subjects or replications – can obviate this choice to an arbitrary degree.
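The trade-off can be made concrete with a rough sketch. It assumes a one-sided z-test with known standard deviation, which is only one of many possible tests. The function name `z_test_power` and the numbers used (a true effect of 0.5, standard deviation 1.0) are illustrative assumptions.

```python
from statistics import NormalDist

def z_test_power(alpha: float, effect: float, sigma: float, n: int) -> float:
    """Power of a one-sided z-test: P(reject H0) when the true mean shift is `effect`.

    Assumes n independent normal observations with known standard deviation sigma.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)   # rejection threshold for the test statistic
    shift = effect * n**0.5 / sigma          # mean of the test statistic under the alternative
    return 1 - std_normal.cdf(z_crit - shift)

# Smaller alpha lowers power at a fixed sample size; more subjects restore it.
for n in (20, 80):
    for alpha in (0.05, 0.01, 0.001):
        power = z_test_power(alpha, effect=0.5, sigma=1.0, n=n)
        print(f"n = {n:3d}, alpha = {alpha}: power = {power:.3f}")
```

The Type II error rate is one minus the power, so each row also shows how the two error rates move in opposite directions as α changes.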

In terms of σ (sigma)

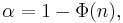

In some fields, for example nuclear and particle physics, it is common to express statistical significance in units of the standard deviation σ of a normal distribution. A statistical significance of "nσ" can be converted into a value of α by use of the cumulative distribution function Φ of the standard normal distribution, through the relation:

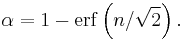

or via use of the error function, where n is the number of standard deviations:

α = 1 − erf(n/√2)

However, values are more easily found from the tables that often appear in textbooks: see standard normal table. The use of σ implicitly assumes a normal distribution of measurement values. For example, if a theory predicts a parameter to have a value of, say, 109 ± 3, and one measures the parameter to be 100, then one might report the measurement as a "3σ deviation" from the theoretical prediction. In terms of α, this statement is equivalent to saying that "assuming the theory is true, the likelihood of obtaining the experimental result by coincidence is 0.27%" (since 1 − erf(3/√2) = 0.0027).
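The conversion from a number of standard deviations to α can be sketched with the Python standard library. The function name `sigma_to_alpha` is an assumption for this example. The code uses `math.erfc`, which equals 1 − erf, because it stays accurate when α is very small.

```python
from math import erfc, sqrt

def sigma_to_alpha(n_sigma: float) -> float:
    """Two-sided alpha for a deviation of n_sigma standard deviations.

    Equivalent to 1 - erf(n_sigma / sqrt(2)), assuming normally distributed values.
    """
    return erfc(n_sigma / sqrt(2))

# The worked example above: prediction 109 ± 3, measurement 100.
n = abs(100 - 109) / 3          # 3.0 standard deviations
print(f"{n:.0f} sigma -> alpha = {sigma_to_alpha(n):.4f}")  # prints 0.0027

# Other commonly quoted thresholds.
for k in (1, 2, 5):
    print(f"{k} sigma -> alpha = {sigma_to_alpha(k):.3g}")
```

For 3σ this reproduces the 0.27% figure quoted in the text.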

Fixed significance levels such as those mentioned above may be regarded as useful in exploratory data analyses. However, modern statistical advice is that, where the outcome of a test is essentially the final outcome of an experiment or other study, the p-value should be quoted explicitly. Importantly, it should be quoted whether or not the p-value is judged to be significant. This allows the maximum information to be transferred from a summary of the study into meta-analyses.

Pitfalls and criticism

The scientific literature contains extensive discussion of the concept of statistical significance, in particular of its potential for misuse, and criticism of its use.

Signal–noise ratio conceptualisation of significance

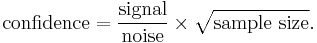

Statistical significance can be considered to be the confidence one has in a given result. In a comparison study, it is dependent on the relative difference between the groups compared, the amount of measurement and the noise associated with the measurement. In other words, the confidence one has in a given result being non-random (i.e. it is not a consequence of chance) depends on the signal-to-noise ratio (SNR) and the sample size.

Expressed mathematically, the confidence that a result is not by random chance is given by the following formula by Sackett:[5]

confidence = (signal / noise) × √(sample size)

For clarity, the above formula is presented in tabular form below.

Dependence of confidence with noise, signal and sample size (tabular form)

| Parameter | Parameter increases | Parameter decreases |
|---|---|---|
| Noise | Confidence decreases | Confidence increases |
| Signal | Confidence increases | Confidence decreases |
| Sample size | Confidence increases | Confidence decreases |

In words, confidence is high if the noise is low and/or the sample size is large and/or the effect size (signal) is large. The confidence of a result (and its associated confidence interval) is not dependent on effect size alone. If the sample size is large and the noise is low, a small effect size can be measured with great confidence. Whether a small effect size is considered important depends on the context of the events compared.
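These dependencies can be checked numerically. The sketch below assumes the usual statement of Sackett's formula, confidence = (signal / noise) × √(sample size). The function name `sackett_confidence` and the input values are illustrative, and the result is a relative score rather than a probability.

```python
from math import sqrt

def sackett_confidence(signal: float, noise: float, sample_size: int) -> float:
    """Relative confidence score: (signal / noise) * sqrt(sample_size)."""
    return signal / noise * sqrt(sample_size)

baseline = sackett_confidence(signal=1.0, noise=1.0, sample_size=100)
print(baseline)                                   # 10.0

# Each row of the table: change one parameter and compare with the baseline.
print(sackett_confidence(1.0, 2.0, 100))          # more noise   -> 5.0  (lower)
print(sackett_confidence(2.0, 1.0, 100))          # more signal  -> 20.0 (higher)
print(sackett_confidence(1.0, 1.0, 400))          # more samples -> 20.0 (higher)

# A small effect measured with low noise and a large sample still scores highly.
print(sackett_confidence(0.1, 0.5, 10_000))
```

Because the sample size enters through a square root, quadrupling the sample size has the same effect on the score as doubling the signal or halving the noise.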

In medicine, small effect sizes (reflected by small increases of risk) are often considered clinically relevant and are frequently used to guide treatment decisions (if there is great confidence in them). Whether a given treatment is considered a worthy endeavour is dependent on the risks, benefits and costs.

See also

- A/B testing

- ABX test

- Fisher's method for combining independent tests of significance

- Reasonable doubt

- Look-elsewhere effect

References

- ^ "Critical tests of this kind may be called tests of significance, and when such tests are available we may discover whether a second sample is or is not significantly different from the first." — R. A. Fisher (1925). Statistical Methods for Research Workers, Edinburgh: Oliver and Boyd, 1925, p.43.

- ^ Raymond Hubbard, M.J. Bayarri, P Values are not Error Probabilities. A working paper that explains the difference between Fisher's evidential p-value and the Neyman–Pearson Type I error rate.

- ^ Stigler S (2008). "Fisher and the 5% level". Chance 21 (4): 12. doi:10.1007/s00144-008-0033-3.

- ^ Fisher RA (1925). Statistical Methods for Research Workers (first ed.). Edinburgh: Oliver & Boyd.

- ^ Sackett DL (October 2001). "Why randomized controlled trials fail but needn't: 2. Failure to employ physiological statistics, or the only formula a clinician-trialist is ever likely to need (or understand!)". CMAJ 165 (9): 1226–37. PMC 81587. PMID 11706914. http://www.cmaj.ca/cgi/pmidlookup?view=long&pmid=11706914.

Further reading

- Ziliak, Stephen, and McCloskey, Deirdre, (2008). The Cult of Statistical Significance: How the Standard Error Costs Us Jobs, Justice, and Lives. Ann Arbor, University of Michigan Press, 2009.

- Thompson, Bruce, (2004). The "significance" crisis in psychology and education. Journal of Socio-Economics, 33, pp. 607–613.

- Chow, Siu L. , (1996). Statistical Significance: Rationale, Validity and Utility, Volume 1 of series Introducing Statistical Methods, Sage Publications Ltd, ISBN 978-0-76195205-3 – argues that statistical significance is useful in certain circumstances.

- Kline, Rex, (2004). Beyond Significance Testing: Reforming Data Analysis Methods in Behavioral Research Washington, DC: American Psychological Association.

External links

- Earliest Uses: The entry on Significance has some historical information.

- Raymond Hubbard, M.J. Bayarri, P Values are not Error Probabilities. A working paper that explains the difference between Fisher's evidential p-value and the Neyman–Pearson Type I error rate.

- The Concept of Statistical Significance Testing – Article by Bruce Thompson of the ERIC Clearinghouse on Assessment and Evaluation, Washington, D.C.

- What does it mean for a result to be “statistically significant”? - An article from the Statistical Assessment Service at George Mason University, Washington, D.C.
