Recursion

Recursion is the process of repeating items in a self-similar way. For instance, when the surfaces of two mirrors are exactly parallel with each other the nested images that occur are a form of infinite recursion. The term has a variety of meanings specific to a variety of disciplines ranging from linguistics to logic. The most common application of recursion is in mathematics and computer science, in which it refers to a method of defining functions in which the function being defined is applied within its own definition. Specifically this defines an infinite number of instances (function values), using a finite expression that for some instances may refer to other instances, but in such a way that no loop or infinite chain of references can occur. The term is also used more generally to describe a process of repeating objects in a self-similar way.

Contents |

Formal definitions of recursion

In mathematics and computer science, a class of objects or methods exhibit recursive behavior when they can be defined by two properties:

- A simple base case (or cases), and

- A set of rules which reduce all other cases toward the base case.

For example, the following is a recursive definition of a person's ancestors:

- One's parents are one's ancestors (base case).

- The parents of one's ancestors are also one's ancestors (recursion step).

The Fibonacci sequence is a classic example of recursion:

- Fib(0) is 0 [base case]

- Fib(1) is 1 [base case]

- For all integers n > 1: Fib(n) is (Fib(n-1) + Fib(n-2)) [recursive definition]

Many mathematical axioms are based upon recursive rules. For example, the formal definition of the natural numbers in set theory follows: 1 is a natural number, and each natural number has a successor, which is also a natural number. By this base case and recursive rule, one can generate the set of all natural numbers

A more humorous illustration goes: "To understand recursion, you must first understand recursion." Or perhaps more accurate is the following, from Andrew Plotkin: "If you already know what recursion is, just remember the answer. Otherwise, find someone who is standing closer to Douglas Hofstadter than you are; then ask him or her what recursion is."

Recursively defined mathematical objects include functions, sets, and especially fractals.

Recursion in language

Linguist Noam Chomsky theorizes that unlimited extension of any natural language is possible using the recursive device of embedding phrases within sentences. Thus, a chatty person may say, "Dorothy, who met the wicked Witch of the West in Munchkin Land where her wicked Witch sister was killed, liquidated her with a pail of water." Clearly, two simple sentences—"Dorothy met the Wicked Witch of the West in Munchkin Land" and "Her sister was killed in Munchkin Land"—can be embedded in a third sentence, "Dorothy liquidated her with a pail of water," to obtain a very verbose sentence.

The idea that recursion is an essential property of human language (as Chomsky suggests) is challenged by linguist Daniel Everett in his work Cultural Constraints on Grammar and Cognition in Pirahã: Another Look at the Design Features of Human Language, in which he hypothesizes that cultural factors made recursion unnecessary in the development of the Pirahã language. This concept, which challenges Chomsky's idea that recursion is the only trait which differentiates human and animal communication, is currently under debate. Andrew Nevins, David Pesetsky and Cilene Rodrigues provide a debate against this proposal.[1] Indirect proof that Everett's ideas are wrong comes from works in neurolinguistics where it appears that all human beings are endowed with the very same neurobiological structures to manage with all and only recursive languages. For a review, see Kaan et al. (2002)

Recursion in linguistics enables 'discrete infinity' by embedding phrases within phrases of the same type in a hierarchical structure. Without recursion, language does not have 'discrete infinity' and cannot embed sentences into infinity (with a 'Russian nesting doll' effect). Everett contests that language must have discrete infinity, and asserts that the Pirahã language - which he claims lacks recursion - is in fact finite. He likens it to the finite game of chess, which has a finite number of moves but is nevertheless very productive, with novel moves being discovered throughout history.

Recursion in plain English

Recursion is the process a procedure goes through when one of the steps of the procedure involves invoking the procedure itself. A procedure that goes through recursion is said to be 'recursive'.

To understand recursion, one must recognize the distinction between a procedure and the running of a procedure. A procedure is a set of steps that are to be taken based on a set of rules. The running of a procedure involves actually following the rules and performing the steps. An analogy might be that a procedure is like a cookbook in that it is the possible steps, while running a procedure is actually preparing the meal.

Recursion is related to, but not the same as, a reference within the specification of a procedure to the execution of some other procedure. For instance, a recipe might refer to cooking vegetables, which is another procedure that in turn requires heating water, and so forth. However, a recursive procedure is special in that (at least) one of its steps calls for a new instance of the very same procedure, like a sourdough recipe calling for some dough left over from the last time the same recipe was made. This of course immediately creates the possibility of an endless loop; recursion can only be properly used in a definition if the step in question is skipped in certain cases so that the procedure can complete, like a sourdough recipe that also tells you how to get some starter dough in case you've never made it before. Even if properly defined, a recursive procedure is not easy for humans to perform, as it requires distinguishing the new from the old (partially executed) invocation of the procedure; this requires some administration of how far various simultaneous instances of the procedures have progressed. For this reason recursive definitions are very rare in everyday situations. An example could be the following procedure to find a way through a maze. Proceed forward until reaching either an exit or a branching point (a dead end is considered a branching point with 0 branches). If the point reached is an exit, terminate. Otherwise try each branch in turn, using the procedure recursively; if every trial fails by reaching only dead ends, return on the path that led to this branching point and report failure. Whether this actually defines a terminating procedure depends on the nature of the maze: it must not allow loops. In any case, executing the procedure requires carefully recording all currently explored branching points, and which of their branches have already been exhaustively tried.

Recursive humor

Recursion is sometimes used humorously in computer science, programming, philosophy, or mathematics textbooks; the following two examples might be entries in a glossary:[2]

- Recursion

- See "Recursion".

- Recursion

- If you still don't get it, see "Recursion".

A variation is found in the index on page 269 of some editions of Kernighan and Ritchie's book "The C Programming Language"; thus the index entry recursively references itself:

- recursion 86, 139, 141, 182, 202, 269

The earliest version of this joke was in "Software Tools" by Kernighan and Plauger, and also appears in "The UNIX Programming Environment" by Kernighan and Pike. It did not appear in the first edition of The C Programming Language.

Another similar joke occurs in Google search engine: when a search for "recursion" is made, the site suggests "Did you mean: Recursion."

Recursive acronyms can also be examples of recursive humor. PHP, for example, stands for "PHP Hypertext Preprocessor" and WINE, for example, stands for "Wine Is Not an Emulator."

Recursion in mathematics

Recursively defined sets

Example: the natural numbers

The canonical example of a recursively defined set is given by the natural numbers:

- 1 is in

- if n is in

, then n + 1 is in

, then n + 1 is in

- The set of natural numbers is the smallest set satisfying the previous two properties.

Example: The set of true reachable propositions

Another interesting example is the set of all "true reachable" propositions in an axiomatic system.

- if a proposition is an axiom, it is a true reachable proposition.

- if a proposition can be obtained from true reachable propositions by means of inference rules, it is a true reachable proposition.

- The set of true reachable propositions is the smallest set of propositions satisfying these conditions.

This set is called 'true reachable propositions' because in non-constructive approaches to the foundations of mathematics, the set of true propositions may be larger than the set recursively constructed from the axioms and rules of inference. See also Gödel's incompleteness theorems.

Functional recursion

A function may be partly defined in terms of itself. A familiar example is the Fibonacci number sequence: F(n) = F(n − 1) + F(n − 2). For such a definition to be useful, it must lead to values which are non-recursively defined, in this case F(0) = 0 and F(1) = 1.

A famous recursive function is the Ackermann function which, unlike the Fibonacci sequence, cannot easily be expressed without recursion.

Proofs involving recursive definitions

Applying the standard technique of proof by cases to recursively-defined sets or functions, as in the preceding sections, yields structural induction, a powerful generalization of mathematical induction which is widely used to derive proofs in mathematical logic and computer science.

Recursive optimization

Dynamic programming is an approach to optimization which restates a multiperiod or multistep optimization problem in recursive form. The key result in dynamic programming is the Bellman equation, which writes the value of the optimization problem at an earlier time (or earlier step) in terms of its value at a later time (or later step).

Recursion in computer science

A common method of simplification is to divide a problem into subproblems of the same type. As a computer programming technique, this is called divide and conquer and is key to the design of many important algorithms. Divide and conquer serves as a top-down approach to problem solving, where problems are solved by solving smaller and smaller instances. A contrary approach is dynamic programming. This approach serves as a bottom-up approach, where problems are solved by solving larger and larger instances, until the desired size is reached.

A classic example of recursion is the definition of the factorial function, given here in C code:

unsigned int factorial(unsigned int n) { if (n <= 1) return 1; else return n * factorial(n-1); }

The function calls itself recursively on a smaller version of the input (n - 1) and multiplies the result of the recursive call by n, until reaching the base case, analogously to the mathematical definition of factorial.

Recursion in computer programming is exemplified when a function is defined in terms of simpler, often smaller versions of itself. The solution to the problem is then devised by combining the solutions obtained from the simpler versions of the problem. One example application of recursion is in parsers for programming languages. The great advantage of recursion is that an infinite set of possible sentences, designs or other data can be defined, parsed or produced by a finite computer program.

Recurrence relations are equations to define one or more sequences recursively. Some specific kinds of recurrence relation can be "solved" to obtain a non-recursive definition.

Use of recursion in an algorithm has both advantages and disadvantages. The main advantage is usually simplicity. The main disadvantage is often that the algorithm may require large amounts of memory if the depth of the recursion is very large.

The recursion theorem

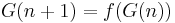

In set theory, this is a theorem guaranteeing that recursively defined functions exist. Given a set X, an element a of X and a function  , the theorem states that there is a unique function

, the theorem states that there is a unique function  (where

(where  denotes the set of natural numbers including zero) such that

denotes the set of natural numbers including zero) such that

for any natural number n.

Proof of uniqueness

Take two functions  and

and  such that:

such that:

where a is an element of X.

It can be proved by mathematical induction that  for all natural numbers n:

for all natural numbers n:

- Base Case:

so the equality holds for

so the equality holds for  .

.

- Inductive Step: Suppose

for some

for some  . Then

. Then

- Hence F(k) = G(k) implies F(k+1) = G(k+1).

By Induction,  for all

for all  .

.

Examples

Some common recurrence relations are:

|

Bibliography

- Johnsonbaugh, Richard (2004). Discrete Mathematics. Prentice Hall. ISBN 0-13-117686-2.

- Hofstadter, Douglas (1999). Gödel, Escher, Bach: an Eternal Golden Braid. Basic Books. ISBN 0-465-02656-7.

- Shoenfield, Joseph R. (2000). Recursion Theory. A K Peters Ltd. ISBN 1-56881-149-7.

- Causey, Robert L. (2001). Logic, Sets, and Recursion. Jones & Bartlett. ISBN 0-7637-1695-2.

- Cori, Rene; Lascar, Daniel; Pelletier, Donald H. (2001). Recursion Theory, Godel's Theorems, Set Theory, Model Theory. Oxford University Press. ISBN 0-19-850050-5.

- Barwise, Jon; Moss, Lawrence S. (1996). Vicious Circles. Stanford Univ Center for the Study of Language and Information. ISBN 0-19-850050-5. - offers a treatment of corecursion.

- Rosen, Kenneth H. (2002). Discrete Mathematics and Its Applications. McGraw-Hill College. ISBN 0-07-293033-0.

- Cormen, Thomas H., Charles E. Leiserson, Ronald L. Rivest, Clifford Stein (2001). Introduction to Algorithms. Mit Pr. ISBN 0-262-03293-7.

- Kernighan, B.; Ritchie, D. (1988). The C programming Language. Prentice Hall. ISBN 0-13-110362-8.

- Stokey, Nancy,; Robert Lucas; Edward Prescott (1989). Recursive Methods in Economic Dynamics. Harvard University Press. ISBN 0674750969.

- Hungerford (1980). Algebra. Springer. ISBN 978-0387905181., first chapter on set theory.

See also

- Church-Turing thesis

- Continuous predicate

- Corecursion

- Course-of-values recursion

- Droste effect

- Fixed point combinator

- Infinite loop

- Infinitism

- Iterated function

- Mise en abyme

- Primitive recursive function

- Reentrant (subroutine)

- Self-reference

- Strange loop

- Tail recursion

- Tupper's self-referential formula

- Turtles all the way down

- Viable System Model

References

- ^ Nevins, Andrew; Pesetsky, David; Rodrigues, Cilene (2009). "Evidence and argumentation: A reply to Everett (2009)" (PDF). Language 85 (3): 671–681. doi:10.1353/lan.0.0140. http://web.mit.edu/linguistics/people/faculty/pesetsky/Nevins_Pesetsky_Rodrigues_2_Evidence_and_Argumentation_Reply_to_Everett.pdf.

- ^ Hunter, David (2011). Essentials of Discrete Mathematics. Jones and Bartlett. pp. 494. http://books.google.com/books?id=kuwhTxCVovQC&dq=recursion+joke&source=gbs_navlinks_s.

External links

- Recursion - tutorial by Alan Gauld

- A Primer on Recursion- contains pointers to recursion in Formal Languages, Linguistics, Math and Computer Science

- Zip Files All The Way Down

- Nevins, Andrew and David Pesetsky and Cilene Rodrigues. Evidence and Argumentation: A Reply to Everett (2009). Language 85.3: 671--681 (2009)

- Kaan, E. – Swaab, T. Y. (2002) “The brain circuitry of syntactic comprehension”, Trends in Cognitive Sciences, vol. 6, Issue 8, 350-356.

,

,