Minor (linear algebra)

In linear algebra, a minor of a matrix A is the determinant of some smaller square matrix, cut down from A by removing one or more of its rows or columns. Minors obtained by removing just one row and one column from square matrices (first minors) are required for calculating matrix cofactors, which in turn are useful for computing both the determinant and inverse of square matrices.


Detailed definition

Let A be an m × n matrix and k an integer with 0 < k ≤ m, and k ≤ n. A k × k minor of A is the determinant of a k × k matrix obtained from A by deleting m − k rows and n − k columns.

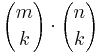

Since there are $\binom{m}{k}$ (read "m choose k") ways to choose k rows from m rows, and $\binom{n}{k}$ ways to choose k columns from n columns, there are a total of $\binom{m}{k}\binom{n}{k}$ minors of size k × k.
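As a small illustration of this count, the Python sketch below enumerates every k × k minor of an arbitrary example matrix by choosing k rows and k columns with `itertools.combinations`. The helper names `det` and `minors` and the example matrix are hypothetical. The determinant is computed by cofactor expansion, which is adequate only for small matrices.

```python
from itertools import combinations
from math import comb

def det(M):
    # Cofactor expansion along the first row; det of the empty matrix is 1.
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

def minors(A, k):
    """Map (rows, cols) -> k x k minor for every choice of k rows and k columns of A.
    Row and column indices are 0-based tuples in increasing order."""
    m, n = len(A), len(A[0])
    return {(rows, cols): det([[A[i][j] for j in cols] for i in rows])
            for rows in combinations(range(m), k)
            for cols in combinations(range(n), k)}

A = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [2, 0, 1, 3]]   # arbitrary 3 x 4 example
print(len(minors(A, 2)), comb(3, 2) * comb(4, 2))   # 18 18
```

Keeping k rows is the same as deleting the other m − k, so enumerating the kept index sets covers every minor exactly once.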

Nomenclature

The (i,j) minor (often denoted $M_{ij}$) of an n × n square matrix A is defined as the determinant of the (n − 1) × (n − 1) matrix formed by removing from A its i-th row and j-th column. An (i,j) minor is also referred to as the (i,j)-th minor, or simply the i,j minor.

$M_{ij}$ is also called the minor of the element $a_{ij}$ of matrix A.

A minor that is formed by removing only one row and column from a square matrix A (such as Mij) is called a first minor. When two rows and columns are removed, this is called a second minor.[1]
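A minimal Python sketch of these definitions: `first_minor` deletes row i and column j (1-based, as in the text), and `minor_after_removing` deletes any sets of rows and columns, so removing two of each gives a second minor. The function names and the example matrix are hypothetical.

```python
def det(M):
    # Cofactor expansion along the first row; det of the empty matrix is 1.
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

def minor_after_removing(A, rows, cols):
    """Determinant of A with the given 1-based rows and columns deleted."""
    return det([[x for c, x in enumerate(row, start=1) if c not in cols]
                for r, row in enumerate(A, start=1) if r not in rows])

def first_minor(A, i, j):
    """M_ij: remove row i and column j (1-based)."""
    return minor_after_removing(A, {i}, {j})

A = [[2, 0, 1],
     [1, 3, 2],
     [4, 1, 5]]   # hypothetical example
print(first_minor(A, 1, 1))                      # 3*5 - 2*1 = 13
print(minor_after_removing(A, {1, 2}, {1, 2}))   # second minor: just a_33 = 5
```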

Cofactors and adjugate or adjoint of a matrix

The (i,j) cofactor $C_{ij}$ of a square matrix A is just $(-1)^{i+j}$ times the corresponding (n − 1) × (n − 1) minor $M_{ij}$:

$$C_{ij} = (-1)^{i+j} M_{ij}$$

The cofactor matrix of A, or matrix of cofactors of A, typically denoted C, is defined as the n × n matrix whose (i,j) entry is the (i,j) cofactor of A.

The transpose of C is called the adjugate or classical adjoint of A. (In modern terminology, the "adjoint" of a matrix most often refers to the corresponding adjoint operator.) Adjugate matrices are used to compute the inverse of square matrices: since $A \operatorname{adj}(A) = \det(A) I$, an invertible matrix satisfies $A^{-1} = \operatorname{adj}(A)/\det(A)$.
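A minimal Python sketch of these definitions, assuming integer entries: it builds the cofactor matrix, transposes it to obtain the adjugate, and divides by the determinant to get the inverse via $A^{-1} = \operatorname{adj}(A)/\det(A)$. Exact arithmetic uses `fractions.Fraction`; the function names are hypothetical.

```python
from fractions import Fraction

def det(M):
    # Cofactor expansion along the first row; det of the empty matrix is 1.
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

def cofactor_matrix(A):
    """Entry (i, j) is C_ij = (-1)^(i+j) M_ij. With 0-based i, j the sign
    (-1)^(i+j) is the same as with the 1-based indices of the text."""
    n = len(A)
    return [[(-1) ** (i + j)
             * det([row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i])
             for j in range(n)]
            for i in range(n)]

def adjugate(A):
    # The adjugate is the transpose of the cofactor matrix.
    return [list(col) for col in zip(*cofactor_matrix(A))]

def inverse(A):
    d = det(A)
    if d == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(x, d) for x in row] for row in adjugate(A)]

A = [[1, 2, 3],
     [0, 1, 4],
     [5, 6, 0]]
print(det(A))        # 1
print(adjugate(A))   # [[-24, 18, 5], [20, -15, -4], [-5, 4, 1]]
```

This costs far more than Gaussian elimination, so it is only sensible for small matrices, which matches the remark on small matrices in the Applications section.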

Example

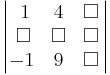

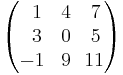

For example, given the matrix

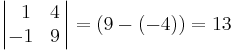

suppose we wish to find the cofactor $C_{23}$. The minor $M_{23}$ is the determinant of the above matrix with row 2 and column 3 removed. For a 3 × 3 matrix $A = (a_{ij})$ this is

$$M_{23} = \begin{vmatrix} a_{11} & a_{12} \\ a_{31} & a_{32} \end{vmatrix} = a_{11}a_{32} - a_{12}a_{31},$$

where the vertical bars around the matrix indicate that the determinant should be taken. Thus $C_{23} = (-1)^{2+3} M_{23} = -M_{23}$.
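The same computation can be sketched in Python. The 3 × 3 matrix below is a hypothetical stand-in, not necessarily the matrix pictured above. The code deletes row 2 and column 3 (1-based, as in the text), takes the 2 × 2 determinant, and applies the sign $(-1)^{2+3}$.

```python
def det2(M):
    # Determinant of a 2 x 2 matrix [[a, b], [c, d]].
    (a, b), (c, d) = M
    return a * d - b * c

def delete_row_col(A, i, j):
    """Copy of A without row i and column j (1-based indices)."""
    return [row[:j - 1] + row[j:] for r, row in enumerate(A, start=1) if r != i]

A = [[1, 4, 7],
     [3, 0, 5],
     [-1, 9, 11]]   # hypothetical example matrix

sub = delete_row_col(A, 2, 3)    # [[1, 4], [-1, 9]]
M23 = det2(sub)                  # 1*9 - 4*(-1) = 13
C23 = (-1) ** (2 + 3) * M23      # -13
print(sub, M23, C23)
```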

Complement

The complement, C, of a minor, M, of a square matrix, A, is the determinant of the matrix obtained from A by removing all the rows and columns associated with M. The complement of the first minor of an element $a_{ij}$ is merely that element.[2]
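As a hedged illustration of this definition, the sketch below computes the complement of the minor of a square matrix built from a given set of rows and columns, by deleting exactly those rows and columns and taking the determinant of what remains. It then checks that the complement of a first minor $M_{ij}$ is the entry $a_{ij}$. Indices are 0-based, the function names and matrix are hypothetical, and the determinant of the empty matrix is taken to be 1 by convention.

```python
def det(M):
    # Cofactor expansion along the first row; det of the empty matrix is 1 by convention.
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

def complement(A, rows, cols):
    """Complement of the minor of square A built from `rows` and `cols` (0-based):
    the determinant of A with all of those rows and columns removed."""
    n = len(A)
    return det([[A[r][c] for c in range(n) if c not in cols]
                for r in range(n) if r not in rows])

A = [[2, 0, 1],
     [1, 3, 2],
     [4, 1, 5]]   # hypothetical example

# The first minor M_ij uses every row except i and every column except j.
i, j = 0, 2
rows = {r for r in range(3) if r != i}
cols = {c for c in range(3) if c != j}
print(complement(A, rows, cols), A[i][j])   # 1 1
```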

Applications

The cofactors feature prominently in Laplace's formula for the expansion of determinants. If all the cofactors of a square matrix A are collected to form a new matrix of the same size and then transposed, one obtains the adjugate of A, which is useful in calculating the inverse of small matrices.
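Laplace's formula expands the determinant along any row i as $\det A = \sum_j a_{ij} C_{ij}$. The recursive Python sketch below implements that expansion (the name `laplace_det` and the example matrix are hypothetical) and checks that every choice of row gives the same value. Its cost grows factorially with n, so it is an illustration rather than a practical algorithm; elimination-based methods are used in practice.

```python
def laplace_det(A, i=0):
    """det(A) by Laplace expansion along row i (0-based): sum_j a_ij * C_ij."""
    n = len(A)
    if n == 1:
        return A[0][0]
    total = 0
    for j in range(n):
        # Minor M_ij: delete row i and column j, then recurse (expanding along row 0).
        sub = [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]
        cofactor = (-1) ** (i + j) * laplace_det(sub)
        total += A[i][j] * cofactor
    return total

A = [[1, 2, 3],
     [0, 1, 4],
     [5, 6, 0]]
print([laplace_det(A, i) for i in range(3)])   # [1, 1, 1]
```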

If an m × n matrix with real entries (or entries from any other field) has rank r, then it has at least one non-zero r × r minor, while all of its larger minors are zero.
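This characterization gives a (very inefficient) way to compute rank: the rank is the largest k for which some k × k minor is non-zero. The brute-force Python sketch below, with hypothetical names and integer entries assumed, checks sizes from the largest down and stops at the first non-zero minor. Practical rank computations use elimination instead.

```python
from itertools import combinations

def det(M):
    # Cofactor expansion along the first row; det of the empty matrix is 1.
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

def rank_by_minors(A):
    """Largest k such that some k x k minor of A is non-zero (0 for a zero matrix).
    Brute force over all minors: exponential cost, for illustration only."""
    m, n = len(A), len(A[0])
    for k in range(min(m, n), 0, -1):
        for rows in combinations(range(m), k):
            for cols in combinations(range(n), k):
                if det([[A[i][j] for j in cols] for i in rows]) != 0:
                    return k
    return 0

A = [[1, 2, 3],
     [2, 4, 6],   # twice the first row
     [1, 0, 1]]
print(rank_by_minors(A))   # 2
```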

We will use the following notation for minors: if A is an m × n matrix, I is a subset of {1,...,m} with k elements and J is a subset of {1,...,n} with k elements, then we write $[\mathbf{A}]_{I,J}$ for the k × k minor of A that corresponds to the rows with index in I and the columns with index in J.

- If I = J, then $[\mathbf{A}]_{I,J}$ is called a principal minor.

- If the matrix that corresponds to a principal minor is a square upper-left submatrix of the larger matrix (i.e., it consists of the entries in rows and columns 1 to k), then the principal minor is called a leading principal minor. An n × n square matrix has n leading principal minors. (Terminology varies: some books use "leading principal minor" for the leading k × k submatrix itself rather than for its determinant.)

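- For Hermitian matrices, the principal minors can be used to test for positive definiteness. By Sylvester's criterion, a Hermitian matrix is positive definite if and only if all of its leading principal minors are positive.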
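The sketch below lists the principal minors of size k (one for each k-element index set I, used for both rows and columns), computes the n leading principal minors, and applies Sylvester's criterion (a Hermitian matrix is positive definite exactly when all its leading principal minors are positive). It is restricted to real symmetric matrices, which are Hermitian; complex Hermitian input is not handled. Names and example matrices are hypothetical.

```python
from itertools import combinations

def det(M):
    # Cofactor expansion along the first row; det of the empty matrix is 1.
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

def principal_minors(A, k):
    """All k x k principal minors [A]_{I,I}, keyed by the 0-based index set I."""
    n = len(A)
    return {I: det([[A[i][j] for j in I] for i in I]) for I in combinations(range(n), k)}

def leading_principal_minors(A):
    """The n leading principal minors: determinants of the upper-left k x k blocks."""
    return [det([row[:k] for row in A[:k]]) for k in range(1, len(A) + 1)]

def is_positive_definite(A):
    """Sylvester's criterion, restricted here to real symmetric matrices."""
    n = len(A)
    if any(A[i][j] != A[j][i] for i in range(n) for j in range(n)):
        raise ValueError("expected a real symmetric matrix")
    return all(d > 0 for d in leading_principal_minors(A))

T = [[2, -1, 0],
     [-1, 2, -1],
     [0, -1, 2]]
print(leading_principal_minors(T))   # [2, 3, 4]
print(is_positive_definite(T))       # True
```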

Both the formula for ordinary matrix multiplication and the Cauchy-Binet formula for the determinant of the product of two matrices are special cases of the following general statement about the minors of a product of two matrices. Suppose that A is an m × n matrix, B is an n × p matrix, I is a subset of {1,...,m} with k elements and J is a subset of {1,...,p} with k elements. Then

$$[\mathbf{AB}]_{I,J} = \sum_{K} [\mathbf{A}]_{I,K} [\mathbf{B}]_{K,J}$$

where the sum extends over all subsets K of {1,...,n} with k elements. Taking k = 1 gives the entry formula for the ordinary product, and taking k = m = p gives the formula for det(AB). This formula is a straightforward extension of the Cauchy-Binet formula.
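The identity can be checked numerically. The Python sketch below (hypothetical names, integer matrices chosen arbitrarily) compares both sides for every pair of k-element index sets I and J. With k = 1 it checks the ordinary entry-by-entry product; for the 2 × 3 and 3 × 2 matrices used here, k = 2 is the Cauchy-Binet case for det(AB).

```python
from itertools import combinations

def det(M):
    # Cofactor expansion along the first row; det of the empty matrix is 1.
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))

def minor(M, I, J):
    """[M]_{I,J}: determinant of the rows I and columns J of M (0-based)."""
    return det([[M[i][j] for j in J] for i in I])

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def formula_holds(A, B, k):
    """Compare [AB]_{I,J} with the sum over K of [A]_{I,K} [B]_{K,J} for all I, J of size k."""
    m, n, p = len(A), len(B), len(B[0])
    AB = matmul(A, B)
    for I in combinations(range(m), k):
        for J in combinations(range(p), k):
            rhs = sum(minor(A, I, K) * minor(B, K, J) for K in combinations(range(n), k))
            if minor(AB, I, J) != rhs:
                return False
    return True

A = [[1, 2, 0],
     [3, -1, 4]]       # 2 x 3
B = [[2, 1],
     [0, 5],
     [-3, 2]]          # 3 x 2
print(formula_holds(A, B, 1), formula_holds(A, B, 2))   # True True
```

When k exceeds n the sum over K is empty and equals 0, which agrees with the fact that AB then has rank at most n.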

Multilinear algebra approach

A more systematic, algebraic treatment of the minor concept is given in multilinear algebra, using the wedge product: the k-minors of a matrix are the entries of the matrix of its k-th exterior power map.

If the columns of a matrix are wedged together k at a time, the k × k minors appear as the components of the resulting k-vectors. For example, the 2 × 2 minors of the matrix

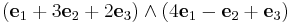

are −13 (from the first two rows), −7 (from the first and last row), and 5 (from the last two rows). Now consider the wedge product

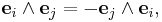

where the two expressions correspond to the two columns of our matrix. Using the properties of the wedge product, namely that it is bilinear and

and

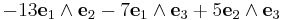

we can simplify this expression to

where the coefficients agree with the minors computed earlier.
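Writing $e_1, e_2, \dots$ for the standard basis vectors, the coefficient of $e_i \wedge e_j$ (i < j) in $u \wedge v$ is $u_i v_j - u_j v_i$, which is exactly the 2 × 2 minor taken from rows i and j of the matrix with columns u and v. The Python sketch below computes these coefficients. The 3 × 2 matrix is an assumption, chosen as one matrix whose 2 × 2 minors are −13, −7 and 5; it may differ from the pictured one.

```python
from itertools import combinations

def wedge2(u, v):
    """Coefficients of u ∧ v on the basis 2-vectors e_i ∧ e_j (i < j), with 1-based keys.
    Bilinearity plus e_i ∧ e_i = 0 and e_j ∧ e_i = -e_i ∧ e_j leave u_i v_j - u_j v_i."""
    return {(i + 1, j + 1): u[i] * v[j] - u[j] * v[i]
            for i, j in combinations(range(len(u)), 2)}

A = [[1, 4],
     [3, -1],
     [2, 1]]   # assumed matrix whose 2 x 2 minors are -13, -7, 5
u = [row[0] for row in A]   # first column
v = [row[1] for row in A]   # second column
print(wedge2(u, v))   # {(1, 2): -13, (1, 3): -7, (2, 3): 5}
```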

References

- [1] Burnside, William Snow; Panton, Arthur William (1886). Theory of Equations: with an Introduction to the Theory of Binary Algebraic Form.

- [2] Jeffreys, Bertha (1999). Methods of Mathematical Physics, p. 135. Cambridge University Press. ISBN 0521664020.
