Methods of computing square roots

There are several methods for calculating the principal square root of a nonnegative real number. For the square roots of a negative or complex number, see below.

Generally, these methods yield approximate results. To get a higher precision for the root, a higher precision for the square is required and a larger number of steps must be calculated.

Rough estimation

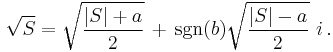

Many of the methods for calculating square roots of a positive real number S require an initial seed value. If the initial value is too far from the actual square root, the calculation will be slowed down. It is therefore useful to have a rough estimate, which may be very inaccurate but easy to calculate. If S ≥ 1, let D be the number of digits to the left of the decimal point. If S < 1, let D be the negative of the number of zeros to the immediate right of the decimal point. Then the rough estimation is this:

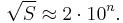

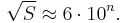

- If D is odd, D = 2n + 1, then use

- If D is even, D = 2n + 2, then use

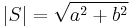

Two and six are used because they approximate the geometric means of the lowest and highest possible values with the given number of digits: ![\sqrt{\sqrt{1 \cdot 10}} = \sqrt[4]{10} \approx 2 \,](/2012-wikipedia_en_all_nopic_01_2012/I/7b4257056ab0c0ac4cf05d7ddd9de0b3.png) and

and ![\sqrt{\sqrt{10 \cdot 100}} = \sqrt[4]{1000} \approx 6 \,.](/2012-wikipedia_en_all_nopic_01_2012/I/e7012e95715ea00acdc4cce1e2a10703.png)

When working in the binary numeral system (as computers do internally), an alternative method is to use  (here D is the number of binary digits).

(here D is the number of binary digits).

Babylonian method

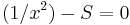

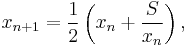

Perhaps the first algorithm used for approximating  is known as the "Babylonian method", named after the Babylonians,[1] or "Heron's method", named after the first-century Greek mathematician Hero of Alexandria who gave the first explicit description of the method.[2] It can be derived from (but predates by many centuries) Newton's method. The basic idea is that if x is an overestimate to the square root of a non-negative real number S then

is known as the "Babylonian method", named after the Babylonians,[1] or "Heron's method", named after the first-century Greek mathematician Hero of Alexandria who gave the first explicit description of the method.[2] It can be derived from (but predates by many centuries) Newton's method. The basic idea is that if x is an overestimate to the square root of a non-negative real number S then  will be an underestimate and so the average of these two numbers may reasonably be expected to provide a better approximation (though the formal proof of that assertion depends on the inequality of arithmetic and geometric means that shows this average is always an overestimate of the square root, as noted in the article on square roots, thus assuring convergence). This is a quadratically convergent algorithm, which means that the number of correct digits of the approximation roughly doubles with each iteration. It proceeds as follows:

will be an underestimate and so the average of these two numbers may reasonably be expected to provide a better approximation (though the formal proof of that assertion depends on the inequality of arithmetic and geometric means that shows this average is always an overestimate of the square root, as noted in the article on square roots, thus assuring convergence). This is a quadratically convergent algorithm, which means that the number of correct digits of the approximation roughly doubles with each iteration. It proceeds as follows:

- Begin with an arbitrary positive starting value x0 (the closer to the actual square root of S, the better).

- Let xn+1 be the average of xn and S / xn (using the arithmetic mean to approximate the geometric mean).

- Repeat step 2 until the desired accuracy is achieved.

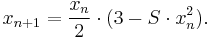

It can also be represented as:

This algorithm works equally well in the p-adic numbers, but cannot be used to identify real square roots with p-adic square roots; it is easy, for example, to construct a sequence of rational numbers by this method that converges to +3 in the reals, but to −3 in the 2-adics.

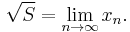

Example

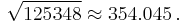

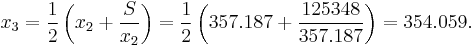

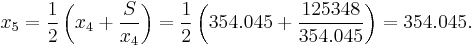

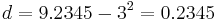

To calculate  , where S = 125348, to 6 significant figures, use the rough estimation method above to get x0. The number of digits in S is D = 6 = 2·2 + 2. So, n = 2 and the rough estimate is

, where S = 125348, to 6 significant figures, use the rough estimation method above to get x0. The number of digits in S is D = 6 = 2·2 + 2. So, n = 2 and the rough estimate is

Therefore,

Convergence

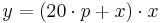

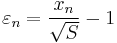

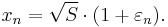

Let the relative error in xn be defined by

and thus

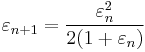

Then it can be shown that

and thus that

and consequently that convergence is assured provided that x0 and S are both positive.

Worst case for convergence

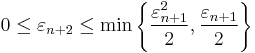

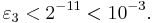

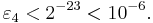

If using the rough estimate above with the Babylonian method, then the worst cases are:

Thus in any case,

Remember that rounding errors will slow the convergence. It is recommended to keep at least one extra digit beyond the desired accuracy of the xn being calculated to minimize round off error.

Exponential identity

Pocket calculators typically implement good routines to compute the exponential function and the natural logarithm, and then compute the square root of S using the identity

The same identity is used when computing square roots with logarithm tables or slide rules.

High/low method

A simple way to compute a square root is the high/low method, similar to the bisection method. This method involves guessing a number based on known squares, then checking if its square is too high or too low and adjusting accordingly.

To find the square root of 20, first note that 42 is 16, and that 52 is 25. As 16 < 20 < 25, the square root of 20 must be between 4 and 5. Guessing 4.5 yields 20.25 and is too high. The next step is to guess 4.4, yielding 19.36 and is too low. Therefore, as before, the square root of 20 must be in between 4.4 and 4.5. This pattern is continued until the desired number of decimal places is achieved. For example:

- 4.452 = 19.8025 (too low)

- 4.472 = 19.9809 (too low, but close)

- 4.482 = 20.0704 (too high)

- 4.4752 = 20.025625 (too high)

- 4.4732 = 20.007729 (too high, but close)

- 4.4722 = 19.998784 (too low)

Now it is known that the square root of 20 is between 4.472 and 4.473, so the decimal representation begins with 4.472...

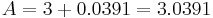

Bakhshali approximation

This method for finding an approximation to a square root was described in an ancient Indian mathematical manuscript called the Bakhshali manuscript. It is equivalent to two iterations of the Babylonian method beginning with N. The original presentation goes as follows: To calculate  , let N2 be the nearest perfect square to S. Then, calculate:

, let N2 be the nearest perfect square to S. Then, calculate:

This can be also written as:

Example

Find

Digit-by-digit calculation

This is a method to find each digit of the square root in a sequence. It is slower than the Babylonian method (if you have a calculator that can divide in one operation), but it has several advantages:

- It can be easier for manual calculations.

- Every digit of the root found is known to be correct, i.e., it does not have to be changed later.

- If the square root has an expansion that terminates, the algorithm terminates after the last digit is found. Thus, it can be used to check whether a given integer is a square number.

Napier's bones include an aid for the execution of this algorithm. The shifting nth-root algorithm is a generalization of this method.

The algorithm works for any base, and naturally, the way it proceeds depends on the base chosen.

Decimal (base 10)

Write the original number in decimal form. The numbers are written similar to the long division algorithm, and, as in long division, the root will be written on the line above. Now separate the digits into pairs, starting from the decimal point and going both left and right. The decimal point of the root will be above the decimal point of the square. One digit of the root will appear above each pair of digits of the square.

Beginning with the left-most pair of digits, do the following procedure for each pair:

- Starting on the left, bring down the most significant (leftmost) pair of digits not yet used (if all the digits have been used, write "00") and write them to the right of the remainder from the previous step (on the first step, there will be no remainder). In other words, multiply the remainder by 100 and add the two digits. This will be the current value c.

- Find p, y and x, as follows:

- Let p be the part of the root found so far, ignoring any decimal point. (For the first step, p = 0).

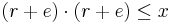

- Determine the greatest digit x such that

does not exceed c.

does not exceed c.

- Note: 20p + x is simply twice p, with the digit x appended to the right).

- Note: You can find x by guessing what c/(20·p) is and doing a trial calculation of y, then adjusting x upward or downward as necessary.

- Place the digit

as the next digit of the root, i.e., above the two digits of the square you just brought down. Thus the next p will be the old p times 10 plus x.

as the next digit of the root, i.e., above the two digits of the square you just brought down. Thus the next p will be the old p times 10 plus x.

- Subtract y from c to form a new remainder.

- If the remainder is zero and there are no more digits to bring down, then the algorithm has terminated. Otherwise go back to step 1 for another iteration.

Examples

Find the square root of 152.2756.

1 2. 3 4

/

\/ 01 52.27 56

01 1*1 <= 1 < 2*2 x = 1

01 y = x*x = 1*1 = 1

00 52 22*2 <= 52 < 23*3 x = 2

00 44 y = (20+x)*x = 22*2 = 44

08 27 243*3 <= 827 < 244*4 x = 3

07 29 y = (240+x)*x = 243*3 = 729

98 56 2464*4 <= 9856 < 2465*5 x = 4

98 56 y = (2460+x)*x = 2464*4 = 9856

00 00 Algorithm terminates: Answer is 12.34

Find the square root of 2.

1. 4 1 4 2

/

\/ 02.00 00 00 00

02 1*1 <= 2 < 2*2 x = 1

01 y = x*x = 1*1 = 1

01 00 24*4 <= 100 < 25*5 x = 4

00 96 y = (20+x)*x = 24*4 = 96

04 00 281*1 <= 400 < 282*2 x = 1

02 81 y = (280+x)*x = 281*1 = 281

01 19 00 2824*4 <= 11900 < 2825*5 x = 4

01 12 96 y = (2820+x)*x = 2824*4 = 11296

06 04 00 28282*2 <= 60400 < 28283*3 x = 2

The desired precision is achieved:

The square root of 2 is about 1.4142

Binary numeral system (base 2)

Inherent to digit-by-digit algorithms is a search and test step: find a digit,  , when added to the right of a current solution

, when added to the right of a current solution  , such that

, such that  , where

, where  is the value for which a root is desired. Expanding:

is the value for which a root is desired. Expanding:  . The current value of

. The current value of  —or, usually, the remainder—can be incrementally updated efficiently when working in binary, as the value of

—or, usually, the remainder—can be incrementally updated efficiently when working in binary, as the value of  will be a single bit, and the operations needed to compute

will be a single bit, and the operations needed to compute  and

and  can be replaced with faster bit shift operations. This gives rise to simple computer implementations:[3]

can be replaced with faster bit shift operations. This gives rise to simple computer implementations:[3]

short isqrt(short num) { short res = 0; short bit = 1 << 14; // The second-to-top bit is set: 1L<<30 for long // "bit" starts at the highest power of four <= the argument. while (bit > num) bit >>= 2; while (bit != 0) { if (num >= res + bit) { num -= res + bit; res = (res >> 1) + bit; } else res >>= 1; bit >>= 2; } return res; }

Faster algorithms, in binary and decimal or any other base, can be realized by using lookup tables—in effect trading more storage space for reduced run time.[4]

Vedic duplex method for extracting a square root

The Vedic duplex method is an ancient Indian method of extracting the square root. It is a variant of the digit by digit method for calculating the square root of a whole or decimal number one digit at a time.[5] The duplex is the square of the central digit plus double the cross-product of digits equidistant from the center. The duplex is computed from the quotient digits (square root digits) computed thus far, but after the initial digits. The duplex is subtracted from the dividend digit prior to the second subtraction for the product of the quotient digit times the divisor digit. For perfect squares the duplex and the dividend will get smaller and reach zero after a few steps. For non-perfect squares the decimal value of the square root can be calculated to any precision desired. However, as the decimal places proliferate, the duplex adjustment gets larger and longer to calculate. The duplex method follows the Vedic ideal for an algorithm, one-line, mental calculation. It is flexible in choosing the first digit group and the divisor. Small divisors are to be avoided by starting with a larger initial group.

In short, to calculate the duplex of a number, double the product of each pair of equidistant digits plus the square of the center digit (of the digits to the right of the colon).

Number => Calculation = Duplex 574 ==> 2(5·4) + 72 = 89 406,739 ==> 2(4·9)+ 2(0·3)+ 2(6·7) = 72+0+84 = 156 123,456 ==> 2(1·6)+ 2(2·5)+ 2(3·4) = 12 +20 +24 = 56 88,900,777 ==> 2(8·7)+2(8·7)+2(9·7)+2(0·0)+2(0·0) = 112+112+126+0+0 = 350 48329,03711 ==> 2(4·1)+2(8·1)+2(3·7)+2(2·3)+2(9·0)= 8+16+42+12+0 = 78

In a square root calculation the quotient digit set increases incrementally for each step.

Number => Calculation = Duplex: 1 ==> 12 = 1 14 ==>2(1·4) = 8 142 ==> 2(1·2) + 42 = 4 + 16 = 20 14,21 ==> 2(1·1) + 2(4·2) = 2 + 16 = 18 14213 ==> 6+8+4 = 18 142,135 ==> 10+24+4 = 38 1421356 ==> 12+40+12+1 = 65 1421,3562 ==> 4+48+20+6 = 78 142,135,623 ==> 6+16+24+10+9 = 65 142,1356,237 ==> 14+24+8+12+30 = 88 142,13562,373 ==> 6+56+12+4+36+25 = 139

Example 1, by discussion

Consider the perfect square 2809 = 532. Use the duplex method to find the square root of 2,809.

- Set down the number in groups of two digits.

- Define a divisor, a dividend and a quotient to find the root.

- Given 2809. Consider the first group, 28.

- Find the nearest perfect square below that group.

- The root of that perfect square is the first digit of our root.

- Since 28 > 25 and 25 = 52, take 5 as the first digit in the square root.

- For the divisor take double this first digit (2 · 5), which is 10.

- Next, set up a division framework with a colon.

- 28: 0 9 is the dividend and 5: is the quotient.

- Put a colon to the right of 28 and 5 and keep the colons lined up vertically. The duplex is calculated only on quotient digits to the right of the colon.

- Calculate the remainder. 28: minus 25: is 3:.

- Append the remainder on the left of the next digit to get the new dividend.

- Here, append 3 to the next dividend digit 0, which makes the new dividend 30. The divisor 10 goes into 30 just 3 times. (No reserve needed here for subsequent deductions.)

- Repeat the operation.

- The zero remainder appended to 9. Nine is the next dividend.

- This provides a digit to the right of the colon so deduct the duplex, 32 = 9.

- Subtracting this duplex from the dividend 9, a zero remainder results.

- Ten into zero is zero. The next root digit is zero. The next duplex is 2(3·0) = 0.

- The dividend is zero. This is an exact square root, 53.

Example 1, analysis and square root framework

Find the square root of 2809.

Set down the number in groups of two digits.

The number of groups gives the number of whole digits in the root.

Put a colon after the first group, 28, to separate it.

From the first group, 28, obtain the divisor, 10, since

28>25=52 and by doubling this first root, 2x5=10.

Gross dividend: 28: 0 9. Using mental math:

Divisor: 10) 3 0 Square: 10) 28: 30 9

Duplex, Deduction: 25: xx 09 Square root: 5: 3. 0

Dividend: 30 00

Remainder: 3: 00 00

Square Root, Quotient: 5: 3. 0

Example 2

Find the square root of 2,080,180,881. Solution by the duplex method: this ten-digit square has five digit-pairs, so it will have a five-digit square root. The first digit-pair is 20. Put the colon to the right. The nearest square below 20 is 16, whose root is 4, the first root digit. So, use 2·4=8 for the divisor. Now proceed with the duplex division, one digit column at a time. Prefix the remainder to the next dividend digit.

divisor; gross dividend: 8) 20: 8 0 1 8 0 8 8 1

read the dividend diagonally up: 4 8 7 11 10 10 0 8

minus the duplex: 16: xx 25 60 36 90 108 00 81

actual dividend: : 48 55 11 82 10 00 08 00

minus the product: : 40 48 00 72 00 00 0 00

remainder: 4: 8 7 11 10 10 0 8 00

quotient: 4: 5, 6 0 9. 0 0 0 0

Duplex calculations: Quotient-digits ==> Duplex deduction. 5 ==> 52= 25 5 and 6 ==> 2(5·6) = 60 5,6,0 ==> 2(5·0)+62 = 36 5,6,0,9 ==> 2(5·9)+2(6·0) = 90 5,6,0,9,0 ==> 2(5·0)+2(6·9)+ 0 = 108 5,6,0,9,0,0 ==> 2(5·0)+2(6·0)+2(0·9) = 0 5,6,0,9,0,0,0 ==> 2(5·0)+2(6·0)+2(0·0)+92 = 81

Hence the square root of 2,080,180,881 is exactly 45,609.

Example 3

Find the square root of two to ten places. Take 20,000 as the beginning group, using three digit-pairs at the start. The perfect square just below 20,000 is 141, since 1412 = 19881 < 20,000. So, the first root digits are 141 and the divisor doubled, 2 x 141 = 282. With a larger divisor the duplex will be relatively small. Hence, the multiple of the divisor can be picked without confusion.

Dividend: 2.0000 : 0 0 0 0 0 0 0 0

Diagonal;Divisor: (282) : 1190 620 400 1020 1620 1820 750 1120

Minus duplex: : xxxx 16 16 12 28 53 74 59

Actual dividend: 20000 : 1190 604 384 1008 1592 1767 676 1061

Minus product: 19881 : 1128 564 282 846 1410 1692 564 846

Remainder: 119 : 62 40 102 162 182 75 112 215

Root quotient: 1.41 : 4 2 1 3 5 6 2 3

Ten multiples of 282: 282; 564; 846; 1128; 1410; 1692; 1974; 2256; 2538; 2820.

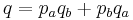

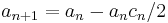

A two-variable iterative method

This method is applicable for finding the square root of  and converges best for

and converges best for  . This, however, is no real limitation for a computer based calculation, as in base 2 floating point and fixed point representations, it is trivial to multiply

. This, however, is no real limitation for a computer based calculation, as in base 2 floating point and fixed point representations, it is trivial to multiply  by an integer power of 4, and therefore

by an integer power of 4, and therefore  by the corresponding power of 2, by changing the exponent or by shifting, respectively. Therefore,

by the corresponding power of 2, by changing the exponent or by shifting, respectively. Therefore,  can be moved to the range

can be moved to the range  . Moreover, the following method does not employ general divisions, but only additions, subtractions, multiplications, and divisions by powers of two, which are again trivial to implement. A disadvantage of the method is that numerical errors accumulate, in contrast to single variable iterative methods such as the Babylonian one.

. Moreover, the following method does not employ general divisions, but only additions, subtractions, multiplications, and divisions by powers of two, which are again trivial to implement. A disadvantage of the method is that numerical errors accumulate, in contrast to single variable iterative methods such as the Babylonian one.

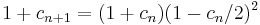

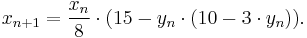

The initialization step of this method is

while the iterative steps read

Then,  (while

(while  ).

).

Note that the convergence of  , and therefore also of

, and therefore also of  , is quadratic.

, is quadratic.

The proof of the method is rather easy. First, rewrite the iterative definition of  as

as

.

.

Then it is straightforward to prove by induction that

and therefore the convergence of  to the desired result

to the desired result  is ensured by the convergence of

is ensured by the convergence of  to 0, which in turn follows from

to 0, which in turn follows from  .

.

This method was developed around 1950 by M. V. Wilkes, D. J. Wheeler and S. Gill[6] for use on EDSAC, one of the first electronic computers.[7] The method was later generalized, allowing the computation of non-square roots.[8]

Iterative methods for reciprocal square roots

The following are iterative methods for finding the reciprocal square root of S which is  . Once it has been found, find

. Once it has been found, find  by simple multiplication:

by simple multiplication:  . These iterations involve only multiplication, and not division. They are therefore faster than the Babylonian method. However, they are not stable. If the initial value is not close to the reciprocal square root, the iterations will diverge away from it rather than converge to it. It can therefore be advantageous to perform an iteration of the Babylonian method on a rough estimate before starting to apply these methods.

. These iterations involve only multiplication, and not division. They are therefore faster than the Babylonian method. However, they are not stable. If the initial value is not close to the reciprocal square root, the iterations will diverge away from it rather than converge to it. It can therefore be advantageous to perform an iteration of the Babylonian method on a rough estimate before starting to apply these methods.

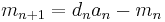

- One method is found by applying Newton's method to the equation

. It converges quadratically:

. It converges quadratically:

- Another iteration obtained by Halley's method, which is the Householder's method of order two, converges cubically, but involves more operations per iteration:

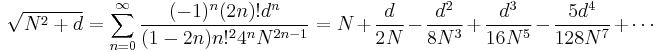

Taylor series

If N is an approximation to  , a better approximation can be found by using the Taylor series of the square root function:

, a better approximation can be found by using the Taylor series of the square root function:

As an iterative method, the order of convergence is equal to the number of terms used. With 2 terms, it is identical to the Babylonian method; With 3 terms, each iteration takes almost as many operations as the Bakhshali approximation, but converges more slowly. Therefore, this is not a particularly efficient way of calculation.

Other methods

Finding  is the same as solving the equation

is the same as solving the equation  . Therefore, any general numerical root-finding algorithm can be used. Newton's method, for example, reduces in this case to the Babylonian method. Other methods are less efficient than the ones presented above.

. Therefore, any general numerical root-finding algorithm can be used. Newton's method, for example, reduces in this case to the Babylonian method. Other methods are less efficient than the ones presented above.

A completely different method for computing the square root is based on the CORDIC algorithm, which uses only very simple operations (addition, subtraction, bitshift and table lookup, but no multiplication).

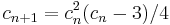

Continued fraction expansion

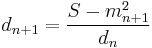

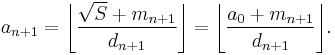

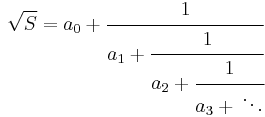

Quadratic irrationals (numbers of the form  , where a, b and c are integers), and in particular, square roots of integers, have periodic continued fractions. Sometimes what is desired is finding not the numerical value of a square root, but rather its continued fraction expansion. The following iterative algorithm can be used for this purpose (S is any natural number that is not a perfect square):

, where a, b and c are integers), and in particular, square roots of integers, have periodic continued fractions. Sometimes what is desired is finding not the numerical value of a square root, but rather its continued fraction expansion. The following iterative algorithm can be used for this purpose (S is any natural number that is not a perfect square):

Notice that mn, dn, and an are always integers. The algorithm terminates when this triplet is the same as one encountered before. The expansion will repeat from then on. The sequence [a0; a1, a2, a3, …] is the continued fraction expansion:

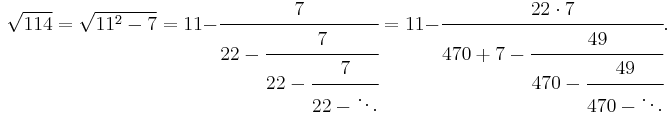

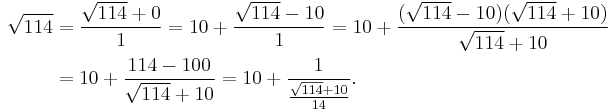

Example, square root of 114 as a continued fraction

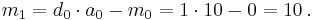

Begin with m0 = 0; d0 = 1; and a0 = 10 (102 = 100 and 112 = 121 > 114 so 10 chosen).

So, m1 = 10; d1 = 14; and a1 = 1.

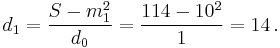

Next, m2 = 4; d2 = 7; and a2 = 2.

Now, loop back to the second equation above.

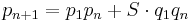

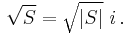

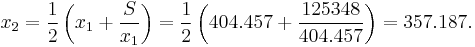

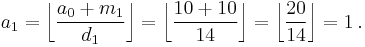

Consequently, the simple continued fraction for the square root of 114 is

Its actual value is approximately 10.67707 82520 31311 21....

Generalized continued fraction

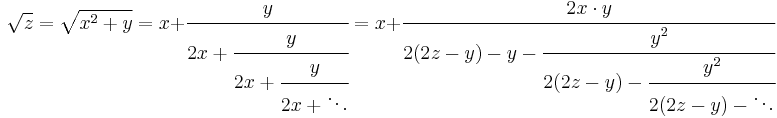

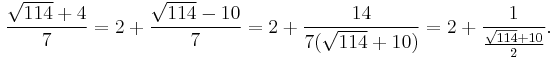

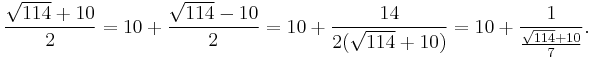

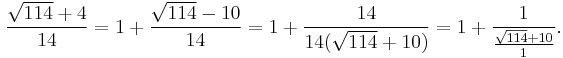

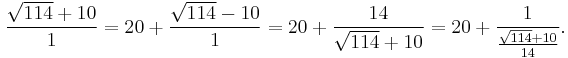

A more rapid method is to evaluate its generalized continued fraction. From the formula derived there:

the square root of 114 is quickly found:

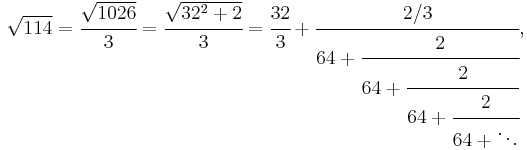

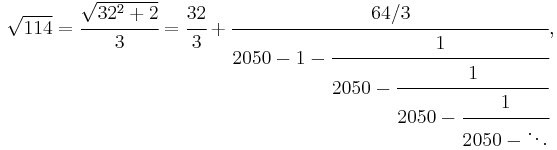

Furthermore, the fact that 114 is 2/3 of the way between 102=100 and 112=121 results in

which is simply the aforementioned [10;1,2, 10,2,1, 20,1,2, 10,2,1, 20,1,2, ...] evaluated at every third term. Combining pairs of fractions produces

which is now [10;1,2, 10,2,1,20,1,2, 10,2,1,20,1,2, ...] evaluated at the third term and every six terms thereafter.

Pell's equation

Pell's equation and its variants yield a method for efficiently finding continued fraction convergents of square roots of integers. However, it can be complicated to execute, and usually not every convergent is generated. The ideas behind the method are as follows:

- If (p, q) is a solution (where p and q are integers) to the equation

, then

, then  is a continued fraction convergent of

is a continued fraction convergent of  , and as such, is an excellent rational approximation to it.

, and as such, is an excellent rational approximation to it. - If (pa, qa) and (pb, qb) are solutions, then so is:

-

- (compare to the multiplication of quadratic integers)

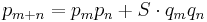

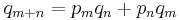

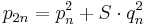

- More generally, if (p1, q1) is a solution, then it is possible to generate a sequence of solutions (pn, qn) satisfying:

The method is as follows:

- Find positive integers p1 and q1 such that

. This is the hard part; It can be done either by guessing, or by using fairly sophisticated techniques.

. This is the hard part; It can be done either by guessing, or by using fairly sophisticated techniques.

-

- To generate a long list of convergents, iterate:

- To find the larger convergents quickly, iterate:

-

- Notice that the corresponding sequence of fractions coincides with the one given by the Hero's method starting with

.

.

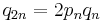

- In either case,

is a rational approximation satisfying

is a rational approximation satisfying

Approximations that depend on IEEE representation

On computers, a very rapid Newton's-method-based approximation to the square root can be obtained for floating point numbers when computers use an IEEE (or sufficiently similar) representation.

This technique is based on the fact that the IEEE floating point format approximates base-2 logarithm. For example, you can get the approximate logarithm of 32-bit single precision floating point number by translating its binary representation as an integer, scaling it by  , and removing a bias of 127, i.e.

, and removing a bias of 127, i.e.

For example, 1.0 is represented by a hexadecimal number 0x3F800000, which would represent  if taken as an integer. Using the formula above you get

if taken as an integer. Using the formula above you get  , as expected from

, as expected from  . In a similar fashion you get 0.5 from 1.5 (0x3FC00000).

. In a similar fashion you get 0.5 from 1.5 (0x3FC00000).

To get the square root, divide the logarithm by 2 and convert the value back. The following program demonstrates the idea. Note that the exponent's lowest bit is intentionally allowed to propagate into the mantissa. One way to justify the steps in this program is to assume  is the exponent bias and

is the exponent bias and  is the number of explicitly stored bits in the mantissa and then show that

is the number of explicitly stored bits in the mantissa and then show that

float sqrt_approx(float z) { union { int tmp; float f; } u; u.f = z; /* * To justify the following code, prove that * * ((((val_int / 2^m) - b) / 2) + b) * 2^m = ((val_int - 2^m) / 2) + ((b + 1) / 2) * 2^m) * * where * * val_int = u.tmp * b = exponent bias * m = number of mantissa bits * * . */ u.tmp -= 1 << 23; /* Subtract 2^m. */ u.tmp >>= 1; /* Divide by 2. */ u.tmp += 1 << 29; /* Add ((b + 1) / 2) * 2^m. */ return u.f; }

The three mathematical operations forming the core of the above function can be expressed in a single line. An additional adjustment can be added to reduce the maximum relative error. So, the three operations, not including the cast, can be rewritten as

u.tmp = (1 << 29) + (u.tmp >> 1) - (1 << 22) + a;

where a is a bias for adjusting the approximation errors. For example, with a = 0 the results are accurate for even powers of 2 (e.g., 1.0), but for other numbers the results will be slightly too big (e.g.,1.5 for 2.0 instead of 1.414... with 6% error). With m = -0x4C000, the errors are between about -3.5% and 3.5%.

If the approximation is to be used for an initial guess for Newton's method to the equation  , then the reciprocal form shown in the following section is preferred.

, then the reciprocal form shown in the following section is preferred.

Reciprocal of the square root

A variant of the above routine is included below, which can be used to compute the reciprocal of the square root, i.e.,  instead, was written by Greg Walsh, and implemented into SGI Indigo by Gary Tarolli.[9][10] The integer-shift approximation produced a relative error of less than 4%, and the error dropped further to 0.15% with one iteration of Newton's method on the following line.[11] In computer graphics it is a very efficient way to normalize a vector.

instead, was written by Greg Walsh, and implemented into SGI Indigo by Gary Tarolli.[9][10] The integer-shift approximation produced a relative error of less than 4%, and the error dropped further to 0.15% with one iteration of Newton's method on the following line.[11] In computer graphics it is a very efficient way to normalize a vector.

float invSqrt(float x) { float xhalf = 0.5f*x; union { float x; int i; } u; u.x = x; u.i = 0x5f3759df - (u.i >> 1); x = u.x * (1.5f - xhalf * u.x * u.x); return x; }

Some VLSI hardware implements inverse square root using a second degree polynomial estimation followed by a Goldschmidt iteration (see division (digital)).[12]

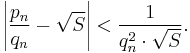

Negative or complex square

If S < 0, then its principal square root is

If S = a+bi where a and b are real and b ≠ 0, then its principal square root is

This can be verified by squaring the root.[13][14] Here

is the modulus of S. The principal square root of a complex number is defined to be the root with the non-negative real part.

See also

- Alpha max plus beta min algorithm

- Integer square root

- Mental calculation

- N-th root algorithm

- Shifting nth-root algorithm

- Square root of 2

Notes

- ^ There is no direct evidence showing how the Babylonians computed square roots, although there are informed conjectures. (Square root of 2#Notes gives a summary and references.)

- ^ Heath, Thomas (1921). A History of Greek Mathematics, Vol. 2. Oxford: Clarendon Press. pp. 323–324. http://books.google.com/books?id=LOA5AAAAMAAJ&pg=PR323.

- ^ Fast integer square root by Mr. Woo's abacus algorithm

- ^ Integer Square Root function

- ^ Vedic Mathematics: Sixteen Simple Mathematical Formulae from the Vedas, by Swami Sankaracarya (1880-1960), Motilal Banarsidass Indological Publishers and Booksellers, Varanasi, India, 1965; reprinted in Delhi, India, 1975, 1978. 367 pages.

- ^ M. V. Wilkes, D. J. Wheeler and S. Gill, "The Preparation of Programs for an Electronic Digital Computer", Addison-Wesley, 1951.

- ^ M. Campbell-Kelly, "Origin of Computing", Scientific American, September 2009.

- ^ J. C. Gower, "A Note on an Iterative Method for Root Extraction", The Computer Journal 1(3):142–143, 1958.

- ^ Rys (2006-11-29). "Origin of Quake3's Fast InvSqrt()". Beyond3D. http://www.beyond3d.com/content/articles/8/. Retrieved 2008-04-19.

- ^ Rys (2006-12-19). "Origin of Quake3's Fast InvSqrt() - Part Two". Beyond3D. http://www.beyond3d.com/content/articles/15/. Retrieved 2008-04-19.

- ^ Fast Inverse Square Root by Chris Lomont

- ^ "High-Speed Double-Precision Computation of Reciprocal, Division, Square Root and Inverse Square Root" by José-Alejandro Piñeiro and Javier Díaz Bruguera 2002 (abstract)

- ^ Abramowitz, Miltonn; Stegun, Irene A. (1964). Handbook of mathematical functions with formulas, graphs, and mathematical tables. Courier Dover Publications. p. 17. ISBN 0-486-61272-4. http://books.google.com/books?id=MtU8uP7XMvoC., Section 3.7.26, p. 17

- ^ Cooke, Roger (2008). Classical algebra: its nature, origins, and uses. John Wiley and Sons. p. 59. ISBN 0-470-25952-3. http://books.google.com/books?id=lUcTsYopfhkC., Extract: page 59

External links

- Weisstein, Eric W., "Square root algorithms" from MathWorld.

- C++ Source for several fast square root functions with analysis

- Square roots by subtraction

- Integer Square Root Algorithm by Andrija Radović

- Personal Calculator Algorithms I : Square Roots (William E. Egbert), Hewlett-Packard Journal (may 1977) : page 22

![\sqrt{114} = [10;1,2,10,2,1,20,1,2,10,2,1,20,1,2,10,2,1,20,\dots].\,](/2012-wikipedia_en_all_nopic_01_2012/I/8b51eb6ebcb2fecf993f83c47dd96556.png)