Metaheuristic

In computer science, a metaheuristic is a computational method that optimizes a problem by iteratively trying to improve a candidate solution with regard to a given measure of quality. Metaheuristics make few or no assumptions about the problem being optimized and can search very large spaces of candidate solutions. However, they do not guarantee that an optimal solution is ever found. Many metaheuristics implement some form of stochastic optimization.

Other terms with a similar meaning include derivative-free, direct search, black-box, or simply heuristic optimizer. Several books and survey papers have been published on the subject.[1][2][3][4][5][6][7]
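The iterative improvement of a candidate solution described in the definition can be illustrated with a minimal sketch. It assumes a minimization problem and treats the quality measure as a black box; the function names, the hidden bit-string target and the one-bit-flip neighbourhood are hypothetical choices made only for this illustration and do not correspond to any particular published method.

```python
import random

def stochastic_hill_climb(quality, initial, neighbor, iterations=1000, seed=0):
    """Repeatedly try a random modification and keep it if it is no worse.

    Only evaluations of `quality` are used (lower is better); nothing else
    about the problem is assumed.
    """
    rng = random.Random(seed)
    best = initial
    best_q = quality(best)
    for _ in range(iterations):
        candidate = neighbor(best, rng)
        q = quality(candidate)
        if q <= best_q:  # accept improvements and sideways moves
            best, best_q = candidate, q
    return best, best_q

# Hypothetical black-box problem: count the bits that differ from a hidden target.
TARGET = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 0, 1]

def mismatches(bits):
    return sum(b != t for b, t in zip(bits, TARGET))

def flip_one_bit(bits, rng):
    i = rng.randrange(len(bits))
    return bits[:i] + [1 - bits[i]] + bits[i + 1:]

solution, score = stochastic_hill_climb(mismatches, [0] * len(TARGET), flip_one_bit)
print(solution, score)
```

The loop never accepts a worse candidate, so the returned score cannot exceed that of the starting point, but nothing in the sketch guarantees that the optimum is reached on harder problems.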


Applications

Metaheuristics are used for combinatorial optimization in which an optimal solution is sought over a discrete search-space. An example problem is the travelling salesman problem where the search-space of candidate solutions grows more than exponentially as the size of the problem increases, which makes an exhaustive search for the optimal solution infeasible. Additionally, multidimensional combinatorial problems, including most design problems in engineering such as form-finding and behavior-finding, suffer from the curse of dimensionality, which also makes them infeasible for exhaustive search or analytical methods. Popular metaheuristics for combinatorial problems include simulated annealing by Kirkpatrick et al.,[8] genetic algorithms by Holland et al.,[9] ant colony optimization by Dorigo,[10] scatter search[11] and tabu search[12] by Glover.
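As an illustration of a metaheuristic on a combinatorial problem, the following is a minimal sketch of simulated annealing applied to a small travelling salesman instance. The segment-reversal move, the geometric cooling schedule, the parameter values and the randomly generated cities are assumptions chosen for brevity; they are not taken from Kirkpatrick et al.[8]

```python
import math
import random

def tour_length(tour, cities):
    """Length of the closed tour that visits the cities in the given order."""
    n = len(tour)
    return sum(math.dist(cities[tour[i]], cities[tour[(i + 1) % n]]) for i in range(n))

def anneal_tsp(cities, steps=20000, t_start=1.0, t_end=1e-3, seed=0):
    rng = random.Random(seed)
    n = len(cities)
    current = list(range(n))
    current_len = tour_length(current, cities)
    best, best_len = current[:], current_len
    for step in range(steps):
        # Geometric cooling from t_start towards t_end (an assumed schedule).
        t = t_start * (t_end / t_start) ** (step / steps)
        # Candidate move: reverse a randomly chosen segment of the tour.
        i, j = sorted(rng.sample(range(n), 2))
        candidate = current[:i] + current[i:j + 1][::-1] + current[j + 1:]
        cand_len = tour_length(candidate, cities)
        delta = cand_len - current_len
        # Always accept improvements; accept worse tours with probability exp(-delta / t).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current, current_len = candidate, cand_len
            if current_len < best_len:
                best, best_len = current[:], current_len
    return best, best_len

# Hypothetical instance: 12 cities placed uniformly at random in the unit square.
city_rng = random.Random(1)
cities = [(city_rng.random(), city_rng.random()) for _ in range(12)]
tour, length = anneal_tsp(cities)
print(tour, round(length, 3))
```

The sketch examines 20,000 candidate tours, whereas an exhaustive search over 12 cities would have to consider 11!/2 (about 20 million) distinct tours, and that number grows factorially with the number of cities.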

Metaheuristics are also used for problems over real-valued search-spaces, where the classic way of optimization is to derive the gradient of the function to be optimized and then employ gradient descent or a quasi-Newton method. Metaheuristics use neither the gradient nor the Hessian matrix, so the function to be optimized need not be continuous or differentiable, and it may also be subject to constraints. Popular metaheuristic optimizers for real-valued search-spaces include particle swarm optimization by Eberhart and Kennedy,[13] differential evolution by Storn and Price[14] and evolution strategies by Rechenberg[15] and Schwefel.[16]
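A gradient-free optimizer of this kind can be sketched as follows. The code is a simplified differential evolution loop in the spirit of Storn and Price,[14] written under several assumptions: the population is updated in place, trial points are clipped to box constraints, and the parameter values and the test function are arbitrary illustrative choices. The test function is deliberately discontinuous and non-differentiable, so gradient descent would not be applicable to it.

```python
import math
import random

def differential_evolution(f, bounds, pop_size=20, weight=0.8, cr=0.9,
                           generations=200, seed=0):
    """Minimize f over a box using only function evaluations."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [f(x) for x in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct population members other than i.
            a, b, c = rng.sample([k for k in range(pop_size) if k != i], 3)
            forced = rng.randrange(dim)  # at least one coordinate is always mutated
            trial = []
            for d in range(dim):
                if d == forced or rng.random() < cr:
                    v = pop[a][d] + weight * (pop[b][d] - pop[c][d])
                    lo, hi = bounds[d]
                    v = min(max(v, lo), hi)  # keep the trial inside the box
                else:
                    v = pop[i][d]
                trial.append(v)
            s = f(trial)
            if s <= scores[i]:  # greedy replacement (in place, an assumed variant)
                pop[i], scores[i] = trial, s
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]

def objective(x):
    # Hypothetical test function: kinks at 0 and jumps at every integer.
    return sum(abs(v) + math.floor(abs(v)) for v in x)

bounds = [(-5.0, 5.0)] * 3
x_best, f_best = differential_evolution(objective, bounds)
print(x_best, f_best)
```

The minimum of this test function is 0 at the origin. The sketch only ever compares function values, which is why neither continuity nor differentiability is required.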

Metaheuristics based on decomposition techniques have also been proposed for tackling hard combinatorial problems of large size.[17]

Main contributions

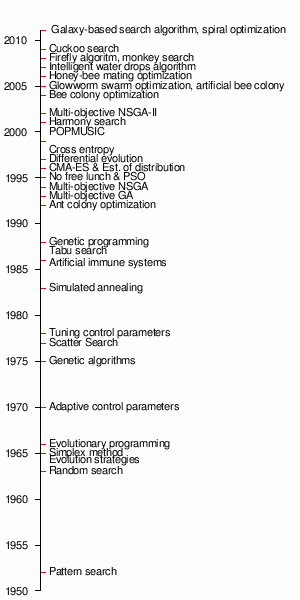

Timeline of main contributions.

Many different metaheuristics exist and new variants are continually being proposed. Some of the most significant contributions to the field are:

- 1951: Robbins and Monro work on stochastic optimization methods.[18]

- 1952: Fermi and Metropolis develop an early form of pattern search as described belatedly by Davidon.[19]

- 1954: Barricelli carries out the first simulations of the evolution process and uses them on general optimization problems.[20]

- 1963: Rastrigin proposes random search.[21]

- 1965: Matyas proposes random optimization.[22]

- 1965: Rechenberg proposes evolution strategies.[23]

- 1965: Nelder and Mead propose a simplex heuristic, which was shown by Powell to converge to non-stationary points on some problems.[24]

- 1966: Fogel et al. propose evolutionary programming.[25]

- 1970: Hastings proposes the Metropolis-Hastings algorithm.[26]

- 1970: Cavicchio proposes adaptation of control parameters for an optimizer.[27]

- 1970: Kernighan and Lin propose a graph partitioning method, related to variable-depth search and prohibition-based (tabu) search.[28]

- 1975: Holland proposes the genetic algorithm.[9]

- 1977: Glover proposes scatter search.[11]

- 1978: Mercer and Sampson propose a metaplan for tuning an optimizer's parameters by using another optimizer.[29]

- 1980: Smith describes genetic programming.[30]

- 1983: Kirkpatrick et al. propose simulated annealing.[8]

- 1986: Glover proposes tabu search; this is the first mention of the term metaheuristic.[12]

- 1986: Farmer et al. work on the artificial immune system.[31]

- 1986: Grefenstette proposes another early form of metaplan for tuning an optimizer's parameters by using another optimizer.[32]

- 1988: First conference on genetic algorithms is organized at the University of Illinois at Urbana-Champaign.

- 1988: Koza registers his first patent on genetic programming.[33][34]

- 1989: Goldberg publishes a well known book on genetic algorithms.[1]

- 1989: Evolver, the first optimization software using the genetic algorithm, is released.

- 1989: Moscato proposes the memetic algorithm.[35]

- 1991: Interactive evolutionary computation.

- 1992: Dorigo proposes the ant colony algorithm.[10]

- 1993: The Massachusetts Institute of Technology begins publishing the journal Evolutionary Computation.

- 1993: Fonseca and Fleming propose MOGA for multiobjective optimization.[36]

- 1994: Battiti and Tecchiolli propose Reactive Search Optimization (RSO) principles for the online self-tuning of heuristics.[37][38]

- 1994: Srinivas and Deb propose NSGA for multiobjective optimization.[39]

- 1995: Kennedy and Eberhart propose particle swarm optimization.[13]

- 1995: Wolpert and Macready prove the no free lunch theorems.[40][41]

- 1996: Mühlenbein and Paaß work on the estimation of distribution algorithm.[42]

- 1996: Hansen and Ostermeier propose CMA-ES.[43]

- 1997: Storn and Price propose differential evolution.[14]

- 1997: Rubinstein proposes the cross entropy method.[44]

- 1999: Taillard and Voss propose POPMUSIC.[17]

- 2001: Geem et al. propose harmony search.[45]

- 2002: Deb et al. propose NSGA-II for multiobjective optimization.[46]

- 2004: Nakrani and Tovey propose bee colony optimization.[47]

- 2005: Krishnanand and Ghose propose Glowworm swarm optimization.[48][49][50]

- 2005: Karaboga proposes Artificial Bee Colony Algorithm (ABC).[51]

- 2006: Haddad et al. introduce honey-bee mating optimization.[52]

- 2007: Shah-Hosseini introduces the intelligent water drops algorithm.

- 2008: Wierstra et al. propose natural evolution strategies based on the natural gradient.[53]

- 2008: Yang introduces the firefly algorithm.[54]

- 2008: Mucherino and Seref propose monkey search.

- 2009: Yang and Deb introduce cuckoo search.[55]

- 2011: Hamed Shah-Hosseini proposes the Galaxy-based Search Algorithm.[56]

- 2011: Tamura and Yasuda propose spiral optimization.[57][58]

Criticism

Mathematical analyses of metaheuristics have been presented in the literature; see, for example, Holland's schema theorem[9] for the genetic algorithm, Rechenberg's work[15] on evolution strategies, the work by Trelea,[59] amongst others, on particle swarm optimization, and Zaharie[60] on differential evolution. These analyses make a number of assumptions about the optimizer variants and the simplicity of the optimization problems, which limits their validity in real-world optimization scenarios. Performance and convergence aspects of metaheuristic optimizers are therefore often demonstrated empirically in the research literature. This practice has been criticized on the basis of the no free lunch theorems by Wolpert and Macready,[41] which, among other things, prove that all optimizers perform equally well when averaged over all problems. The practical relevance of the no free lunch theorems is minor, however, because their conclusion generally does not hold for the collection of problems that a practitioner actually faces.
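The averaging statement of the no free lunch theorems can be checked by brute force on a toy search space. The sketch below is an illustration only, not the construction used by Wolpert and Macready:[41] it assumes three search points, objective values in {0, 1}, minimization, and two hypothetical non-revisiting search strategies, one of which adapts its next choice to the values seen so far. Performance is averaged over all eight possible objective functions.

```python
from itertools import product

POINTS = (0, 1, 2)   # tiny search space
VALUES = (0, 1)      # possible objective values (lower is better)

def sweep(history):
    """Non-adaptive strategy: visit the points in the fixed order 0, 1, 2."""
    visited = {p for p, _ in history}
    return next(p for p in POINTS if p not in visited)

def adaptive(history):
    """Adaptive strategy: start at 0, then choose the order from the first value."""
    if not history:
        return 0
    visited = {p for p, _ in history}
    order = (2, 1) if history[0][1] == 1 else (1, 2)
    return next(p for p in order if p not in visited)

def best_so_far(strategy, f, budget):
    """Best value found after `budget` evaluations of the objective f."""
    history = []
    for _ in range(budget):
        p = strategy(history)
        history.append((p, f[p]))
    return min(v for _, v in history)

def average_performance(strategy, budget):
    # Enumerate every function from POINTS to VALUES as a tuple of values.
    functions = list(product(VALUES, repeat=len(POINTS)))
    return sum(best_so_far(strategy, f, budget) for f in functions) / len(functions)

for budget in (1, 2, 3):
    print(budget, average_performance(sweep, budget), average_performance(adaptive, budget))
# prints:
# 1 0.5 0.5
# 2 0.25 0.25
# 3 0.125 0.125
```

Both strategies obtain identical averages for every evaluation budget. On any single objective function, or on a restricted family of functions, the two strategies can differ, which is the situation the practitioner is normally in.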

For the practitioner the most relevant issue is that metaheuristics are not guaranteed to find the optimum or even a satisfactory near-optimal solution. All metaheuristics will eventually encounter problems on which they perform poorly and the practitioner must gain experience in which optimizers work well on different classes of problems.

See also

Metaheuristic methods are, generally speaking, sub-fields of:

- Optimization algorithms

- Heuristic

- Stochastic search

Sub-fields of metaheuristics include:

- Local search

- Evolutionary computing, which includes, amongst others:

  - Evolutionary algorithms

  - Swarm intelligence


References

1. Goldberg, D.E. (1989). Genetic Algorithms in Search, Optimization and Machine Learning. Addison-Wesley. ISBN 0201157675.

2. Luke, S. (2009). Essentials of Metaheuristics. http://cs.gmu.edu/~sean/book/metaheuristics/.

3. Talbi, E-G. (2009). Metaheuristics: From Design to Implementation. Wiley. ISBN 0470278587.

4. Glover, F.; Kochenberger, G.A. (2003). Handbook of Metaheuristics. International Series in Operations Research & Management Science 57. Springer. ISBN 978-1-4020-7263-5.

5. Mucherino, A.; Seref, O. (2009). "Modeling and Solving Real Life Global Optimization Problems with Meta-Heuristic Methods". Advances in Modeling Agricultural Systems 25: 1–17. doi:10.1007/978-0-387-75181-8_19.

6. Blum, C.; Roli, A. (2003). "Metaheuristics in combinatorial optimization: Overview and conceptual comparison". ACM Computing Surveys 35: 268–308.

7. Battiti, R.; Brunato, M.; Mascia, F. (2008). Reactive Search and Intelligent Optimization. Springer Verlag. ISBN 978-0-387-09623-0.

8. Kirkpatrick, S.; Gelatt Jr., C.D.; Vecchi, M.P. (1983). "Optimization by Simulated Annealing". Science 220 (4598): 671–680. doi:10.1126/science.220.4598.671. PMID 17813860.

9. Holland, J.H. (1975). Adaptation in Natural and Artificial Systems. University of Michigan Press. ISBN 0262082136.

10. Dorigo, M. (1992). Optimization, Learning and Natural Algorithms (PhD thesis). Politecnico di Milano, Italy.

11. Glover, F. (1977). "Heuristics for Integer Programming Using Surrogate Constraints". Decision Sciences 8 (1): 156–166.

12. Glover, F. (1986). "Future Paths for Integer Programming and Links to Artificial Intelligence". Computers and Operations Research 13 (5): 533–549. doi:10.1016/0305-0548(86)90048-1.

13. Kennedy, J.; Eberhart, R. (1995). "Particle Swarm Optimization". Proceedings of IEEE International Conference on Neural Networks. IV. pp. 1942–1948.

14. Storn, R.; Price, K. (1997). "Differential evolution - a simple and efficient heuristic for global optimization over continuous spaces". Journal of Global Optimization 11 (4): 341–359. doi:10.1023/A:1008202821328.

15. Rechenberg, I. (1971). Evolutionsstrategie – Optimierung technischer Systeme nach Prinzipien der biologischen Evolution (PhD thesis). ISBN 3772803733.

16. Schwefel, H-P. (1974). Numerische Optimierung von Computer-Modellen (PhD thesis).

17. Taillard, E.; Voss, S. (1999). "POPMUSIC: Partial Optimization Metaheuristic Under Special Intensification Conditions". Technical Report (Institute for Computer Sciences, heig-vd, Yverdon). http://mistic.heig-vd.ch/taillard/articles.dir/popmusic.pdf.

18. Robbins, H.; Monro, S. (1951). "A Stochastic Approximation Method". Annals of Mathematical Statistics 22 (3): 400–407. doi:10.1214/aoms/1177729586.

19. Davidon, W.C. (1991). "Variable metric method for minimization". SIAM Journal on Optimization 1 (1): 1–17. doi:10.1137/0801001.

20. Barricelli, N.A. (1954). "Esempi numerici di processi di evoluzione". Methodos: 45–68.

21. Rastrigin, L.A. (1963). "The convergence of the random search method in the extremal control of a many parameter system". Automation and Remote Control 24 (10): 1337–1342.

22. Matyas, J. (1965). "Random optimization". Automation and Remote Control 26 (2): 246–253.

23. Rechenberg, I. (1965). Cybernetic Solution Path of an Experimental Problem. Royal Aircraft Establishment Library Translation.

24. Nelder, J.A.; Mead, R. (1965). "A simplex method for function minimization". Computer Journal 7: 308–313. doi:10.1093/comjnl/7.4.308.

25. Fogel, L.; Owens, A.J.; Walsh, M.J. (1966). Artificial Intelligence through Simulated Evolution. Wiley. ISBN 0471265160.

26. Hastings, W.K. (1970). "Monte Carlo Sampling Methods Using Markov Chains and Their Applications". Biometrika 57 (1): 97–109. doi:10.1093/biomet/57.1.97.

27. Cavicchio, D.J. (1970). "Adaptive search using simulated evolution". Technical Report (University of Michigan, Computer and Communication Sciences Department). hdl:2027.42/4042.

28. Kernighan, B.W.; Lin, S. (1970). "An efficient heuristic procedure for partitioning graphs". Bell System Technical Journal 49 (2): 291–307.

29. Mercer, R.E.; Sampson, J.R. (1978). "Adaptive search using a reproductive metaplan". Kybernetes (The International Journal of Systems and Cybernetics) 7 (3): 215–228. doi:10.1108/eb005486.

30. Smith, S.F. (1980). A Learning System Based on Genetic Adaptive Algorithms (PhD thesis). University of Pittsburgh.

31. Farmer, J.D.; Packard, N.; Perelson, A. (1986). "The immune system, adaptation and machine learning". Physica D 22 (1–3): 187–204. doi:10.1016/0167-2789(86)90240-X.

32. Grefenstette, J.J. (1986). "Optimization of control parameters for genetic algorithms". IEEE Transactions on Systems, Man, and Cybernetics 16 (1): 122–128. doi:10.1109/TSMC.1986.289288.

33. US patent 4935877.

34. Koza, J.R. (1992). Genetic Programming: On the Programming of Computers by Means of Natural Selection. MIT Press. ISBN 0262111705.

35. Moscato, P. (1989). "On Evolution, Search, Optimization, Genetic Algorithms and Martial Arts: Towards Memetic Algorithms". Technical Report C3P 826 (Caltech Concurrent Computation Program).

36. Fonseca, C.M.; Fleming, P.J. (1993). "Genetic Algorithms for Multiobjective Optimization: formulation, discussion and generalization". Proceedings of the 5th International Conference on Genetic Algorithms (Urbana-Champaign, IL, USA): 416–423.

37. Battiti, R.; Tecchiolli, G. (1994). "The reactive tabu search". ORSA Journal on Computing 6 (2): 126–140. http://rtm.science.unitn.it/~battiti/archive/TheReactiveTabuSearch.PDF.

38. Battiti, R.; Tecchiolli, G. (1995). "Training neural nets with the reactive tabu search". IEEE Transactions on Neural Networks 6 (5): 1185–1200. http://rtm.science.unitn.it/~battiti/archive/rts-nn.pdf.

39. Srinivas, N.; Deb, K. (1994). "Multiobjective Optimization Using Nondominated Sorting in Genetic Algorithms". Evolutionary Computation 2 (3): 221–248. doi:10.1162/evco.1994.2.3.221.

40. Wolpert, D.H.; Macready, W.G. (1995). "No free lunch theorems for search". Technical Report SFI-TR-95-02-010 (Santa Fe Institute).

41. Wolpert, D.H.; Macready, W.G. (1997). "No free lunch theorems for optimization". IEEE Transactions on Evolutionary Computation 1 (1): 67–82. doi:10.1109/4235.585893.

42. Mühlenbein, H.; Paaß, G. (1996). "From recombination of genes to the estimation of distribution I. Binary parameters". Lecture Notes in Computer Science: Parallel Problem Solving from Nature (PPSN IV) 1411: 178–187.

43. Hansen, N.; Ostermeier, A. (1996). "Adapting arbitrary normal mutation distributions in evolution strategies: The covariance matrix adaptation". Proceedings of the IEEE International Conference on Evolutionary Computation: 312–317.

44. Rubinstein, R.Y. (1997). "Optimization of Computer Simulation Models with Rare Events". European Journal of Operations Research 99 (1): 89–112. doi:10.1016/S0377-2217(96)00385-2.

45. Geem, Z.W.; Kim, J.H.; Loganathan, G.V. (2001). "A new heuristic optimization algorithm: harmony search". Simulation 76 (2): 60–68. doi:10.1177/003754970107600201.

46. Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. (2002). "A Fast and Elitist Multiobjective Genetic Algorithm: NSGA-II". IEEE Transactions on Evolutionary Computation 6 (2): 182–197. doi:10.1109/4235.996017.

47. Nakrani, S.; Tovey, S. (2004). "On honey bees and dynamic server allocation in Internet hosting centers". Adaptive Behavior 12.

48. Krishnanand, K.; Ghose, D. (2009). "Glowworm swarm optimization for simultaneous capture of multiple local optima of multimodal functions". Swarm Intelligence 3 (2): 87–124. doi:10.1007/s11721-008-0021-5.

49. Krishnanand, K.; Ghose, D. (2006). "Glowworm swarm based optimization algorithm for multimodal functions with collective robotics applications". Multiagent and Grid Systems 2: 209–222.

50. Krishnanand, K.; Ghose, D. (2005). "Detection of multiple source locations using a glowworm metaphor with applications to collective robotics". Proceedings of IEEE Swarm Intelligence Symposium. pp. 84–91.

51. Karaboga, D. (2005). "An Idea Based On Honey Bee Swarm For Numerical Optimization". Technical Report-TR06 (Erciyes University, Engineering Faculty, Computer Engineering Department).

52. Haddad, O.B.; Afshar, A.; Mariño, M.A. (2006). "Honey-bees mating optimization (HBMO) algorithm: a new heuristic approach for water resources optimization". Water Resources Management 20 (5): 661–680. doi:10.1007/s11269-005-9001-3.

53. Wierstra, D.; Schaul, T.; Peters, J.; Schmidhuber, J. (2008). "Natural Evolution Strategies". Proceedings of the IEEE Congress on Evolutionary Computation (CEC). Hong Kong, China. pp. 3381–3387. http://www.idsia.ch/~tom/publications/nes.pdf.

54. Yang, X.-S. (2008). Nature-Inspired Metaheuristic Algorithms. Luniver Press. ISBN 1905986289.

55. Yang, X.-S.; Deb, S. (2009). "Cuckoo search via Levy flights". World Congress on Nature & Biologically Inspired Computing (NaBIC 2009). IEEE Publication, USA. pp. 210–214.

56. Shah-Hosseini, H. (2011). "Principal components analysis by the galaxy-based search algorithm: a novel metaheuristic for continuous optimisation". International Journal of Computational Science and Engineering 6 (1/2): 132–140. doi:10.1504/IJCSE.2011.041221.

57. Tamura, K.; Yasuda, K. (2011). "Primary Study of Spiral Dynamics Inspired Optimization". IEEJ Transactions on Electrical and Electronic Engineering 6 (S1): S98–S100. http://onlinelibrary.wiley.com/doi/10.1002/tee.20628/abstract.

58. Tamura, K.; Yasuda, K. (2011). "Spiral Dynamics Inspired Optimization". Journal of Advanced Computational Intelligence and Intelligent Informatics 15 (8): 1116–1122. http://www.fujipress.jp/finder/xslt.php?mode=present&inputfile=JACII001500080020.xml.

59. Trelea, I.C. (2003). "The Particle Swarm Optimization Algorithm: convergence analysis and parameter selection". Information Processing Letters 85 (6): 317–325. doi:10.1016/S0020-0190(02)00447-7.

60. Zaharie, D. (2002). "Critical values for the control parameters of differential evolution algorithms". Proceedings of the 8th International Conference on Soft Computing (MENDEL). Brno, Czech Republic. pp. 62–67.

External links

- EU/ME forum for researchers in the field.

- MetaHeur - Excel application to metaheuristic methods
