Limit of a sequence

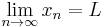

The limit of a sequence $x_1, x_2, \ldots$ is, intuitively, the unique number or point L (if it exists) such that the terms of the sequence become arbitrarily close to L for "large" values of n. If the limit exists, then we say that the sequence is convergent and that it converges (or tends) to L.

Convergence of sequences is a fundamental notion in mathematical analysis, which has been studied since ancient times.

Examples

- The sequence 0.1, 0.01, 0.001, ... tends to 0 (meaning that 0 is its limit).

- The sequence 0.9, 0.99, 0.999, ... tends to 1.

- The sequence 3, 3.1, 3.14, 3.141, ... tends to π.

- More generally, any bounded monotone increasing sequence of real numbers tends to the least upper bound of the set of its values.
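The examples above can be checked numerically. The following is a minimal sketch, not a proof: the function names (`tenths`, `nines`, `pi_digits`, `distances`) are hypothetical, and floating-point arithmetic only approximates the real numbers involved. It lists the distance between each term and its claimed limit.

```python
import math


def truncate(value: float, digits: int) -> float:
    """Cut value off after the given number of decimal digits."""
    scale = 10 ** digits
    return math.floor(value * scale) / scale


# Hypothetical names; each function returns the n-th term for n = 1, 2, 3, ...
def tenths(n: int) -> float:
    return 10.0 ** (-n)              # 0.1, 0.01, 0.001, ...


def nines(n: int) -> float:
    return 1.0 - 10.0 ** (-n)        # 0.9, 0.99, 0.999, ...


def pi_digits(n: int) -> float:
    return truncate(math.pi, n - 1)  # 3, 3.1, 3.14, 3.141, ...


def distances(term, limit: float, count: int) -> list:
    """Distances |x_n - limit| for n = 1, ..., count."""
    return [abs(term(n) - limit) for n in range(1, count + 1)]


if __name__ == "__main__":
    for name, term, limit in [("tenths", tenths, 0.0),
                              ("nines", nines, 1.0),
                              ("pi_digits", pi_digits, math.pi)]:
        print(name, distances(term, limit, 8))
```

In each case the printed distances shrink towards 0, which is what "tends to" means informally.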

Formal definition

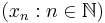

- For a sequence of real numbers

- A real number L is said to be the limit of the sequence xn, written

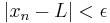

- if and only if for every real number

there exists a natural number

there exists a natural number  such that for every natural number

such that for every natural number  we have

we have  .

.

- As a generalization of this, for a sequence of points $x_1, x_2, \ldots$ in a topological space T:

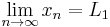

- An element L ∈ T is said to be a limit of this sequence if and only if for every neighborhood S of L there is a natural number N such that $x_n \in S$ for all n ≥ N. In this generality a sequence may admit more than one limit, but if T is a Hausdorff space, then each sequence has at most one limit. When a unique limit L exists, it may be written $\lim_{n \to \infty} x_n = L$.

If a sequence has a limit, we say the sequence is convergent, and that the sequence converges to the limit. Otherwise, the sequence is divergent (see also oscillation).

A null sequence is a sequence that converges to 0.
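To make the quantifiers in the real-number definition concrete, here is a small sketch for one assumed example, the null sequence $x_n = 1/n$. The helper names `index_for` and `check` are hypothetical. For a given ε the code picks N = ⌊1/ε⌋ + 1, so that 1/n < ε for every n ≥ N, and then spot-checks the inequality on a finite range of indices (a finite check can illustrate the definition but cannot prove convergence).

```python
import math


def x(n: int) -> float:
    """Assumed example sequence x_n = 1/n, which converges to 0."""
    return 1.0 / n


def index_for(eps: float) -> int:
    """Return an N such that |x_n - 0| < eps for all n >= N.

    This choice is specific to x_n = 1/n: N > 1/eps implies 1/n < eps.
    """
    return math.floor(1.0 / eps) + 1


def check(eps: float, horizon: int = 1000) -> bool:
    """Spot-check |x_n - 0| < eps for n = N, ..., N + horizon - 1."""
    start = index_for(eps)
    return all(abs(x(n) - 0.0) < eps for n in range(start, start + horizon))


if __name__ == "__main__":
    for eps in (0.1, 0.01, 0.003):
        print(eps, index_for(eps), check(eps))
```

A smaller ε forces a larger N, but some N always exists; that is exactly what the definition demands.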

Hyperreal definition

The definition of the limit using the hyperreal numbers formalizes the intuition that for a "very large" value of the index, the corresponding term is "very close" to the limit. More precisely, a sequence $x_n$ tends to L if for every infinite hypernatural H, the term $x_H$ is infinitely close to L, i.e., the difference $x_H - L$ is infinitesimal. Equivalently, L is the standard part of $x_H$:

$$L = \operatorname{st}(x_H).$$

Thus, the limit can be defined by the formula

$$\lim_{n \to \infty} x_n = \operatorname{st}(x_H),$$

where the limit exists if and only if the right-hand side is independent of the choice of an infinite H.

Comments

The definition means that eventually all elements of the sequence get as close as we want to the limit. (The condition that the elements become arbitrarily close to all of the following elements does not, in general, imply the sequence has a limit. See Cauchy sequence).

A sequence of real numbers may tend to $+\infty$ or $-\infty$ (compare infinite limits). Even though this can be written in the form

$$\lim_{n \to \infty} x_n = +\infty \quad\text{and}\quad \lim_{n \to \infty} x_n = -\infty,$$

such a sequence is called divergent, unless we explicitly consider it a sequence in the affinely extended real number system or (in the first case only) the real projective line. In those settings the sequence has a limit in the space itself and so could be called convergent, but care should be taken that this use of the term does not cause confusion.

The limit of a sequence of points $x_1, x_2, \ldots$ in a topological space T is a special case of the limit of a function: the domain is $\mathbb{N}$ in the space $\mathbb{N} \cup \{+\infty\}$ with the induced topology of the affinely extended real number system, the range is T, and the function argument n tends to +∞, which in this space is a limit point of $\mathbb{N}$.

Further examples

- The sequence 1, -1, 1, -1, 1, ... is oscillatory.

- The series with partial sums 1/2, 1/2 + 1/4, 1/2 + 1/4 + 1/8, 1/2 + 1/4 + 1/8 + 1/16, ... converges with limit 1. This is an example of an infinite series.

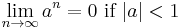

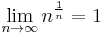

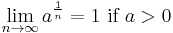

- If a is a real number with absolute value |a| < 1, then the sequence $a^n$ has limit 0. If 0 < a, then the sequence $a^{1/n}$ has limit 1.
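The further examples can be explored with a short sketch. All function names here are hypothetical. The partial sums are computed exactly with `fractions.Fraction`, so the gap to the limit 1 is seen to be exactly $1/2^n$; the power and root sequences are evaluated in floating point and are therefore only approximate.

```python
from fractions import Fraction


def alternating(n: int) -> int:
    """n-th term of 1, -1, 1, -1, ... (n = 1, 2, ...); it never settles."""
    return 1 if n % 2 == 1 else -1


def partial_sum(n: int) -> Fraction:
    """Exact value of 1/2 + 1/4 + ... + 1/2**n."""
    return sum((Fraction(1, 2 ** k) for k in range(1, n + 1)), Fraction(0))


def power_term(a: float, n: int) -> float:
    """n-th term of the sequence a**n (tends to 0 when |a| < 1)."""
    return a ** n


def root_term(a: float, n: int) -> float:
    """n-th term of the sequence a**(1/n) (tends to 1 when a > 0)."""
    return a ** (1.0 / n)


if __name__ == "__main__":
    print([alternating(n) for n in range(1, 7)])
    print([1 - partial_sum(n) for n in range(1, 6)])
    print(power_term(-0.9, 500), root_term(5.0, 10 ** 6))
```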

Properties

Consider the following function: $f(x) = x_n$ if $n - 1 < x \le n$. Then the limit of the sequence $x_n$ is just the limit of $f(x)$ as x tends to infinity.
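The step function described here can be written down directly. In this sketch the sequence $x_n = 1/n$ is an assumed example and the names are hypothetical; the condition n − 1 < t ≤ n is the same as n = ⌈t⌉ for t > 0, which is what `math.ceil` computes.

```python
import math


def x(n: int) -> float:
    """Assumed example sequence x_n = 1/n."""
    return 1.0 / n


def f(t: float) -> float:
    """Step function with f(t) = x_n whenever n - 1 < t <= n (for t > 0)."""
    return x(math.ceil(t))


if __name__ == "__main__":
    for t in (0.5, 1.0, 2.5, 3.0, 1000.25):
        print(t, f(t))
```

Since f is constant on each interval (n − 1, n], its behaviour for large arguments is exactly the behaviour of the sequence for large indices.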

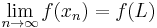

A function f, defined on a first-countable space, is continuous if and only if it is compatible with limits in that $(f(x_n))$ converges to $f(L)$ given that $(x_n)$ converges to L, i.e.

$$\lim_{n \to \infty} x_n = L \quad\text{implies}\quad \lim_{n \to \infty} f(x_n) = f(L).$$

Note that this equivalence does not hold in general for spaces which are not first-countable.

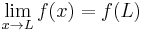

Compare the basic property (or definition):

- f is continuous at x if and only if $\lim_{y \to x} f(y) = f(x)$.

A subsequence of the sequence $(x_n)$ is a sequence of the form $(x_{a(n)})$ where the $a(n)$ are natural numbers with $a(n) < a(n+1)$ for all n. Intuitively, a subsequence omits some elements of the original sequence. A sequence is convergent if and only if all of its subsequences converge towards the same limit.
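The subsequence criterion gives a practical way to see that a sequence diverges: exhibit two subsequences with different limits. The sketch below uses an assumed example, $x_n = (-1)^n + 1/n$, and hypothetical helper names; the index maps a(n) = 2n and a(n) = 2n − 1 are strictly increasing, as the definition requires.

```python
def x(n: int) -> float:
    """Assumed example sequence x_n = (-1)**n + 1/n, which diverges."""
    return (-1) ** n + 1.0 / n


def subsequence(term, a):
    """Return the subsequence n -> term(a(n)) for an increasing index map a."""
    return lambda n: term(a(n))


evens = subsequence(x, lambda n: 2 * n)      # x_2, x_4, x_6, ... tends to 1
odds = subsequence(x, lambda n: 2 * n - 1)   # x_1, x_3, x_5, ... tends to -1

if __name__ == "__main__":
    for n in (1, 10, 1000):
        print(n, evens(n), odds(n))
```

Because the even-indexed terms approach 1 and the odd-indexed terms approach −1, the full sequence cannot converge.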

Every convergent sequence in a metric space is a Cauchy sequence and hence bounded. A bounded monotonic sequence of real numbers is necessarily convergent: this is the monotone convergence theorem, sometimes called the fundamental theorem of analysis. More generally, every Cauchy sequence of real numbers has a limit; in short, the real numbers are complete.

A sequence of real numbers is convergent if and only if its limit superior and limit inferior coincide and are both finite.

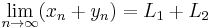

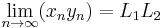

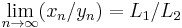

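The algebraic operations are continuous everywhere (except for division where the divisor is zero); thus, given

$$\lim_{n \to \infty} x_n = L_1 \quad\text{and}\quad \lim_{n \to \infty} y_n = L_2,$$

then

$$\lim_{n \to \infty} (x_n + y_n) = L_1 + L_2, \qquad \lim_{n \to \infty} (x_n y_n) = L_1 L_2,$$

and (if $L_2$ and $y_n$ are non-zero)

$$\lim_{n \to \infty} \frac{x_n}{y_n} = \frac{L_1}{L_2}.$$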

These rules are also valid for infinite limits using the rules

- q + ∞ = ∞ for q ≠ -∞

- q × ∞ = ∞ if q > 0

- q × ∞ = -∞ if q < 0

- q / ∞ = 0 if q ≠ ± ∞

(see extended real number line).
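The rules for sums, products and quotients of finite limits can be illustrated numerically. This is a sketch under stated assumptions: the two sequences and the name `limit_rule_errors` are made up for the illustration, with $x_n = 2 + 1/n$ tending to $L_1 = 2$ and $y_n = 3 - 1/n^2$ tending to $L_2 = 3$.

```python
def x(n: int) -> float:
    """Assumed example: x_n = 2 + 1/n, which tends to L1 = 2."""
    return 2.0 + 1.0 / n


def y(n: int) -> float:
    """Assumed example: y_n = 3 - 1/n**2, which tends to L2 = 3 (never zero)."""
    return 3.0 - 1.0 / n ** 2


def limit_rule_errors(n: int) -> tuple:
    """Distances of the sum, product and quotient at index n from
    L1 + L2, L1 * L2 and L1 / L2 respectively."""
    l1, l2 = 2.0, 3.0
    return (abs(x(n) + y(n) - (l1 + l2)),
            abs(x(n) * y(n) - (l1 * l2)),
            abs(x(n) / y(n) - (l1 / l2)))


if __name__ == "__main__":
    for n in (10, 1000, 10 ** 6):
        print(n, limit_rule_errors(n))
```

All three distances shrink as n grows, in line with the stated rules; the quotient rule applies here because neither $y_n$ nor $L_2$ is zero.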

History

The Greek philosopher Zeno of Elea is famous for formulating paradoxes that involve limiting processes.

Leucippus, Democritus, Antiphon, Eudoxus and Archimedes developed the method of exhaustion, which uses an infinite sequence of approximations to determine an area or a volume. Archimedes succeeded in summing what is now called a geometric series.

Newton dealt with series in his works on Analysis with infinite series (written in 1669, circulated in manuscript, published in 1711), Method of fluxions and infinite series (written in 1671, published in English translation in 1736, Latin original published much later) and Tractatus de Quadratura Curvarum (written in 1693, published in 1704 as an Appendix to his Opticks). In the latter work, Newton considers the binomial expansion of $(x+o)^n$, which he then linearizes by taking limits (letting o → 0).

In the 18th century, mathematicians like Euler succeeded in summing some divergent series by stopping at the right moment; they did not much care whether a limit existed, as long as it could be calculated. At the end of the century, Lagrange in his Théorie des fonctions analytiques (1797) opined that the lack of rigour precluded further development in calculus. Gauss in his study of hypergeometric series (1813) for the first time rigorously investigated under which conditions a series converged to a limit.

The modern definition of a limit (for any ε there exists an index N so that ...) was given by Bernhard Bolzano (Der binomische Lehrsatz, Prague 1816, little noticed at the time) and by Weierstrass in the 1870s.

See also

- Limit of a function

- Limit of a net - a net is a topological generalization of a sequence

- Modes of convergence
