Exchangeable random variables

In statistics, an exchangeable sequence of random variables (also sometimes interchangeable) is a sequence such that future samples behave like earlier samples, meaning formally that any order (of a finite number of samples) is equally likely. This formalizes the notion of "the future being predictable on the basis of past experience."

A sequence of independent and identically distributed (i.i.d.) random variables is exchangeable, but so is the sequence obtained by sampling without replacement, which is not independent.
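The claim about sampling without replacement can be checked with exact arithmetic on a small urn. The sketch below assumes a hypothetical urn with 2 red and 3 blue marbles, and the helper name `seq_prob` is also hypothetical. It shows that reordering a pattern of draws does not change its probability, even though the first two draws are dependent.

```python
from fractions import Fraction
from itertools import permutations

def seq_prob(seq, red, blue):
    """Exact probability that draws without replacement from an urn with
    `red` red and `blue` blue marbles begin with the pattern `seq`
    (1 = red, 0 = blue)."""
    prob = Fraction(1)
    for x in seq:
        total = red + blue
        if x == 1:
            if red == 0:
                return Fraction(0)
            prob *= Fraction(red, total)
            red -= 1
        else:
            if blue == 0:
                return Fraction(0)
            prob *= Fraction(blue, total)
            blue -= 1
    return prob

RED, BLUE = 2, 3  # hypothetical urn contents

# Exchangeability: all orderings of the same pattern have the same probability.
orderings = set(permutations((1, 0, 0)))
print({seq_prob(s, RED, BLUE) for s in orderings})  # {Fraction(1, 5)}

# Dependence: P(X1 = 1, X2 = 1) differs from P(X1 = 1) * P(X2 = 1).
joint = seq_prob((1, 1), RED, BLUE)
p1 = seq_prob((1,), RED, BLUE)
p2 = seq_prob((1, 1), RED, BLUE) + seq_prob((0, 1), RED, BLUE)
print(joint, p1 * p2)  # 1/10 4/25
```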

The notion is central to Bruno de Finetti's development of predictive inference and to Bayesian statistics. Where frequentist statistics uses i.i.d. variables (samples from a population), Bayesian statistics more frequently uses exchangeable sequences. They are a key way in which Bayesian inference is "data-centric" (based on past and future observations) rather than "model-centric", since exchangeable sequences that are not i.i.d. cannot be modeled as "sampling from a fixed population".


Definition

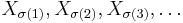

Formally, an exchangeable sequence of random variables is a finite or infinite sequence $X_1, X_2, X_3, \ldots$ of random variables such that for any finite permutation $\sigma$ of the indices $1, 2, 3, \ldots$ (i.e. any permutation $\sigma$ that leaves all but finitely many indices fixed), the joint probability distribution of the permuted sequence

$$X_{\sigma(1)}, X_{\sigma(2)}, X_{\sigma(3)}, \ldots$$

is the same as the joint probability distribution of the original sequence.

A sequence $E_1, E_2, E_3, \ldots$ of events is said to be exchangeable precisely if the sequence of its indicator functions is exchangeable.

Independent and identically distributed random variables are exchangeable.

The distribution function $F_{X_1,\ldots,X_n}(x_1,\ldots,x_n)$ of a finite sequence of exchangeable random variables is symmetric in its arguments $x_1,\ldots,x_n$.
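For a finite sequence of discrete random variables, this symmetry can be checked directly on the joint probability mass function, which must be unchanged by every permutation of the coordinates. A minimal sketch, assuming the pmf is stored as a Python dictionary keyed by outcome tuples; the function `is_exchangeable` and both example distributions are hypothetical. Checking all $n!$ permutations is only practical for short sequences.

```python
from itertools import permutations, product
from math import isclose, prod

def is_exchangeable(pmf, n, tol=1e-12):
    """Return True if the joint pmf of (X_1, ..., X_n) is unchanged by every
    permutation of the coordinates. `pmf` maps length-n outcome tuples to
    probabilities; tuples that are absent have probability 0."""
    for outcome, p in pmf.items():
        for perm in permutations(range(n)):
            permuted = tuple(outcome[i] for i in perm)
            if not isclose(pmf.get(permuted, 0.0), p, abs_tol=tol):
                return False
    return True

# i.i.d. Bernoulli(0.3) bits of length 3: exchangeable.
iid_pmf = {x: prod(0.3 if b else 0.7 for b in x) for x in product((0, 1), repeat=3)}

# X1 is a fair coin and X2 is always 1: not exchangeable, since
# P(X1 = 0, X2 = 1) = 0.5 but P(X1 = 1, X2 = 0) = 0.
skewed_pmf = {(0, 1): 0.5, (1, 1): 0.5}

print(is_exchangeable(iid_pmf, 3), is_exchangeable(skewed_pmf, 2))  # True False
```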

Examples

- Any weighted average (mixture) of i.i.d. sequences of random variables is exchangeable. See in particular de Finetti's theorem.

- Suppose an urn contains $n$ red and $m$ blue marbles, and marbles are drawn without replacement until the urn is empty. Let $X_i$ be the indicator random variable of the event that the $i$th marble drawn is red. Then $\{X_i\}_{i=1,\ldots,n+m}$ is an exchangeable sequence. This sequence cannot be extended to any longer exchangeable sequence.

- Let $(X, Y)$ have a bivariate normal distribution with parameters $\mu = 0$, $\sigma_x = \sigma_y = 1$ and an arbitrary correlation coefficient $\rho \in (-1, 1)$. The random variables $X$ and $Y$ are then exchangeable, but independent only if $\rho = 0$. The density function is

$$p(x, y) = p(y, x) \propto \exp\left[-\frac{1}{2(1-\rho^2)}\left(x^2 + y^2 - 2\rho xy\right)\right].$$
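The first example in the list above, a weighted average (mixture) of i.i.d. sequences, can be checked exactly for 0/1 sequences: if $p$ is drawn first and the bits are then i.i.d. Bernoulli($p$), the probability of a sequence depends only on how many 1s it contains, so every reordering is equally likely. A minimal sketch, assuming a hypothetical two-point mixing distribution ($p = 1/5$ or $p = 4/5$, each with weight 1/2); the name `mixture_prob` is also hypothetical. The output shows bits that are exchangeable but positively correlated, hence not independent.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical mixing distribution: p = 1/5 or p = 4/5, each with weight 1/2.
MIXTURE = [(Fraction(1, 2), Fraction(1, 5)), (Fraction(1, 2), Fraction(4, 5))]

def mixture_prob(seq, mixture=MIXTURE):
    """Probability of the 0/1 sequence `seq` when p is first drawn from
    `mixture` (a list of (weight, p) pairs) and the bits are then i.i.d.
    Bernoulli(p)."""
    ones = sum(seq)
    zeros = len(seq) - ones
    return sum(w * p**ones * (1 - p)**zeros for w, p in mixture)

# Exchangeability: the probability depends only on the number of 1s.
print({mixture_prob(s) for s in permutations((1, 1, 0))})  # {Fraction(2, 25)}

# Not independent: P(X1 = 1, X2 = 1) exceeds P(X1 = 1) * P(X2 = 1),
# where P(X2 = 1) = P(X1 = 1) by exchangeability.
print(mixture_prob((1, 1)), mixture_prob((1,)) ** 2)  # 17/50 1/4
```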

Properties

- de Finetti's theorem characterizes infinite exchangeable sequences as mixtures of i.i.d. sequences: while such a sequence need not itself be i.i.d., its distribution can be expressed as a mixture of distributions of i.i.d. sequences.

- An infinite exchangeable sequence is strictly stationary, so a law of large numbers in the form of the Birkhoff–Khinchin theorem applies.

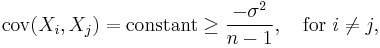

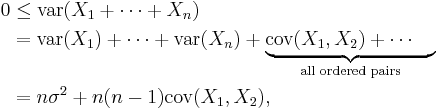

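- Covariance: for a finite exchangeable sequence $\{X_i\}_{i=1,\ldots,n}$ of length $n$,

$$\operatorname{cov}(X_i, X_j) = \operatorname{cov}(X_1, X_2) \ge -\frac{\sigma^2}{n-1} \quad \text{for } i \ne j,$$

- where $\sigma^2 = \operatorname{var}(X_1)$.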

- The covariance is constant in the sense that it does not depend on the values of the indices $i$ and $j$, as long as $i \ne j$.

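- This may be seen as follows:

$$0 \le \operatorname{var}(X_1 + \cdots + X_n) = \operatorname{var}(X_1) + \cdots + \operatorname{var}(X_n) + 2\sum_{i<j}\operatorname{cov}(X_i, X_j) = n\sigma^2 + n(n-1)\operatorname{cov}(X_1, X_2),$$

- and then solve the inequality for the covariance. Equality is achieved in a simple urn model: an urn contains 1 red marble and $n - 1$ green marbles, and these are sampled without replacement until the urn is empty. Let $X_i = 1$ if the red marble is drawn on the $i$th trial and 0 otherwise. Equality holds here because $X_1 + \cdots + X_n = 1$ is constant, so its variance is zero.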

- A finite sequence that achieves the lower covariance bound cannot be extended to a longer exchangeable sequence.

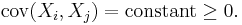

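- For an infinite exchangeable sequence, the bound above applies to the first $n$ terms for every $n$; letting $n \to \infty$ gives

$$\operatorname{cov}(X_i, X_j) = \operatorname{cov}(X_1, X_2) \ge 0 \quad \text{for } i \ne j.$$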
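The covariance bound and its equality case can be checked with exact arithmetic. The sketch below (the helper `urn_moments` and the urn sizes are hypothetical) computes $\sigma^2 = \operatorname{var}(X_1)$ and $\operatorname{cov}(X_1, X_2)$ for an urn with `red` red and `green` green marbles emptied without replacement, where $X_i$ indicates that the $i$th draw is red, and compares the covariance with $-\sigma^2/(n-1)$. The first urn is the one-red-marble model described above. Equality also holds for the other urns tried, as expected: once the urn is emptied, $X_1 + \cdots + X_n$ equals the fixed number of red marbles, so its variance is zero.

```python
from fractions import Fraction

def urn_moments(red, green):
    """Exact var(X_1) and cov(X_1, X_2) when an urn with `red` red and `green`
    green marbles is emptied without replacement; X_i = 1 if draw i is red.
    Requires at least two marbles."""
    n = red + green
    mean = Fraction(red, n)                             # P(X_i = 1)
    var = mean * (1 - mean)                             # Bernoulli variance
    both_red = Fraction(red * (red - 1), n * (n - 1))   # P(X_1 = 1, X_2 = 1)
    cov = both_red - mean ** 2
    return var, cov, n

for red, green in [(1, 4), (3, 5), (2, 2)]:
    var, cov, n = urn_moments(red, green)
    bound = -var / (n - 1)
    print(red, green, cov, bound, cov == bound)
# 1 4 -1/25 -1/25 True
# 3 5 -15/448 -15/448 True
# 2 2 -1/12 -1/12 True
```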

Applications

The von Neumann extractor is a randomness extractor that depends on exchangeability: it gives a method to take an exchangeable sequence of 0s and 1s (Bernoulli trials), with some probability $p$ of 0 and $q = 1 - p$ of 1, and produce a (shorter) exchangeable sequence of 0s and 1s with probability 1/2.

Partition the sequence into non-overlapping pairs: if the two elements of the pair are equal (00 or 11), discard it; if the two elements of the pair are unequal (01 or 10), keep the first. This yields a sequence of Bernoulli trials with $p = 1/2$, since, by exchangeability, the probabilities of a given pair being 01 or 10 are equal.
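The pairing procedure can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation; the function name `von_neumann_extract`, the bias $p = 0.2$ and the use of i.i.d. input bits (a special case of an exchangeable sequence) are assumptions made for the example. A trailing unpaired bit, if any, is dropped.

```python
import random

def von_neumann_extract(bits):
    """Split `bits` into non-overlapping pairs, discard 00 and 11,
    and keep the first bit of each 01 or 10 pair."""
    out = []
    for a, b in zip(bits[0::2], bits[1::2]):  # an odd final bit is ignored
        if a != b:
            out.append(a)
    return out

rng = random.Random(0)   # fixed seed for reproducibility
p_zero = 0.2             # assumed bias: P(bit = 0) = 0.2
biased = [0 if rng.random() < p_zero else 1 for _ in range(200_000)]
fair = von_neumann_extract(biased)
print(len(fair), sum(fair) / len(fair))  # roughly 32000 bits, mean close to 0.5
```

On average only a fraction $2pq$ of the pairs is kept, so the output is much shorter than the input when $p$ is far from 1/2.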

See also

- Permutation tests, statistical tests based on exchanging observations between groups

- U-statistic
