Dyadics

Dyadics are mathematical objects, representing linear functions of vectors. Dyadic notation was first established by Gibbs in 1884.


Definition

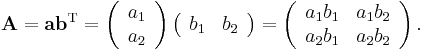

Dyad A is formed by two vectors a and b (complex in general). Here, upper-case bold variables denote dyads (as well as general dyadics) whereas lower-case bold variables denote vectors.

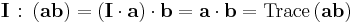

In matrix notation:

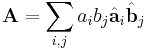

In general algebraic form:

where $\mathbf{e}_i$ and $\mathbf{e}_j$ are unit vectors (also known as coordinate axes) and $i, j$ run from 1 to the space dimension.
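As a minimal sketch of the matrix form of a dyad, assuming the usual convention that the component with indices (i, j) is the product of the i-th component of a and the j-th component of b, the matrix can be built as an outer product. The helper name `dyad` is hypothetical and chosen only for this illustration.

```python
def dyad(a, b):
    """Matrix of the dyad ab: entry (i, j) is a[i] * b[j] (outer product)."""
    return [[ai * bj for bj in b] for ai in a]


def apply_right(A, v):
    """Right dot product A . v, i.e. the ordinary matrix-vector product."""
    return [sum(A[i][j] * v[j] for j in range(len(v))) for i in range(len(A))]


a = [1, 2, 3]
b = [4, 5, 6]
A = dyad(a, b)

# Acting on a vector v, the dyad ab gives a scaled by (b . v).
v = [1, 0, 1]
b_dot_v = sum(x * y for x, y in zip(b, v))
result = apply_right(A, v)
expected = [b_dot_v * x for x in a]
```

Here `result` and `expected` agree, which is the sense in which a dyad represents a linear function of vectors.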

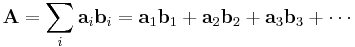

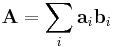

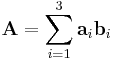

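A dyadic polynomial A, otherwise known as a dyadic, is formed from multiple vectors $\mathbf{a}_i$ and $\mathbf{b}_i$ as a sum of dyads: $\mathbf{A} = \sum_i \mathbf{a}_i\mathbf{b}_i = \mathbf{a}_1\mathbf{b}_1 + \mathbf{a}_2\mathbf{b}_2 + \cdots$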

A dyadic that cannot be reduced to a sum of fewer than three dyads is said to be complete. In this case, the forming vectors are non-coplanar; see Chen (1983).

The following table classifies dyadics:

| | Determinant | Adjoint | Matrix and its rank |
|---|---|---|---|
| Zero | = 0 | = 0 | = 0; rank 0: all zeroes |
| Linear | = 0 | = 0 | ≠ 0; rank 1: at least one non-zero element and all 2×2 subdeterminants zero (single dyadic) |
| Planar | = 0 | ≠ 0 (single dyadic) | ≠ 0; rank 2: at least one non-zero 2×2 subdeterminant |
| Complete | ≠ 0 | ≠ 0 | ≠ 0; rank 3: non-zero determinant |
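The classification in the table can be sketched in code for a 3×3 matrix. This is only an illustration under stated assumptions: the function names are hypothetical, and the entries are taken to be exact (integers), so that comparisons with zero are meaningful. Floating-point input would need a tolerance instead.

```python
def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def minors2(m):
    """All nine 2x2 subdeterminants; up to sign, these are the adjoint's entries."""
    out = []
    for i in range(3):
        for j in range(3):
            r = [k for k in range(3) if k != i]
            c = [k for k in range(3) if k != j]
            out.append(m[r[0]][c[0]] * m[r[1]][c[1]]
                       - m[r[0]][c[1]] * m[r[1]][c[0]])
    return out


def classify(m):
    """Return 'complete', 'planar', 'linear' or 'zero' following the table."""
    if det3(m) != 0:
        return "complete"          # rank 3
    if any(x != 0 for x in minors2(m)):
        return "planar"            # rank 2: adjoint is non-zero
    if any(x != 0 for row in m for x in row):
        return "linear"            # rank 1: a single dyad
    return "zero"                  # rank 0
```

For example, the matrix of a single dyad, such as `[[4, 5, 6], [8, 10, 12], [12, 15, 18]]`, is classified as linear because every 2×2 subdeterminant vanishes.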

Dyadics algebra

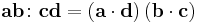

Dyadic with vector

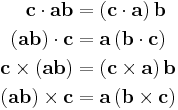

There are four operations defined between a vector and a dyadic:

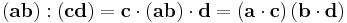
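As a rough illustration, and assuming the four operations are the left and right dot products and the left and right cross products, they can be written out for a single dyad bc and a vector v. The dot products return vectors, while the cross products return dyads. All helper names here are hypothetical.

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def scale(s, u):
    return [s * x for x in u]


def dyad(a, b):
    """Matrix of the dyad ab."""
    return [[ai * bj for bj in b] for ai in a]


b, c, v = [1, 2, 0], [0, 1, 1], [2, 0, 1]

left_dot = scale(dot(v, b), c)       # v . (bc) = (v . b) c   -> vector
right_dot = scale(dot(c, v), b)      # (bc) . v = b (c . v)   -> vector
left_cross = dyad(cross(v, b), c)    # v x (bc) = (v x b) c   -> dyad
right_cross = dyad(b, cross(c, v))   # (bc) x v = b (c x v)   -> dyad
```

For a general dyadic, each operation is applied term by term to the dyads in the sum.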

Dyadic with dyadic

There are five operations defined between one dyadic and another:

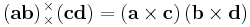

Simple-dot product

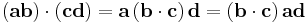

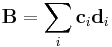

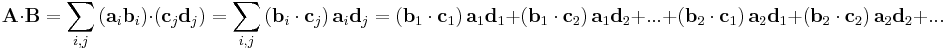

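For two general dyadics $\mathbf{A} = \sum_i \mathbf{a}_i\mathbf{b}_i$ and $\mathbf{B} = \sum_j \mathbf{c}_j\mathbf{d}_j$:

$$\mathbf{A}\cdot\mathbf{B} = \sum_i \sum_j \left(\mathbf{b}_i\cdot\mathbf{c}_j\right)\mathbf{a}_i\mathbf{d}_j$$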

Double-dot product

There are two ways to define the double-dot product. Many sources use a definition of the double-dot product rooted in the matrix double-dot product,

whereas other sources use a definition unique to dyads (usually referred to as the "colon product"):

One must be careful when deciding which convention to use. As there are no analogous matrix operations for the remaining dyadic products, no ambiguities in their definitions appear.
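To see why the choice of convention matters, the following sketch evaluates, for two dyads ab and cd, the two pairings that appear in the literature: (a·c)(b·d) and (a·d)(b·c). Which sources attach which name to which pairing is not asserted here, and the function names are hypothetical.

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def ddot_ac_bd(a, b, c, d):
    """Pair first with first and second with second: (a . c)(b . d)."""
    return dot(a, c) * dot(b, d)


def ddot_ad_bc(a, b, c, d):
    """Pair first with second and second with first: (a . d)(b . c)."""
    return dot(a, d) * dot(b, c)


a, b = [1, 0, 0], [0, 1, 0]
c, d = [1, 1, 0], [0, 1, 0]

first = ddot_ac_bd(a, b, c, d)    # (a . c)(b . d)
second = ddot_ad_bc(a, b, c, d)   # (a . d)(b . c)
```

For these vectors the two conventions give different scalars, although each convention on its own is commutative under exchanging the two dyads.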

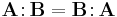

The double-dot product is commutative.

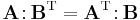

There is a special double-dot product with a transpose:

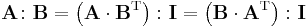

Another identity is:

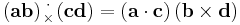

Dot–cross product

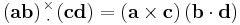

Cross–dot product

Double-cross product

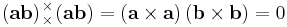

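For any dyad formed from two vectors a and b, the double-cross product with itself is zero, because it contains the factors $\mathbf{a}\times\mathbf{a}$ and $\mathbf{b}\times\mathbf{b}$, both of which vanish.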

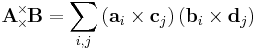

For two general dyadics, however, the double-cross product is defined as:

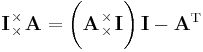

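For the double-cross product of a dyadic with itself, the result is generally non-zero. For example, a dyadic composed of six different vectors, $\mathbf{A} = \mathbf{a}_1\mathbf{b}_1 + \mathbf{a}_2\mathbf{b}_2 + \mathbf{a}_3\mathbf{b}_3$, has the non-zero self-double-cross product given by the final equation of this article.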

Unit dyadic

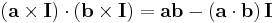

For any vector a, there exists a unit dyadic I such that $\mathbf{I}\cdot\mathbf{a} = \mathbf{a}\cdot\mathbf{I} = \mathbf{a}$.

For any basis of three vectors a, b and c, with the corresponding reciprocal basis, the unit dyadic is defined by

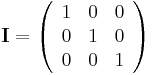
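A minimal sketch of this construction follows. It assumes the standard reciprocal basis, in which the vector reciprocal to a is (b×c) divided by a·(b×c), and cyclically for b and c. It also assumes that the unit dyadic is the sum of the dyads of each basis vector with its reciprocal vector. The function names are hypothetical, and exact rational arithmetic is used so that the comparison with the identity matrix is exact.

```python
from fractions import Fraction


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def dyad(a, b):
    return [[ai * bj for bj in b] for ai in a]


def reciprocal_basis(a, b, c):
    """Reciprocal vectors of a, b, c (assumes a, b, c are not coplanar)."""
    vol = Fraction(dot(a, cross(b, c)))
    if vol == 0:
        raise ValueError("basis vectors are coplanar")
    return ([x / vol for x in cross(b, c)],
            [x / vol for x in cross(c, a)],
            [x / vol for x in cross(a, b)])


def unit_dyadic(a, b, c):
    """Sum of the dyads formed by each basis vector and its reciprocal vector."""
    ra, rb, rc = reciprocal_basis(a, b, c)
    terms = [dyad(a, ra), dyad(b, rb), dyad(c, rc)]
    return [[sum(t[i][j] for t in terms) for j in range(3)] for i in range(3)]


a, b, c = [1, 1, 0], [0, 1, 1], [1, 0, 1]
ra, rb, rc = reciprocal_basis(a, b, c)
I = unit_dyadic(a, b, c)
```

Even though this basis is not orthonormal, the resulting matrix `I` is the 3×3 identity.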

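In Cartesian coordinates, $\mathbf{I} = \mathbf{i}\mathbf{i} + \mathbf{j}\mathbf{j} + \mathbf{k}\mathbf{k}$.

For an orthonormal basis $\mathbf{e}_i$, $\mathbf{I} = \sum_i \mathbf{e}_i\mathbf{e}_i$.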

The corresponding matrix is

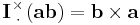

Rotation dyadic

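For any vector a, $\mathbf{a}\times\mathbf{I}$ is a 90 degree right-hand rotation dyadic around a: acting on a vector v perpendicular to a, it gives $(\mathbf{a}\times\mathbf{I})\cdot\mathbf{v} = \mathbf{a}\times\mathbf{v}$, which is v rotated by 90 degrees about a and scaled by $|\mathbf{a}|$.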
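The rotation property can be sketched numerically. The example assumes that the dyadic in question is the cross product of a with the unit dyadic, built term by term as the sum of the dyads (a×e_i)e_i over the Cartesian unit vectors. The function names are hypothetical.

```python
def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def cross_with_identity(a):
    """Matrix of a x I = sum_i (a x e_i) e_i, so column i holds a x e_i."""
    e = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    cols = [cross(a, ei) for ei in e]
    return [[cols[j][i] for j in range(3)] for i in range(3)]


def apply_right(A, v):
    return [sum(A[i][j] * v[j] for j in range(3)) for i in range(3)]


a = [0, 0, 1]                      # unit vector along z
R = cross_with_identity(a)

x_rotated = apply_right(R, [1, 0, 0])   # x axis rotated about z
y_rotated = apply_right(R, [0, 1, 0])   # y axis rotated about z
along_a = apply_right(R, a)             # component along a is annihilated
```

With a along the z axis, the x axis is sent to the y axis and the y axis to the negative x axis, which is a right-hand quarter turn. The component along a itself is mapped to zero, so the dyadic acts as a rotation only on vectors perpendicular to a.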

The self-double-cross product of the dyadic $\mathbf{A} = \mathbf{a}_1\mathbf{b}_1 + \mathbf{a}_2\mathbf{b}_2 + \mathbf{a}_3\mathbf{b}_3$ discussed under Double-cross product is

$$\mathbf{A}\!\!\!\begin{array}{c}_\times \\ ^\times\end{array}\!\!\!\mathbf{A} = 2\left[\left(\mathbf{a}_1\times\mathbf{a}_2\right)\left(\mathbf{b}_1\times\mathbf{b}_2\right) + \left(\mathbf{a}_2\times\mathbf{a}_3\right)\left(\mathbf{b}_2\times\mathbf{b}_3\right) + \left(\mathbf{a}_3\times\mathbf{a}_1\right)\left(\mathbf{b}_3\times\mathbf{b}_1\right)\right]$$
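The identity above can be checked numerically. The sketch assumes that the double-cross product of two dyads ab and cd is the dyad (a×c)(b×d), extended term by term to sums of dyads. A dyadic is represented as a list of (a_i, b_i) vector pairs, and all names are hypothetical.

```python
def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def dyad(a, b):
    return [[ai * bj for bj in b] for ai in a]


def add(A, B):
    return [[A[i][j] + B[i][j] for j in range(3)] for i in range(3)]


ZERO = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]


def double_cross(P, Q):
    """Sum over all pairs of terms of (a_i x c_j)(b_i x d_j)."""
    total = ZERO
    for a, b in P:
        for c, d in Q:
            total = add(total, dyad(cross(a, c), cross(b, d)))
    return total


def self_double_cross_formula(P):
    """Right-hand side of the identity for a three-term dyadic."""
    total = ZERO
    for i, j in [(0, 1), (1, 2), (2, 0)]:
        (ai, bi), (aj, bj) = P[i], P[j]
        total = add(total, dyad(cross(ai, aj), cross(bi, bj)))
    return [[2 * x for x in row] for row in total]


A = [([1, 0, 0], [0, 1, 0]),
     ([1, 1, 0], [1, 0, 1]),
     ([0, 1, 2], [2, 0, 1])]
lhs = double_cross(A, A)
rhs = self_double_cross_formula(A)

single = [([1, 2, 3], [4, 5, 6])]           # one dyad only
single_result = double_cross(single, single)
```

The factor of 2 arises because the terms for the index pairs (i, j) and (j, i) are equal, while the terms with i = j vanish. For a single dyad only the vanishing term remains.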