Curvilinear coordinates

Curvilinear coordinates are a coordinate system for Euclidean space in which the coordinate lines may be curved. These coordinates may be derived from a set of Cartesian coordinates by using a transformation that is locally invertible (a one-to-one map) at each point. This means that one can convert a point given in a Cartesian coordinate system to its curvilinear coordinates and back. The name curvilinear coordinates, coined by the French mathematician Lamé, derives from the fact that the coordinate surfaces of the curvilinear systems are curved.

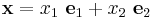

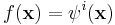

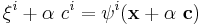

In two dimensional Cartesian coordinates, we can represent a point in space by the coordinates ( ) and in vector form as

) and in vector form as  where

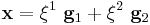

where  are basis vectors. We can describe the same point in curvilinear coordinates in a similar manner, except that the coordinates are now (

are basis vectors. We can describe the same point in curvilinear coordinates in a similar manner, except that the coordinates are now ( ) and the position vector is

) and the position vector is  . The quantities

. The quantities  and

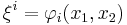

and  are related by the curvilinear transformation

are related by the curvilinear transformation  . The basis vectors

. The basis vectors  and

and  are related by

are related by

The coordinate lines in a curvilinear coordinate systems are level curves of  and

and  in the two-dimensional plane.

in the two-dimensional plane.

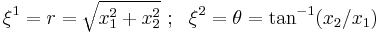

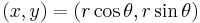

An example of a curvilinear coordinate system in two dimensions is the polar coordinate system, with $(q^1, q^2) = (r, \theta)$. In that case the transformation is

$$x = r\cos\theta, \qquad y = r\sin\theta$$
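The curvilinear basis vectors are the partial derivatives of the position vector, $\mathbf{b}_i = \partial\mathbf{r}/\partial q^i$. A minimal sketch in plain Python approximates them for polar coordinates by differentiating $x = r\cos\theta$, $y = r\sin\theta$ numerically; the function names and the central-difference step `h` are assumptions of this example, not part of the text.

```python
import math

def polar_to_cartesian(r, theta):
    """Direct transformation for polar coordinates: x = r cos(theta), y = r sin(theta)."""
    return (r * math.cos(theta), r * math.sin(theta))

def polar_basis(r, theta, h=1e-6):
    """Approximate b_1 = dr/dr and b_2 = dr/dtheta by central differences (step h is an assumption)."""
    xp, yp = polar_to_cartesian(r + h, theta)
    xm, ym = polar_to_cartesian(r - h, theta)
    b1 = ((xp - xm) / (2 * h), (yp - ym) / (2 * h))
    xp, yp = polar_to_cartesian(r, theta + h)
    xm, ym = polar_to_cartesian(r, theta - h)
    b2 = ((xp - xm) / (2 * h), (yp - ym) / (2 * h))
    return b1, b2

b1, b2 = polar_basis(2.0, math.pi / 6)
print("b1 =", b1)  # radial direction, unit length
print("b2 =", b2)  # tangential direction, length r
```

Analytically $\mathbf{b}_1 = (\cos\theta, \sin\theta)$ and $\mathbf{b}_2 = (-r\sin\theta, r\cos\theta)$: the basis vectors change direction from point to point, and $\mathbf{b}_2$ also changes length.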

Other well-known examples of curvilinear systems are cylindrical and spherical polar coordinates for R3. While a Cartesian coordinate surface is a plane (e.g., z = 0 defines the x-y plane), the coordinate surface r = 1 in spherical polar coordinates is the surface of a unit sphere in R3, which is curved.

Coordinates are often used to define the location or distribution of physical quantities, which may be scalars, vectors, or tensors. Depending on the application, a curvilinear coordinate system may be simpler to use than the Cartesian coordinate system. For instance, a physical problem with spherical symmetry defined in R3 (e.g., motion in the field of a point mass or charge) is usually easier to solve in spherical polar coordinates than in Cartesian coordinates. Boundary conditions may also impose a symmetry: one would describe the motion of a particle in a rectangular box in Cartesian coordinates, whereas one would prefer spherical coordinates for a particle in a sphere.

Many of the concepts in vector calculus, which are given in Cartesian or spherical polar coordinates, can be formulated in arbitrary curvilinear coordinates. This gives a certain economy of thought, as it is possible to derive general expressions, valid for any curvilinear coordinate system, for concepts such as the gradient, divergence, curl, and the Laplacian.

Curvilinear coordinates from a mathematical perspective

From a more general and abstract perspective, a curvilinear coordinate system is simply a coordinate patch on the differentiable manifold En (n-dimensional Euclidean space) that is diffeomorphic to the Cartesian coordinate patch on the manifold.[1] Note that two diffeomorphic coordinate patches on a differentiable manifold need not overlap differentiably. With this definition of a curvilinear coordinate system, all the results that follow below are applications of standard theorems in differential topology.

General curvilinear coordinates

In Cartesian coordinates, the position of a point P(x, y, z) is determined by the intersection of three mutually perpendicular planes, x = const, y = const, z = const. The coordinates x, y and z are related to three new quantities q1, q2 and q3 by the direct transformation (curvilinear to Cartesian coordinates):

$$x = x(q_1, q_2, q_3), \qquad y = y(q_1, q_2, q_3), \qquad z = z(q_1, q_2, q_3)$$

The above equation system can be solved for the arguments q1, q2 and q3, giving the inverse transformation (Cartesian to curvilinear coordinates):

$$q_1 = q_1(x, y, z), \qquad q_2 = q_2(x, y, z), \qquad q_3 = q_3(x, y, z)$$

The transformation functions are such that there is a one-to-one relationship between points in the "old" and "new" coordinates; that is, those functions are bijections, and they fulfil the following requirements within their domains:

- 1) They are smooth functions

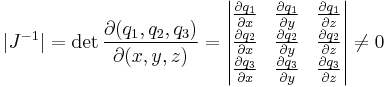

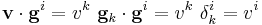

- 2) The inverse Jacobian determinant

is not zero; that is, the transformation is invertible according to the inverse function theorem. The condition that the Jacobian determinant is not zero reflects the fact that three surfaces from different families intersect in one and only one point and thus determine the position of this point in a unique way.[2]

A given point may be described by specifying either x, y, z or q1, q2, q3. Each of the inverse equations describes a surface in the new coordinates, and the intersection of three such surfaces locates the point in three-dimensional space (Fig. 1). The surfaces q1 = const, q2 = const, q3 = const are called the coordinate surfaces; the space curves formed by their pairwise intersections are called the coordinate lines. The coordinate axes are determined by the tangents to the coordinate lines at the intersection of three surfaces. Unlike the axes of Cartesian coordinates, they do not in general have fixed directions in space. The quantities (q1, q2, q3) are the curvilinear coordinates of a point P(q1, q2, q3).

In general, (q1, q2, ..., qn) are curvilinear coordinates in n-dimensional space.

Example: Spherical coordinates

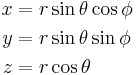

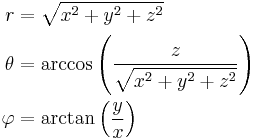

Spherical coordinates are one of the most used curvilinear coordinate systems in such fields as Earth sciences, cartography, and physics (quantum physics, relativity, etc.). The curvilinear coordinates (q1, q2, q3) in this system are, respectively, r (radial distance or polar radius, r ≥ 0), θ (zenith or latitude, 0 ≤ θ ≤ 180°), and φ (azimuth or longitude, 0 ≤ φ ≤ 360°). The direct relationship between Cartesian and spherical coordinates is given by:

Solving the above equation system for r, θ, and φ gives the inverse relations between spherical and Cartesian coordinates:
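The direct and inverse relations can be checked against each other with a short round-trip sketch. It assumes θ is the zenith angle and φ the azimuth, as defined above; using `math.atan2` rather than a bare arctan(y/x) is a choice of this example that places φ in the correct quadrant, and the function names are illustrative.

```python
import math

def spherical_to_cartesian(r, theta, phi):
    """Direct transformation: theta is the zenith angle, phi the azimuth."""
    return (r * math.sin(theta) * math.cos(phi),
            r * math.sin(theta) * math.sin(phi),
            r * math.cos(theta))

def cartesian_to_spherical(x, y, z):
    """Inverse transformation with 0 <= theta <= pi and 0 <= phi < 2*pi."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        return 0.0, 0.0, 0.0  # theta and phi are undefined at the origin; 0 by convention here
    theta = math.acos(max(-1.0, min(1.0, z / r)))  # clamp guards against rounding
    phi = math.atan2(y, x) % (2.0 * math.pi)        # atan2 resolves the quadrant of phi
    return r, theta, phi

point = spherical_to_cartesian(2.5, 2.0, 4.0)
print(point)
print(cartesian_to_spherical(*point))  # recovers (2.5, 2.0, 4.0) up to rounding
```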

The respective spherical coordinate surfaces are obtained in terms of Cartesian coordinates by setting each spherical coordinate in the above inverse transformations to a constant value. Thus (Fig. 2), r = const are concentric spherical surfaces centered at the origin O of the Cartesian coordinates; θ = const are circular conical surfaces with apex at O and axis Oz; and φ = const are half-planes bounded by the Oz axis and perpendicular to the xOy Cartesian coordinate plane. Each spherical coordinate line is formed by the pairwise intersection of the surfaces corresponding to the other two coordinates: r lines (radial distance) are rays Or at the intersection of the cones θ = const and the half-planes φ = const; θ lines (meridians) are semicircles formed by the intersection of the spheres r = const and the half-planes φ = const; and φ lines (parallels) are circles in planes parallel to xOy at the intersection of the spheres r = const and the cones θ = const. The location of a point P(r, θ, φ) is determined by the point of intersection of the three coordinate surfaces or, alternatively, by the point of intersection of the three coordinate lines. The θ and φ axes at P(r, θ, φ) are the mutually perpendicular (orthogonal) tangents to the meridian and parallel through this point, while the r axis is directed along the radial distance and is orthogonal to both the θ and φ axes.

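The inverse transformations are smooth functions within their defined domains. The Jacobian (functional determinant) of the inverse transformations is:

$$J^{-1} = \frac{\partial(r, \theta, \varphi)}{\partial(x, y, z)} = \frac{1}{r^2\sin\theta}$$

It is defined and nonzero everywhere except on the z axis (r = 0 or sin θ = 0), where the spherical coordinates of a point are not unique.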
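The value of this determinant can be checked numerically. The sketch below approximates the Jacobian matrix of the direct transformation by central differences; its determinant should be $r^2\sin\theta$, the reciprocal of $J^{-1}$. The finite-difference step and the helper names are assumptions of this example.

```python
import math

def spherical_to_cartesian(r, theta, phi):
    return (r * math.sin(theta) * math.cos(phi),
            r * math.sin(theta) * math.sin(phi),
            r * math.cos(theta))

def jacobian_matrix(f, q, h=1e-6):
    """Central-difference approximation of J[i][j] = d f_i / d q_j for a map R^3 -> R^3."""
    J = [[0.0] * 3 for _ in range(3)]
    for j in range(3):
        qp, qm = list(q), list(q)
        qp[j] += h
        qm[j] -= h
        fp, fm = f(*qp), f(*qm)
        for i in range(3):
            J[i][j] = (fp[i] - fm[i]) / (2 * h)
    return J

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

r, theta, phi = 2.0, 0.8, 1.3
detJ = det3(jacobian_matrix(spherical_to_cartesian, (r, theta, phi)))
print(detJ, r ** 2 * math.sin(theta))                # direct Jacobian vs. r^2 sin(theta)
print(1.0 / detJ, 1.0 / (r ** 2 * math.sin(theta)))  # inverse Jacobian J^{-1}
```

Evaluating at θ = 0 gives a determinant of (numerically) zero, which is the singularity on the z axis mentioned above.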

Curvilinear local basis

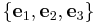

The concept of a basis

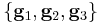

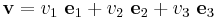

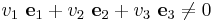

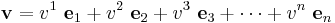

To define a vector in terms of coordinates, an additional coordinate-associated structure, called a basis, is needed. A basis in three-dimensional space is a set of three linearly independent vectors $\{\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3\}$, called basis vectors. Each basis vector is associated with a coordinate in the respective dimension. Any vector $\mathbf{v}$ can be represented as a sum of vectors $v^n\mathbf{e}_n$ formed by multiplication of a basis vector ($\mathbf{e}_n$) by a scalar coefficient ($v^n$), called a component. Each vector, then, has exactly one component in each dimension and can be represented by the vector sum:

$$\mathbf{v} = v^1\mathbf{e}_1 + v^2\mathbf{e}_2 + v^3\mathbf{e}_3$$

A requirement for the coordinate system and its basis is that if at least one $a_n \neq 0$, then

$$a_1\mathbf{e}_1 + a_2\mathbf{e}_2 + a_3\mathbf{e}_3 \neq \mathbf{0}$$

This condition is called linear independence. Linear independence implies that there cannot exist bases with basis vectors of zero magnitude because the latter will give zero-magnitude vectors when multiplied by any component. Non-coplanar vectors are linearly independent, and any triple of non-coplanar vectors can serve as a basis in three dimensions.
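For three vectors in three-dimensional space, linear independence is equivalent to a nonzero scalar triple product $\mathbf{e}_1\cdot(\mathbf{e}_2\times\mathbf{e}_3)$, i.e. the vectors span a parallelepiped of nonzero volume. A minimal check in plain Python, assuming a user-chosen tolerance `tol` (it is scale-dependent and not part of the text):

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

def is_basis(e1, e2, e3, tol=1e-12):
    """True if the triple product is (numerically) nonzero, i.e. the vectors are non-coplanar."""
    return abs(dot(e1, cross(e2, e3))) > tol

print(is_basis((1, 0, 0), (1, 1, 0), (1, 1, 1)))  # True: non-coplanar
print(is_basis((1, 0, 0), (0, 1, 0), (1, 1, 0)))  # False: all lie in the xy plane
print(is_basis((0, 0, 0), (0, 1, 0), (0, 0, 1)))  # False: contains a zero vector
```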

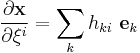

Basis vectors in curvilinear coordinates

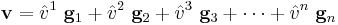

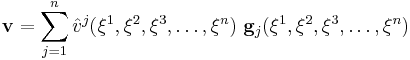

For general curvilinear coordinates, basis vectors and components vary from point to point. Consider an $n$-dimensional vector $\mathbf{v}$ that is expressed in a particular Cartesian coordinate system as

$$\mathbf{v} = \sum_{i=1}^{n} v^i\,\mathbf{e}_i$$

If we change the basis vectors to $\mathbf{b}_i$, then the same vector $\mathbf{v}$ may be expressed as

$$\mathbf{v} = \sum_{i=1}^{n} \bar{v}^i\,\mathbf{b}_i$$

where $\bar{v}^i$ are the components of the vector in the new basis. Therefore, the vector sum that describes vector $\mathbf{v}$ in the new basis is composed of different vectors, although the sum itself remains the same.

A coordinate basis whose basis vectors change their direction and/or magnitude from point to point is called a local basis. All bases associated with curvilinear coordinates are necessarily local. Global bases, that is, bases composed of basis vectors that are the same at all points, can be associated only with linear or affine coordinates. Therefore, for a curvilinear coordinate system with coordinates $(q^1, \ldots, q^n)$, the vector $\mathbf{v}$ can be expressed as

$$\mathbf{v} = \sum_{i=1}^{n} v^i(q^1, \ldots, q^n)\,\mathbf{b}_i(q^1, \ldots, q^n)$$

Covariant and contravariant bases

Basis vectors are usually associated with a coordinate system by two methods:

- they can be built along the coordinate axes (collinear with axes) or

- they can be built to be perpendicular (normal) to the coordinate surfaces.

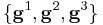

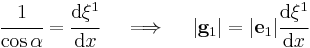

In the first case (axis-collinear), basis vectors transform like covariant vectors, while in the second case (normal to coordinate surfaces), basis vectors transform like contravariant vectors. Those two types of basis vectors are distinguished by the position of their indices: covariant vectors are designated with lower indices, while contravariant vectors are designated with upper indices. Thus, depending on the method by which they are built, for a general curvilinear coordinate system there are two sets of basis vectors for every point: $\{\mathbf{b}_1, \mathbf{b}_2, \mathbf{b}_3\}$ is the covariant basis, and $\{\mathbf{b}^1, \mathbf{b}^2, \mathbf{b}^3\}$ is the contravariant basis.

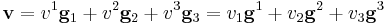

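We can express a vector $\mathbf{v}$ in terms of either basis, i.e.,

$$\mathbf{v} = v^1\mathbf{b}_1 + v^2\mathbf{b}_2 + v^3\mathbf{b}_3 = v_1\mathbf{b}^1 + v_2\mathbf{b}^2 + v_3\mathbf{b}^3$$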

A vector is covariant or contravariant if, respectively, its components are covariant or contravariant. From the above vector sums, it can be seen that contravariant vectors are represented with covariant basis vectors, and covariant vectors are represented with contravariant basis vectors.

A key convention in the representation of vectors and tensors in terms of indexed components and basis vectors is invariance in the sense that vector components which transform in a covariant manner (or contravariant manner) are paired with basis vectors that transform in a contravariant manner (or covariant manner).

Covariant basis

As stated above, contravariant vectors are vectors with contravariant components whose location is determined using covariant basis vectors that are built along the coordinate axes. In analogy to the other coordinate elements, transformation of the covariant basis of general curvilinear coordinates is described starting from the Cartesian coordinate system, whose basis is called the standard basis. The standard basis in three-dimensional space is a global basis that is composed of three mutually orthogonal vectors $\{\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3\}$, each of unit length. Regardless of the method of building the basis (axis-collinear or normal to coordinate surfaces), in the Cartesian system the result is a single set of basis vectors, namely, the standard basis.

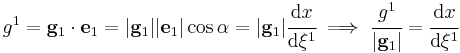

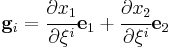

Constructing a covariant basis in one dimension

Consider the one-dimensional curve shown in Fig. 3. At point P, taken as an origin, x is one of the Cartesian coordinates, and $q^1$ is one of the curvilinear coordinates. The local basis vector is $\mathbf{b}_1$, and it is built on the $q^1$ axis, which is tangent to the $q^1$ coordinate line at the point P. The axis $q^1$, and thus the vector $\mathbf{b}_1$, form an angle α with the Cartesian x axis and the Cartesian basis vector $\mathbf{e}_1$.

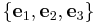

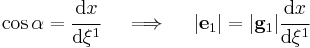

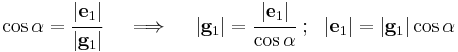

It can be seen from triangle PAB that

$$\cos\alpha = \frac{|\mathbf{e}_1|}{|\mathbf{b}_1|} \quad\Rightarrow\quad |\mathbf{e}_1| = |\mathbf{b}_1|\cos\alpha$$

where $|\mathbf{b}_1|$ and $|\mathbf{e}_1|$ are the magnitudes of the two basis vectors, i.e., the scalar intercepts PB and PA. Note that PA is also the projection of $\mathbf{b}_1$ on the x axis.

However, this method for basis vector transformations using directional cosines is inapplicable to curvilinear coordinates for the following reason: with increasing distance from P, the angle between the curved line $q^1$ and the Cartesian axis x deviates increasingly from α. At the distance PB the true angle is that which the tangent at point C forms with the x axis, and this angle is clearly different from α. The angles that the $q^1$ line and the $q^1$ axis form with the x axis become closer in value the closer one moves towards point P, and they become exactly equal at P. Let point E be located very close to P, so close that the distance PE is infinitesimally small. Then PE measured on the $q^1$ axis almost coincides with PE measured on the $q^1$ line. At the same time, the ratio $\frac{PD}{PE}$ (PD being the projection of PE on the x axis) becomes almost exactly equal to cos α.

Let the infinitesimally small intercepts PD and PE be labelled, respectively, as $dx$ and $dq^1$. Then

$$\cos\alpha = \frac{dx}{dq^1} \qquad\text{and}\qquad \frac{1}{\cos\alpha} = \frac{dq^1}{dx}.$$

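Thus, the directional cosines can be substituted in transformations with the more exact ratios between infinitesimally small coordinate intercepts. From the foregoing discussion, it follows that the component (projection) of $\mathbf{b}_1$ on the x axis is

$$p^1 = \mathbf{b}_1\cdot\frac{\mathbf{e}_1}{|\mathbf{e}_1|} = |\mathbf{b}_1|\,\frac{|\mathbf{e}_1|}{|\mathbf{e}_1|}\cos\alpha = |\mathbf{b}_1|\,\frac{dx}{dq^1} \quad\Rightarrow\quad \frac{p^1}{|\mathbf{b}_1|} = \frac{dx}{dq^1}.$$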

If $q^i = q^i(x_1, x_2, x_3)$ and $x_i = x_i(q^1, q^2, q^3)$ are smooth (continuously differentiable) functions, the transformation ratios can be written as

$$\frac{\partial q^i}{\partial x_j} \qquad\text{and}\qquad \frac{\partial x_i}{\partial q^j}$$

That is, those ratios are partial derivatives of coordinates belonging to one system with respect to coordinates belonging to the other system.

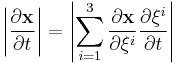

Constructing a covariant basis in three dimensions

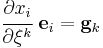

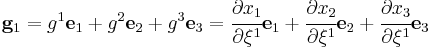

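Doing the same for the coordinates in the other two dimensions, $\mathbf{b}_1$ can be expressed as:

$$\mathbf{b}_1 = p^1\mathbf{e}_1 + p^2\mathbf{e}_2 + p^3\mathbf{e}_3 = \frac{\partial x_1}{\partial q^1}\,\mathbf{e}_1 + \frac{\partial x_2}{\partial q^1}\,\mathbf{e}_2 + \frac{\partial x_3}{\partial q^1}\,\mathbf{e}_3$$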

Similar equations hold for $\mathbf{b}_2$ and $\mathbf{b}_3$, so that the standard basis $\{\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3\}$ is transformed to a local (ordered and normalised) basis $\{\mathbf{b}_1, \mathbf{b}_2, \mathbf{b}_3\}$ by the following system of equations:

$$\begin{aligned}
\mathbf{b}_1 &= \frac{\partial x_1}{\partial q^1}\,\mathbf{e}_1 + \frac{\partial x_2}{\partial q^1}\,\mathbf{e}_2 + \frac{\partial x_3}{\partial q^1}\,\mathbf{e}_3 \\
\mathbf{b}_2 &= \frac{\partial x_1}{\partial q^2}\,\mathbf{e}_1 + \frac{\partial x_2}{\partial q^2}\,\mathbf{e}_2 + \frac{\partial x_3}{\partial q^2}\,\mathbf{e}_3 \\
\mathbf{b}_3 &= \frac{\partial x_1}{\partial q^3}\,\mathbf{e}_1 + \frac{\partial x_2}{\partial q^3}\,\mathbf{e}_2 + \frac{\partial x_3}{\partial q^3}\,\mathbf{e}_3
\end{aligned}$$

In this construction the vectors $\mathbf{b}_i$ are taken to be unit vectors (magnitude = 1) directed along the three axes of the curvilinear coordinate system. However, basis vectors in a general curvilinear system are not required to be of unit length: they can be of arbitrary magnitude and direction.

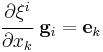

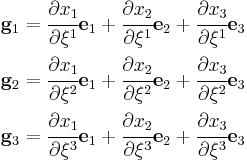

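By analogous reasoning, one can obtain the inverse transformation from local basis to standard basis:

$$\begin{aligned}
\mathbf{e}_1 &= \frac{\partial q^1}{\partial x_1}\,\mathbf{b}_1 + \frac{\partial q^2}{\partial x_1}\,\mathbf{b}_2 + \frac{\partial q^3}{\partial x_1}\,\mathbf{b}_3 \\
\mathbf{e}_2 &= \frac{\partial q^1}{\partial x_2}\,\mathbf{b}_1 + \frac{\partial q^2}{\partial x_2}\,\mathbf{b}_2 + \frac{\partial q^3}{\partial x_2}\,\mathbf{b}_3 \\
\mathbf{e}_3 &= \frac{\partial q^1}{\partial x_3}\,\mathbf{b}_1 + \frac{\partial q^2}{\partial x_3}\,\mathbf{b}_2 + \frac{\partial q^3}{\partial x_3}\,\mathbf{b}_3
\end{aligned}$$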

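The above systems of linear equations can be written in compact (index) form, summing over the repeated index $i$, as

$$\frac{\partial x_i}{\partial q^k}\,\mathbf{e}_i = \mathbf{b}_k \qquad\text{and}\qquad \frac{\partial q^i}{\partial x_k}\,\mathbf{b}_i = \mathbf{e}_k.$$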

These are the equations that can be used to transform a Cartesian basis into a curvilinear basis, and vice versa.
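The two transformations can be checked against each other numerically. This sketch assumes spherical coordinates $(q^1, q^2, q^3) = (r, \theta, \varphi)$, a point away from the z axis, central differences for the partial derivatives, and illustrative helper names. It builds $\mathbf{b}_k = \frac{\partial x_i}{\partial q^k}\mathbf{e}_i$ and then applies $\mathbf{e}_k = \frac{\partial q^i}{\partial x_k}\mathbf{b}_i$, which should return the standard basis.

```python
import math

def to_cartesian(r, theta, phi):
    return (r * math.sin(theta) * math.cos(phi),
            r * math.sin(theta) * math.sin(phi),
            r * math.cos(theta))

def to_spherical(x, y, z):
    r = math.sqrt(x * x + y * y + z * z)
    return (r, math.acos(z / r), math.atan2(y, x))  # valid away from the z axis

def partials(f, args, h=1e-6):
    """D[i][k] ~ d f_i / d args_k by central differences (step h is an assumption)."""
    D = [[0.0] * 3 for _ in range(3)]
    for k in range(3):
        ap, am = list(args), list(args)
        ap[k] += h
        am[k] -= h
        fp, fm = f(*ap), f(*am)
        for i in range(3):
            D[i][k] = (fp[i] - fm[i]) / (2 * h)
    return D

q = (2.0, 0.8, 1.3)
x = to_cartesian(*q)
dx_dq = partials(to_cartesian, q)  # dx_dq[i][k] = dx_i / dq^k
dq_dx = partials(to_spherical, x)  # dq_dx[i][k] = dq^i / dx_k

# Local basis b_k = (dx_i/dq^k) e_i: the Cartesian components of b_k form column k of dx_dq
b = [[dx_dq[i][k] for i in range(3)] for k in range(3)]

# Inverse transformation e_k = (dq^i/dx_k) b_i should give back the standard basis
e = [[sum(dq_dx[i][k] * b[i][m] for i in range(3)) for m in range(3)] for k in range(3)]
deviation = max(abs(e[k][m] - (1.0 if k == m else 0.0)) for k in range(3) for m in range(3))
print(deviation)  # small, limited by the finite-difference error
```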

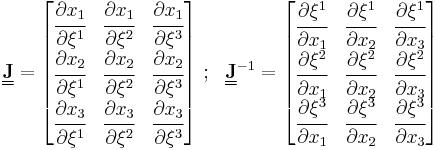

The Jacobian of the transformation

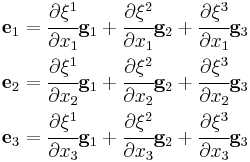

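The Jacobian matrices of the transformation are the matrices $\mathbf{J} = \left[\frac{\partial x_i}{\partial q^j}\right]$ and $\mathbf{J}^{-1} = \left[\frac{\partial q^i}{\partial x_j}\right]$. In three dimensions, the expanded forms of these matrices are

$$\mathbf{J} = \begin{bmatrix} \dfrac{\partial x_1}{\partial q^1} & \dfrac{\partial x_1}{\partial q^2} & \dfrac{\partial x_1}{\partial q^3} \\ \dfrac{\partial x_2}{\partial q^1} & \dfrac{\partial x_2}{\partial q^2} & \dfrac{\partial x_2}{\partial q^3} \\ \dfrac{\partial x_3}{\partial q^1} & \dfrac{\partial x_3}{\partial q^2} & \dfrac{\partial x_3}{\partial q^3} \end{bmatrix}, \qquad \mathbf{J}^{-1} = \begin{bmatrix} \dfrac{\partial q^1}{\partial x_1} & \dfrac{\partial q^1}{\partial x_2} & \dfrac{\partial q^1}{\partial x_3} \\ \dfrac{\partial q^2}{\partial x_1} & \dfrac{\partial q^2}{\partial x_2} & \dfrac{\partial q^2}{\partial x_3} \\ \dfrac{\partial q^3}{\partial x_1} & \dfrac{\partial q^3}{\partial x_2} & \dfrac{\partial q^3}{\partial x_3} \end{bmatrix}$$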

In the second equation system (the inverse transformation), the unknowns are the curvilinear basis vectors, which are subject to the condition that at each point of the curvilinear coordinate system there must exist one and only one set of basis vectors. This condition is satisfied if and only if the equation system has a unique solution. From linear algebra, a linear equation system has a unique solution if and only if the determinant of its system matrix is non-zero. For the second equation system, the determinant of the system matrix is

$$\det\left[\frac{\partial q^i}{\partial x_k}\right] = J^{-1} \neq 0$$

which shows the rationale behind the above requirement concerning the inverse Jacobian determinant.

Another very important feature of the above transformations is the nature of the derivatives: the coefficients of the Cartesian basis vectors are derivatives of Cartesian coordinates, while the coefficients of the curvilinear basis vectors are derivatives of curvilinear coordinates. In general, the following definition holds:

A covariant vector is an object that in the system of coordinates $x$ is defined by $n$ ordered numbers or functions (components) $a_i(x_1, x_2, x_3)$ and in the system $\bar{x}$ is defined by $n$ ordered components $\bar{a}_i(\bar{x}_1, \bar{x}_2, \bar{x}_3)$, which are connected with $a_i(x_1, x_2, x_3)$ at each point of space by the transformation:

$$\bar{a}_i = \frac{\partial x_j}{\partial \bar{x}_i}\,a_j$$

Mnemonic: Coordinates co-vary with the vector.

This definition is so general that it applies to covariance in the very abstract sense, and includes not only basis vectors, but also all vectors, components, tensors, pseudovectors, and pseudotensors (in the last two there is an additional sign flip). It also serves to define tensors in one of their most usual treatments.

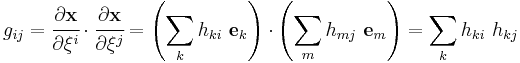

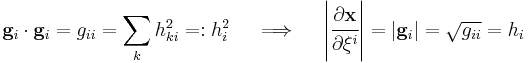

Lamé coefficients

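The partial derivative coefficients $\frac{\partial x_k}{\partial q^i}$ through which vector transformation is achieved enter the scale factors, or Lamé coefficients (named after Gabriel Lamé), which are the lengths of the coordinate basis vectors $\partial\mathbf{r}/\partial q^i$:

$$h_i = \left|\frac{\partial\mathbf{r}}{\partial q^i}\right| = \sqrt{\left(\frac{\partial x_1}{\partial q^i}\right)^2 + \left(\frac{\partial x_2}{\partial q^i}\right)^2 + \left(\frac{\partial x_3}{\partial q^i}\right)^2}$$

In general curvilinear coordinates this designation has been largely replaced by the components $g_{ik}$ of the metric tensor, in terms of which $h_i = \sqrt{g_{ii}}$ (no summation).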
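For spherical coordinates the scale factors are $h_r = 1$, $h_\theta = r$ and $h_\varphi = r\sin\theta$. A short numerical sketch recovers them from $h_i = |\partial\mathbf{r}/\partial q^i|$; the finite-difference step and the function names are assumptions of this example.

```python
import math

def to_cartesian(r, theta, phi):
    return (r * math.sin(theta) * math.cos(phi),
            r * math.sin(theta) * math.sin(phi),
            r * math.cos(theta))

def lame_coefficients(f, q, h=1e-6):
    """h_i = sqrt(sum_k (dx_k/dq^i)^2), with the derivatives taken by central differences."""
    coeffs = []
    for i in range(len(q)):
        qp, qm = list(q), list(q)
        qp[i] += h
        qm[i] -= h
        fp, fm = f(*qp), f(*qm)
        coeffs.append(math.sqrt(sum(((a - c) / (2 * h)) ** 2 for a, c in zip(fp, fm))))
    return coeffs

r, theta, phi = 2.0, 0.8, 1.3
print(lame_coefficients(to_cartesian, (r, theta, phi)))
print([1.0, r, r * math.sin(theta)])  # analytic values for comparison
```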

Vector and tensor algebra in three-dimensional curvilinear coordinates

- Note: the Einstein summation convention of summing on repeated indices is used below.

Elementary vector and tensor algebra in curvilinear coordinates is used in some of the older scientific literature in mechanics and physics and can be indispensable to understanding work from the early and mid-1900s, for example the text by Green and Zerna.[3] Some useful relations in the algebra of vectors and second-order tensors in curvilinear coordinates are given in this section. The notation and contents are primarily from Ogden,[4] Naghdi,[5] Simmonds,[6] Green and Zerna,[3] Basar and Weichert,[7] and Ciarlet.[8]

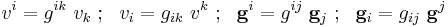

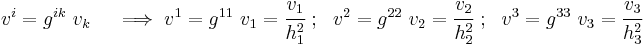

Vectors in curvilinear coordinates

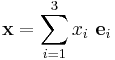

Let $\mathbf{b}_1, \mathbf{b}_2, \mathbf{b}_3$ be an arbitrary basis for three-dimensional Euclidean space. In general, the basis vectors are neither unit vectors nor mutually orthogonal. However, they are required to be linearly independent. Then a vector $\mathbf{v}$ can be expressed as[6](p27)

$$\mathbf{v} = v^k\,\mathbf{b}_k$$

The components $v^k$ are the contravariant components of the vector $\mathbf{v}$.

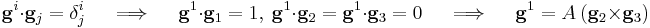

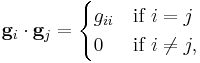

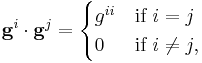

The reciprocal basis $\mathbf{b}^1, \mathbf{b}^2, \mathbf{b}^3$ is defined by the relation [6](pp28–29)

$$\mathbf{b}^i\cdot\mathbf{b}_j = \delta^i_j$$

where $\delta^i_j$ is the Kronecker delta.
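In three dimensions the reciprocal basis has the closed form $\mathbf{b}^1 = (\mathbf{b}_2\times\mathbf{b}_3)/V$, $\mathbf{b}^2 = (\mathbf{b}_3\times\mathbf{b}_1)/V$, $\mathbf{b}^3 = (\mathbf{b}_1\times\mathbf{b}_2)/V$ with $V = \mathbf{b}_1\cdot(\mathbf{b}_2\times\mathbf{b}_3)$. The sketch below builds it in plain Python and checks $\mathbf{b}^i\cdot\mathbf{b}_j = \delta^i_j$; the sample basis is an arbitrary non-orthogonal choice for illustration.

```python
def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

def reciprocal_basis(b1, b2, b3):
    """Return (b^1, b^2, b^3) satisfying b^i . b_j = delta^i_j."""
    V = dot(b1, cross(b2, b3))  # scalar triple product; zero for a degenerate basis
    if V == 0:
        raise ValueError("basis vectors are linearly dependent")
    return [tuple(c / V for c in cross(u, w)) for u, w in ((b2, b3), (b3, b1), (b1, b2))]

basis = [(1.0, 0.0, 0.0), (1.0, 2.0, 0.0), (0.0, 1.0, 3.0)]  # sample basis: neither unit nor orthogonal
recip = reciprocal_basis(*basis)
err = max(abs(dot(recip[i], basis[j]) - (1.0 if i == j else 0.0))
          for i in range(3) for j in range(3))
print(recip)
print(err)  # deviation from the Kronecker delta, at the level of rounding error
```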

The vector $\mathbf{v}$ can also be expressed in terms of the reciprocal basis:

$$\mathbf{v} = v_k\,\mathbf{b}^k$$

The components $v_k$ are the covariant components of the vector $\mathbf{v}$.

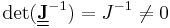

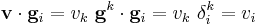

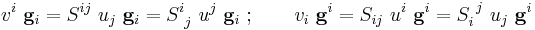

Relations between components and basis vectors

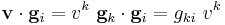

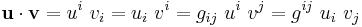

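From these definitions we can see that[6](pp30–32)

$$\mathbf{v}\cdot\mathbf{b}^i = v^k\,\mathbf{b}_k\cdot\mathbf{b}^i = v^k\,\delta^i_k = v^i, \qquad \mathbf{v}\cdot\mathbf{b}_i = v_k\,\mathbf{b}^k\cdot\mathbf{b}_i = v_k\,\delta^k_i = v_i$$

Also,

$$\mathbf{v}\cdot\mathbf{b}_i = v^k\,\mathbf{b}_k\cdot\mathbf{b}_i, \qquad \mathbf{v}\cdot\mathbf{b}^i = v_k\,\mathbf{b}^k\cdot\mathbf{b}^i$$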

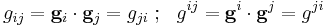

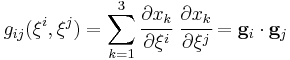

Metric tensor

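The quantities $g_{ij}$ and $g^{ij}$ are defined as[6](p39)

$$g_{ij} = \mathbf{b}_i\cdot\mathbf{b}_j = g_{ji}, \qquad g^{ij} = \mathbf{b}^i\cdot\mathbf{b}^j = g^{ji}$$

From the above equations we have

$$v^i = g^{ik}\,v_k, \qquad v_i = g_{ik}\,v^k, \qquad \mathbf{b}^i = g^{ij}\,\mathbf{b}_j, \qquad \mathbf{b}_i = g_{ij}\,\mathbf{b}^j$$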
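A numerical sketch of these relations builds $g_{ij} = \mathbf{b}_i\cdot\mathbf{b}_j$ and $g^{ij} = \mathbf{b}^i\cdot\mathbf{b}^j$ from a basis and its reciprocal, then checks that lowering and raising an index with them reproduces the components obtained by projection, $v_i = \mathbf{v}\cdot\mathbf{b}_i$. The non-orthogonal sample basis and the components `v_up` are arbitrary values chosen for illustration.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

b_lo = [(1.0, 0.0, 0.0), (1.0, 2.0, 0.0), (0.0, 1.0, 3.0)]  # covariant basis b_i (sample values)
V = dot(b_lo[0], cross(b_lo[1], b_lo[2]))
b_up = [tuple(c / V for c in cross(b_lo[j], b_lo[k]))          # reciprocal basis b^i
        for j, k in ((1, 2), (2, 0), (0, 1))]

g_lo = [[dot(bi, bj) for bj in b_lo] for bi in b_lo]  # g_ij = b_i . b_j
g_up = [[dot(bi, bj) for bj in b_up] for bi in b_up]  # g^ij = b^i . b^j

v_up = [2.0, -1.0, 0.5]  # contravariant components v^i (sample values)
v = tuple(sum(v_up[k] * b_lo[k][m] for k in range(3)) for m in range(3))  # v = v^k b_k

v_lo_projected = [dot(v, bi) for bi in b_lo]                                        # v_i = v . b_i
v_lo_lowered = [sum(g_lo[i][k] * v_up[k] for k in range(3)) for i in range(3)]       # v_i = g_ik v^k
v_up_raised = [sum(g_up[i][k] * v_lo_lowered[k] for k in range(3)) for i in range(3)]  # v^i = g^ik v_k

print(v_lo_projected)
print(v_lo_lowered)  # agrees with the line above up to rounding
print(v_up_raised)   # recovers v_up up to rounding
```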

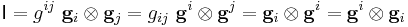

Identity map

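The identity map $\mathsf{I}$ defined by $\mathsf{I}\cdot\mathbf{v} = \mathbf{v}$ can be shown to be[6](p39)

$$\mathsf{I} = g^{ij}\,\mathbf{b}_i\otimes\mathbf{b}_j = g_{ij}\,\mathbf{b}^i\otimes\mathbf{b}^j = \mathbf{b}_i\otimes\mathbf{b}^i = \mathbf{b}^i\otimes\mathbf{b}_i$$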

Scalar (Dot) product

The scalar product of two vectors in curvilinear coordinates is[6](p32)

$$\mathbf{u}\cdot\mathbf{v} = u^i v_i = u_i v^i = g_{ij}\,u^i v^j = g^{ij}\,u_i v_j$$

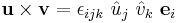

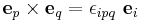

Vector (Cross) product

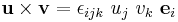

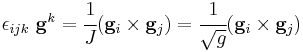

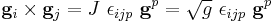

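The cross product of two vectors is given by[6](pp32–34)

$$\mathbf{u}\times\mathbf{v} = \varepsilon_{ijk}\,\hat{u}_j\,\hat{v}_k\,\mathbf{e}_i$$

where $\varepsilon_{ijk}$ is the permutation symbol, $\mathbf{e}_i$ is a Cartesian basis vector, and $\hat{u}_j$, $\hat{v}_k$ are the Cartesian components of $\mathbf{u}$ and $\mathbf{v}$. In curvilinear coordinates, the equivalent expression is

$$\mathbf{u}\times\mathbf{v} = [(\mathbf{b}_m\times\mathbf{b}_n)\cdot\mathbf{b}_s]\,u^m v^n\,\mathbf{b}^s = \mathcal{E}_{smn}\,u^m v^n\,\mathbf{b}^s$$

where $\mathcal{E}_{ijk}$ is the third-order alternating tensor.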
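The curvilinear formula $\mathbf{u}\times\mathbf{v} = \mathcal{E}_{smn}\,u^m v^n\,\mathbf{b}^s$ with $\mathcal{E}_{smn} = (\mathbf{b}_m\times\mathbf{b}_n)\cdot\mathbf{b}_s$ can be compared with the ordinary Cartesian cross product in a short sketch. The basis, the components and the helper `assemble` are arbitrary choices for illustration.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def assemble(comp, basis):
    """Cartesian components of the sum comp[k] * basis[k]."""
    return tuple(sum(comp[k] * basis[k][m] for k in range(3)) for m in range(3))

b_lo = [(1.0, 0.0, 0.0), (1.0, 2.0, 0.0), (0.0, 1.0, 3.0)]  # sample covariant basis b_i
V = dot(b_lo[0], cross(b_lo[1], b_lo[2]))
b_up = [tuple(c / V for c in cross(b_lo[j], b_lo[k])) for j, k in ((1, 2), (2, 0), (0, 1))]

# Alternating tensor components E[s][m][n] = (b_m x b_n) . b_s
E = [[[dot(cross(b_lo[m], b_lo[n]), b_lo[s]) for n in range(3)] for m in range(3)] for s in range(3)]

u_up = [1.0, 2.0, -1.0]  # contravariant components u^m (sample values)
v_up = [0.5, -1.0, 2.0]  # contravariant components v^n (sample values)

# Covariant components (u x v)_s = E_smn u^m v^n, then u x v = (u x v)_s b^s
w_lo = [sum(E[s][m][n] * u_up[m] * v_up[n] for m in range(3) for n in range(3)) for s in range(3)]
w_curvilinear = assemble(w_lo, b_up)
w_cartesian = cross(assemble(u_up, b_lo), assemble(v_up, b_lo))
print(w_curvilinear)
print(w_cartesian)  # agrees with the line above up to rounding
```

Note that `E[0][1][2]` equals the triple product $\mathbf{b}_1\cdot(\mathbf{b}_2\times\mathbf{b}_3)$, and swapping two indices flips its sign.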

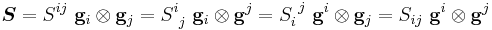

Second-order tensors in curvilinear coordinates

A second-order tensor can be expressed as

$$\boldsymbol{S} = S^{ij}\,\mathbf{b}_i\otimes\mathbf{b}_j = S^{i}{}_{j}\,\mathbf{b}_i\otimes\mathbf{b}^j = S_{i}{}^{j}\,\mathbf{b}^i\otimes\mathbf{b}_j = S_{ij}\,\mathbf{b}^i\otimes\mathbf{b}^j$$

The components $S^{ij}$ are called the contravariant components, $S^{i}{}_{j}$ the mixed right-covariant components, $S_{i}{}^{j}$ the mixed left-covariant components, and $S_{ij}$ the covariant components of the second-order tensor.

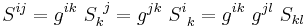

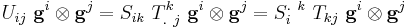

Relations between components

The components of the second-order tensor are related by

$$S^{ij} = g^{ik}\,S_{k}{}^{j} = g^{jk}\,S^{i}{}_{k} = g^{ik}\,g^{jl}\,S_{kl}$$

Action of a second-order tensor on a vector

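The action $\mathbf{v} = \boldsymbol{S}\cdot\mathbf{u}$ can be expressed in curvilinear coordinates as

$$v^i\,\mathbf{b}_i = S^{ij}\,u_j\,\mathbf{b}_i = S^{i}{}_{j}\,u^j\,\mathbf{b}_i, \qquad v_i\,\mathbf{b}^i = S_{ij}\,u^j\,\mathbf{b}^i = S_{i}{}^{j}\,u_j\,\mathbf{b}^i$$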

Inner product of two second-order tensors

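The inner product of two second-order tensors $\boldsymbol{U} = \boldsymbol{S}\cdot\boldsymbol{T}$ can be expressed in curvilinear coordinates as

$$U_{ij}\,\mathbf{b}^i\otimes\mathbf{b}^j = S_{ik}\,T^{k}{}_{j}\,\mathbf{b}^i\otimes\mathbf{b}^j = S_{i}{}^{k}\,T_{kj}\,\mathbf{b}^i\otimes\mathbf{b}^j$$

Alternatively,

$$\boldsymbol{U} = S^{ij}\,T^{m}{}_{n}\,g_{jm}\,\mathbf{b}_i\otimes\mathbf{b}^n = S^{i}{}_{m}\,T^{m}{}_{n}\,\mathbf{b}_i\otimes\mathbf{b}^n = S^{ij}\,T_{jn}\,\mathbf{b}_i\otimes\mathbf{b}^n$$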

Determinant of a second-order tensor

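If $\boldsymbol{S}$ is a second-order tensor, then the determinant is defined by the relation

$$[\boldsymbol{S}\cdot\mathbf{u}, \boldsymbol{S}\cdot\mathbf{v}, \boldsymbol{S}\cdot\mathbf{w}] = \det\boldsymbol{S}\,[\mathbf{u}, \mathbf{v}, \mathbf{w}]$$

where $\mathbf{u}, \mathbf{v}, \mathbf{w}$ are arbitrary vectors and

$$[\mathbf{u}, \mathbf{v}, \mathbf{w}] := \mathbf{u}\cdot(\mathbf{v}\times\mathbf{w})$$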
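The defining relation $[\boldsymbol{S}\cdot\mathbf{u}, \boldsymbol{S}\cdot\mathbf{v}, \boldsymbol{S}\cdot\mathbf{w}] = \det\boldsymbol{S}\,[\mathbf{u}, \mathbf{v}, \mathbf{w}]$ can be used directly to compute $\det\boldsymbol{S}$: apply $\boldsymbol{S}$ to three non-coplanar vectors and divide the triple products. The sketch below represents $\boldsymbol{S}$ by its Cartesian component matrix (an assumption of this example) and compares the result with cofactor expansion; the matrix and vectors are arbitrary sample values.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def apply(S, u):
    """Cartesian components of S . u for a 3x3 component matrix S."""
    return tuple(sum(S[i][j] * u[j] for j in range(3)) for i in range(3))

def triple(u, v, w):
    """Scalar triple product [u, v, w] = u . (v x w)."""
    return dot(u, cross(v, w))

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

S = [[2.0, 1.0, 0.0], [0.5, 3.0, -1.0], [1.0, 0.0, 4.0]]      # sample tensor components
u, v, w = (1.0, 0.0, 2.0), (0.0, 1.0, 1.0), (3.0, -1.0, 0.0)  # sample non-coplanar vectors
det_from_definition = triple(apply(S, u), apply(S, v), apply(S, w)) / triple(u, v, w)
print(det_from_definition, det3(S))  # 21.0 21.0
```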

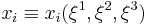

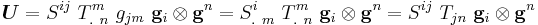

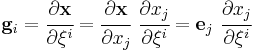

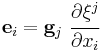

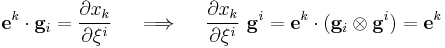

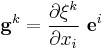

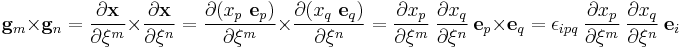

Relations between curvilinear and Cartesian basis vectors

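Let $(\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3)$ be the usual Cartesian basis vectors for the Euclidean space of interest and let

$$\mathbf{b}_i = \boldsymbol{F}\cdot\mathbf{e}_i$$

where $\boldsymbol{F}$ is a second-order transformation tensor that maps $\mathbf{e}_i$ to $\mathbf{b}_i$. Then,

$$\mathbf{b}_i\otimes\mathbf{e}_i = (\boldsymbol{F}\cdot\mathbf{e}_i)\otimes\mathbf{e}_i = \boldsymbol{F}\cdot(\mathbf{e}_i\otimes\mathbf{e}_i) = \boldsymbol{F}$$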

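From this relation we can show that

$$\mathbf{b}^i = \boldsymbol{F}^{-\mathrm{T}}\cdot\mathbf{e}^i, \qquad g^{ij} = [\boldsymbol{F}^{-1}\cdot\boldsymbol{F}^{-\mathrm{T}}]_{ij}, \qquad g_{ij} = [g^{ij}]^{-1} = [\boldsymbol{F}^{\mathrm{T}}\cdot\boldsymbol{F}]_{ij}$$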

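Let $J := \det\boldsymbol{F}$ be the Jacobian of the transformation. Then, from the definition of the determinant,

$$[\mathbf{b}_1, \mathbf{b}_2, \mathbf{b}_3] = \det\boldsymbol{F}\,[\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3]$$

Since

$$[\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3] = 1$$

we have

$$J = \det\boldsymbol{F} = [\mathbf{b}_1, \mathbf{b}_2, \mathbf{b}_3] = \mathbf{b}_1\cdot(\mathbf{b}_2\times\mathbf{b}_3)$$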

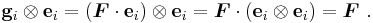

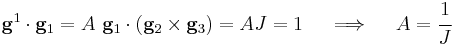

A number of interesting results can be derived using the above relations.

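First, consider

$$g := \det[g_{ij}]$$

Then

$$g = \det[\boldsymbol{F}^{\mathrm{T}}]\cdot\det[\boldsymbol{F}] = J\cdot J = J^2$$

Similarly, we can show that

$$\det[g^{ij}] = \frac{1}{J^2}$$

Therefore, using the fact that $[g^{ij}] = [g_{ij}]^{-1}$,

$$\frac{\partial g}{\partial g_{ij}} = 2J\,\frac{\partial J}{\partial g_{ij}} = g\,g^{ij}$$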
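Both results can be spot-checked numerically. In the sketch below, $g = \det[g_{ij}]$ is compared with $J^2$, where $J = \mathbf{b}_1\cdot(\mathbf{b}_2\times\mathbf{b}_3)$, and a central difference in a single entry $g_{ij}$ is compared with $g\,g^{ij}$, treating the nine entries of $[g_{ij}]$ as independent variables (an assumption of this check). The sample basis is arbitrary.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

b_lo = [(1.0, 0.0, 0.0), (1.0, 2.0, 0.0), (0.0, 1.0, 3.0)]  # sample covariant basis
J = dot(b_lo[0], cross(b_lo[1], b_lo[2]))                    # J = b_1 . (b_2 x b_3)
b_up = [tuple(c / J for c in cross(b_lo[j], b_lo[k])) for j, k in ((1, 2), (2, 0), (0, 1))]

G = [[dot(a, c) for c in b_lo] for a in b_lo]      # [g_ij]
G_inv = [[dot(a, c) for c in b_up] for a in b_up]  # [g^ij] = [g_ij]^{-1}
g = det3(G)
print(g, J ** 2)  # 36.0 36.0 for this basis

h = 1e-3
err = 0.0
for i in range(3):
    for j in range(3):
        Gp = [row[:] for row in G]
        Gm = [row[:] for row in G]
        Gp[i][j] += h
        Gm[i][j] -= h
        dg_dgij = (det3(Gp) - det3(Gm)) / (2 * h)  # central difference of g in the entry g_ij
        err = max(err, abs(dg_dgij - g * G_inv[i][j]))
print(err)  # small: the determinant is linear in each single entry
```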

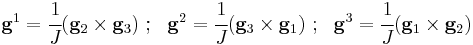

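Another interesting relation is derived below. Recall that

$$\mathbf{b}^i\cdot\mathbf{b}_j = \delta^i_j \quad\Rightarrow\quad \mathbf{b}^1\cdot\mathbf{b}_1 = 1, \quad \mathbf{b}^1\cdot\mathbf{b}_2 = \mathbf{b}^1\cdot\mathbf{b}_3 = 0 \quad\Rightarrow\quad \mathbf{b}^1 = A\,(\mathbf{b}_2\times\mathbf{b}_3)$$

where $A$ is a scalar yet to be determined; $\mathbf{b}^1$ must be parallel to $\mathbf{b}_2\times\mathbf{b}_3$ because it is orthogonal to both $\mathbf{b}_2$ and $\mathbf{b}_3$. Then

$$\mathbf{b}^1\cdot\mathbf{b}_1 = A\,\mathbf{b}_1\cdot(\mathbf{b}_2\times\mathbf{b}_3) = AJ = 1 \quad\Rightarrow\quad A = \frac{1}{J}$$

This observation leads to the relations

$$\mathbf{b}^1 = \frac{1}{J}(\mathbf{b}_2\times\mathbf{b}_3), \qquad \mathbf{b}^2 = \frac{1}{J}(\mathbf{b}_3\times\mathbf{b}_1), \qquad \mathbf{b}^3 = \frac{1}{J}(\mathbf{b}_1\times\mathbf{b}_2)$$

In index notation,

$$\varepsilon_{ijk}\,\mathbf{b}^k = \frac{1}{J}(\mathbf{b}_i\times\mathbf{b}_j) = \frac{1}{\sqrt{g}}(\mathbf{b}_i\times\mathbf{b}_j)$$

where $\varepsilon_{ijk}$ is the usual permutation symbol, and the last equality assumes a right-handed basis ($J > 0$, so that $J = \sqrt{g}$).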

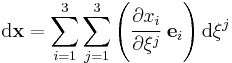

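We have not identified an explicit expression for the transformation tensor $\boldsymbol{F}$ because an alternative form of the mapping between curvilinear and Cartesian bases is more useful. Assuming a sufficient degree of smoothness in the mapping (and a bit of abuse of notation), we have

$$\mathbf{b}_i = \frac{\partial\mathbf{x}}{\partial q^i} = \frac{\partial\mathbf{x}}{\partial x_j}\,\frac{\partial x_j}{\partial q^i} = \mathbf{e}_j\,\frac{\partial x_j}{\partial q^i}$$

Similarly,

$$\mathbf{e}_i = \mathbf{b}_j\,\frac{\partial q^j}{\partial x_i}$$

From these results we have

$$\mathbf{e}^k\cdot\mathbf{b}_i = \frac{\partial x_k}{\partial q^i} \quad\Rightarrow\quad \frac{\partial x_k}{\partial q^i}\,\mathbf{b}^i = \mathbf{e}^k$$

and

$$\mathbf{b}^k = \frac{\partial q^k}{\partial x_i}\,\mathbf{e}^i$$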

Vector products

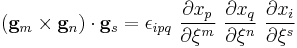

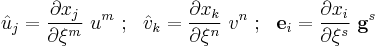

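The cross product of two vectors is given by

$$\mathbf{u}\times\mathbf{v} = \varepsilon_{ijk}\,\hat{u}_j\,\hat{v}_k\,\mathbf{e}_i$$

where $\varepsilon_{ijk}$ is the permutation symbol and $\mathbf{e}_i$ is a Cartesian basis vector. Therefore,

$$\mathbf{e}_p\times\mathbf{e}_q = \varepsilon_{ipq}\,\mathbf{e}_i$$

and

$$\mathbf{b}_m\times\mathbf{b}_n = \frac{\partial\mathbf{x}}{\partial q^m}\times\frac{\partial\mathbf{x}}{\partial q^n} = \frac{\partial(x_p\,\mathbf{e}_p)}{\partial q^m}\times\frac{\partial(x_q\,\mathbf{e}_q)}{\partial q^n} = \frac{\partial x_p}{\partial q^m}\,\frac{\partial x_q}{\partial q^n}\,\mathbf{e}_p\times\mathbf{e}_q = \varepsilon_{ipq}\,\frac{\partial x_p}{\partial q^m}\,\frac{\partial x_q}{\partial q^n}\,\mathbf{e}_i$$

Hence,

$$(\mathbf{b}_m\times\mathbf{b}_n)\cdot\mathbf{b}_s = \varepsilon_{ipq}\,\frac{\partial x_p}{\partial q^m}\,\frac{\partial x_q}{\partial q^n}\,\frac{\partial x_i}{\partial q^s}$$

Returning back to the vector product and using the relations

$$\hat{u}_j = \frac{\partial x_j}{\partial q^m}\,u^m, \qquad \hat{v}_k = \frac{\partial x_k}{\partial q^n}\,v^n, \qquad \mathbf{e}_i = \frac{\partial x_i}{\partial q^s}\,\mathbf{b}^s$$

gives us

$$\mathbf{u}\times\mathbf{v} = \varepsilon_{ijk}\,\hat{u}_j\,\hat{v}_k\,\mathbf{e}_i = \varepsilon_{ijk}\,\frac{\partial x_j}{\partial q^m}\,\frac{\partial x_k}{\partial q^n}\,\frac{\partial x_i}{\partial q^s}\,u^m v^n\,\mathbf{b}^s = [(\mathbf{b}_m\times\mathbf{b}_n)\cdot\mathbf{b}_s]\,u^m v^n\,\mathbf{b}^s = \mathcal{E}_{smn}\,u^m v^n\,\mathbf{b}^s$$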

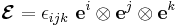

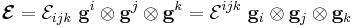

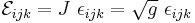

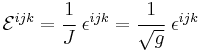

The alternating tensor

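In an orthonormal right-handed basis, the third-order alternating tensor is defined as

$$\boldsymbol{\mathcal{E}} = \varepsilon_{ijk}\,\mathbf{e}^i\otimes\mathbf{e}^j\otimes\mathbf{e}^k$$

In a general curvilinear basis the same tensor may be expressed as

$$\boldsymbol{\mathcal{E}} = \mathcal{E}_{ijk}\,\mathbf{b}^i\otimes\mathbf{b}^j\otimes\mathbf{b}^k = \mathcal{E}^{ijk}\,\mathbf{b}_i\otimes\mathbf{b}_j\otimes\mathbf{b}_k$$

It can be shown that

$$\mathcal{E}_{ijk} = [\mathbf{b}_i, \mathbf{b}_j, \mathbf{b}_k] = (\mathbf{b}_i\times\mathbf{b}_j)\cdot\mathbf{b}_k$$

Now,

$$\mathbf{b}_i\times\mathbf{b}_j = J\,\varepsilon_{ijp}\,\mathbf{b}^p = \sqrt{g}\,\varepsilon_{ijp}\,\mathbf{b}^p$$

Hence,

$$\mathcal{E}_{ijk} = J\,\varepsilon_{ijk} = \sqrt{g}\,\varepsilon_{ijk}$$

Similarly, we can show that

$$\mathcal{E}^{ijk} = \frac{1}{J}\,\varepsilon^{ijk} = \frac{1}{\sqrt{g}}\,\varepsilon^{ijk}$$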

Vector and tensor calculus in three-dimensional curvilinear coordinates

- Note: the Einstein summation convention of summing on repeated indices is used below.

Simmonds,[6] in his book on tensor analysis, quotes Albert Einstein saying[9]

The magic of this theory will hardly fail to impose itself on anybody who has truly understood it; it represents a genuine triumph of the method of absolute differential calculus, founded by Gauss, Riemann, Ricci, and Levi-Civita.

Vector and tensor calculus in general curvilinear coordinates is used in tensor analysis on four-dimensional curvilinear manifolds in general relativity,[10] in the mechanics of curved shells,[8] in examining the invariance properties of Maxwell's equations, which have been of interest in metamaterials,[11][12] and in many other fields.

Some useful relations in the calculus of vectors and second-order tensors in curvilinear coordinates are given in this section. The notation and contents are primarily from Ogden,[4] Simmonds,[6] Green and Zerna,[3] Basar and Weichert,[7] and Ciarlet.[8]

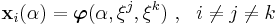

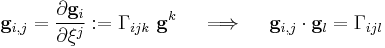

Basic definitions

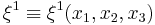

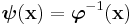

Let the position of a point in space be characterized by three coordinate variables $(q^1, q^2, q^3)$.

The coordinate curve $q^1$ represents a curve on which $q^2, q^3$ are constant. Let $\mathbf{x}$ be the position vector of the point relative to some origin. Then, assuming that such a mapping and its inverse exist and are continuous, we can write [4](p55)

$$\mathbf{x} = \boldsymbol{\varphi}(q^1, q^2, q^3), \qquad q^i = \psi^i(\mathbf{x}) = [\boldsymbol{\varphi}^{-1}(\mathbf{x})]^i$$

The fields $\psi^i(\mathbf{x})$ are called the curvilinear coordinate functions of the curvilinear coordinate system $\boldsymbol{\psi}(\mathbf{x}) = \boldsymbol{\varphi}^{-1}(\mathbf{x})$.

The $q^i$ coordinate curves are defined by the one-parameter family of functions given by

$$\mathbf{x}_i(\alpha) = \boldsymbol{\varphi}(\alpha, q^j, q^k), \qquad i \neq j \neq k$$

with $q^j, q^k$ fixed.

Tangent vector to coordinate curves

The tangent vector to the curve  at the point

at the point  (or to the coordinate curve

(or to the coordinate curve  at the point

at the point  ) is

) is

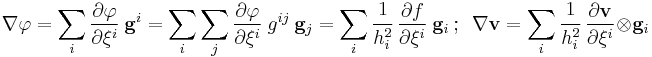

Gradient of a scalar field

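Let $f(\mathbf{x})$ be a scalar field in space. Then

$$f(\mathbf{x}) = f[\boldsymbol{\varphi}(q^1, q^2, q^3)] = f_{\varphi}(q^1, q^2, q^3)$$

The gradient of the field $f$ is defined by

$$[\boldsymbol{\nabla} f(\mathbf{x})]\cdot\mathbf{c} = \frac{d}{d\alpha} f(\mathbf{x} + \alpha\,\mathbf{c})\bigg|_{\alpha = 0}$$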

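where $\mathbf{c}$ is an arbitrary constant vector. If we define the components $c^i$ of vector $\mathbf{c}$ such that, to first order in $\alpha$,

$$q^i + \alpha\,c^i = \psi^i(\mathbf{x} + \alpha\,\mathbf{c})$$

then

$$[\boldsymbol{\nabla} f(\mathbf{x})]\cdot\mathbf{c} = \frac{d}{d\alpha} f_{\varphi}(q^1 + \alpha c^1, q^2 + \alpha c^2, q^3 + \alpha c^3)\bigg|_{\alpha=0} = \frac{\partial f_{\varphi}}{\partial q^i}\,c^i = \frac{\partial f}{\partial q^i}\,c^i$$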

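If we set $f(\mathbf{x}) = \psi^i(\mathbf{x})$, then since $q^i = \psi^i(\mathbf{x})$, we have

$$[\boldsymbol{\nabla}\psi^i(\mathbf{x})]\cdot\mathbf{c} = \frac{\partial\psi^i}{\partial q^j}\,c^j = c^i$$

which provides a means of extracting the contravariant component of a vector $\mathbf{c}$.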

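If $\mathbf{b}_i$ is the covariant (or natural) basis at a point, and if $\mathbf{b}^i$ is the contravariant (or reciprocal) basis at that point, then

$$[\boldsymbol{\nabla} f(\mathbf{x})]\cdot\mathbf{c} = \frac{\partial f}{\partial q^i}\,c^i = \left(\frac{\partial f}{\partial q^i}\,\mathbf{b}^i\right)\cdot\left(c^j\,\mathbf{b}_j\right) \quad\Rightarrow\quad \boldsymbol{\nabla} f(\mathbf{x}) = \frac{\partial f}{\partial q^i}\,\mathbf{b}^i$$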

A brief rationale for this choice of basis is given in the next section.
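As a worked check of $\boldsymbol{\nabla}f = \frac{\partial f}{\partial q^i}\,\mathbf{b}^i$, the sketch below uses polar coordinates in the plane (a two-dimensional simplification chosen for brevity) and the sample field $f(x, y) = x^2 y$; both are assumptions of this example. It takes $\partial f/\partial r$ and $\partial f/\partial\theta$ by central differences, combines them with the polar reciprocal basis $\mathbf{b}^1 = (\cos\theta, \sin\theta)$, $\mathbf{b}^2 = (-\sin\theta, \cos\theta)/r$, and compares the result with the Cartesian gradient $(2xy, x^2)$.

```python
import math

def f_cartesian(x, y):
    """Sample scalar field f = x^2 y."""
    return x * x * y

def f_polar(r, theta):
    """The same field expressed in the curvilinear coordinates (r, theta)."""
    return f_cartesian(r * math.cos(theta), r * math.sin(theta))

def gradient_polar(r, theta, h=1e-6):
    """grad f = (df/dq^i) b^i, with df/dq^i from central differences (step h is an assumption)."""
    df_dr = (f_polar(r + h, theta) - f_polar(r - h, theta)) / (2 * h)
    df_dth = (f_polar(r, theta + h) - f_polar(r, theta - h)) / (2 * h)
    b1_up = (math.cos(theta), math.sin(theta))           # b^1 = grad r
    b2_up = (-math.sin(theta) / r, math.cos(theta) / r)  # b^2 = grad theta
    return tuple(df_dr * a + df_dth * c for a, c in zip(b1_up, b2_up))

r, theta = 1.5, 0.7
x, y = r * math.cos(theta), r * math.sin(theta)
print(gradient_polar(r, theta))
print((2 * x * y, x * x))  # Cartesian gradient for comparison
```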

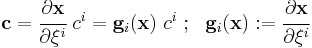

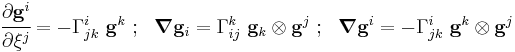

Gradient of a vector field

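A similar process can be used to arrive at the gradient of a vector field $\mathbf{f}(\mathbf{x})$. The gradient is given by

$$[\boldsymbol{\nabla}\mathbf{f}(\mathbf{x})]\cdot\mathbf{c} = \frac{\partial\mathbf{f}}{\partial q^i}\,c^i$$

If we consider the gradient of the position vector field $\mathbf{r}(\mathbf{x}) = \mathbf{x}$, then we can show that

$$[\boldsymbol{\nabla}\mathbf{x}]\cdot\mathbf{c} = \frac{\partial\mathbf{x}}{\partial q^i}\,c^i = \mathbf{b}_i(\mathbf{x})\,c^i, \qquad \mathbf{b}_i := \frac{\partial\mathbf{x}}{\partial q^i}$$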

The vector field $\mathbf{b}_i$ is tangent to the $q^i$ coordinate curve and forms a natural basis at each point on the curve. This basis, as discussed at the beginning of this article, is also called the covariant curvilinear basis. We can also define a reciprocal basis, or contravariant curvilinear basis, $\mathbf{b}^i$. All the algebraic relations between the basis vectors, as discussed in the section on tensor algebra, apply for the natural basis and its reciprocal at each point $\mathbf{x}$.

Since $\mathbf{c}$ is arbitrary, we can write

$$\boldsymbol{\nabla}\mathbf{f}(\mathbf{x}) = \frac{\partial \mathbf{f}}{\partial \xi^i}\otimes\mathbf{g}^i$$

Note that the contravariant basis vector $\mathbf{g}^i$ is perpendicular to the surface of constant $\psi^i$ and is given by

$$\mathbf{g}^i = \boldsymbol{\nabla}\psi^i$$
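The statement $\mathbf{g}^i = \boldsymbol{\nabla}\psi^i$ can be checked numerically. The sketch below again assumes plane polar coordinates. It differentiates the inverse map $\psi(x, y) = (r, \theta)$ in Cartesian coordinates and verifies that the resulting vectors satisfy $\mathbf{g}^i\cdot\mathbf{g}_j = \delta^i_j$. In particular, $\mathbf{g}^1$ comes out orthogonal to $\mathbf{g}_2$, the tangent to the curve $r = \text{const}$. The use of `math.atan2` to pick the branch of $\theta$ is an implementation choice, not something the text prescribes.

```python
import math

def psi(x, y):
    # Inverse map of polar coordinates: (x, y) -> (r, theta); atan2 picks the branch
    return (math.hypot(x, y), math.atan2(y, x))

def grad_psi(i, x, y, h=1e-6):
    # Cartesian gradient of the coordinate function psi^i by central differences
    dx = (psi(x + h, y)[i] - psi(x - h, y)[i]) / (2 * h)
    dy = (psi(x, y + h)[i] - psi(x, y - h)[i]) / (2 * h)
    return (dx, dy)

def covariant_basis(r, theta):
    # g_1 = dx/dr, g_2 = dx/dtheta
    return [(math.cos(theta), math.sin(theta)), (-r * math.sin(theta), r * math.cos(theta))]

r, theta = 2.0, 0.4
x, y = r * math.cos(theta), r * math.sin(theta)
g_lower = covariant_basis(r, theta)
g_upper = [grad_psi(i, x, y) for i in range(2)]  # candidate g^i = grad psi^i

# g^i . g_j should reproduce the Kronecker delta
duality = [[g_upper[i][0] * g_lower[j][0] + g_upper[i][1] * g_lower[j][1]
            for j in range(2)] for i in range(2)]
print(duality)
```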

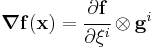

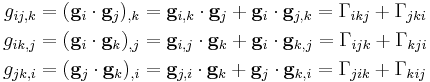

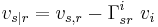

Christoffel symbols of the first kind

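The Christoffel symbols of the first kind are defined as

$$\mathbf{g}_{i,j} = \frac{\partial \mathbf{g}_i}{\partial \xi^j} := \Gamma_{ijk}~\mathbf{g}^k \quad \implies \quad \mathbf{g}_{i,j}\cdot\mathbf{g}_l = \Gamma_{ijl}$$

To express $\Gamma_{ijk}$ in terms of $g_{ij}$, we note that

$$\begin{align} g_{ij,k} & = (\mathbf{g}_i\cdot\mathbf{g}_j)_{,k} = \mathbf{g}_{i,k}\cdot\mathbf{g}_j + \mathbf{g}_i\cdot\mathbf{g}_{j,k} = \Gamma_{ikj} + \Gamma_{jki} \\ g_{ik,j} & = (\mathbf{g}_i\cdot\mathbf{g}_k)_{,j} = \mathbf{g}_{i,j}\cdot\mathbf{g}_k + \mathbf{g}_i\cdot\mathbf{g}_{k,j} = \Gamma_{ijk} + \Gamma_{kji} \\ g_{jk,i} & = (\mathbf{g}_j\cdot\mathbf{g}_k)_{,i} = \mathbf{g}_{j,i}\cdot\mathbf{g}_k + \mathbf{g}_j\cdot\mathbf{g}_{k,i} = \Gamma_{jik} + \Gamma_{kij} \end{align}$$

Since $\mathbf{g}_{i,j} = \mathbf{g}_{j,i}$, we have $\Gamma_{ijk} = \Gamma_{jik}$. Using these to rearrange the above relations gives

$$\Gamma_{ijk} = \frac{1}{2}(g_{ik,j} + g_{jk,i} - g_{ij,k}) = \frac{1}{2}[(\mathbf{g}_i\cdot\mathbf{g}_k)_{,j} + (\mathbf{g}_j\cdot\mathbf{g}_k)_{,i} - (\mathbf{g}_i\cdot\mathbf{g}_j)_{,k}]$$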
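The identity $\Gamma_{ijk} = \mathbf{g}_{i,j}\cdot\mathbf{g}_k = \tfrac{1}{2}(g_{ik,j} + g_{jk,i} - g_{ij,k})$ lends itself to a quick numerical comparison. The following sketch assumes spherical coordinates $(r, \theta, \phi)$ as the test case and uses central differences for all derivatives. Indices are 0-based in the code, and the evaluation point is arbitrary.

```python
import math

def basis(q):
    # Covariant basis g_i = dx/dq^i for spherical coordinates q = (r, theta, phi)
    r, t, p = q
    st, ct, sp, cp = math.sin(t), math.cos(t), math.sin(p), math.cos(p)
    return [
        (st * cp, st * sp, ct),
        (r * ct * cp, r * ct * sp, -r * st),
        (-r * st * sp, r * st * cp, 0.0),
    ]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def metric(q):
    g = basis(q)
    return [[dot(g[i], g[j]) for j in range(3)] for i in range(3)]

def shifted(q, j, h):
    out = list(q)
    out[j] += h
    return out

def dbasis(q, i, j, h=1e-5):
    # g_{i,j} = d g_i / d q^j by central differences
    plus, minus = basis(shifted(q, j, h))[i], basis(shifted(q, j, -h))[i]
    return [(a - b) / (2 * h) for a, b in zip(plus, minus)]

def dmetric(q, i, k, j, h=1e-5):
    # g_{ik,j} = d g_{ik} / d q^j by central differences
    return (metric(shifted(q, j, h))[i][k] - metric(shifted(q, j, -h))[i][k]) / (2 * h)

def gamma_from_basis(q, i, j, k):
    return dot(dbasis(q, i, j), basis(q)[k])

def gamma_from_metric(q, i, j, k):
    return 0.5 * (dmetric(q, i, k, j) + dmetric(q, j, k, i) - dmetric(q, i, j, k))

q = (1.5, 0.8, 0.3)
worst = max(abs(gamma_from_basis(q, i, j, k) - gamma_from_metric(q, i, j, k))
            for i in range(3) for j in range(3) for k in range(3))
print(worst)                          # small, limited by finite-difference error
print(gamma_from_metric(q, 1, 1, 0))  # Gamma_{theta theta r}; should be close to -r = -1.5
```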

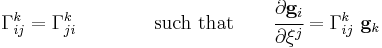

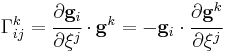

Christoffel symbols of the second kind

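The Christoffel symbols of the second kind are defined as

$$\Gamma_{ij}^k = \Gamma_{ji}^k ~;~~ \frac{\partial \mathbf{g}_i}{\partial \xi^j} = \Gamma_{ij}^k~\mathbf{g}_k$$

This implies that

$$\Gamma_{ij}^k = \frac{\partial \mathbf{g}_i}{\partial \xi^j}\cdot\mathbf{g}^k = -\mathbf{g}_i\cdot\frac{\partial \mathbf{g}^k}{\partial \xi^j}$$

where the second equality follows from differentiating $\mathbf{g}_i\cdot\mathbf{g}^k = \delta_i^k$. Other relations that follow are

$$\frac{\partial \mathbf{g}^i}{\partial \xi^j} = -\Gamma^i_{jk}~\mathbf{g}^k ~;~~ \boldsymbol{\nabla}\mathbf{g}_i = \Gamma^k_{ij}~\mathbf{g}_k\otimes\mathbf{g}^j ~;~~ \boldsymbol{\nabla}\mathbf{g}^i = -\Gamma^i_{jk}~\mathbf{g}^k\otimes\mathbf{g}^j$$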

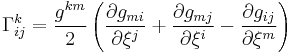

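Another particularly useful relation, which shows that the Christoffel symbol depends only on the metric tensor and its derivatives, is

$$\Gamma^k_{ij} = g^{km}~\Gamma_{ijm} = \frac{g^{km}}{2}\left(\frac{\partial g_{im}}{\partial \xi^j} + \frac{\partial g_{jm}}{\partial \xi^i} - \frac{\partial g_{ij}}{\partial \xi^m}\right)$$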
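The metric formula $\Gamma^k_{ij} = \frac{g^{km}}{2}(g_{im,j} + g_{jm,i} - g_{ij,m})$ can be checked against the familiar cylindrical values $\Gamma^2_{12} = \Gamma^2_{21} = 1/r$ and $\Gamma^1_{22} = -r$. The sketch below assumes an orthogonal (diagonal) metric, so that $g^{km} = \delta^{km}/g_{kk}$. This keeps the code free of a general matrix inverse. Indices are 0-based.

```python
def metric(q):
    # Cylindrical coordinates q = (r, theta, z): g_ij = diag(1, r^2, 1)
    r = q[0]
    return [[1.0, 0.0, 0.0], [0.0, r * r, 0.0], [0.0, 0.0, 1.0]]

def dmetric(q, a, b, c, h=1e-6):
    # d g_ab / d q^c by central differences
    qp, qm = list(q), list(q)
    qp[c] += h
    qm[c] -= h
    return (metric(qp)[a][b] - metric(qm)[a][b]) / (2 * h)

def christoffel_second(q):
    # gam[k][i][j] = Gamma^k_ij = (g^{km}/2)(g_im,j + g_jm,i - g_ij,m)
    g = metric(q)
    n = len(q)
    gam = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                # Orthogonal metric assumed: g^{km} = delta^{km} / g_kk, so only m = k survives
                gam[k][i][j] = 0.5 / g[k][k] * (
                    dmetric(q, i, k, j) + dmetric(q, j, k, i) - dmetric(q, i, j, k))
    return gam

gam = christoffel_second((2.0, 0.3, -1.0))
print(gam[1][0][1], gam[1][1][0], gam[0][1][1])  # compare with 1/r, 1/r, -r at r = 2
```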

Explicit expression for the gradient of a vector field

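The following expressions for the gradient of a vector field in curvilinear coordinates are quite useful.

$$\begin{align} \boldsymbol{\nabla}\mathbf{v} & = \left[\frac{\partial v^i}{\partial \xi^k} + \Gamma^i_{lk}~v^l\right]~\mathbf{g}_i\otimes\mathbf{g}^k \\ & = \left[\frac{\partial v_i}{\partial \xi^k} - \Gamma^l_{ki}~v_l\right]~\mathbf{g}^i\otimes\mathbf{g}^k \end{align}$$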

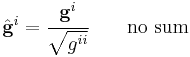

Representing a physical vector field

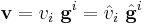

The vector field $\mathbf{v}$ can be represented as

$$\mathbf{v} = v_i~\mathbf{g}^i = \hat{v}_i~\hat{\mathbf{g}}^i$$

where $v_i$ are the covariant components of the field, $\hat{v}_i$ are the physical components, and (no summation)

$$\hat{\mathbf{g}}^i = \frac{\mathbf{g}^i}{\sqrt{g^{ii}}}$$

is the normalized contravariant basis vector.
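Since $\mathbf{g}^i = \sqrt{g^{ii}}~\hat{\mathbf{g}}^i$ (no summation), the physical components follow as $\hat{v}_i = v_i\sqrt{g^{ii}}$. A minimal sketch of that conversion is below. The cylindrical values $g^{ii} = (1, 1/r^2, 1)$ and the sample vector are assumptions chosen for illustration.

```python
import math

def physical_components(cov, g_inv_diag):
    # No summation: v_hat_i = v_i * sqrt(g^{ii}), the components along the
    # normalized contravariant basis vectors g^i / sqrt(g^{ii})
    return [v * math.sqrt(gii) for v, gii in zip(cov, g_inv_diag)]

# Cylindrical coordinates at r = 3: g^{11} = 1, g^{22} = 1/r^2, g^{33} = 1
r = 3.0
g_inv = [1.0, 1.0 / r**2, 1.0]
# Covariant components v_i = v . g_i of v = 2 e_r + 5 e_theta - e_z (g_2 = r e_theta)
cov = [2.0, 5.0 * r, -1.0]
print(physical_components(cov, g_inv))
```

The result recovers the coefficients 2, 5 and -1 along the unit vectors, as it should.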

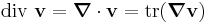

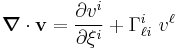

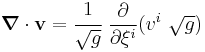

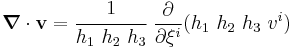

Divergence of a vector field

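The divergence of a vector field $\mathbf{v}$ is defined as

$$\operatorname{div}~\mathbf{v} = \boldsymbol{\nabla}\cdot\mathbf{v} = \text{tr}(\boldsymbol{\nabla}\mathbf{v})$$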

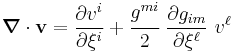

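In terms of components with respect to a curvilinear basis,

$$\boldsymbol{\nabla}\cdot\mathbf{v} = \frac{\partial v^i}{\partial \xi^i} + \Gamma^i_{\ell i}~v^\ell = \left[\frac{\partial v_i}{\partial \xi^j} - \Gamma^\ell_{ji}~v_\ell\right]~g^{ij}$$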

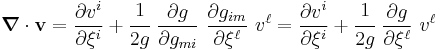

Alternative expression for the divergence of a vector field

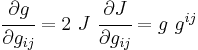

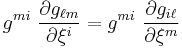

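An alternative equation for the divergence of a vector field is frequently used. To derive this relation, recall that

$$\boldsymbol{\nabla}\cdot\mathbf{v} = \frac{\partial v^i}{\partial \xi^i} + \Gamma^i_{\ell i}~v^\ell$$

Now,

$$\Gamma^i_{\ell i} = \Gamma^i_{i\ell} = \frac{g^{mi}}{2}\left[\frac{\partial g_{im}}{\partial \xi^\ell} + \frac{\partial g_{\ell m}}{\partial \xi^i} - \frac{\partial g_{i\ell}}{\partial \xi^m}\right]$$

Noting that, due to the symmetry of $\boldsymbol{g}$,

$$g^{mi}~\frac{\partial g_{\ell m}}{\partial \xi^i} = g^{mi}~\frac{\partial g_{i\ell}}{\partial \xi^m}$$

we have

$$\boldsymbol{\nabla}\cdot\mathbf{v} = \frac{\partial v^i}{\partial \xi^i} + \frac{1}{2}~g^{mi}~\frac{\partial g_{im}}{\partial \xi^\ell}~v^\ell$$

Recall that if $[g_{ij}]$ is the matrix whose components are $g_{ij}$, then the inverse of the matrix is $[g_{ij}]^{-1} = [g^{ij}]$. The inverse of the matrix is given by

$$[g^{ij}] = [g_{ij}]^{-1} = \frac{A^{ij}}{g} ~;~~ g := \det([g_{ij}]) = \det\boldsymbol{g}$$

where $A^{ij}$ are the cofactors of the components $g_{ij}$. From matrix algebra (cofactor expansion along column $j$) we have

$$g = \det([g_{ij}]) = \sum_i g_{ij}~A^{ij} \quad \implies \quad \frac{\partial g}{\partial g_{ij}} = A^{ij}$$

Hence,

$$[g^{ij}] = \frac{1}{g}~\frac{\partial g}{\partial g_{ij}}$$

Plugging this relation into the expression for the divergence and applying the chain rule gives

$$\boldsymbol{\nabla}\cdot\mathbf{v} = \frac{\partial v^i}{\partial \xi^i} + \frac{1}{2g}~\frac{\partial g}{\partial g_{mi}}~\frac{\partial g_{im}}{\partial \xi^\ell}~v^\ell = \frac{\partial v^i}{\partial \xi^i} + \frac{1}{2g}~\frac{\partial g}{\partial \xi^\ell}~v^\ell$$

A little manipulation, using $\partial\sqrt{g}/\partial\xi^\ell = \frac{1}{2\sqrt{g}}~\partial g/\partial\xi^\ell$, leads to the more compact form

$$\boldsymbol{\nabla}\cdot\mathbf{v} = \frac{1}{\sqrt{g}}~\frac{\partial }{\partial \xi^i}(v^i~\sqrt{g})$$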
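The compact form $\boldsymbol{\nabla}\cdot\mathbf{v} = \frac{1}{\sqrt{g}}\frac{\partial}{\partial \xi^i}(v^i\sqrt{g})$ can be compared against the Cartesian divergence. The sketch below assumes plane polar coordinates, where $\sqrt{g} = r$, and a hypothetical field $\mathbf{v} = (x^2, y)$ whose Cartesian divergence is $2x + 1$. The contravariant components are obtained as $v^i = \mathbf{g}^i\cdot\mathbf{v}$.

```python
import math

def field(x, y):
    # Hypothetical Cartesian field v = (x^2, y); its divergence is 2x + 1
    return (x * x, y)

def contravariant_components(r, t):
    # v^i = g^i . v, with the polar reciprocal basis g^1 = e_r, g^2 = e_theta / r
    vx, vy = field(r * math.cos(t), r * math.sin(t))
    v1 = math.cos(t) * vx + math.sin(t) * vy
    v2 = (-math.sin(t) * vx + math.cos(t) * vy) / r
    return v1, v2

def divergence(r, t, h=1e-6):
    # div v = (1/sqrt g) d(sqrt(g) v^i)/d xi^i with sqrt(g) = r in polar coordinates
    def flux(rr, tt, i):
        return rr * contravariant_components(rr, tt)[i]
    d1 = (flux(r + h, t, 0) - flux(r - h, t, 0)) / (2 * h)
    d2 = (flux(r, t + h, 1) - flux(r, t - h, 1)) / (2 * h)
    return (d1 + d2) / r

r, t = 1.7, 0.9
print(divergence(r, t), 2 * r * math.cos(t) + 1)
```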

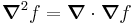

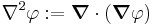

Laplacian of a scalar field

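The Laplacian of a scalar field $\varphi(\mathbf{x})$ is defined as

$$\nabla^2 \varphi := \boldsymbol{\nabla}\cdot(\boldsymbol{\nabla} \varphi)$$

Using the alternative expression for the divergence of a vector field gives us

$$\nabla^2 \varphi = \frac{1}{\sqrt{g}}~\frac{\partial }{\partial \xi^i}([\boldsymbol{\nabla} \varphi]^i~\sqrt{g})$$

Now

$$\boldsymbol{\nabla} \varphi = \frac{\partial \varphi}{\partial \xi^l}~\mathbf{g}^l = g^{li}~\frac{\partial \varphi}{\partial \xi^l}~\mathbf{g}_i \quad \implies \quad [\boldsymbol{\nabla} \varphi]^i = g^{li}~\frac{\partial \varphi}{\partial \xi^l}$$

Therefore,

$$\nabla^2 \varphi = \frac{1}{\sqrt{g}}~\frac{\partial }{\partial \xi^i}\left(g^{li}~\frac{\partial \varphi}{\partial \xi^l}~\sqrt{g}\right)$$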
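For a concrete instance of $\nabla^2\varphi = \frac{1}{\sqrt{g}}\frac{\partial}{\partial \xi^i}\left(g^{li}\frac{\partial \varphi}{\partial \xi^l}\sqrt{g}\right)$, the following sketch assumes spherical coordinates $(r, \theta, \phi)$. For these coordinates the metric is diagonal, with $\sqrt{g} = r^2\sin\theta$ and $g^{ii} = (1, 1/r^2, 1/(r^2\sin^2\theta))$. The test function $\varphi = x^2 + 3z$, whose Laplacian is exactly 2, is an arbitrary choice. Nested central differences stand in for the exact derivatives.

```python
import math

def f_cart(x, y, z):
    # Illustrative test function; its Cartesian Laplacian is exactly 2
    return x * x + 3.0 * z

def f_sph(q):
    r, t, p = q
    return f_cart(r * math.sin(t) * math.cos(p), r * math.sin(t) * math.sin(p), r * math.cos(t))

def sqrt_g(q):
    # sqrt(det g) = r^2 sin(theta) for spherical coordinates
    return q[0] ** 2 * math.sin(q[1])

def g_inv_diag(q):
    # Diagonal entries g^{ii} of the inverse metric (off-diagonal entries vanish)
    r, t, _ = q
    return (1.0, 1.0 / r ** 2, 1.0 / (r * math.sin(t)) ** 2)

def partial(fun, q, i, h):
    qp, qm = list(q), list(q)
    qp[i] += h
    qm[i] -= h
    return (fun(qp) - fun(qm)) / (2 * h)

def laplacian(q, h=1e-4):
    # (1/sqrt g) d/dxi^i (sqrt(g) g^{li} d f/dxi^l); only l = i survives for a diagonal metric
    total = 0.0
    for i in range(3):
        def flux(qq, i=i):
            return sqrt_g(qq) * g_inv_diag(qq)[i] * partial(f_sph, qq, i, h)
        total += partial(flux, q, i, h)
    return total / sqrt_g(q)

print(laplacian((1.2, 0.9, 0.4)))  # should be close to 2
```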

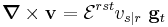

Curl of a vector field

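The curl of a vector field $\mathbf{v}$ in covariant curvilinear coordinates can be written as

$$\boldsymbol{\nabla}\times\mathbf{v} = \mathcal{E}^{rst}~v_{s|r}~\mathbf{g}_t$$

where

$$v_{s|r} = v_{s,r} - \Gamma^i_{sr}~v_i$$

is the covariant derivative of the covariant components $v_s$ and $\mathcal{E}^{rst} = [\mathbf{g}^r,\mathbf{g}^s,\mathbf{g}^t]$. Because $\Gamma^i_{sr}$ is symmetric in $s$ and $r$ while $\mathcal{E}^{rst}$ is antisymmetric in those indices, the Christoffel term contributes nothing. The expression therefore reduces to $\boldsymbol{\nabla}\times\mathbf{v} = \mathcal{E}^{rst}~v_{s,r}~\mathbf{g}_t$.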

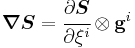

Gradient of a second-order tensor field

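The gradient of a second-order tensor field can similarly be expressed as

$$\boldsymbol{\nabla}\boldsymbol{S} = \frac{\partial \boldsymbol{S}}{\partial \xi^i}\otimes\mathbf{g}^i$$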

Explicit expressions for the gradient

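If we consider the expression for the tensor in terms of a contravariant basis, then

$$\boldsymbol{\nabla}\boldsymbol{S} = \frac{\partial}{\partial \xi^k}[S_{ij}~\mathbf{g}^i\otimes\mathbf{g}^j]\otimes\mathbf{g}^k = \left[\frac{\partial S_{ij}}{\partial \xi^k} - \Gamma^l_{ki}~S_{lj} - \Gamma^l_{kj}~S_{il}\right]~\mathbf{g}^i\otimes\mathbf{g}^j\otimes\mathbf{g}^k$$

We may also write

$$\begin{align} \boldsymbol{\nabla}\boldsymbol{S} & = \left[\frac{\partial S^{ij}}{\partial \xi^k} + \Gamma^i_{kl}~S^{lj} + \Gamma^j_{kl}~S^{il}\right]~\mathbf{g}_i\otimes\mathbf{g}_j\otimes\mathbf{g}^k \\ & = \left[\frac{\partial S^i_{~j}}{\partial \xi^k} + \Gamma^i_{kl}~S^l_{~j} - \Gamma^l_{kj}~S^i_{~l}\right]~\mathbf{g}_i\otimes\mathbf{g}^j\otimes\mathbf{g}^k \\ & = \left[\frac{\partial S_i^{~j}}{\partial \xi^k} - \Gamma^l_{ik}~S_l^{~j} + \Gamma^j_{kl}~S_i^{~l}\right]~\mathbf{g}^i\otimes\mathbf{g}_j\otimes\mathbf{g}^k \end{align}$$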

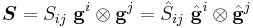

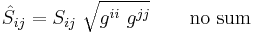

Representing a physical second-order tensor field

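The physical components of a second-order tensor field can be obtained by using a normalized contravariant basis, i.e.,

$$\boldsymbol{S} = S_{ij}~\mathbf{g}^i\otimes\mathbf{g}^j = \hat{S}_{ij}~\hat{\mathbf{g}}^i\otimes\hat{\mathbf{g}}^j$$

where the hatted basis vectors have been normalized. This implies that (again with no summation)

$$\hat{S}_{ij} = S_{ij}~\sqrt{g^{ii}~g^{jj}}$$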

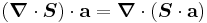

Divergence of a second-order tensor field

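The divergence of a second-order tensor field is defined using

$$(\boldsymbol{\nabla}\cdot\boldsymbol{S})\cdot\mathbf{a} = \boldsymbol{\nabla}\cdot(\boldsymbol{S}\cdot\mathbf{a})$$

where $\mathbf{a}$ is an arbitrary constant vector.[13] In curvilinear coordinates,

$$\begin{align} \boldsymbol{\nabla}\cdot\boldsymbol{S} & = \left[\frac{\partial S_{ij}}{\partial \xi^k} - \Gamma^l_{ki}~S_{lj} - \Gamma^l_{kj}~S_{il}\right]~g^{ik}~\mathbf{g}^j \\ & = \left[\frac{\partial S^{ij}}{\partial \xi^i} + \Gamma^i_{il}~S^{lj} + \Gamma^j_{il}~S^{il}\right]~\mathbf{g}_j \\ & = \left[\frac{\partial S^i_{~j}}{\partial \xi^i} + \Gamma^i_{il}~S^l_{~j} - \Gamma^l_{ij}~S^i_{~l}\right]~\mathbf{g}^j \\ & = \left[\frac{\partial S_i^{~j}}{\partial \xi^k} - \Gamma^l_{ik}~S_l^{~j} + \Gamma^j_{kl}~S_i^{~l}\right]~g^{ik}~\mathbf{g}_j \end{align}$$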

Orthogonal curvilinear coordinates

Assume, for the purposes of this section, that the curvilinear coordinate system is orthogonal, i.e.,

$$\mathbf{g}_i\cdot\mathbf{g}_j = \begin{cases} g_{ii} & \text{if}~ i = j \\ 0 & \text{if}~ i \ne j \end{cases}$$

or equivalently,

$$\mathbf{g}^i\cdot\mathbf{g}^j = \begin{cases} g^{ii} & \text{if}~ i = j \\ 0 & \text{if}~ i \ne j \end{cases}$$

where $g^{ii} = g_{ii}^{-1}$. As before, $\mathbf{g}_i$ are covariant basis vectors and $\mathbf{g}^i$ are contravariant basis vectors. Also, let $(\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3)$ be a background, fixed, Cartesian basis. A list of orthogonal curvilinear coordinates is given below.

|

||||||||

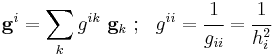

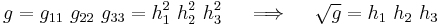

Metric tensor in orthogonal curvilinear coordinates

Let  be the position vector of the point

be the position vector of the point  with respect to the origin of the coordinate system. The notation can be simplified by noting that

with respect to the origin of the coordinate system. The notation can be simplified by noting that  . At each point we can construct a small line element

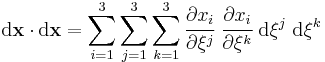

. At each point we can construct a small line element  . The square of the length of the line element is the scalar product

. The square of the length of the line element is the scalar product  and is called the metric of the space. Recall that the space of interest is assumed to be Euclidean when we talk of curvilinear coordinates. Let us express the position vector in terms of the background, fixed, Cartesian basis, i.e.,

and is called the metric of the space. Recall that the space of interest is assumed to be Euclidean when we talk of curvilinear coordinates. Let us express the position vector in terms of the background, fixed, Cartesian basis, i.e.,

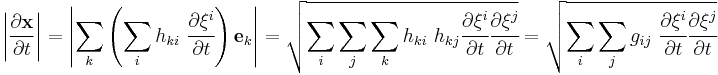

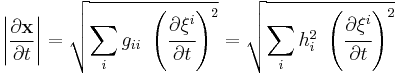

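Using the chain rule, we can then express $\mathrm{d}\mathbf{x}$ in terms of three-dimensional orthogonal curvilinear coordinates $(q^1, q^2, q^3)$ as

$$\mathrm{d}\mathbf{x} = \sum_{i=1}^3 \sum_{j=1}^3 \left(\frac{\partial x_i}{\partial q^j}~\mathbf{e}_i\right)\mathrm{d}q^j$$

Therefore, the metric is given by

$$\mathrm{d}\mathbf{x}\cdot\mathrm{d}\mathbf{x} = \sum_{i=1}^3 \sum_{j=1}^3 \sum_{k=1}^3 \frac{\partial x_i}{\partial q^j}~\frac{\partial x_i}{\partial q^k}~\mathrm{d}q^j~\mathrm{d}q^k$$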

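The symmetric quantity

$$g_{ij} = \sum_{k=1}^3 \frac{\partial x_k}{\partial q^i}~\frac{\partial x_k}{\partial q^j} = \mathbf{g}_i\cdot\mathbf{g}_j$$

is called the fundamental (or metric) tensor of the Euclidean space in curvilinear coordinates.

Note also that

$$g_{ij} = \frac{\partial \mathbf{x}}{\partial q^i}\cdot\frac{\partial \mathbf{x}}{\partial q^j} = \left(\sum_k h_{ki}~\mathbf{e}_k\right)\cdot\left(\sum_m h_{mj}~\mathbf{e}_m\right) = \sum_k h_{ki}~h_{kj}$$

where $h_{ij} = \partial x_i/\partial q^j$ are the Lamé coefficients.

If we define the scale factors, $h_i$, using

$$h_i := |\mathbf{g}_i| = \sqrt{g_{ii}} = \sqrt{\sum_k h_{ki}^2}$$

we get a relation between the fundamental tensor and the Lamé coefficients: in orthogonal coordinates, $g_{ii} = h_i^2$ and $g_{ij} = 0$ for $i \ne j$.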
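The chain of definitions above can be traced numerically: Lamé coefficients $h_{ki} = \partial x_k/\partial q^i$, metric $g_{ij} = \sum_k h_{ki}h_{kj}$, and scale factors $h_i = \sqrt{g_{ii}}$. The sketch uses spherical coordinates as an assumed example, for which the expected scale factors are $(1, r, r\sin\theta)$ and the off-diagonal metric components vanish.

```python
import math

def position(q):
    # Spherical coordinates q = (r, theta, phi) -> Cartesian (x1, x2, x3)
    r, t, p = q
    return (r * math.sin(t) * math.cos(p), r * math.sin(t) * math.sin(p), r * math.cos(t))

def lame(q, h=1e-6):
    # Lame coefficients H[k][i] = d x_k / d q^i by central differences
    cols = []
    for i in range(3):
        qp, qm = list(q), list(q)
        qp[i] += h
        qm[i] -= h
        cols.append([(a - b) / (2 * h) for a, b in zip(position(qp), position(qm))])
    return [[cols[i][k] for i in range(3)] for k in range(3)]

def metric(q):
    # g_ij = sum_k H[k][i] * H[k][j]
    H = lame(q)
    return [[sum(H[k][i] * H[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def scale_factors(q):
    # h_i = sqrt(g_ii)
    g = metric(q)
    return [math.sqrt(g[i][i]) for i in range(3)]

q = (2.0, 0.6, 1.1)
print(scale_factors(q))  # expected close to (1, r, r sin(theta)) = (1, 2, 2 sin 0.6)
print(metric(q))         # off-diagonal entries close to zero
```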

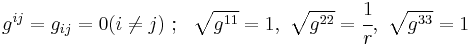

Example: Polar coordinates

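If we consider polar coordinates for $\mathbb{R}^2$, note that

$$(x, y) = (r\cos\theta, r\sin\theta)$$

so $(r, \theta)$ are the curvilinear coordinates, and the Jacobian determinant of the transformation $(r,\theta) \to (r\cos\theta, r\sin\theta)$ is $r$.

The orthogonal basis vectors are $\mathbf{g}_r = (\cos\theta, \sin\theta)$ and $\mathbf{g}_\theta = (-r\sin\theta, r\cos\theta)$. The normalized basis vectors are $\mathbf{e}_r = (\cos\theta, \sin\theta)$ and $\mathbf{e}_\theta = (-\sin\theta, \cos\theta)$, and the scale factors are $h_r = 1$ and $h_\theta = r$. The fundamental tensor is $g_{11} = 1$, $g_{22} = r^2$, $g_{12} = g_{21} = 0$.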

Line and surface integrals

If we wish to use curvilinear coordinates for vector calculus calculations, adjustments need to be made in the calculation of line, surface and volume integrals. For simplicity, we again restrict the discussion to three dimensions and orthogonal curvilinear coordinates. However, the same arguments apply for $n$-dimensional problems, though there are some additional terms in the expressions when the coordinate system is not orthogonal.

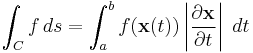

Line integrals

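Normally in the calculation of line integrals we are interested in calculating

$$\int_C f\,ds = \int_a^b f(\mathbf{x}(t))\left|\frac{\partial \mathbf{x}}{\partial t}\right|\,dt$$

where $\mathbf{x}(t)$ parametrizes $C$ in Cartesian coordinates. In curvilinear coordinates, the term

$$\left|\frac{\partial \mathbf{x}}{\partial t}\right| = \left|\sum_{i=1}^3 \frac{\partial \mathbf{x}}{\partial q^i}~\frac{\partial q^i}{\partial t}\right|$$

by the chain rule. And from the definition of the Lamé coefficients,

$$\frac{\partial \mathbf{x}}{\partial q^i} = \sum_k h_{ki}~\mathbf{e}_k$$

and thus

$$\left|\frac{\partial \mathbf{x}}{\partial t}\right| = \left|\sum_k\left(\sum_i h_{ki}~\frac{\partial q^i}{\partial t}\right)\mathbf{e}_k\right| = \sqrt{\sum_i\sum_j\sum_k h_{ki}~h_{kj}~\frac{\partial q^i}{\partial t}~\frac{\partial q^j}{\partial t}} = \sqrt{\sum_i\sum_j g_{ij}~\frac{\partial q^i}{\partial t}~\frac{\partial q^j}{\partial t}}$$

Now, since $g_{ij} = 0$ when $i \ne j$, we have

$$\left|\frac{\partial \mathbf{x}}{\partial t}\right| = \sqrt{\sum_i g_{ii}\left(\frac{\partial q^i}{\partial t}\right)^2} = \sqrt{\sum_i h_i^2\left(\frac{\partial q^i}{\partial t}\right)^2}$$

and we can proceed normally.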
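As an illustration of $\left|\partial\mathbf{x}/\partial t\right| = \sqrt{\sum_i h_i^2 (\partial q^i/\partial t)^2}$, the sketch below computes the length of an Archimedean spiral $r = t$, $\theta = t$, $0 \le t \le 2\pi$, in polar coordinates ($h_r = 1$, $h_\theta = r$). It uses composite Simpson's rule and compares the result with the closed form $\tfrac{1}{2}\left[T\sqrt{1+T^2} + \sinh^{-1}T\right]$. The curve and the quadrature rule are illustrative choices.

```python
import math

def speed(t):
    # Spiral r(t) = t, theta(t) = t; scale factors h_r = 1, h_theta = r
    r, dr_dt, dtheta_dt = t, 1.0, 1.0
    return math.sqrt((1.0 * dr_dt) ** 2 + (r * dtheta_dt) ** 2)

def simpson(fun, a, b, n=1000):
    # Composite Simpson's rule; n must be even
    h = (b - a) / n
    s = fun(a) + fun(b)
    s += 4 * sum(fun(a + k * h) for k in range(1, n, 2))
    s += 2 * sum(fun(a + k * h) for k in range(2, n, 2))
    return s * h / 3

T = 2 * math.pi
length = simpson(speed, 0.0, T)
exact = 0.5 * (T * math.sqrt(1 + T * T) + math.asinh(T))
print(length, exact)
```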

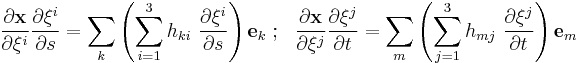

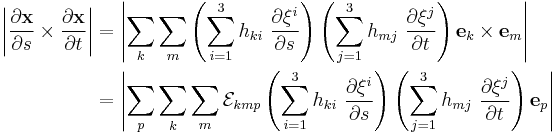

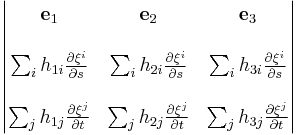

Surface integrals

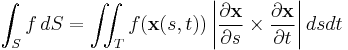

Likewise, if we are interested in a surface integral, the relevant calculation, with the parameterization of the surface in Cartesian coordinates is:

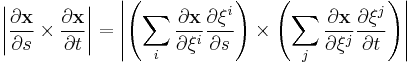

Again, in curvilinear coordinates, we have

and we make use of the definition of curvilinear coordinates again to yield

Therefore,

where  is the permutation symbol.

is the permutation symbol.

In determinant form, the cross product in terms of curvilinear coordinates will be:
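A quick check of the surface-integral machinery uses the sphere $r = R$ parametrized by $s = \theta$, $t = \phi$. The chain rule then gives $\partial\mathbf{x}/\partial s = \mathbf{g}_\theta$ and $\partial\mathbf{x}/\partial t = \mathbf{g}_\phi$, so the area element is $|\mathbf{g}_\theta\times\mathbf{g}_\phi|\,ds\,dt = R^2\sin\theta\,ds\,dt$. The sketch integrates this with a midpoint rule, an arbitrary choice of quadrature, and the result should approach $4\pi R^2$.

```python
import math

def tangent_vectors(R, theta, phi):
    # On the sphere r = R with s = theta, t = phi: dx/ds = g_theta, dx/dt = g_phi
    st, ct, sp, cp = math.sin(theta), math.cos(theta), math.sin(phi), math.cos(phi)
    g_theta = (R * ct * cp, R * ct * sp, -R * st)
    g_phi = (-R * st * sp, R * st * cp, 0.0)
    return g_theta, g_phi

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def sphere_area(R, n=200):
    # Midpoint rule for the double integral of |dx/ds x dx/dt| over [0, pi] x [0, 2 pi]
    ds, dt = math.pi / n, 2 * math.pi / n
    total = 0.0
    for a in range(n):
        s = (a + 0.5) * ds
        for b in range(n):
            t = (b + 0.5) * dt
            c = cross(*tangent_vectors(R, s, t))
            total += math.sqrt(c[0] ** 2 + c[1] ** 2 + c[2] ** 2) * ds * dt
    return total

print(sphere_area(2.0), 4 * math.pi * 2.0 ** 2)
```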

Grad, curl, div, Laplacian

In orthogonal curvilinear coordinates of  dimensions, where

dimensions, where

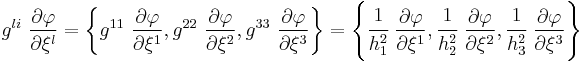

one can express the gradient of a scalar or vector field as

For an orthogonal basis

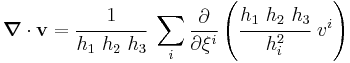

The divergence of a vector field can then be written as

Also,

Therefore,

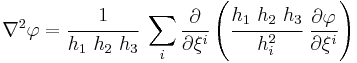

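We can get an expression for the Laplacian in a similar manner by noting that

$$g^{li}~\frac{\partial \varphi}{\partial q^l} = \left\{ \frac{1}{h_1^2}~\frac{\partial \varphi}{\partial q^1}, \frac{1}{h_2^2}~\frac{\partial \varphi}{\partial q^2}, \frac{1}{h_3^2}~\frac{\partial \varphi}{\partial q^3} \right\}$$

Then we have

$$\nabla^2 \varphi = \frac{1}{h_1 h_2 h_3}~\sum_i \frac{\partial}{\partial q^i}\left(\frac{h_1 h_2 h_3}{h_i^2}~\frac{\partial \varphi}{\partial q^i}\right)$$

The expressions for the gradient, divergence, and Laplacian can be directly extended to $n$ dimensions.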
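The scale-factor formulas translate directly into code. The following sketch assumes spherical coordinates, $h = (1, r, r\sin\theta)$, and takes covariant components $F_i$ as input, following $\boldsymbol{\nabla}\cdot\mathbf{F} = \frac{1}{h_1h_2h_3}\sum_i\frac{\partial}{\partial q^i}\left(\frac{h_1h_2h_3}{h_i^2}F_i\right)$. It also uses the fact that the covariant components of $\boldsymbol{\nabla}\varphi$ are simply $\partial\varphi/\partial q^i$. The hand-checkable test cases are the position vector ($F_r = r$, divergence 3) and $\varphi = r^2$ (Laplacian 6).

```python
import math

def scale(q):
    # Spherical coordinates q = (r, theta, phi): scale factors (1, r, r sin theta)
    r, t, _ = q
    return (1.0, r, r * math.sin(t))

def d(fun, q, i, h=1e-4):
    # Central difference of a scalar function with respect to q^i
    qp, qm = list(q), list(q)
    qp[i] += h
    qm[i] -= h
    return (fun(qp) - fun(qm)) / (2 * h)

def div_orthogonal(F_cov, q):
    # div F = 1/(h1 h2 h3) * sum_i d/dq^i (h1 h2 h3 / h_i^2 * F_i), F_i covariant
    total = 0.0
    for i in range(3):
        def flux(qq, i=i):
            h = scale(qq)
            return h[0] * h[1] * h[2] / h[i] ** 2 * F_cov(qq)[i]
        total += d(flux, q, i)
    h = scale(q)
    return total / (h[0] * h[1] * h[2])

def laplacian_orthogonal(phi, q):
    # The covariant components of grad(phi) are just d(phi)/dq^i
    def grad_cov(qq):
        return [d(phi, qq, i) for i in range(3)]
    return div_orthogonal(grad_cov, q)

q = (1.3, 0.7, 0.2)
print(div_orthogonal(lambda qq: (qq[0], 0.0, 0.0), q))  # position vector
print(laplacian_orthogonal(lambda qq: qq[0] ** 2, q))    # phi = r^2
```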

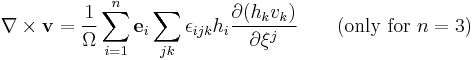

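The curl of a vector field is given by

$$\boldsymbol{\nabla}\times\mathbf{F} = \frac{1}{\Omega}\sum_{i=1}^3 h_i~\hat{\mathbf{g}}_i \sum_{j,k} \varepsilon_{ijk}~\frac{\partial (h_k F_k)}{\partial q^j}$$

where $\Omega = h_1 h_2 h_3$ is the product of all $h_i$, $F_k$ are the physical components of $\mathbf{F}$ along the unit vectors $\hat{\mathbf{g}}_k = \mathbf{g}_k/h_k$, and $\varepsilon_{ijk}$ is the Levi-Civita symbol.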
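The curl formula can likewise be sketched in code. The example assumes cylindrical coordinates ($h = (1, r, 1)$) and physical components $F_k$. It uses two standard hand-checkable test fields, chosen for illustration. The first is a rigid rotation $F_\theta = \omega r$, whose curl is $2\omega$ along $z$. The second is a line vortex $F_\theta = 1/r$, whose curl is zero away from the axis.

```python
def scale(q):
    # Cylindrical coordinates q = (r, theta, z): scale factors (1, r, 1)
    return (1.0, q[0], 1.0)

def levi_civita(i, j, k):
    # +1 for even permutations of (0, 1, 2), -1 for odd ones, 0 if an index repeats
    return (i - j) * (j - k) * (k - i) // 2

def d(fun, q, j, h=1e-6):
    # Central difference with respect to q^j
    qp, qm = list(q), list(q)
    qp[j] += h
    qm[j] -= h
    return (fun(qp) - fun(qm)) / (2 * h)

def curl_orthogonal(F_phys, q):
    # (curl F)_i = h_i / (h1 h2 h3) * sum_{j,k} eps_ijk d(h_k F_k)/dq^j, F_k physical
    h = scale(q)
    prod_h = h[0] * h[1] * h[2]
    result = []
    for i in range(3):
        s = 0.0
        for j in range(3):
            for k in range(3):
                e = levi_civita(i, j, k)
                if e:
                    s += e * d(lambda qq, k=k: scale(qq)[k] * F_phys(qq)[k], q, j)
        result.append(h[i] * s / prod_h)
    return result

q = (1.6, 0.4, 0.3)
print(curl_orthogonal(lambda qq: (0.0, 0.5 * qq[0], 0.0), q))  # rigid rotation, omega = 0.5
print(curl_orthogonal(lambda qq: (0.0, 1.0 / qq[0], 0.0), q))  # line vortex
```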

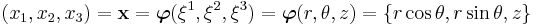

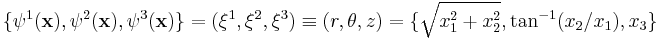

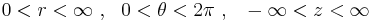

Example: Cylindrical polar coordinates

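For cylindrical coordinates we have

$$(x_1, x_2, x_3) = \mathbf{x} = \boldsymbol{\varphi}(\xi^1, \xi^2, \xi^3) = \boldsymbol{\varphi}(r, \theta, z) = \{r\cos\theta, r\sin\theta, z\}$$

and

$$\{\psi^1(\mathbf{x}), \psi^2(\mathbf{x}), \psi^3(\mathbf{x})\} = (\xi^1, \xi^2, \xi^3) \equiv (r, \theta, z) = \left\{\sqrt{x_1^2 + x_2^2}, \tan^{-1}(x_2/x_1), x_3\right\}$$

(with the quadrant of $\theta$ fixed by the signs of $x_1$ and $x_2$), where

$$0 < r < \infty ~;~~ 0 < \theta < 2\pi ~;~~ -\infty < z < \infty$$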

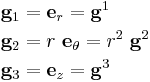

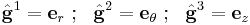

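Then the covariant and contravariant basis vectors are

$$\begin{align} \mathbf{g}_1 & = \mathbf{e}_r = \mathbf{g}^1 \\ \mathbf{g}_2 & = r~\mathbf{e}_\theta = r^2~\mathbf{g}^2 \\ \mathbf{g}_3 & = \mathbf{e}_z = \mathbf{g}^3 \end{align}$$

where $\mathbf{e}_r, \mathbf{e}_\theta, \mathbf{e}_z$ are the unit vectors in the $r, \theta, z$ directions.

Note that the components of the metric tensor are such that

$$g^{ij} = g_{ij} = 0 ~~(i \ne j) ~;~~ \sqrt{g^{11}} = 1 ~,~~ \sqrt{g^{22}} = \frac{1}{r} ~,~~ \sqrt{g^{33}} = 1$$

which shows that the basis is orthogonal.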

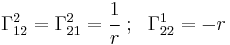

The non-zero components of the Christoffel symbol of the second kind are

$$\Gamma_{12}^2 = \Gamma_{21}^2 = \frac{1}{r} ~;~~ \Gamma_{22}^1 = -r$$

Representing a physical vector field

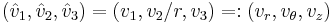

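The normalized contravariant basis vectors in cylindrical polar coordinates are

$$\hat{\mathbf{g}}^1 = \mathbf{e}_r ~;~~ \hat{\mathbf{g}}^2 = \mathbf{e}_\theta ~;~~ \hat{\mathbf{g}}^3 = \mathbf{e}_z$$

and the physical components of a vector $\mathbf{v}$ are

$$(\hat{v}_1, \hat{v}_2, \hat{v}_3) = (v_1, v_2/r, v_3) =: (v_r, v_\theta, v_z)$$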

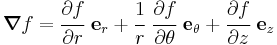

Gradient of a scalar field

The gradient of a scalar field, $f(\mathbf{x})$, in cylindrical coordinates can now be computed from the general expression in curvilinear coordinates and has the form

$$\boldsymbol{\nabla}f = \frac{\partial f}{\partial r}~\mathbf{e}_r + \frac{1}{r}~\frac{\partial f}{\partial \theta}~\mathbf{e}_\theta + \frac{\partial f}{\partial z}~\mathbf{e}_z$$

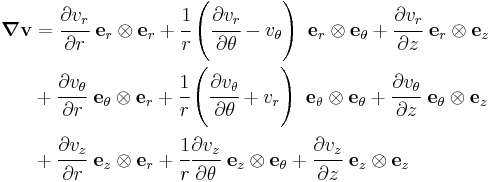

Gradient of a vector field

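Similarly, the gradient of a vector field, $\mathbf{v}(\mathbf{x})$, in cylindrical coordinates can be shown to be

$$\begin{align} \boldsymbol{\nabla}\mathbf{v} & = \frac{\partial v_r}{\partial r}~\mathbf{e}_r\otimes\mathbf{e}_r + \frac{1}{r}\left(\frac{\partial v_r}{\partial \theta} - v_\theta\right)~\mathbf{e}_r\otimes\mathbf{e}_\theta + \frac{\partial v_r}{\partial z}~\mathbf{e}_r\otimes\mathbf{e}_z \\ & + \frac{\partial v_\theta}{\partial r}~\mathbf{e}_\theta\otimes\mathbf{e}_r + \frac{1}{r}\left(\frac{\partial v_\theta}{\partial \theta} + v_r\right)~\mathbf{e}_\theta\otimes\mathbf{e}_\theta + \frac{\partial v_\theta}{\partial z}~\mathbf{e}_\theta\otimes\mathbf{e}_z \\ & + \frac{\partial v_z}{\partial r}~\mathbf{e}_z\otimes\mathbf{e}_r + \frac{1}{r}~\frac{\partial v_z}{\partial \theta}~\mathbf{e}_z\otimes\mathbf{e}_\theta + \frac{\partial v_z}{\partial z}~\mathbf{e}_z\otimes\mathbf{e}_z \end{align}$$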

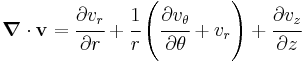

Divergence of a vector field

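Using the equation for the divergence of a vector field in curvilinear coordinates, the divergence in cylindrical coordinates can be shown to be

$$\boldsymbol{\nabla}\cdot\mathbf{v} = \frac{\partial v_r}{\partial r} + \frac{1}{r}\left(\frac{\partial v_\theta}{\partial \theta} + v_r\right) + \frac{\partial v_z}{\partial z}$$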
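One way to gain confidence in the cylindrical divergence formula, $\frac{\partial v_r}{\partial r} + \frac{1}{r}\left(\frac{\partial v_\theta}{\partial \theta} + v_r\right) + \frac{\partial v_z}{\partial z}$, is to compare it with a Cartesian finite-difference divergence of the same field. The sketch assumes a hypothetical field with physical components $(v_r, v_\theta, v_z) = (r^2\cos\theta, r\sin\theta, rz)$. It converts this field to Cartesian components using $\mathbf{e}_r = (\cos\theta, \sin\theta, 0)$ and $\mathbf{e}_\theta = (-\sin\theta, \cos\theta, 0)$.

```python
import math

def v_phys(r, t, z):
    # Hypothetical field, physical cylindrical components (v_r, v_theta, v_z)
    return (r * r * math.cos(t), r * math.sin(t), r * z)

def div_cylindrical(r, t, z, h=1e-6):
    # div v = dv_r/dr + (1/r)(dv_theta/dtheta + v_r) + dv_z/dz
    vr = v_phys(r, t, z)[0]
    dvr_dr = (v_phys(r + h, t, z)[0] - v_phys(r - h, t, z)[0]) / (2 * h)
    dvt_dt = (v_phys(r, t + h, z)[1] - v_phys(r, t - h, z)[1]) / (2 * h)
    dvz_dz = (v_phys(r, t, z + h)[2] - v_phys(r, t, z - h)[2]) / (2 * h)
    return dvr_dr + (dvt_dt + vr) / r + dvz_dz

def v_cart(x, y, z):
    # Same field in Cartesian components via e_r and e_theta
    r, t = math.hypot(x, y), math.atan2(y, x)
    vr, vt, vz = v_phys(r, t, z)
    return (vr * math.cos(t) - vt * math.sin(t), vr * math.sin(t) + vt * math.cos(t), vz)

def div_cartesian(x, y, z, h=1e-6):
    # Sum of dv_i/dx_i by central differences
    p = [x, y, z]
    total = 0.0
    for i in range(3):
        pp, pm = list(p), list(p)
        pp[i] += h
        pm[i] -= h
        total += (v_cart(*pp)[i] - v_cart(*pm)[i]) / (2 * h)
    return total

r, t, z = 1.4, 0.5, 0.7
print(div_cylindrical(r, t, z), div_cartesian(r * math.cos(t), r * math.sin(t), z))
```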

Laplacian of a scalar field

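The Laplacian is more easily computed by noting that $\boldsymbol{\nabla}^2 f = \boldsymbol{\nabla}\cdot\boldsymbol{\nabla}f$. In cylindrical polar coordinates

$$\mathbf{v} = \boldsymbol{\nabla}f = \left[v_r~~ v_\theta~~ v_z\right] = \left[\frac{\partial f}{\partial r}~~ \frac{1}{r}\frac{\partial f}{\partial \theta}~~ \frac{\partial f}{\partial z} \right]$$

Hence,

$$\boldsymbol{\nabla}\cdot\mathbf{v} = \boldsymbol{\nabla}^2 f = \frac{\partial^2 f}{\partial r^2} + \frac{1}{r}\left(\frac{1}{r}\frac{\partial^2f}{\partial \theta^2} + \frac{\partial f}{\partial r} \right) + \frac{\partial^2 f}{\partial z^2} = \frac{1}{r}\left[\frac{\partial}{\partial r}\left(r\frac{\partial f}{\partial r}\right)\right] + \frac{1}{r^2}\frac{\partial^2f}{\partial \theta^2} + \frac{\partial^2 f}{\partial z^2}$$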

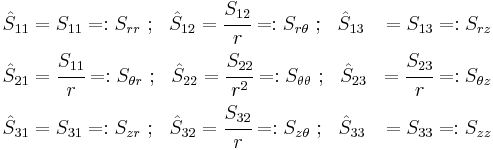

Representing a physical second-order tensor field

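The physical components of a second-order tensor field are those obtained when the tensor is expressed in terms of a normalized contravariant basis. In cylindrical polar coordinates these components are

$$\begin{align} \hat{S}_{11} & = S_{11} =: S_{rr} ~;~~ \hat{S}_{12} = \frac{S_{12}}{r} =: S_{r\theta} ~;~~ \hat{S}_{13} = S_{13} =: S_{rz} \\ \hat{S}_{21} & = \frac{S_{21}}{r} =: S_{\theta r} ~;~~ \hat{S}_{22} = \frac{S_{22}}{r^2} =: S_{\theta\theta} ~;~~ \hat{S}_{23} = \frac{S_{23}}{r} =: S_{\theta z} \\ \hat{S}_{31} & = S_{31} =: S_{zr} ~;~~ \hat{S}_{32} = \frac{S_{32}}{r} =: S_{z\theta} ~;~~ \hat{S}_{33} = S_{33} =: S_{zz} \end{align}$$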

Gradient of a second-order tensor field

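Using the above definitions, we can show that the gradient of a second-order tensor field in cylindrical polar coordinates can be expressed as

$$\begin{align}
\boldsymbol{\nabla} \boldsymbol{S} & = \frac{\partial S_{rr}}{\partial r}~\mathbf{e}_r\otimes\mathbf{e}_r\otimes\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{rr}}{\partial \theta} - (S_{\theta r}+S_{r\theta})\right]~\mathbf{e}_r\otimes\mathbf{e}_r\otimes\mathbf{e}_\theta + \frac{\partial S_{rr}}{\partial z}~\mathbf{e}_r\otimes\mathbf{e}_r\otimes\mathbf{e}_z \\
& + \frac{\partial S_{r\theta}}{\partial r}~\mathbf{e}_r\otimes\mathbf{e}_\theta\otimes\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{r\theta}}{\partial \theta} + (S_{rr}-S_{\theta\theta})\right]~\mathbf{e}_r\otimes\mathbf{e}_\theta\otimes\mathbf{e}_\theta + \frac{\partial S_{r\theta}}{\partial z}~\mathbf{e}_r\otimes\mathbf{e}_\theta\otimes\mathbf{e}_z \\
& + \frac{\partial S_{rz}}{\partial r}~\mathbf{e}_r\otimes\mathbf{e}_z\otimes\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{rz}}{\partial \theta} - S_{\theta z}\right]~\mathbf{e}_r\otimes\mathbf{e}_z\otimes\mathbf{e}_\theta + \frac{\partial S_{rz}}{\partial z}~\mathbf{e}_r\otimes\mathbf{e}_z\otimes\mathbf{e}_z \\
& + \frac{\partial S_{\theta r}}{\partial r}~\mathbf{e}_\theta\otimes\mathbf{e}_r\otimes\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{\theta r}}{\partial \theta} + (S_{rr}-S_{\theta\theta})\right]~\mathbf{e}_\theta\otimes\mathbf{e}_r\otimes\mathbf{e}_\theta + \frac{\partial S_{\theta r}}{\partial z}~\mathbf{e}_\theta\otimes\mathbf{e}_r\otimes\mathbf{e}_z \\
& + \frac{\partial S_{\theta\theta}}{\partial r}~\mathbf{e}_\theta\otimes\mathbf{e}_\theta\otimes\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{\theta\theta}}{\partial \theta} + (S_{r\theta}+S_{\theta r})\right]~\mathbf{e}_\theta\otimes\mathbf{e}_\theta\otimes\mathbf{e}_\theta + \frac{\partial S_{\theta\theta}}{\partial z}~\mathbf{e}_\theta\otimes\mathbf{e}_\theta\otimes\mathbf{e}_z \\
& + \frac{\partial S_{\theta z}}{\partial r}~\mathbf{e}_\theta\otimes\mathbf{e}_z\otimes\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{\theta z}}{\partial \theta} + S_{rz}\right]~\mathbf{e}_\theta\otimes\mathbf{e}_z\otimes\mathbf{e}_\theta + \frac{\partial S_{\theta z}}{\partial z}~\mathbf{e}_\theta\otimes\mathbf{e}_z\otimes\mathbf{e}_z \\
& + \frac{\partial S_{zr}}{\partial r}~\mathbf{e}_z\otimes\mathbf{e}_r\otimes\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{zr}}{\partial \theta} - S_{z\theta}\right]~\mathbf{e}_z\otimes\mathbf{e}_r\otimes\mathbf{e}_\theta + \frac{\partial S_{zr}}{\partial z}~\mathbf{e}_z\otimes\mathbf{e}_r\otimes\mathbf{e}_z \\
& + \frac{\partial S_{z\theta}}{\partial r}~\mathbf{e}_z\otimes\mathbf{e}_\theta\otimes\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{z\theta}}{\partial \theta} + S_{zr}\right]~\mathbf{e}_z\otimes\mathbf{e}_\theta\otimes\mathbf{e}_\theta + \frac{\partial S_{z\theta}}{\partial z}~\mathbf{e}_z\otimes\mathbf{e}_\theta\otimes\mathbf{e}_z \\
& + \frac{\partial S_{zz}}{\partial r}~\mathbf{e}_z\otimes\mathbf{e}_z\otimes\mathbf{e}_r + \frac{1}{r}~\frac{\partial S_{zz}}{\partial \theta}~\mathbf{e}_z\otimes\mathbf{e}_z\otimes\mathbf{e}_\theta + \frac{\partial S_{zz}}{\partial z}~\mathbf{e}_z\otimes\mathbf{e}_z\otimes\mathbf{e}_z
\end{align}$$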

Divergence of a second-order tensor field

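The divergence of a second-order tensor field in cylindrical polar coordinates can be obtained from the expression for the gradient by contracting the first and third basis vectors of each term, i.e., by keeping only the terms in which the scalar product of the two outer vectors is nonzero. Therefore,

$$\begin{align}
\boldsymbol{\nabla}\cdot \boldsymbol{S} & = \frac{\partial S_{rr}}{\partial r}~\mathbf{e}_r + \frac{\partial S_{r\theta}}{\partial r}~\mathbf{e}_\theta + \frac{\partial S_{rz}}{\partial r}~\mathbf{e}_z \\
& + \frac{1}{r}\left[\frac{\partial S_{\theta r}}{\partial \theta} + (S_{rr}-S_{\theta\theta})\right]~\mathbf{e}_r + \frac{1}{r}\left[\frac{\partial S_{\theta\theta}}{\partial \theta} + (S_{r\theta}+S_{\theta r})\right]~\mathbf{e}_\theta + \frac{1}{r}\left[\frac{\partial S_{\theta z}}{\partial \theta} + S_{rz}\right]~\mathbf{e}_z \\
& + \frac{\partial S_{zr}}{\partial z}~\mathbf{e}_r + \frac{\partial S_{z\theta}}{\partial z}~\mathbf{e}_\theta + \frac{\partial S_{zz}}{\partial z}~\mathbf{e}_z
\end{align}$$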

Fictitious forces in general curvilinear coordinates

An inertial coordinate system is defined as a system of space and time coordinates $x^1, x^2, x^3, t$ in terms of which the equations of motion of a particle free of external forces are simply $d^2x^j/dt^2 = 0$.[14] In this context, a coordinate system can fail to be “inertial” either due to a non-straight time axis or non-straight space axes (or both). In other words, the basis vectors of the coordinates may vary in time at fixed positions, or they may vary with position at fixed times, or both. When equations of motion are expressed in terms of any non-inertial coordinate system (in this sense), extra terms appear that involve the Christoffel symbols. Strictly speaking, these terms represent components of the absolute acceleration (in classical mechanics), but we may also choose to continue to regard $d^2x^j/dt^2$ as the acceleration (as if the coordinates were inertial) and treat the extra terms as if they were forces, in which case they are called fictitious forces.[15] The component of any such fictitious force normal to the path of the particle and in the plane of the path’s curvature is then called centrifugal force.[16]

This more general context makes clear the correspondence between the concepts of centrifugal force in rotating coordinate systems and in stationary curvilinear coordinate systems. (Both of these concepts appear frequently in the literature.[17][18][19]) For a simple example, consider a particle of mass $m$ moving in a circle of radius $r$ with angular speed $\omega$ relative to a system of polar coordinates rotating with angular speed $\Omega$. The radial equation of motion is $m\ddot{r} = F_r + mr(\omega+\Omega)^2$. Thus the centrifugal force is $mr$ times the square of the absolute rotational speed $A = \omega + \Omega$ of the particle. If we choose a coordinate system rotating at the speed of the particle, then $\Omega = A$ and $\omega = 0$, in which case the centrifugal force is $mrA^2$, whereas if we choose a stationary coordinate system we have $\Omega = 0$ and $\omega = A$, in which case the centrifugal force is again $mrA^2$. The reason for this equality of results is that in both cases the basis vectors at the particle’s location are changing in time in exactly the same way. Hence these are really just two different ways of describing exactly the same thing, one description being in terms of rotating coordinates and the other being in terms of stationary curvilinear coordinates, both of which are non-inertial according to the more abstract meaning of that term.

When describing general motion, the actual forces acting on a particle are often referred to the instantaneous osculating circle tangent to the path of motion, and this circle in the general case is not centered at a fixed location, and so the decomposition into centrifugal and Coriolis components is constantly changing. This is true regardless of whether the motion is described in terms of stationary or rotating coordinates.

See also

- Covariance and contravariance

- Basic introduction to the mathematics of curved spacetime

- Orthogonal coordinates

- Frenet-Serret formulas

- Covariant derivative

- Tensor derivative (continuum mechanics)

- Curvilinear perspective

- Del in cylindrical and spherical coordinates

References

- Notes

- ^ Boothby, W. M. (2002). An Introduction to Differential Manifolds and Riemannian Geometry (revised ed.). New York, NY: Academic Press.

- ^ McConnell, A. J. (1957). Application of Tensor Analysis. New York, NY: Dover Publications, Inc. Ch. 9, sec. 1. ISBN 0486603733.

- ^ a b c Green, A. E.; Zerna, W. (1968). Theoretical Elasticity. Oxford University Press. ISBN 0198534868.

- ^ a b c Ogden, R. W. (2000). Nonlinear elastic deformations. Dover.

- ^ Naghdi, P. M. (1972). "Theory of shells and plates". In S. Flügge. Handbook of Physics. VIa/2. pp. 425–640.

- ^ a b c d e f g h i j Simmonds, J. G. (1994). A brief on tensor analysis. Springer. ISBN 0387906398.

- ^ a b Basar, Y.; Weichert, D. (2000). Numerical continuum mechanics of solids: fundamental concepts and perspectives. Springer.

- ^ a b c Ciarlet, P. G. (2000). Theory of Shells. 1. Elsevier Science.

- ^ Einstein, A. (1915). "Contribution to the Theory of General Relativity". In Lanczos, C. The Einstein Decade. p. 213. ISBN 0521381053.

- ^ Misner, C. W.; Thorne, K. S.; Wheeler, J. A. (1973). Gravitation. W. H. Freeman and Co. ISBN 0716703440.

- ^ Greenleaf, A.; Lassas, M.; Uhlmann, G. (2003). "Anisotropic conductivities that cannot be detected by EIT". Physiological Measurement 24 (2): 413–419. doi:10.1088/0967-3334/24/2/353. PMID 12812426.

- ^ Leonhardt, U.; Philbin, T.G. (2006). "General relativity in electrical engineering". New Journal of Physics 8: 247.

- ^ "The divergence of a tensor field". Introduction to Elasticity/Tensors. Wikiversity. http://en.wikiversity.org/wiki/Introduction_to_Elasticity/Tensors#The_divergence_of_a_tensor_field. Retrieved 2010-11-26.

- ^ Friedman, Michael (1989). The Foundations of Space–Time Theories. Princeton University Press. ISBN 0691072396.

- ^ Stommel, Henry M.; Moore, Dennis W. (1989). An Introduction to the Coriolis Force. Columbia University Press. ISBN 0231066368.

- ^ Beer; Johnston (1972). Statics and Dynamics (2nd ed.). McGraw-Hill. p. 485. ISBN 0077366506.

- ^ Hildebrand, Francis B. (1992). Methods of Applied Mathematics. Dover. p. 156. ISBN 0135792010.

- ^ McQuarrie, Donald Allan (2000). Statistical Mechanics. University Science Books. ISBN 0060443669.

- ^ Weber, Hans-Jurgen; Arfken, George Brown (2004). Essential Mathematical Methods for Physicists. Academic Press. p. 843. ISBN 0120598779.

- Further reading

![\mathbf{u}\times\mathbf{v} = [(\mathbf{g}_m\times\mathbf{g}_n)\cdot\mathbf{g}_s]~u^m~v^n~\mathbf{g}^s

= \mathcal{E}_{smn}~u^m~v^n~\mathbf{g}^s](/2012-wikipedia_en_all_nopic_01_2012/I/f059e7deb6097cbb1d9214f208a0ebe1.png)

![\left[\boldsymbol{S}\cdot\mathbf{u}, \boldsymbol{S}\cdot\mathbf{v}, \boldsymbol{S}\cdot\mathbf{w}\right] = \det\boldsymbol{S}\left[\mathbf{u}, \mathbf{v}, \mathbf{w}\right]](/2012-wikipedia_en_all_nopic_01_2012/I/0bee565473156b81a72669690dea247f.png)

![\left[\mathbf{u},\mathbf{v},\mathbf{w}\right] := \mathbf{u}\cdot(\mathbf{v}\times\mathbf{w})~.](/2012-wikipedia_en_all_nopic_01_2012/I/44c10ba03c0a978edcc3869b099e4c99.png)

![\mathbf{g}^i = \boldsymbol{F}^{-\rm{T}}\cdot\mathbf{e}^i ~;~~ g^{ij} = [\boldsymbol{F}^{-\rm{1}}\cdot\boldsymbol{F}^{-\rm{T}}]_{ij} ~;~~ g_{ij} = [g^{ij}]^{-1} = [\boldsymbol{F}^{\rm{T}}\cdot\boldsymbol{F}]_{ij}](/2012-wikipedia_en_all_nopic_01_2012/I/4b2f9fca6430150ee3aec27531cf3c2f.png)

![\left[\mathbf{g}_1,\mathbf{g}_2,\mathbf{g}_3\right] = \det\boldsymbol{F}\left[\mathbf{e}_1,\mathbf{e}_2,\mathbf{e}_3\right] ~.](/2012-wikipedia_en_all_nopic_01_2012/I/75e314577f553d40e4aeda80a648b858.png)

![\left[\mathbf{e}_1,\mathbf{e}_2,\mathbf{e}_3\right] = 1](/2012-wikipedia_en_all_nopic_01_2012/I/0c75fce963df2b3a8c8572097323bc46.png)

![J = \det\boldsymbol{F} = \left[\mathbf{g}_1,\mathbf{g}_2,\mathbf{g}_3\right] = \mathbf{g}_1\cdot(\mathbf{g}_2\times\mathbf{g}_3)](/2012-wikipedia_en_all_nopic_01_2012/I/9060045bcdb5da20f73ca32219f6f02a.png)

![g := \det[g_{ij}]\,](/2012-wikipedia_en_all_nopic_01_2012/I/bf5cdc02671cbe2fbf845a97080923a8.png)

![g = \det[\boldsymbol{F}^{\rm{T}}]\cdot\det[\boldsymbol{F}] = J\cdot J = J^2](/2012-wikipedia_en_all_nopic_01_2012/I/90e16ca8f2c77ef493c324767d3ff88a.png)

![\det[g^{ij}] = \cfrac{1}{J^2}](/2012-wikipedia_en_all_nopic_01_2012/I/4ab852492257b9a89daecd4b1b9efe96.png)

![\mathbf{u}\times\mathbf{v} = \epsilon_{ijk}~\hat{u}_j~\hat{v}_k~\mathbf{e}_i

= \epsilon_{ijk}~\frac{\partial x_j}{\partial \xi^m}~\frac{\partial x_k}{\partial \xi^n}~\frac{\partial x_i}{\partial \xi^s}~ u^m~v^n~\mathbf{g}^s

= [(\mathbf{g}_m\times\mathbf{g}_n)\cdot\mathbf{g}_s]~u^m~v^n~\mathbf{g}^s

= \mathcal{E}_{smn}~u^m~v^n~\mathbf{g}^s](/2012-wikipedia_en_all_nopic_01_2012/I/13a14fbbc3781942acd866cf2d6d76e0.png)

![\mathcal{E}_{ijk} = \left[\mathbf{g}_i,\mathbf{g}_j,\mathbf{g}_k\right] =(\mathbf{g}_i\times\mathbf{g}_j)\cdot\mathbf{g}_k ~;~~

\mathcal{E}^{ijk} = \left[\mathbf{g}^i,\mathbf{g}^j,\mathbf{g}^k\right]](/2012-wikipedia_en_all_nopic_01_2012/I/3d5aa4d4c990b49e866b0f2c3dd7f514.png)

![\mathbf{x} = \boldsymbol{\varphi}(\xi^1, \xi^2, \xi^3) ~;~~ \xi^i = \psi^i(\mathbf{x}) = [\boldsymbol{\varphi}^{-1}(\mathbf{x})]^i](/2012-wikipedia_en_all_nopic_01_2012/I/7091773b1184b7ba1ba7a4c3deb7650f.png)
