Bennett's inequality

In probability theory, Bennett's inequality provides an upper bound on the probability that the sum of independent random variables deviates from its expected value by more than any specified amount. Bennett's inequality was proved by George Bennett of the University of New South Wales in 1962.[1]

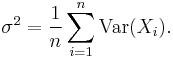

Let X1, … Xn be independent random variables, and assume (for simplicity but without loss of generality) they all have zero expected value. Further assume |Xi| ≤ a almost surely for all i, and let

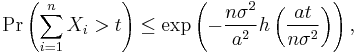

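Then for any $t \ge 0$,

$$\Pr\left( \sum_{i=1}^n X_i > t \right) \le \exp\left( -\frac{n\sigma^2}{a^2}\, h\!\left( \frac{a t}{n\sigma^2} \right) \right),$$

where $h(u) = (1 + u)\log(1 + u) - u$ and $\log$ is the natural logarithm.[2]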
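The right-hand side of the inequality is straightforward to evaluate numerically. The Python sketch below is illustrative only: the function names `h`, `bennett_bound` and `empirical_tail` are hypothetical, and the Monte Carlo check assumes, purely for illustration, that each $X_i$ equals $+a$ or $-a$ with probability 1/2, so that the hypotheses hold with $\sigma^2 = a^2$.

```python
import math
import random


def h(u: float) -> float:
    """h(u) = (1 + u) log(1 + u) - u, defined for u > -1 (natural log)."""
    return (1.0 + u) * math.log1p(u) - u


def bennett_bound(t: float, n: int, sigma2: float, a: float) -> float:
    """Upper bound on P(X_1 + ... + X_n > t) given by Bennett's inequality.

    Assumes independent, zero-mean X_i with |X_i| <= a almost surely and
    sigma2 = (1/n) * (sum of Var(X_i)).
    """
    if t < 0:
        raise ValueError("t must be non-negative")
    if sigma2 <= 0 or a <= 0:
        raise ValueError("sigma2 and a must be positive")
    v = n * sigma2  # total variance of the sum, n * sigma^2
    return math.exp(-(v / a**2) * h(a * t / v))


def empirical_tail(t: float, n: int, a: float, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of P(X_1 + ... + X_n > t).

    Hypothetical test distribution: each X_i is +a or -a with probability 1/2,
    which is zero-mean, bounded by a, and has Var(X_i) = a**2.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        s = sum(a if rng.random() < 0.5 else -a for _ in range(n))
        if s > t:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    n, a, t = 100, 1.0, 20.0
    bound = bennett_bound(t, n, sigma2=a**2, a=a)
    estimate = empirical_tail(t, n, a, trials=20_000)
    print(f"Bennett bound: {bound:.4f}, simulated tail: {estimate:.4f}")
```

With $n = 100$, $a = 1$ and $t = 20$ the bound is about 0.153, while the simulated tail probability for this particular distribution is considerably smaller. Bennett's inequality holds for every distribution satisfying the hypotheses, so it need not be tight for any single one.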

References

- [1] Bennett, G. (1962). "Probability Inequalities for the Sum of Independent Random Variables". Journal of the American Statistical Association 57 (297): 33–45. doi:10.2307/2282438.

- [2] Devroye, L.; Lugosi, G. (2001). Combinatorial Methods in Density Estimation. Springer. p. 11. ISBN 9780387951171. http://books.google.com/books?id=jvT-sUt1HZYC&pg=PA11.