BHHH algorithm

The BHHH algorithm is an optimization algorithm in econometrics, similar to the Gauss–Newton algorithm. Its name is an acronym of the surnames of its four originators: Berndt, B. Hall, R. Hall, and Jerry Hausman.

Usage

If a nonlinear model is fitted to data, its coefficients often have to be estimated through numerical optimization. A number of optimization algorithms share the following general structure. Suppose that the function to be maximized is Q(β). The algorithms are iterative, defining a sequence of approximations βk given by

$$\beta_{k+1} = \beta_k + \lambda_k A_k g_k,$$

$$g_k = \frac{\partial Q}{\partial \beta}(\beta_k),$$

where βk is the coefficient vector at step k, and the step size λk and the matrix Ak are parameters which partly determine the particular algorithm. For the BHHH algorithm, λk is determined by calculations within a given iterative step, involving a line search until a point βk+1 is found satisfying certain criteria. In addition, for the BHHH algorithm, Q has the form

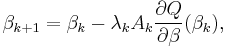

and Ak is calculated using

$$A_{k}=\left[\frac{1}{N}\sum_{i=1}^{N}\frac{\partial \ln Q_i}{\partial \beta}(\beta_{k})\frac{\partial \ln Q_i}{\partial \beta}(\beta_{k})'\right]^{-1},$$

that is, as the inverse of the average outer product of the per-observation gradients. In other cases Ak can have other forms; Newton–Raphson, for example, uses the inverse of the negative Hessian of Q at βk. The BHHH algorithm has the advantage that, if certain conditions apply, convergence of the iterative procedure is guaranteed. Because Ak is the inverse of a sum of outer products, it is positive definite whenever it exists, so every step points uphill and the line search can always find a λk > 0 that increases Q. BHHH also requires only first derivatives.

Literature

- Berndt, E., B. Hall, R. Hall, and J. Hausman (1974), “Estimation and Inference in Nonlinear Structural Models”, Annals of Economic and Social Measurement, Vol. 3, 653–665.

- Luenberger, D. (1972), Introduction to Linear and Nonlinear Programming, Addison-Wesley, Reading, Massachusetts.

- Gill, P., W. Murray, and M. Wright (1981), Practical Optimization, Harcourt Brace and Company, London.

- Sokolov, S.N., and I.N. Silin (1962), “Determination of the coordinates of the minima of functionals by the linearization method”, Joint Institute for Nuclear Research preprint D-810, Dubna.
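The iteration described under Usage can be sketched in Python. This is a minimal illustration under stated assumptions, not a reference implementation. The Poisson regression model and the names `poisson_terms`, `bhhh` and `solve` are hypothetical. The text only says that λk must satisfy "certain criteria", so an Armijo-type step-halving rule is assumed as the line search. The objective is taken to be the average log-likelihood Q/N, so that the 1/N in Ak cancels and λk = 1 is a natural first trial step. Ak gk is obtained by solving a linear system rather than by forming the inverse explicitly.

```python
import math


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("outer-product matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def poisson_terms(beta, xs, ys):
    """Hypothetical model: Poisson regression with mean exp(x'beta).
    Returns the per-observation log-likelihoods ln Q_i and their gradients."""
    logliks, scores = [], []
    for x, y in zip(xs, ys):
        eta = sum(b * xj for b, xj in zip(beta, x))
        mu = math.exp(eta)
        logliks.append(y * eta - mu - math.lgamma(y + 1))
        scores.append([(y - mu) * xj for xj in x])
    return logliks, scores


def bhhh(terms, beta0, data, tol=1e-9, max_iter=200, c=1e-4):
    """Maximize the average of the per-observation log-likelihoods from
    `terms` using BHHH directions and an assumed step-halving line search."""
    beta = list(beta0)
    p = len(beta)
    for _ in range(max_iter):
        logliks, scores = terms(beta, *data)
        n = len(scores)
        q = sum(logliks) / n
        grad = [sum(s[j] for s in scores) / n for j in range(p)]
        if max(abs(g) for g in grad) < tol:
            break
        # Average outer product of the scores; A_k is its inverse.
        opg = [[sum(s[i] * s[j] for s in scores) / n for j in range(p)]
               for i in range(p)]
        direction = solve(opg, grad)  # A_k g_k without forming A_k
        slope = sum(g * d for g, d in zip(grad, direction))
        lam = 1.0
        while True:
            trial = [b + lam * d for b, d in zip(beta, direction)]
            q_trial = sum(terms(trial, *data)[0]) / n
            if q_trial >= q + c * lam * slope:  # sufficient increase
                break
            lam *= 0.5
            if lam < 1e-12:  # no acceptable step: return current point
                return beta
        beta = trial
    return beta


# Intercept plus a 0/1 regressor; the group means of y are 2 and 4.
xs = [[1.0, 0.0]] * 4 + [[1.0, 1.0]] * 4
ys = [0, 4, 2, 2, 2, 6, 2, 6]
beta = bhhh(poisson_terms, [0.0, 0.0], (xs, ys))
print([round(b, 4) for b in beta])  # [0.6931, 0.6931], i.e. [ln 2, ln 2]
```

For this data the maximum likelihood estimates are known in closed form. The intercept is the log of the mean count in the first group, and the slope is the log ratio of the two group means. This makes the sketch easy to check.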