0.999...

In mathematics, the repeating decimal 0.999... (which may also be written as $0.\overline{9}$, $0.\dot{9}$, 0.(9), or as "0.9" followed by any number of 9s in the repeating decimal) denotes a real number that can be shown to be the number one. In other words, the symbols 0.999... and 1 represent the same number. Proofs of this equality have been formulated with varying degrees of mathematical rigor, taking into account preferred development of the real numbers, background assumptions, historical context, and target audience.


In fact, every nonzero, terminating decimal has an equal twin representation with trailing 9s, such as 8.32 and 8.31999... The terminating decimal representation is almost always preferred, contributing to the misconception that it is the only representation. The same phenomenon occurs in all other bases or in any similar representation of the real numbers.[1]
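The trailing-9s twin can be produced mechanically: subtract one unit in the last nonzero decimal place, then append endless 9s. The helper below is a hypothetical sketch (the name `nines_twin` is ours). It assumes a plain, unsigned, nonzero decimal string with no exponent, and it writes the infinite tail as a literal "999...".

```python
from decimal import Decimal


def nines_twin(terminating: str) -> str:
    """Trailing-9s twin of a nonzero terminating decimal, e.g. "8.32" -> "8.31999...".

    Assumes a plain unsigned decimal string such as "1", "10" or "0.250".
    """
    int_part, _, frac_part = terminating.partition(".")
    frac_part = frac_part.rstrip("0")        # "0.250" and "0.25" name the same number
    k = len(frac_part)                       # number of significant fractional places
    whole = int_part or "0"
    value = Decimal(f"{whole}.{frac_part}") if k else Decimal(whole)
    if value <= 0:
        raise ValueError("only nonzero values have a trailing-9s twin")
    lowered = value - Decimal(1).scaleb(-k)  # subtract one unit in the last place
    text = f"{lowered:.{k}f}"                # keep exactly k fractional digits
    return (text if k else text + ".") + "999..."


print(nines_twin("8.32"))   # 8.31999...
print(nines_twin("1"))      # 0.999...
print(nines_twin("0.25"))   # 0.24999...
```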

The equality of 0.999... and 1 is closely related to the absence of nonzero infinitesimals in the real number system, the most commonly used system in mathematical analysis. Some alternative number systems, such as the hyperreals, do contain nonzero infinitesimals. In most such number systems the standard interpretation of the expression 0.999... makes it equal to 1, but in some of these number systems, the symbol "0.999..." admits other interpretations that fall infinitesimally short of 1.

The equality 0.999... = 1 has long been accepted by mathematicians and taught in textbooks. Nonetheless, some students question or reject it. Some can be persuaded by an appeal to authority from textbooks and teachers, or by arithmetic reasoning, to accept that the two are equal.


Algebraic proofs

Algebraic proofs showing that 0.999... represents the number 1 use concepts such as fractions, long division, and digit manipulation to build transformations preserving equality from 0.999... to 1.

Fractions and long division

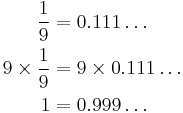

One reason that infinite decimals are a necessary extension of finite decimals is to represent fractions. Using long division, a simple division of integers like 1⁄9 becomes a recurring decimal, 0.111…, in which the digits repeat without end. This decimal yields a quick proof for 0.999… = 1. Multiplying 0.111… by 9 turns every digit 1 into a 9 (no carries occur), so 9 × 0.111… equals 0.999…, while 9 × 1⁄9 equals 1. Hence 0.999… = 1:

$$
\begin{aligned}
\tfrac{1}{9} &= 0.111\ldots \\
9 \times \tfrac{1}{9} &= 9 \times 0.111\ldots \\
1 &= 0.999\ldots
\end{aligned}
$$

Another form of this proof multiplies ⅓ = 0.333… by 3.
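The digit pattern behind these arguments can be checked with ordinary long division. The sketch below (the helper name `long_division_digits` is ours) computes the first few digits of 1⁄9 and 1⁄3 and multiplies them digit by digit. Since 9 × 1 and 3 × 3 are both 9, no carries arise. It only inspects finitely many digits, so it illustrates the pattern rather than proving the infinite statement.

```python
def long_division_digits(numerator: int, denominator: int, count: int) -> list[int]:
    """First `count` digits after the decimal point of numerator/denominator,
    computed by schoolbook long division (assumes 0 <= numerator < denominator)."""
    digits = []
    remainder = numerator
    for _ in range(count):
        remainder *= 10                          # bring down a zero
        digits.append(remainder // denominator)  # next quotient digit
        remainder %= denominator                 # usual rule: 0 <= remainder < denominator
    return digits


ninths = long_division_digits(1, 9, 8)
thirds = long_division_digits(1, 3, 8)
print(ninths)                     # [1, 1, 1, 1, 1, 1, 1, 1]
print([9 * d for d in ninths])    # [9, 9, 9, 9, 9, 9, 9, 9]
print([3 * d for d in thirds])    # [9, 9, 9, 9, 9, 9, 9, 9]
```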

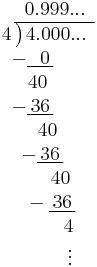

One can give another proof using a modified form of the usual division algorithm,[2] in which the long division is written out so that its quotient digits come out as 0.999... rather than 1.
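The following is one way such a modification can work. It is an assumption on our part, not necessarily the cited variant: require every remainder r to satisfy 0 < r ≤ d instead of the usual 0 ≤ r < d, where d is the divisor. Under that rule, dividing 1 by 1 gives quotient digit 0 with remainder 1, and every later step gives digit 9 with remainder 1 again. The function name `modified_long_division` is hypothetical.

```python
def modified_long_division(numerator: int, divisor: int, places: int) -> tuple[int, list[int]]:
    """Long division in which every remainder r satisfies 0 < r <= divisor.

    (Ordinary long division uses 0 <= r < divisor.) Assumes positive integers.
    Returns the integer part and the first `places` digits after the point.
    """
    if numerator <= 0 or divisor <= 0:
        raise ValueError("this sketch only handles positive integers")
    # largest quotient q whose remainder numerator - q*divisor is still positive
    integer_part = (numerator - 1) // divisor
    remainder = numerator - integer_part * divisor
    digits = []
    for _ in range(places):
        n = remainder * 10                  # bring down a zero
        q = (n - 1) // divisor              # same rule: keeps 0 < remainder <= divisor
        remainder = n - q * divisor
        digits.append(q)                    # q <= 9 because n <= 10 * divisor
    return integer_part, digits


print(modified_long_division(1, 1, 6))   # (0, [9, 9, 9, 9, 9, 9])
print(modified_long_division(1, 4, 6))   # (0, [2, 4, 9, 9, 9, 9])
```

The same rule applied to 1 ÷ 4 yields 0.24999..., the trailing-9s twin of 0.25.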

Digit manipulation

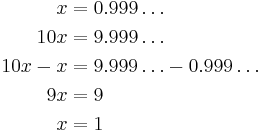

When a number in decimal notation is multiplied by 10, the digits do not change but each digit moves one place to the left. Thus 10 × 0.999... equals 9.999..., which is 9 greater than the original number. To see this, consider that in subtracting 0.999... from 9.999..., each of the digits after the decimal separator cancels, i.e. the result is 9 − 9 = 0 for each such digit. The final step uses algebra. Letting $x = 0.999\ldots$, one has

$$
\begin{aligned}
10x &= 9.999\ldots = 9 + x \\
9x &= 9 \\
x &= 1.
\end{aligned}
$$
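The same multiply-and-subtract step converts any repeating decimal into a fraction. Suppose x = 0.PRRR..., where P is a non-repeating block of length a and R is a repeating block of length b. Then 10^(a+b)·x and 10^a·x have identical digits after the point, so their difference is an integer. A minimal Python sketch follows; `repeating_decimal_to_fraction` is a hypothetical helper name.

```python
from fractions import Fraction


def repeating_decimal_to_fraction(prefix: str, repetend: str) -> Fraction:
    """Exact value of 0.<prefix><repetend><repetend>... in base 10.

    prefix   -- digits after the point that occur once (may be "")
    repetend -- the block that repeats forever (non-empty)
    """
    a, b = len(prefix), len(repetend)
    # 10**(a+b) * x = <prefix><repetend>.<repetend>...   and
    # 10**a     * x = <prefix>.<repetend>...
    # share the same digits after the point, so subtracting leaves an integer.
    difference = int(prefix + repetend) - (int(prefix) if prefix else 0)
    return Fraction(difference, 10 ** (a + b) - 10 ** a)


print(repeating_decimal_to_fraction("", "9"))    # 1
print(repeating_decimal_to_fraction("", "3"))    # 1/3
print(repeating_decimal_to_fraction("24", "9"))  # 1/4
```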

Discussion

Although these proofs demonstrate that 0.999... = 1, the extent to which they explain the equation depends on the audience. In introductory arithmetic, such proofs help explain why 0.999... = 1 but 0.333... < 0.4. And in introductory algebra, the proofs help explain why the general method of converting between fractions and repeating decimals works. But the proofs shed little light on the fundamental relationship between decimals and the numbers they represent, which underlies the question of how two different decimals can be said to be equal at all.[3] William Byers argues that a student who agrees that 0.999... = 1 because of the above proofs, but hasn't resolved the ambiguity, doesn't really understand the equation.[4] Fred Richman argues that the first argument "gets its force from the fact that most people have been indoctrinated to accept the first equation without thinking".[5]

Once a representation scheme is defined, it can be used to justify the rules of decimal arithmetic used in the above proofs. Moreover, one can directly demonstrate that the decimals 0.999... and 1.000... both represent the same real number; it is built into the definition. This is done below.

Analytic proofs

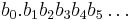

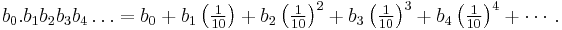

Since the question of 0.999... does not affect the formal development of mathematics, it can be postponed until one proves the standard theorems of real analysis. One requirement is to characterize real numbers that can be written in decimal notation, consisting of an optional sign, a finite sequence of any number of digits forming an integer part, a decimal separator, and a sequence of digits forming a fractional part. For the purpose of discussing 0.999..., the integer part can be summarized as b0 and one can neglect negatives, so a decimal expansion has the form

$$b_0.b_1b_2b_3b_4b_5\ldots$$

Note that the fractional part, unlike the integer part, is not limited to a finite number of digits. This is a positional notation, so for example the 5 in 500 contributes ten times as much as the 5 in 50, and the 5 in 0.05 contributes one tenth as much as the 5 in 0.5.

Infinite series and sequences

Perhaps the most common development of decimal expansions is to define them as sums of infinite series. In general:

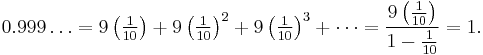

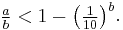

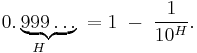

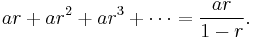

For 0.999... one can apply the convergence theorem concerning geometric series:[6]

- If $|r| < 1$, then $ar + ar^2 + ar^3 + \cdots = \dfrac{ar}{1 - r}$.

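Since 0.999... is such a sum with a = 9 and common ratio r = 1⁄10, the theorem makes short work of the question:

$$0.999\ldots = 9\left(\tfrac{1}{10}\right) + 9\left(\tfrac{1}{10}\right)^2 + 9\left(\tfrac{1}{10}\right)^3 + \cdots = \frac{9\left(\tfrac{1}{10}\right)}{1 - \tfrac{1}{10}} = 1.$$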
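Exact rational arithmetic makes the geometric-series formula ar + ar² + ar³ + ⋯ = ar/(1 − r) (for |r| < 1) easy to check with a = 9 and r = 1⁄10. The partial sums ar + ⋯ + arⁿ agree with the finite closed form ar(1 − rⁿ)/(1 − r), and the limiting value ar/(1 − r) is exactly 1. This is a sketch using Python's `fractions` module, and the function names are illustrative.

```python
from fractions import Fraction

a, r = 9, Fraction(1, 10)


def partial_sum(n: int) -> Fraction:
    """a*r + a*r**2 + ... + a*r**n, added term by term."""
    return sum((a * r ** k for k in range(1, n + 1)), Fraction(0))


def finite_closed_form(n: int) -> Fraction:
    """Standard finite geometric sum a*r*(1 - r**n) / (1 - r)."""
    return a * r * (1 - r ** n) / (1 - r)


limit = a * r / (1 - r)   # the theorem's value, valid because |r| < 1
print(limit)              # 1
for n in range(1, 5):
    print(n, partial_sum(n), 1 - partial_sum(n))
```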

This proof (actually, that 10 equals 9.999...) appears as early as 1770 in Leonhard Euler's Elements of Algebra.[7]

The sum of a geometric series is itself a result even older than Euler. A typical 18th-century derivation used a term-by-term manipulation similar to the algebraic proof given above, and as late as 1811, Bonnycastle's textbook An Introduction to Algebra uses such an argument for geometric series to justify the same maneuver on 0.999...[8] A 19th-century reaction against such liberal summation methods resulted in the definition that still dominates today: the sum of a series is defined to be the limit of the sequence of its partial sums. A corresponding proof of the theorem explicitly computes that sequence; it can be found in any proof-based introduction to calculus or analysis.[9]

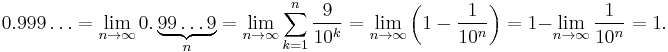

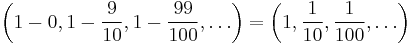

A sequence (x0, x1, x2, ...) has a limit x if the distance |x − xn| becomes arbitrarily small as n increases. The statement that 0.999... = 1 can itself be interpreted and proven as a limit:[10]

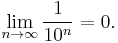

The last step, that 1⁄10ⁿ → 0 as n → ∞, is often justified by the Archimedean property of the real numbers. This limit-based attitude towards 0.999... is often put in more evocative but less precise terms. For example, the 1846 textbook The University Arithmetic explains, ".999 +, continued to infinity = 1, because every annexation of a 9 brings the value closer to 1"; the 1895 Arithmetic for Schools says, "...when a large number of 9s is taken, the difference between 1 and .99999... becomes inconceivably small".[11] Such heuristics are often interpreted by students as implying that 0.999... itself is less than 1.
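The Archimedean step can be made concrete: for any positive rational ε, some stage 0.99...9 lies within ε of 1. The hypothetical helper below searches for the first such stage using exact fractions. The loop terminates because 1 − xₙ = 1⁄10ⁿ eventually drops below any positive rational.

```python
from fractions import Fraction


def stage(n: int) -> Fraction:
    """x_n = 0.99...9 with n nines, as an exact rational."""
    return sum((Fraction(9, 10 ** k) for k in range(1, n + 1)), Fraction(0))


def first_stage_within(eps: Fraction) -> int:
    """Smallest n with 1 - x_n < eps (assumes eps > 0)."""
    n = 0
    while 1 - stage(n) >= eps:
        n += 1
    return n


print(first_stage_within(Fraction(1, 1000)))   # 4, since 1 - x_3 = 1/1000 is not < 1/1000
```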

Nested intervals and least upper bounds

The series definition above is a simple way to define the real number named by a decimal expansion. A complementary approach is tailored to the opposite process: for a given real number, define the decimal expansion(s) to name it.

If a real number x is known to lie in the closed interval [0, 10] (i.e., it is greater than or equal to 0 and less than or equal to 10), one can imagine dividing that interval into ten pieces that overlap only at their endpoints: [0, 1], [1, 2], [2, 3], and so on up to [9, 10]. The number x must belong to one of these; if it belongs to [2, 3] then one records the digit "2" and subdivides that interval into [2, 2.1], [2.1, 2.2], ..., [2.8, 2.9], [2.9, 3]. Continuing this process yields an infinite sequence of nested intervals, labeled by an infinite sequence of digits b0, b1, b2, b3, ..., and one writes

In this formalism, the identities 1 = 0.999... and 1 = 1.000... reflect, respectively, the fact that 1 lies in both [0, 1] and [1, 2], so one can choose either subinterval when finding its digits. To ensure that this notation does not abuse the "=" sign, one needs a way to reconstruct a unique real number for each decimal. This can be done with limits, but other constructions continue with the ordering theme.[12]
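The subdivision procedure can be sketched with exact fractions, including the freedom to choose either piece when x falls on a shared endpoint. The function `interval_digits` and its `prefer_lower` flag are hypothetical names introduced for this illustration. With x = 1, the two choices reproduce the digit strings of 1.000... and 0.999... respectively.

```python
from fractions import Fraction


def interval_digits(x, count: int, prefer_lower: bool) -> list[int]:
    """Digits b0, b1, b2, ... of x in [0, 10] from repeated ten-way subdivision.

    Each pass cuts the current interval [lo, lo + 10*width] into the ten closed
    pieces [lo + d*width, lo + (d+1)*width]. When x sits on an endpoint shared by
    two pieces, prefer_lower picks the left piece; otherwise the right one is used.
    """
    x = Fraction(x)
    lo, width = Fraction(0), Fraction(10)
    digits = []
    for _ in range(count):
        width /= 10
        d = int((x - lo) // width)          # floor: the right-hand piece on a boundary
        if prefer_lower and d > 0 and x == lo + d * width:
            d -= 1                          # shared endpoint: take the left piece instead
        d = min(d, 9)                       # x equal to the top endpoint is in piece 9
        digits.append(d)
        lo += d * width
    return digits


print(interval_digits(1, 6, prefer_lower=False))   # [1, 0, 0, 0, 0, 0]
print(interval_digits(1, 6, prefer_lower=True))    # [0, 9, 9, 9, 9, 9]
```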

One straightforward choice is the nested intervals theorem, which guarantees that given a sequence of nested, closed intervals whose lengths become arbitrarily small, the intervals contain exactly one real number in their intersection. So b0.b1b2b3... is defined to be the unique number contained within all the intervals [b0, b0 + 1], [b0.b1, b0.b1 + 0.1], and so on. 0.999... is then the unique real number that lies in all of the intervals [0, 1], [0.9, 1], [0.99, 1], and [0.99...9, 1] for every finite string of 9s. Since 1 is an element of each of these intervals, 0.999... = 1.[13]

The Nested Intervals Theorem is usually founded upon a more fundamental characteristic of the real numbers: the existence of least upper bounds or suprema. To directly exploit these objects, one may define b0.b1b2b3... to be the least upper bound of the set of approximants {b0, b0.b1, b0.b1b2, ...}.[14] One can then show that this definition (or the nested intervals definition) is consistent with the subdivision procedure, implying 0.999... = 1 again. Tom Apostol concludes,

The fact that a real number might have two different decimal representations is merely a reflection of the fact that two different sets of real numbers can have the same supremum.[15]

Proofs from the construction of the real numbers

Some approaches explicitly define real numbers to be certain structures built upon the rational numbers, using axiomatic set theory. The natural numbers – 0, 1, 2, 3, and so on – begin with 0 and continue upwards, so that every number has a successor. One can extend the natural numbers with their negatives to give all the integers, and to further extend to ratios, giving the rational numbers. These number systems are accompanied by the arithmetic of addition, subtraction, multiplication, and division. More subtly, they include ordering, so that one number can be compared to another and found to be less than, greater than, or equal to another number.

The step from rationals to reals is a major extension. There are at least two popular ways to achieve this step, both published in 1872: Dedekind cuts and Cauchy sequences. Proofs that 0.999... = 1 which directly use these constructions are not found in textbooks on real analysis, where the modern trend for the last few decades has been to use an axiomatic analysis. Even when a construction is offered, it is usually applied towards proving the axioms of the real numbers, which then support the above proofs. However, several authors express the idea that starting with a construction is more logically appropriate, and the resulting proofs are more self-contained.[16]

Dedekind cuts

In the Dedekind cut approach, each real number x is defined as the infinite set of all rational numbers that are less than x.[17] In particular, the real number 1 is the set of all rational numbers that are less than 1.[18] Every positive decimal expansion easily determines a Dedekind cut: the set of rational numbers which are less than some stage of the expansion. So the real number 0.999... is the set of rational numbers r such that r < 0, or r < 0.9, or r < 0.99, or r is less than some other number of the form $1 - \left(\tfrac{1}{10}\right)^n$.[19]

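Every element of 0.999... is less than 1, so it is an element of the real number 1. Conversely, an element of 1 is a rational number $\tfrac{a}{b} < 1$ (with b a positive integer), which implies

$$\frac{a}{b} < 1 - \left(\frac{1}{10}\right)^b,$$

because $1 - \tfrac{a}{b} = \tfrac{b - a}{b} \ge \tfrac{1}{b} > \left(\tfrac{1}{10}\right)^b$. So every element of 1 is also an element of 0.999...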

Since 0.999... and 1 contain the same rational numbers, they are the same set: 0.999... = 1.
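The two inclusions can be phrased as a search for a witness. A rational r belongs to the cut for 0.999... exactly when r < 1 − (1⁄10)ⁿ for some n ≥ 0. The hypothetical helper below returns such an n for every rational r < 1 and `None` for r ≥ 1, which mirrors the two directions of the proof.

```python
from fractions import Fraction
from typing import Optional


def nines_witness(r) -> Optional[int]:
    """Return some n >= 0 with r < 1 - (1/10)**n, or None if there is none.

    Such an n exists exactly when r is in the cut for 0.999... (n = 0 covers r < 0).
    """
    r = Fraction(r)
    if r >= 1:
        return None                 # every stage 1 - (1/10)**n is below 1 <= r
    n = 0
    while r >= 1 - Fraction(1, 10 ** n):
        n += 1                      # ends because 1 - r > 0 (Archimedean property)
    return n


for r in [Fraction(-1, 2), Fraction(95, 100), Fraction(999_999, 1_000_000), Fraction(1)]:
    print(r, r < 1, nines_witness(r))
```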

The definition of real numbers as Dedekind cuts was first published by Richard Dedekind in 1872.[20] The above approach to assigning a real number to each decimal expansion is due to an expository paper titled "Is 0.999 ... = 1?" by Fred Richman in Mathematics Magazine, which is targeted at teachers of collegiate mathematics, especially at the junior/senior level, and their students.[21] Richman notes that taking Dedekind cuts in any dense subset of the rational numbers yields the same results; in particular, he uses decimal fractions, for which the proof is more immediate. He also notes that typically the definitions allow { x : x < 1 } to be a cut but not { x : x ≤ 1 } (or vice versa). "Why do that? Precisely to rule out the existence of distinct numbers 0.9* and 1. [...] So we see that in the traditional definition of the real numbers, the equation 0.9* = 1 is built in at the beginning."[22] A further modification of the procedure leads to a different structure where the two are not equal. Although it is consistent, many of the common rules of decimal arithmetic no longer hold, for example the fraction 1/3 has no representation; see "Alternative number systems" below.

Cauchy sequences

Another approach to constructing the real numbers uses the ordering of rationals less directly. First, the distance between x and y is defined as the absolute value |x − y|, where the absolute value |z| is defined as the maximum of z and −z, thus never negative. Then the reals are defined to be the sequences of rationals that have the Cauchy sequence property using this distance. That is, in the sequence (x0, x1, x2, ...), a mapping from natural numbers to rationals, for any positive rational δ there is an N such that |xm − xn| ≤ δ for all m, n > N. (The distance between terms becomes smaller than any positive rational.)[23]

If (xn) and (yn) are two Cauchy sequences, then they are defined to be equal as real numbers if the sequence (xn − yn) has the limit 0. Truncations of the decimal number b0.b1b2b3... generate a sequence of rationals which is Cauchy; this is taken to define the real value of the number.[24] Thus in this formalism the task is to show that the sequence of rational numbers

$$\left(1 - 0,\ 1 - \tfrac{9}{10},\ 1 - \tfrac{99}{100},\ \ldots\right) = \left(1,\ \tfrac{1}{10},\ \tfrac{1}{100},\ \ldots\right)$$

has the limit 0. Considering the nth term of the sequence, for n = 0, 1, 2, ..., it must therefore be shown that

$$\lim_{n \to \infty} \frac{1}{10^n} = 0.$$

This limit is plain;[25] one possible proof is that for ε = a/b > 0 one can take N = b in the definition of the limit of a sequence. So again 0.999... = 1.
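The choice N = b can be spot-checked. For ε = a/b and any n > b, one has 10ⁿ > n > b, so 1⁄10ⁿ < 1/b ≤ a/b = ε. The sketch below (names are illustrative) builds each term 1 − 0.99...9 from the truncations and tests the bound over a finite range of n. This is a sanity check rather than a proof.

```python
from fractions import Fraction


def truncation(n: int) -> Fraction:
    """0.99...9 with n nines."""
    return sum((Fraction(9, 10 ** k) for k in range(1, n + 1)), Fraction(0))


def term(n: int) -> Fraction:
    """n-th term of (1 - 0, 1 - 9/10, 1 - 99/100, ...)."""
    return 1 - truncation(n)


def bound_holds(eps: Fraction, extra: int = 10) -> bool:
    """Check term(n) < eps for n = N+1, ..., N+extra, with N = eps.denominator."""
    N = eps.denominator
    return all(term(n) < eps for n in range(N + 1, N + extra + 1))


print(all(bound_holds(Fraction(a, b)) for a in range(1, 6) for b in range(1, 20)))  # True
```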

The definition of real numbers as Cauchy sequences was first published separately by Eduard Heine and Georg Cantor, also in 1872.[20] The above approach to decimal expansions, including the proof that 0.999... = 1, closely follows Griffiths & Hilton's 1970 work A comprehensive textbook of classical mathematics: A contemporary interpretation. The book is written specifically to offer a second look at familiar concepts in a contemporary light.[26]

Generalizations

The result that 0.999... = 1 generalizes readily in two ways. First, every nonzero number with a finite decimal notation (equivalently, endless trailing 0s) has a counterpart with trailing 9s. For example, 0.24999... equals 0.25, exactly as in the special case considered. These numbers are exactly the decimal fractions, and they are dense.[27]

Second, a comparable theorem applies in each radix or base. For example, in base 2 (the binary numeral system) 0.111... equals 1, and in base 3 (the ternary numeral system) 0.222... equals 1. Textbooks of real analysis are likely to skip the example of 0.999... and present one or both of these generalizations from the start.[28]
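The same bookkeeping works in any integer base b ≥ 2. The digit b − 1 repeated n times falls short of 1 by exactly b⁻ⁿ, and the geometric series with a = b − 1 and r = 1/b sums to 1. A small exact-arithmetic check follows (the helper names are ours).

```python
from fractions import Fraction


def top_digit_truncation(base: int, n: int) -> Fraction:
    """0.(b-1)(b-1)...(b-1) in base b with n digits, e.g. 0.111 in base 2 for n = 3."""
    return sum((Fraction(base - 1, base ** k) for k in range(1, n + 1)), Fraction(0))


def series_value(base: int) -> Fraction:
    """Geometric series with a = b - 1 and ratio r = 1/b: a*r / (1 - r)."""
    r = Fraction(1, base)
    return (base - 1) * r / (1 - r)


for base in (2, 3, 10, 16):
    gaps = [1 - top_digit_truncation(base, n) for n in range(1, 5)]
    print(base, series_value(base), gaps)
```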

Alternative representations of 1 also occur in non-integer bases. For example, in the golden ratio base, the two standard representations are 1.000... and 0.101010..., and there are infinitely many more representations that include adjacent 1s. Generally, for almost all q between 1 and 2, there are uncountably many base-q expansions of 1. On the other hand, there are still uncountably many q (including all natural numbers greater than 1) for which there is only one base-q expansion of 1, other than the trivial 1.000.... This result was first obtained by Paul Erdős, Miklós Horváth, and István Joó around 1990. In 1998 Vilmos Komornik and Paola Loreti determined the smallest such base, the Komornik–Loreti constant q = 1.787231650.... In this base, 1 = 0.11010011001011010010110011010011...; the digits are given by the Thue–Morse sequence, which does not repeat.[29]
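These two expansions can be checked numerically in floating point. The sketch below evaluates 0.101010... in base φ. It then locates the Komornik–Loreti constant by bisection, as the base q in which the Thue–Morse digits t₁t₂t₃... sum to 1. It truncates after 200 digits, an assumption that is harmless here because q⁻²⁰⁰ is far below double precision. Names are illustrative, and the float results are only approximate.

```python
import math


def value_in_base(q: float, digits: list[int]) -> float:
    """Sum of d_k * q**(-k) for the digits d_1, d_2, ... after the point."""
    return sum(d * q ** -(k + 1) for k, d in enumerate(digits))


phi = (1 + math.sqrt(5)) / 2
print(value_in_base(phi, [1, 0] * 100))       # 0.101010... in base phi, close to 1.0

# Thue-Morse digits t_1, t_2, ...: parity of the number of 1 bits in k.
thue_morse = [bin(k).count("1") % 2 for k in range(1, 201)]


def komornik_loreti(lo: float = 1.5, hi: float = 2.0) -> float:
    """Bisection for the base q in (lo, hi) with 0.t_1 t_2 t_3... = 1 in base q.

    value_in_base(q, thue_morse) decreases as q grows, and it is above 1 at
    q = 1.5 and below 1 at q = 2, so the root is bracketed.
    """
    for _ in range(100):
        mid = (lo + hi) / 2
        if value_in_base(mid, thue_morse) > 1:
            lo = mid        # value too large: the base must be bigger
        else:
            hi = mid
    return (lo + hi) / 2


print(komornik_loreti())   # approximately 1.787231650
```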

A more far-reaching generalization addresses the most general positional numeral systems. They too have multiple representations, and in some sense the difficulties are even worse. For example:[30]

- In the balanced ternary system, 1/2 = 0.111... = $1.\bar{1}\bar{1}\bar{1}\ldots$, where $\bar{1}$ denotes the digit −1.

- In the reverse factorial number system (using bases 2,3,4,... for positions after the decimal point), 1 = 1.000... = 0.1234....
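Exact partial sums show both balanced-ternary strings closing in on 1/2 from opposite sides, and 0.1234... in the reverse factorial system approaching 1 from below. The helpers below are hypothetical. The balanced ternary digit −1 is passed as the integer -1, and position k in the reverse factorial system is assumed to have place value 1/(k+1)!.

```python
from fractions import Fraction
from math import factorial


def balanced_ternary(integer_part: int, digits: list[int]) -> Fraction:
    """Value of integer_part.d1 d2 d3 ... with balanced ternary digits -1, 0, 1."""
    return integer_part + sum(
        (Fraction(d, 3 ** k) for k, d in enumerate(digits, start=1)), Fraction(0)
    )


def reverse_factorial(integer_part: int, digits: list[int]) -> Fraction:
    """Position k after the point has base k + 1, hence place value 1/(k + 1)!."""
    return integer_part + sum(
        (Fraction(d, factorial(k + 1)) for k, d in enumerate(digits, start=1)), Fraction(0)
    )


for n in (1, 2, 5, 10):
    low = balanced_ternary(0, [1] * n)                   # 0.111... truncated
    high = balanced_ternary(1, [-1] * n)                 # 1 followed by n digits -1
    fact = reverse_factorial(0, list(range(1, n + 1)))   # 0.123...n
    print(n, low, high, fact)
```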

Impossibility of unique representation

That all these different number systems suffer from multiple representations for some real numbers can be attributed to a fundamental difference between the real numbers as an ordered set and collections of infinite strings of symbols, ordered lexicographically. Indeed the following two properties account for the difficulty:

- If an interval of the real numbers is partitioned into two non-empty parts L, R, such that every element of L is (strictly) less than every element of R, then either L contains a largest element or R contains a smallest element, but not both.

- The collection of infinite strings of symbols taken from any finite "alphabet", lexicographically ordered, can be partitioned into two non-empty parts L, R, such that every element of L is less than every element of R, while L contains a largest element and R contains a smallest element. Indeed it suffices to take two finite prefixes (initial substrings) p1, p2 of elements from the collection such that they differ only in their final symbol, for which symbol they have successive values, and take for L the set of all strings in the collection whose corresponding prefix is at most p1, and for R the remainder, the strings in the collection whose corresponding prefix is at least p2. Then L has a largest element, starting with p1 and choosing the largest available symbol in all following positions, while R has a smallest element obtained by following p2 by the smallest symbol in all positions.

The first point follows from basic properties of the real numbers: L has a supremum and R has an infimum, which are easily seen to be equal; being a real number it either lies in R or in L, but not both since L and R are supposed to be disjoint. The second point generalizes the 0.999.../1.000... pair obtained for p1 = "0", p2 = "1". In fact one need not use the same alphabet for all positions (so that for instance mixed radix systems can be included) or consider the full collection of possible strings; the only important points are that at each position a finite set of symbols (which may even depend on the previous symbols) can be chosen from (this is needed to ensure maximal and minimal choices), and that making a valid choice for any position should result in a valid infinite string (so one should not allow "9" in each position while forbidding an infinite succession of "9"s). Under these assumptions, the above argument shows that an order preserving map from the collection of strings to an interval of the real numbers cannot be a bijection: either some numbers do not correspond to any string, or some of them correspond to more than one string.

Marko Petkovšek has proven that for any positional system that names all the real numbers, the set of reals with multiple representations is always dense. He calls the proof "an instructive exercise in elementary point-set topology"; it involves viewing sets of positional values as Stone spaces and noticing that their real representations are given by continuous functions.[31]

Applications

One application of 0.999... as a representation of 1 occurs in elementary number theory. In 1802, H. Goodwin published an observation on the appearance of 9s in the repeating-decimal representations of fractions whose denominators are certain prime numbers. Examples include:

- 1/7 = 0.142857142857... and 142 + 857 = 999.

- 1/73 = 0.0136986301369863... and 0136 + 9863 = 9999.
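These examples can be reproduced by computing the repeating block of 1/p with long division and adding its two halves. This sketch assumes p is a prime other than 2 and 5 whose period has even length, which is the setting of Midy's theorem. The helper names are ours.

```python
def repetend(numerator: int, p: int) -> list[int]:
    """Repeating block of numerator/p by long division.

    Assumes 0 < numerator < p and gcd(p, 10) == 1, so the expansion is purely
    periodic: the block ends when the starting remainder comes back.
    """
    digits, remainder = [], numerator
    while True:
        remainder *= 10
        digits.append(remainder // p)
        remainder %= p
        if remainder == numerator:
            return digits


def midy_halves_sum(p: int) -> int:
    """Split the period of 1/p into two halves (assumes even length) and add them."""
    block = repetend(1, p)
    half = len(block) // 2
    first = int("".join(map(str, block[:half])))
    second = int("".join(map(str, block[half:])))
    return first + second


print(repetend(1, 7), midy_halves_sum(7))      # [1, 4, 2, 8, 5, 7] 999
print(midy_halves_sum(73))                     # 9999
```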

E. Midy proved a general result about such fractions, now called Midy's theorem, in 1836. The publication was obscure, and it is unclear if his proof directly involved 0.999..., but at least one modern proof by W. G. Leavitt does. If one can prove that a decimal of the form 0.b1b2b3... is a positive integer, then it must be 0.999..., which is then the source of the 9s in the theorem.[32] Investigations in this direction can motivate such concepts as greatest common divisors, modular arithmetic, Fermat primes, order of group elements, and quadratic reciprocity.[33]

Returning to real analysis, the base-3 analogue 0.222... = 1 plays a key role in a characterization of one of the simplest fractals, the middle-thirds Cantor set:

- A point in the unit interval lies in the Cantor set if and only if it can be represented in ternary using only the digits 0 and 2.

The nth digit of the representation reflects the position of the point in the nth stage of the construction. For example, the point 2⁄3 is given the usual representation of 0.2 or 0.2000..., since it lies to the right of the first deletion and to the left of every deletion thereafter. The point 1⁄3 is represented not as 0.1 but as 0.0222..., since it lies to the left of the first deletion and to the right of every deletion thereafter.[34]
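The characterization gives a membership test for rational points. At each stage the point must lie in the left third (digit 0) or the right third (digit 2), and a rational point's orbit under x ↦ 3x or x ↦ 3x − 2 eventually repeats. The sketch below uses a hypothetical function name. It returns the non-repeating and repeating ternary digits, or `None` if the point falls in a deleted middle third.

```python
from fractions import Fraction
from typing import Optional


def cantor_ternary(x) -> Optional[tuple[list[int], list[int]]]:
    """Ternary digits of a rational x in [0, 1] using only 0 and 2, if possible.

    Returns (prefix, cycle), meaning 0.prefix cycle cycle cycle ... in base 3,
    or None when some stage lands strictly inside a deleted middle third.
    """
    x = Fraction(x)
    first_seen = {}
    digits = []
    while x not in first_seen:
        first_seen[x] = len(digits)
        if x <= Fraction(1, 3):
            digits.append(0)        # left third: digit 0, then zoom in
            x = 3 * x
        elif x >= Fraction(2, 3):
            digits.append(2)        # right third: digit 2, then zoom in
            x = 3 * x - 2
        else:
            return None             # strictly inside (1/3, 2/3): removed
    start = first_seen[x]           # a rational orbit must eventually repeat
    return digits[:start], digits[start:]


print(cantor_ternary(Fraction(1, 3)))   # ([0], [2])  i.e. 0.0222...
print(cantor_ternary(Fraction(2, 3)))   # ([2], [0])  i.e. 0.2000...
print(cantor_ternary(Fraction(1, 2)))   # None
```

For x = 1 the function returns ([], [2]), which is the base-3 identity 0.222... = 1 itself.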

Repeating nines also turn up in yet another of Georg Cantor's works. They must be taken into account to construct a valid proof, applying his 1891 diagonal argument to decimal expansions, of the uncountability of the unit interval. Such a proof needs to be able to declare certain pairs of real numbers to be different based on their decimal expansions, so one needs to avoid pairs like 0.2 and 0.1999... A simple method represents all numbers with nonterminating expansions; the opposite method rules out repeating nines.[35] A variant that may be closer to Cantor's original argument actually uses base 2, and by turning base-3 expansions into base-2 expansions, one can prove the uncountability of the Cantor set as well.[36]
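Another common way to sidestep the issue, different from the two methods just mentioned and shown here only as an illustration, is to build the diagonal number from the digits 4 and 5 alone. Such a number has no trailing 0s or 9s, so it has a unique decimal expansion. It therefore differs from every listed number, even one that was listed in its trailing-9s form. The function name is hypothetical.

```python
def diagonal_digits(expansions: list[list[int]]) -> list[int]:
    """Digits of a number differing from the n-th listed expansion in place n.

    Only the digits 4 and 5 are used, so the result never ends in repeating 0s
    or 9s and therefore has exactly one decimal expansion.
    """
    return [4 if row[n] == 5 else 5 for n, row in enumerate(expansions)]


listing = [
    [2, 0, 0, 0],   # 0.2000...
    [1, 9, 9, 9],   # 0.1999..., the same number written the other way
    [5, 5, 5, 5],
    [0, 9, 5, 9],
]
new = diagonal_digits(listing)
print(new)   # [5, 5, 4, 5]
```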

Skepticism in education

Students of mathematics often reject the equality of 0.999... and 1, for reasons ranging from their disparate appearance to deep misgivings over the limit concept and disagreements over the nature of infinitesimals. There are many common contributing factors to the confusion:

- Students are often "mentally committed to the notion that a number can be represented in one and only one way by a decimal." Seeing two manifestly different decimals representing the same number appears to be a paradox, which is amplified by the appearance of the seemingly well-understood number 1.[37]

- Some students interpret "0.999..." (or similar notation) as a large but finite string of 9s, possibly with a variable, unspecified length. If they accept an infinite string of nines, they may still expect a last 9 "at infinity".[38]

- Intuition and ambiguous teaching lead students to think of the limit of a sequence as a kind of infinite process rather than a fixed value, since a sequence need not reach its limit. Where students accept the difference between a sequence of numbers and its limit, they might read "0.999..." as meaning the sequence rather than its limit.[39]

These ideas are mistaken in the context of the standard real numbers, although some may be valid in other number systems, either invented for their general mathematical utility or as instructive counterexamples to better understand 0.999...

Many of these explanations were found by David Tall, who has studied characteristics of teaching and cognition that lead to some of the misunderstandings he has encountered in his college students. Interviewing his students to determine why the vast majority initially rejected the equality, he found that "students continued to conceive of 0.999... as a sequence of numbers getting closer and closer to 1 and not a fixed value, because 'you haven’t specified how many places there are' or 'it is the nearest possible decimal below 1'".[40]

Of the elementary proofs, multiplying 0.333... = 1⁄3 by 3 is apparently a successful strategy for convincing reluctant students that 0.999... = 1. Still, when confronted with the conflict between their belief of the first equation and their disbelief of the second, some students either begin to disbelieve the first equation or simply become frustrated.[41] Nor are more sophisticated methods foolproof: students who are fully capable of applying rigorous definitions may still fall back on intuitive images when they are surprised by a result in advanced mathematics, including 0.999.... For example, one real analysis student was able to prove that 0.333... = 1⁄3 using a supremum definition, but then insisted that 0.999... < 1 based on her earlier understanding of long division.[42] Others still are able to prove that 1⁄3 = 0.333..., but, upon being confronted by the fractional proof, insist that "logic" supersedes the mathematical calculations.

Joseph Mazur tells the tale of an otherwise brilliant calculus student of his who "challenged almost everything I said in class but never questioned his calculator," and who had come to believe that nine digits are all one needs to do mathematics, including calculating the square root of 23. The student remained uncomfortable with a limiting argument that 9.99... = 10, calling it a "wildly imagined infinite growing process."[43]

As part of Ed Dubinsky's APOS theory of mathematical learning, Dubinsky and his collaborators (2005) propose that students who conceive of 0.999... as a finite, indeterminate string with an infinitely small distance from 1 have "not yet constructed a complete process conception of the infinite decimal". Other students who have a complete process conception of 0.999... may not yet be able to "encapsulate" that process into an "object conception", like the object conception they have of 1, and so they view the process 0.999... and the object 1 as incompatible. Dubinsky et al. also link this mental ability of encapsulation to viewing 1⁄3 as a number in its own right and to dealing with the set of natural numbers as a whole.[44]

In popular culture

With the rise of the Internet, debates about 0.999... have escaped the classroom and are commonplace on newsgroups and message boards, including many that nominally have little to do with mathematics. In the newsgroup sci.math, arguing over 0.999... is a "popular sport", and it is one of the questions answered in its FAQ.[45] The FAQ briefly covers 1⁄3, multiplication by 10, and limits, and it alludes to Cauchy sequences as well.

A 2003 edition of the general-interest newspaper column The Straight Dope discusses 0.999... via 1⁄3 and limits, saying of misconceptions,

The lower primate in us still resists, saying: .999~ doesn't really represent a number, then, but a process. To find a number we have to halt the process, at which point the .999~ = 1 thing falls apart. Nonsense.[46]

The Straight Dope cites a discussion on its own message board that grew out of an unidentified "other message board ... mostly about video games". In the same vein, the question of 0.999... proved such a popular topic in the first seven years of Blizzard Entertainment's Battle.net forums that the company issued a "press release" on April Fools' Day 2004 that it is 1:

We are very excited to close the book on this subject once and for all. We've witnessed the heartache and concern over whether .999~ does or does not equal 1, and we're proud that the following proof finally and conclusively addresses the issue for our customers.[47]

Two proofs are then offered, based on limits and multiplication by 10.

0.999... features also in mathematical folklore, specifically in the following joke:[48]

Q: How many mathematicians does it take to screw in a lightbulb?

A: 0.999999....

In alternative number systems

Although the real numbers form an extremely useful number system, the decision to interpret the notation "0.999..." as naming a real number is ultimately a convention, and Timothy Gowers argues in Mathematics: A Very Short Introduction that the resulting identity 0.999... = 1 is a convention as well:

However, it is by no means an arbitrary convention, because not adopting it forces one either to invent strange new objects or to abandon some of the familiar rules of arithmetic.[49]

One can define other number systems using different rules or new objects; in some such number systems, the above proofs would need to be reinterpreted and one might find that, in a given number system, 0.999... and 1 might not be identical. However, many number systems are extensions of, rather than independent alternatives to, the real number system, so 0.999... = 1 continues to hold. Even so, it is worthwhile to examine alternative number systems, not only for how 0.999... behaves (if, indeed, a number expressed as "0.999..." is both meaningful and unambiguous), but also for the behavior of related phenomena. If such phenomena differ from those in the real number system, then at least one of the assumptions built into the system must break down.

Infinitesimals

Some proofs that 0.999... = 1 rely on the Archimedean property of the real numbers: that there are no nonzero infinitesimals. Specifically, the difference 1 − 0.999... must be smaller than any positive rational number, so it must be an infinitesimal; since the reals contain no nonzero infinitesimals, the difference is zero and the two values are the same.

However, there are mathematically coherent ordered algebraic structures, including various alternatives to the real numbers, which are non-Archimedean. For example, the dual numbers include a new infinitesimal element ε, analogous to the imaginary unit i in the complex numbers except that ε² = 0. The resulting structure is useful in automatic differentiation. The dual numbers can be given a lexicographic order, in which case the multiples of ε become non-Archimedean elements.[50] Note, however, that as an extension of the real numbers, the dual numbers still have 0.999... = 1. On a related note, while ε exists in the dual numbers, so does ε/2, so ε is not "the smallest positive dual number," and, indeed, as in the reals, no such number exists.
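A minimal dual-number sketch illustrates three points. First, the product rule (a + bε)(c + dε) = ac + (ad + bc)ε makes forward-mode automatic differentiation work. Second, under the lexicographic order, every positive multiple of ε stays below 1. Third, ε/2 is a positive element smaller than ε. The `Dual` class is our own illustration, not a library API.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True, order=True)
class Dual:
    """The dual number a + b*eps, where eps * eps == 0.

    order=True compares the field tuple (a, b), i.e. lexicographically.
    """
    a: Fraction
    b: Fraction = Fraction(0)

    def __add__(self, other: "Dual") -> "Dual":
        return Dual(self.a + other.a, self.b + other.b)

    def __mul__(self, other: "Dual") -> "Dual":
        # (a + b eps)(c + d eps) = ac + (ad + bc) eps, because eps**2 = 0
        return Dual(self.a * other.a, self.a * other.b + self.b * other.a)


eps = Dual(Fraction(0), Fraction(1))
one = Dual(Fraction(1))


def cube(x: Dual) -> Dual:
    return x * x * x


# Forward-mode differentiation: the eps-coefficient of f(2 + eps) is f'(2).
value = cube(Dual(Fraction(2)) + eps)
print(value.a, value.b)                     # 8 12

# Non-Archimedean: no positive multiple of eps reaches 1 in this order.
print(all(Dual(Fraction(0), Fraction(n)) < one for n in range(1, 10_000)))  # True

# eps/2 is positive but smaller than eps, so eps is not the smallest positive element.
half_eps = Dual(Fraction(0), Fraction(1, 2))
print(Dual(Fraction(0)) < half_eps < eps)   # True
```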

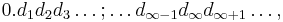

Non-standard analysis provides a number system with a full array of infinitesimals (and their inverses).[51] A. H. Lightstone developed a decimal expansion for hyperreal numbers in (0, 1)∗.[52] Lightstone shows how to associate to each number a sequence of digits,

$$0.d_1d_2d_3\ldots;\ldots d_{\infty-1}d_{\infty}d_{\infty+1}\ldots,$$

indexed by the hypernatural numbers. While he does not directly discuss 0.999..., he shows the real number 1/3 is represented by 0.333...;...333... which is a consequence of the transfer principle. As a consequence the number 0.999...;...999... = 1. With this type of decimal representation, not every expansion represents a number. In particular "0.333...;...000..." and "0.999...;...000..." do not correspond to any number.

The standard definition of the number 0.999... is the limit of the sequence 0.9, 0.99, 0.999, ... A different definition considers the equivalence class [(0.9, 0.99, 0.999, ...)] of this sequence in the ultrapower construction, which corresponds to a number that is infinitesimally smaller than 1. More generally, the hyperreal number $u_H = 0.999\ldots;\ldots999000\ldots$, with last digit 9 at infinite hypernatural rank H, satisfies a strict inequality $u_H < 1$. Accordingly, Karin Katz and Mikhail Katz have proposed an alternative interpretation of "0.999...":

$$\underset{H}{0.\underbrace{999\ldots}}$$

All such interpretations of "0.999..." are infinitely close to 1. Ian Stewart characterizes this interpretation as an "entirely reasonable" way to rigorously justify the intuition that "there's a little bit missing" from 1 in 0.999....[54] Like Katz & Katz, Robert Ely questions the assumption that students' ideas about 0.999... < 1 are erroneous intuitions about the real numbers, interpreting them rather as nonstandard intuitions that could be valuable in the learning of calculus.[55][56] José Benardete, in his book Infinity: An essay in metaphysics, argues that some natural pre-mathematical intuitions cannot be expressed if one is limited to an overly restrictive number system:

- The intelligibility of the continuum has been found--many times over--to require that the domain of real numbers be enlarged to include infinitesimals. This enlarged domain may be styled the domain of continuum numbers. It will now be evident that .9999... does not equal 1 but falls infinitesimally short of it. I think that .9999... should indeed be admitted as a number ... though not as a real number.[57]
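A small exact-arithmetic check of the finite case may help here. For each finite n, the number with n nines after the decimal point equals 1 − 10^(−n): it is strictly less than 1, and the shortfall drops below any positive bound as n grows, so the limit of the sequence is 1, while the ultrapower construction instead retains the equivalence class of the entire sequence. The corresponding statement at an infinite hypernatural rank H comes from the finite identity via the transfer principle, which no finite computation can replace. The sketch below assumes Python's `fractions.Fraction` and a hypothetical helper `truncated_nines`, and only checks finite n.

```python
from fractions import Fraction


def truncated_nines(n: int) -> Fraction:
    """Exact value of 0.99...9 with n nines after the point: 9/10 + 9/100 + ... + 9/10**n."""
    return sum((Fraction(9, 10**k) for k in range(1, n + 1)), Fraction(0))


for n in (1, 2, 3, 10):
    gap = 1 - truncated_nines(n)
    # Each finite truncation falls short of 1 by exactly 10**-n.
    print(n, truncated_nines(n), gap)
```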

Hackenbush

Combinatorial game theory provides alternative reals as well, with infinite Blue-Red Hackenbush as one particularly relevant example. In 1974, Elwyn Berlekamp described a correspondence between Hackenbush strings and binary expansions of real numbers, motivated by the idea of data compression. For example, the value of the Hackenbush string LRRLRLRL... is 0.010101...₂ = 1/3. However, the value of LRLLL... (corresponding to 0.111...₂) is infinitesimally less than 1. The difference between 1 and the value of LRLLL... is the surreal number 1/ω, where ω is the first infinite ordinal; the relevant game is LRRRR... or 0.000...₂.[58]

The same thing happens with the binary expansions of many rational numbers: two expansions have equal values, but the corresponding binary tree paths are different. For example, 0.10111...₂ = 0.11000...₂, which are both equal to 3/4, but the first representation corresponds to the binary tree path LRLRLLL... while the second corresponds to the different path LRLLRRR....
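Berlekamp's rule for Hackenbush strings can be sketched in a few lines: the edges before the first change of colour are each worth a whole unit, and from the first change of colour onward the edges are worth 1/2, 1/4, 1/8, and so on. The sketch assumes L edges count positive and R edges negative, and reads the binary correspondence off the examples in the text (the prefix LR, then L for each binary digit 1 and R for each 0); the names `hackenbush_value` and `string_for_binary` are hypothetical. Only finite strings can be evaluated, so it shows truncations approaching 1/3, 1 and 3/4, not the surreal values of the infinite strings.

```python
from fractions import Fraction

SIGN = {"L": 1, "R": -1}  # assumption: L edges count +1, R edges count -1


def hackenbush_value(s: str) -> Fraction:
    """Value of a finite Blue-Red Hackenbush string, read from the ground up (Berlekamp's rule)."""
    value = Fraction(0)
    i = 0
    # Edges before the first change of colour are each worth a whole unit.
    while i < len(s) and s[i] == s[0]:
        value += SIGN[s[i]]
        i += 1
    # From the first change of colour on, edges are worth 1/2, 1/4, 1/8, ...
    weight = Fraction(1, 2)
    for edge in s[i:]:
        value += SIGN[edge] * weight
        weight /= 2
    return value


def string_for_binary(digits: str) -> str:
    """Hackenbush string for 0.d1d2...dn (base 2): the prefix LR, then L for 1 and R for 0."""
    return "LR" + "".join("L" if d == "1" else "R" for d in digits)


print(hackenbush_value("LRRLRLRL"))                          # 43/128, close to 1/3
print(string_for_binary("10111"), string_for_binary("11000"))  # LRLRLLL LRLLRRR
```

The truncation of LRLLL... with n digits after the prefix has value 1 − 1/2^(n+1), below 1 at every finite stage, and the truncations of the two strings for 3/4 approach it from opposite sides.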

Revisiting subtraction

Another manner in which the proofs might be undermined is if 1 − 0.999... simply does not exist, because subtraction is not always possible. Mathematical structures with an addition operation but not a subtraction operation include commutative semigroups, commutative monoids and semirings. Richman considers two such systems, designed so that 0.999... < 1.

First, Richman defines a nonnegative decimal number to be a literal decimal expansion. He defines the lexicographical order and an addition operation, noting that 0.999... < 1 simply because 0 < 1 in the ones place, but for any nonterminating x, one has 0.999... + x = 1 + x. So one peculiarity of the decimal numbers is that addition cannot always be cancelled; another is that no decimal number corresponds to 1⁄3. After defining multiplication, the decimal numbers form a positive, totally ordered, commutative semiring.[59]

In the process of defining multiplication, Richman also defines another system he calls "cut D", which is the set of Dedekind cuts of decimal fractions. Ordinarily this definition leads to the real numbers, but for a decimal fraction d he allows both the cut (−∞, d) and the "principal cut" (−∞, d]. The result is that the real numbers are "living uneasily together with" the decimal fractions. Again 0.999... < 1. There are no positive infinitesimals in cut D, but there is "a sort of negative infinitesimal," 0−, which has no decimal expansion. He concludes that 0.999... = 1 + 0−, while the equation "0.999... + x = 1" has no solution.[60]

p-adic numbers

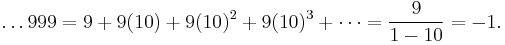

When asked about 0.999..., novices often believe there should be a "final 9," and take 1 − 0.999... to be a positive number, which they write as "0.000...1". Whether or not that makes sense, the intuitive goal is clear: adding a 1 to the last 9 in 0.999... would carry all the 9s into 0s and leave a 1 in the ones place. Among other reasons, this idea fails because there is no "last 9" in 0.999....[61] However, there is a system that contains an infinite string of 9s including a last 9.

The p-adic numbers are an alternative number system of interest in number theory. Like the real numbers, the p-adic numbers can be built from the rational numbers via Cauchy sequences; the construction uses a different metric in which 0 is closer to p, and much closer to pⁿ, than it is to 1. The p-adic numbers form a field for prime p and a ring for other p, including 10. So arithmetic can be performed in the p-adics, and there are no infinitesimals.
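The closeness claim can be checked directly on integers, which is enough to illustrate it: two integers are p-adically close when their difference is divisible by a high power of p. The sketch below is a minimal illustration under that restriction. For prime p it agrees with the usual p-adic metric on the integers, and the same formula is used for p = 10; the name `adic_distance` is hypothetical.

```python
from fractions import Fraction


def adic_distance(a: int, b: int, p: int) -> Fraction:
    """p-adic style distance between integers: p**-v, where p**v is the largest power of p dividing a - b."""
    diff = a - b
    if diff == 0:
        return Fraction(0)
    v = 0
    while diff % p == 0:
        diff //= p
        v += 1
    return Fraction(1, p**v)


# 0 is at distance 1 from 1, but only 10**-n from 10**n in the 10-adic sense.
for n in range(4):
    print(f"d(0, 10**{n}) =", adic_distance(0, 10**n, 10))
```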

In the 10-adic numbers, the analogues of decimal expansions run to the left. The 10-adic expansion ...999 does have a last 9, and it does not have a first 9. One can add 1 to the ones place, and it leaves behind only 0s after carrying through: 1 + ...999 = ...000 = 0, and so ...999 = −1.[62] Another derivation uses a geometric series. The infinite series implied by "...999" does not converge in the real numbers, but it converges in the 10-adics, and so one can re-use the familiar formula:

$$\ldots 999 = 9 + 9(10) + 9(10)^2 + 9(10)^3 + \cdots = \frac{9}{1 - 10} = -1.$$[63]

(Compare with the series above.) A third derivation was invented by a seventh-grader who doubted her teacher's limiting argument that 0.999... = 1 but was inspired to take the multiply-by-10 proof above in the opposite direction: if x = ...999, then 10x = ...990, so 10x = x − 9, hence x = −1 again.[62]
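The three derivations can be checked on finitely many trailing digits, since a 10-adic integer is determined by its last k digits for every k. The sketch below assumes Python integers reduced modulo 10^k; the constant `DIGITS = 12` and the helper names are arbitrary illustrative choices. It confirms that −1 ends in k nines, that 1 + ...999 carries to all zeros, that the k-th partial sum of the series differs from −1 by 10^k (whose 10-adic size 10^(−k) shrinks as k grows), and that 10x and x − 9 agree in their last k digits when x = ...999.

```python
DIGITS = 12          # how many trailing 10-adic digits to keep (any k >= 1 works)
MOD = 10**DIGITS


def last_digits(n: int) -> int:
    """The last DIGITS digits of the 10-adic expansion of the ordinary integer n."""
    return n % MOD  # Python's % is non-negative for a positive modulus, so -1 -> 99...9


def series_partial_sum(k: int) -> int:
    """9 + 9*10 + 9*10**2 + ... + 9*10**(k-1), the k-th partial sum of ...999."""
    return sum(9 * 10**j for j in range(k))


x = series_partial_sum(DIGITS)                    # ...999 truncated to DIGITS nines
print(last_digits(-1) == x)                       # True: the last DIGITS digits of -1 are all 9
print(last_digits(1 + x))                         # 0: adding 1 carries every 9 into a 0
print(x + 1 == 10**DIGITS)                        # True: partial sum minus (-1) is 10**DIGITS
print(last_digits(10 * x) == last_digits(x - 9))  # True: the seventh-grader's 10x = x - 9
```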

As a final extension, since 0.999... = 1 (in the reals) and ...999 = −1 (in the 10-adics), by "blind faith and unabashed juggling of symbols"[64] one may add the two equations and arrive at ...999.999... = 0. This equation does not make sense either as a 10-adic expansion or an ordinary decimal expansion, but it turns out to be meaningful and true if one develops a theory of "double-decimals" with eventually repeating left ends to represent a familiar system: the real numbers.[65]

Related questions

- Zeno's paradoxes, particularly the paradox of the runner, are reminiscent of the apparent paradox that 0.999... and 1 are equal. The runner paradox can be mathematically modelled and then, like 0.999..., resolved using a geometric series. However, it is not clear if this mathematical treatment addresses the underlying metaphysical issues Zeno was exploring.[66]

- Division by zero occurs in some popular discussions of 0.999..., and it also stirs up contention. While most authors choose to define 0.999..., almost all modern treatments leave division by zero undefined, as it can be given no meaning in the standard real numbers. However, division by zero is defined in some other systems, such as complex analysis, where the extended complex plane, i.e. the Riemann sphere, has a "point at infinity". Here, it makes sense to define 1/0 to be infinity;[67] and, in fact, the results are profound and applicable to many problems in engineering and physics. Some prominent mathematicians argued for such a definition long before either number system was developed.[68]

- Negative zero is another redundant feature of many ways of writing numbers. In number systems, such as the real numbers, where "0" denotes the additive identity and is neither positive nor negative, the usual interpretation of "−0" is that it should denote the additive inverse of 0, which forces −0 = 0.[69] Nonetheless, some scientific applications use separate positive and negative zeroes, as do some computing binary number systems (for example integers stored in the sign and magnitude or ones' complement formats, or floating point numbers as specified by the IEEE floating-point standard).[70][71]
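The redundant zero in the binary formats mentioned above can be seen by decoding bit patterns. The sketch below is illustrative: `sign_magnitude` and `ones_complement` are hypothetical helper names, the 8-bit width is an arbitrary choice, and the float checks assume Python floats are IEEE 754 binary64 doubles, as they are in CPython on mainstream platforms.

```python
import math
import struct


def sign_magnitude(bits: int, width: int = 8) -> tuple[int, int]:
    """Decode a width-bit sign-and-magnitude pattern into (sign, magnitude)."""
    sign = -1 if (bits >> (width - 1)) & 1 else 1
    return sign, bits & ((1 << (width - 1)) - 1)


def ones_complement(bits: int, width: int = 8) -> tuple[int, int]:
    """Decode a width-bit ones'-complement pattern into (sign, magnitude)."""
    if (bits >> (width - 1)) & 1:
        # Negative numbers are stored as the bitwise NOT of their magnitude.
        return -1, ~bits & ((1 << width) - 1)
    return 1, bits


# Each 8-bit format has two patterns for zero: +0 and -0.
print(sign_magnitude(0b00000000), sign_magnitude(0b10000000))    # (1, 0) (-1, 0)
print(ones_complement(0b00000000), ones_complement(0b11111111))  # (1, 0) (-1, 0)

# IEEE 754 doubles: the two zeros compare equal but carry different sign bits.
print(-0.0 == 0.0)                     # True
print(math.copysign(1.0, -0.0))        # -1.0
print(struct.pack(">d", -0.0).hex())   # 8000000000000000
```

The equality test mirrors the usual interpretation −0 = 0; only the stored sign bit tells the two zeros apart.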

Notes

- ^ Starbird & Starbird 1992.

- ^ Beswick 2004.

- ^ This argument is found in Peressini and Peressini p. 186

- ^ Byers pp. 39–41

- ^ Richman p. 396

- ^ Rudin p. 61, Theorem 3.26; J. Stewart p. 706

- ^ Euler p. 170

- ^ Grattan-Guinness p. 69; Bonnycastle p. 177

- ^ For example, J. Stewart p. 706, Rudin p. 61, Protter and Morrey p. 213, Pugh p. 180, J.B. Conway p. 31

- ^ The limit follows, for example, from Rudin p. 57, Theorem 3.20e. For a more direct approach, see also Finney, Weir, Giordano (2001) Thomas' Calculus: Early Transcendentals 10ed, Addison-Wesley, New York. Section 8.1, example 2(a), example 6(b).

- ^ Davies p. 175; Smith and Harrington p. 115

- ^ Beals p. 22; I. Stewart p. 34

- ^ Bartle and Sherbert pp. 60–62; Pedrick p. 29; Sohrab p. 46

- ^ Apostol pp. 9, 11–12; Beals p. 22; Rosenlicht p. 27

- ^ Apostol p. 12

- ^ The historical synthesis is claimed by Griffiths and Hilton (p. xiv) in 1970 and again by Pugh (p. 10) in 2001; both actually prefer Dedekind cuts to axioms. For the use of cuts in textbooks, see Pugh p. 17 or Rudin p. 17. For viewpoints on logic, see Pugh p. 10, Rudin p. ix, or Munkres p. 30.

- ^ Enderton (p. 113) qualifies this description: "The idea behind Dedekind cuts is that a real number x can be named by giving an infinite set of rationals, namely all the rationals less than x. We will in effect define x to be the set of rationals smaller than x. To avoid circularity in the definition, we must be able to characterize the sets of rationals obtainable in this way..."

- ^ Rudin pp. 17–20, Richman p. 399, or Enderton p. 119. To be precise, Rudin, Richman, and Enderton call this cut 1*, 1−, and 1R, respectively; all three identify it with the traditional real number 1. Note that what Rudin and Enderton call a Dedekind cut, Richman calls a "nonprincipal Dedekind cut".

- ^ Richman p. 399

- ^ a b J J O'Connor and E F Robertson (October 2005). "History topic: The real numbers: Stevin to Hilbert". MacTutor History of Mathematics. http://www-gap.dcs.st-and.ac.uk/~history/PrintHT/Real_numbers_2.html. Retrieved 2006-08-30.

- ^ Richman

- ^ Richman pp. 398–399

- ^ Griffiths & Hilton §24.2 "Sequences" p. 386

- ^ Griffiths & Hilton pp. 388, 393

- ^ Griffiths & Hilton p. 395

- ^ Griffiths & Hilton pp. viii, 395

- ^ Petkovšek p. 408

- ^ Protter and Morrey p. 503; Bartle and Sherbert p. 61

- ^ Komornik and Loreti p. 636

- ^ Kempner p. 611; Petkovšek p. 409

- ^ Petkovšek pp. 410–411

- ^ Leavitt 1984 p. 301

- ^ Lewittes pp. 1–3; Leavitt 1967 pp. 669, 673; Shrader-Frechette pp. 96–98

- ^ Pugh p. 97; Alligood, Sauer, and Yorke pp. 150–152. Protter and Morrey (p. 507) and Pedrick (p. 29) assign this description as an exercise.

- ^ Maor (p. 60) and Mankiewicz (p. 151) review the former method; Mankiewicz attributes it to Cantor, but the primary source is unclear. Munkres (p. 50) mentions the latter method.

- ^ Rudin p. 50, Pugh p. 98

- ^ Bunch p. 119; Tall and Schwarzenberger p. 6. The last suggestion is due to Burrell (p. 28): "Perhaps the most reassuring of all numbers is 1 ... So it is particularly unsettling when someone tries to pass off 0.9~ as 1."

- ^ Tall and Schwarzenberger pp. 6–7; Tall 2000 p. 221

- ^ Tall and Schwarzenberger p. 6; Tall 2000 p. 221

- ^ Tall 2000 p. 221

- ^ Tall 1976 pp. 10–14

- ^ Pinto and Tall p. 5, Edwards and Ward pp. 416–417

- ^ Mazur pp. 137–141

- ^ Dubinsky et al. pp. 261–262

- ^ As observed by Richman (p. 396). Hans de Vreught (1994). "sci.math FAQ: Why is 0.9999... = 1?". http://www.faqs.org/faqs/sci-math-faq/specialnumbers/0.999eq1/. Retrieved 2006-06-29.

- ^ Cecil Adams (2003-07-11). "An infinite question: Why doesn't .999~ = 1?". The Straight Dope. Chicago Reader. http://www.straightdope.com/columns/030711.html. Retrieved 2006-09-06.

- ^ "Blizzard Entertainment Announces .999~ (Repeating) = 1". Press Release. Blizzard Entertainment. 2004-04-01. http://us.blizzard.com/en-us/company/press/pressreleases.html?040401. Retrieved 2009-11-16.

- ^ Renteln and Dundes, p. 27

- ^ Gowers p. 60

- ^ Berz pp. 439–442

- ^ For a full treatment of non-standard numbers see for example Robinson's Non-standard Analysis.

- ^ Lightstone pp. 245–247

- ^ Katz & Katz 2010

- ^ Stewart 2009, p. 175; the full discussion of 0.999... is spread through pp. 172–175.

- ^ Katz & Katz (2010b)

- ^ R. Ely (2010)

- ^ Benardete, José Amado (1964). Infinity: An essay in metaphysics. Clarendon Press. p. 279. http://books.google.com/books?id=wMgtAAAAMAAJ&&hl=en&ei=3lTSTqSPGMrE4gTNwI1g&sa=X&oi=book_result&ct=result&resnum=1&ved=0CDIQ6AEwAA.

- ^ Berlekamp, Conway, and Guy (pp. 79–80, 307–311) discuss 1 and 1/3 and touch on 1/ω. The game for 0.111...₂ follows directly from Berlekamp's Rule.

- ^ Richman pp. 397–399

- ^ Richman pp. 398–400. Rudin (p. 23) assigns this alternative construction (but over the rationals) as the last exercise of Chapter 1.

- ^ Gardiner p. 98; Gowers p. 60

- ^ a b Fjelstad p. 11

- ^ Fjelstad pp. 14–15

- ^ DeSua p. 901

- ^ DeSua pp. 902–903

- ^ Wallace p. 51, Maor p. 17

- ^ See, for example, J.B. Conway's treatment of Möbius transformations, pp. 47–57

- ^ Maor p. 54

- ^ Munkres p. 34, Exercise 1(c)

- ^ Kroemer, Herbert; Kittel, Charles (1980). Thermal Physics (2e ed.). W. H. Freeman. p. 462. ISBN 0-7167-1088-9.

- ^ "Floating point types". MSDN C# Language Specification. http://msdn.microsoft.com/library/en-us/csspec/html/vclrfcsharpspec_4_1_6.asp. Retrieved 2006-08-29.

References

- Alligood, K. T.; Sauer, T. D.; Yorke, J. A. (1996). "4.1 Cantor Sets". Chaos: An introduction to dynamical systems. Springer. ISBN 0-387-94677-2.

- This introductory textbook on dynamical systems is aimed at undergraduate and beginning graduate students. (p. ix)

- Apostol, Tom M. (1974). Mathematical analysis (2e ed.). Addison-Wesley. ISBN 0-201-00288-4.

- A transition from calculus to advanced analysis, Mathematical analysis is intended to be "honest, rigorous, up to date, and, at the same time, not too pedantic." (pref.) Apostol's development of the real numbers uses the least upper bound axiom and introduces infinite decimals two pages later. (pp. 9–11)

- Bartle, R. G.; Sherbert, D. R. (1982). Introduction to real analysis. Wiley. ISBN 0-471-05944-7.

- This text aims to be "an accessible, reasonably paced textbook that deals with the fundamental concepts and techniques of real analysis." Its development of the real numbers relies on the supremum axiom. (pp. vii–viii)

- Beals, Richard (2004). Analysis. Cambridge UP. ISBN 0-521-60047-2.

- Berlekamp, E. R.; Conway, J. H.; Guy, R. K. (1982). Winning Ways for your Mathematical Plays. Academic Press. ISBN 0-12-091101-9.

- Berz, Martin (1992). "Automatic differentiation as nonarchimedean analysis". Computer Arithmetic and Enclosure Methods. Elsevier. pp. 439–450. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.31.3019.

- Beswick, Kim (2004), "Why Does 0.999... = 1?: A Perennial Question and Number Sense", Australian Mathematics Teacher 60 (4): 7–9

- Bunch, Bryan H. (1982). Mathematical fallacies and paradoxes. Van Nostrand Reinhold. ISBN 0-442-24905-5.

- This book presents an analysis of paradoxes and fallacies as a tool for exploring its central topic, "the rather tenuous relationship between mathematical reality and physical reality". It assumes first-year high-school algebra; further mathematics is developed in the book, including geometric series in Chapter 2. Although 0.999... is not one of the paradoxes to be fully treated, it is briefly mentioned during a development of Cantor's diagonal method. (pp. ix–xi, 119)

- Burrell, Brian (1998). Merriam-Webster's Guide to Everyday Math: A Home and Business Reference. Merriam-Webster. ISBN 0-87779-621-1.

- Byers, William (2007). How Mathematicians Think: Using Ambiguity, Contradiction, and Paradox to Create Mathematics. Princeton UP. ISBN 0-691-12738-7.

- Conway, John B. (1978) [1973]. Functions of one complex variable I (2e ed.). Springer-Verlag. ISBN 0-387-90328-3.

- This text assumes "a stiff course in basic calculus" as a prerequisite; its stated principles are to present complex analysis as "An Introduction to Mathematics" and to state the material clearly and precisely. (p. vii)

- Davies, Charles (1846). The University Arithmetic: Embracing the Science of Numbers, and Their Numerous Applications. A.S. Barnes. http://books.google.com/books?vid=LCCN02026287&pg=PA175.

- DeSua, Frank C. (November 1960). "A system isomorphic to the reals". The American Mathematical Monthly 67 (9): 900–903. doi:10.2307/2309468. JSTOR 2309468.

- Dubinsky, Ed; Weller, Kirk; McDonald, Michael; Brown, Anne (2005). "Some historical issues and paradoxes regarding the concept of infinity: an APOS analysis: part 2". Educational Studies in Mathematics 60 (2): 253–266. doi:10.1007/s10649-005-0473-0.

- Edwards, Barbara; Ward, Michael (May 2004). "Surprises from mathematics education research: Student (mis)use of mathematical definitions". The American Mathematical Monthly 111 (5): 411–425. doi:10.2307/4145268. JSTOR 4145268. http://www.wou.edu/~wardm/FromMonthlyMay2004.pdf.

- Enderton, Herbert B. (1977). Elements of set theory. Elsevier. ISBN 0-12-238440-7.

- An introductory undergraduate textbook in set theory that "presupposes no specific background". It is written to accommodate a course focusing on axiomatic set theory or on the construction of number systems; the axiomatic material is marked such that it may be de-emphasized. (pp. xi–xii)

- Euler, Leonhard (1822) [1770]. Elements of Algebra (3rd English ed.). Translated by John Hewlett and Francis Horner. Orme Longman. ISBN 0-387-96014-7. http://books.google.com/?id=X8yv0sj4_1YC&pg=PA170.

- Fjelstad, Paul (January 1995). "The repeating integer paradox". The College Mathematics Journal 26 (1): 11–15. doi:10.2307/2687285. JSTOR 2687285.

- Gardiner, Anthony (2003) [1982]. Understanding Infinity: The Mathematics of Infinite Processes. Dover. ISBN 0-486-42538-X.

- Gowers, Timothy (2002). Mathematics: A Very Short Introduction. Oxford UP. ISBN 0-19-285361-9.

- Grattan-Guinness, Ivor (1970). The development of the foundations of mathematical analysis from Euler to Riemann. MIT Press. ISBN 0-262-07034-0.

- Griffiths, H. B.; Hilton, P. J. (1970). A Comprehensive Textbook of Classical Mathematics: A Contemporary Interpretation. London: Van Nostrand Reinhold. ISBN 0-442-02863-6. LCC QA37.2 G75.

- This book grew out of a course for Birmingham-area grammar school mathematics teachers. The course was intended to convey a university-level perspective on school mathematics, and the book is aimed at students "who have reached roughly the level of completing one year of specialist mathematical study at a university". The real numbers are constructed in Chapter 24, "perhaps the most difficult chapter in the entire book", although the authors ascribe much of the difficulty to their use of ideal theory, which is not reproduced here. (pp. vii, xiv)

- Katz, K.; Katz, M. (2010a). "When is .999... less than 1?". The Montana Mathematics Enthusiast 7 (1): 3–30. http://www.math.umt.edu/TMME/vol7no1/.

- Kempner, A. J. (December 1936). "Anormal Systems of Numeration". The American Mathematical Monthly 43 (10): 610–617. doi:10.2307/2300532. JSTOR 2300532.

- Komornik, Vilmos; Loreti, Paola (1998). "Unique Developments in Non-Integer Bases". The American Mathematical Monthly 105 (7): 636–639. doi:10.2307/2589246. JSTOR 2589246.

- Leavitt, W. G. (1967). "A Theorem on Repeating Decimals". The American Mathematical Monthly 74 (6): 669–673. doi:10.2307/2314251. JSTOR 2314251.

- Leavitt, W. G. (September 1984). "Repeating Decimals". The College Mathematics Journal 15 (4): 299–308. doi:10.2307/2686394. JSTOR 2686394.

- Lightstone, A. H. (March 1972). "Infinitesimals". The American Mathematical Monthly 79 (3): 242–251. doi:10.2307/2316619. JSTOR 2316619.

- Mankiewicz, Richard (2000). The story of mathematics. Cassell. ISBN 0-304-35473-2.

- Mankiewicz seeks to represent "the history of mathematics in an accessible style" by combining visual and qualitative aspects of mathematics, mathematicians' writings, and historical sketches. (p. 8)

- Maor, Eli (1987). To infinity and beyond: a cultural history of the infinite. Birkhäuser. ISBN 3-7643-3325-1.

- A topical rather than chronological review of infinity, this book is "intended for the general reader" but "told from the point of view of a mathematician". On the dilemma of rigor versus readable language, Maor comments, "I hope I have succeeded in properly addressing this problem." (pp. x–xiii)

- Mazur, Joseph (2005). Euclid in the Rainforest: Discovering Universal Truths in Logic and Math. Pearson: Pi Press. ISBN 0-13-147994-6.

- Munkres, James R. (2000) [1975]. Topology (2e ed.). Prentice-Hall. ISBN 0-13-181629-2.

- Intended as an introduction "at the senior or first-year graduate level" with no formal prerequisites: "I do not even assume the reader knows much set theory." (p. xi) Munkres' treatment of the reals is axiomatic; he claims of bare-hands constructions, "This way of approaching the subject takes a good deal of time and effort and is of greater logical than mathematical interest." (p. 30)

- Núñez, Rafael (2006). "Do Real Numbers Really Move? Language, Thought, and Gesture: The Embodied Cognitive Foundations of Mathematics". 18 Unconventional Essays on the Nature of Mathematics. Springer. pp. 160–181. ISBN 978-0-387-25717-4. http://www.cogsci.ucsd.edu/~nunez/web/publications.html.

- Pedrick, George (1994). A First Course in Analysis. Springer. ISBN 0-387-94108-8.

- Peressini, Anthony; Peressini, Dominic (2007). "Philosophy of Mathematics and Mathematics Education". In Bart van Kerkhove, Jean Paul van Bendegem. Perspectives on Mathematical Practices. Logic, Epistemology, and the Unity of Science. 5. Springer. ISBN 978-1-4020-5033-6.

- Petkovšek, Marko (May 1990). "Ambiguous Numbers are Dense". American Mathematical Monthly 97 (5): 408–411. doi:10.2307/2324393. JSTOR 2324393.

- Pinto, Márcia; Tall, David (2001). "Following students' development in a traditional university analysis course". PME25. pp. v4: 57–64. http://www.warwick.ac.uk/staff/David.Tall/pdfs/dot2001j-pme25-pinto-tall.pdf. Retrieved 2009-05-03.

- Protter, M. H.; Morrey, C. B. (1991). A first course in real analysis (2e ed.). Springer. ISBN 0-387-97437-7.

- This book aims to "present a theoretical foundation of analysis that is suitable for students who have completed a standard course in calculus." (p. vii) At the end of Chapter 2, the authors assume as an axiom for the real numbers that bounded, nondecreasing sequences converge, later proving the nested intervals theorem and the least upper bound property. (pp. 56–64) Decimal expansions appear in Appendix 3, "Expansions of real numbers in any base". (pp. 503–507)

- Pugh, Charles Chapman (2001). Real mathematical analysis. Springer-Verlag. ISBN 0-387-95297-7.

- While assuming familiarity with the rational numbers, Pugh introduces Dedekind cuts as soon as possible, saying of the axiomatic treatment, "This is something of a fraud, considering that the entire structure of analysis is built on the real number system." (p. 10) After proving the least upper bound property and some allied facts, cuts are not used in the rest of the book.

- Renteln, Paul; Dundes, Allan (January 2005). "Foolproof: A Sampling of Mathematical Folk Humor". Notices of the AMS 52 (1): 24–34. http://www.ams.org/notices/200501/fea-dundes.pdf. Retrieved 2009-05-03.

- Richman, Fred (December 1999). "Is 0.999... = 1?". Mathematics Magazine 72 (5): 396–400. doi:10.2307/2690798. JSTOR 2690798. Free HTML preprint: Richman, Fred (1999-06-08). "Is 0.999... = 1?". http://www.math.fau.edu/Richman/HTML/999.htm. Retrieved 2006-08-23. Note: the journal article contains material and wording not found in the preprint.

- Robinson, Abraham (1996). Non-standard analysis (Revised ed.). Princeton University Press. ISBN 0-691-04490-2.

- Rosenlicht, Maxwell (1985). Introduction to Analysis. Dover. ISBN 0-486-65038-3. This book gives a "careful rigorous" introduction to real analysis. It gives the axioms of the real numbers and then constructs them (pp. 27–31) as infinite decimals with 0.999... = 1 as part of the definition.

- Rudin, Walter (1976) [1953]. Principles of mathematical analysis (3e ed.). McGraw-Hill. ISBN 0-07-054235-X.

- A textbook for an advanced undergraduate course. "Experience has convinced me that it is pedagogically unsound (though logically correct) to start off with the construction of the real numbers from the rational ones. At the beginning, most students simply fail to appreciate the need for doing this. Accordingly, the real number system is introduced as an ordered field with the least-upper-bound property, and a few interesting applications of this property are quickly made. However, Dedekind's construction is not omitted. It is now in an Appendix to Chapter 1, where it may be studied and enjoyed whenever the time is ripe." (p. ix)

- Shrader-Frechette, Maurice (March 1978). "Complementary Rational Numbers". Mathematics Magazine 51 (2): 90–98. doi:10.2307/2690144. JSTOR 2690144.

- Smith, Charles; Harrington, Charles (1895). Arithmetic for Schools. Macmillan. ISBN 0-665-54808-7. http://books.google.com/books?vid=LCCN02029670&pg=PA115.

- Sohrab, Houshang (2003). Basic Real Analysis. Birkhäuser. ISBN 0-8176-4211-0.

- Starbird, M.; Starbird, T. (March 1992). "Required Redundancy in the Representation of Reals". Proceedings of the American Mathematical Society (AMS) 114 (3): 769–774. JSTOR 2159403.

- Stewart, Ian (1977). The Foundations of Mathematics. Oxford UP. ISBN 0-19-853165-6.

- Stewart, Ian (2009). Professor Stewart's Hoard of Mathematical Treasures. Profile Books. ISBN 978-1-84668-292-6.

- Stewart, James (1999). Calculus: Early transcendentals (4e ed.). Brooks/Cole. ISBN 0-534-36298-2.

- This book aims to "assist students in discovering calculus" and "to foster conceptual understanding". (p. v) It omits proofs of the foundations of calculus.

- Tall, D. O.; Schwarzenberger, R. L. E. (1978). "Conflicts in the Learning of Real Numbers and Limits". Mathematics Teaching 82: 44–49. http://www.warwick.ac.uk/staff/David.Tall/pdfs/dot1978c-with-rolph.pdf. Retrieved 2009-05-03.

- Tall, David (1976/7). "Conflicts and Catastrophes in the Learning of Mathematics". Mathematical Education for Teaching 2 (4): 2–18. http://www.warwick.ac.uk/staff/David.Tall/pdfs/dot1976a-confl-catastrophy.pdf. Retrieved 2009-05-03.

- Tall, David (2000). "Cognitive Development In Advanced Mathematics Using Technology". Mathematics Education Research Journal 12 (3): 210–230. http://www.warwick.ac.uk/staff/David.Tall/pdfs/dot2001b-merj-amt.pdf. Retrieved 2009-05-03.

- von Mangoldt, Dr. Hans (1911). "Reihenzahlen" (in German). Einführung in die höhere Mathematik (1st ed.). Leipzig: Verlag von S. Hirzel.

- Wallace, David Foster (2003). Everything and more: a compact history of infinity. Norton. ISBN 0-393-00338-8.

Further reading

- Burkov, S. E. (1987). "One-dimensional model of the quasicrystalline alloy". Journal of Statistical Physics 47 (3/4): 409. doi:10.1007/BF01007518.

- Burn, Bob (March 1997). "81.15 A Case of Conflict". The Mathematical Gazette 81 (490): 109–112. doi:10.2307/3618786. JSTOR 3618786.

- Calvert, J. B.; Tuttle, E. R.; Martin, Michael S.; Warren, Peter (February 1981). "The Age of Newton: An Intensive Interdisciplinary Course". The History Teacher 14 (2): 167–190. doi:10.2307/493261. JSTOR 493261.

- Choi, Younggi; Do, Jonghoon (November 2005). "Equality Involved in 0.999... and (−8)^(1/3)". For the Learning of Mathematics 25 (3): 13–15, 36. JSTOR 40248503.

- Choong, K. Y.; Daykin, D. E.; Rathbone, C. R. (April 1971). "Rational Approximations to π". Mathematics of Computation 25 (114): 387–392. doi:10.2307/2004936. JSTOR 2004936.

- Edwards, B. (1997). "An undergraduate student's understanding and use of mathematical definitions in real analysis". In Dossey, J.; Swafford, J.O.; Parmentier, M.; Dossey, A.E. (eds.). Proceedings of the 19th Annual Meeting of the North American Chapter of the International Group for the Psychology of Mathematics Education. 1. Columbus, OH: ERIC Clearinghouse for Science, Mathematics and Environmental Education. pp. 17–22.

- Eisenmann, Petr (2008). "Why is it not true that 0.999... < 1?". The Teaching of Mathematics 11 (1): 35–40. http://elib.mi.sanu.ac.rs/files/journals/tm/20/tm1114.pdf.

- Ely, Robert (2010). "Nonstandard student conceptions about infinitesimals". Journal for Research in Mathematics Education 41 (2): 117–146.

- This article is a field study involving a student who developed a Leibnizian-style theory of infinitesimals to help her understand calculus, and in particular to account for 0.999... falling short of 1 by an infinitesimal 0.000...1.

- Ferrini-Mundy, J.; Graham, K. (1994). "Research in calculus learning: Understanding of limits, derivatives and integrals". In Kaput, J.; Dubinsky, E. (eds.). Research issues in undergraduate mathematics learning. MAA Notes 33: 31–45.

- Lewittes, Joseph (2006). "Midy's Theorem for Periodic Decimals". arXiv:math.NT/0605182 [math.NT].

- Katz, Karin Usadi; Katz, Mikhail G. (2010b). "Zooming in on infinitesimal 1 − .9.. in a post-triumvirate era". Educational Studies in Mathematics 74 (3): 259. arXiv:1003.1501. doi:10.1007/s10649-010-9239-4.

- Gardiner, Tony (June 1985). "Infinite processes in elementary mathematics: How much should we tell the children?". The Mathematical Gazette 69 (448): 77–87. doi:10.2307/3616921. JSTOR 3616921.

- Monaghan, John (December 1988). "Real Mathematics: One Aspect of the Future of A-Level". The Mathematical Gazette 72 (462): 276–281. doi:10.2307/3619940. JSTOR 3619940.

- Navarro, Maria Angeles; Carreras, Pedro Pérez (2010). "A Socratic methodological proposal for the study of the equality 0.999…=1". The Teaching of Mathematics 13 (1): 17–34. http://elib.mi.sanu.ac.rs/files/journals/tm/24/tm1312.pdf.

- Przenioslo, Malgorzata (March 2004). "Images of the limit of function formed in the course of mathematical studies at the university". Educational Studies in Mathematics 55 (1–3): 103–132. doi:10.1023/B:EDUC.0000017667.70982.05.

- Sandefur, James T. (February 1996). "Using Self-Similarity to Find Length, Area, and Dimension". The American Mathematical Monthly 103 (2): 107–120. doi:10.2307/2975103. JSTOR 2975103.

- Sierpińska, Anna (November 1987). "Humanities students and epistemological obstacles related to limits". Educational Studies in Mathematics 18 (4): 371–396. doi:10.1007/BF00240986. JSTOR 3482354.

- Szydlik, Jennifer Earles (May 2000). "Mathematical Beliefs and Conceptual Understanding of the Limit of a Function". Journal for Research in Mathematics Education 31 (3): 258–276. doi:10.2307/749807. JSTOR 749807.

- Tall, David O. (2009). "Dynamic mathematics and the blending of knowledge structures in the calculus". ZDM Mathematics Education 41 (4): 481–492. doi:10.1007/s11858-009-0192-6.

- Tall, David O. (May 1981). "Intuitions of infinity". Mathematics in School 10 (3): 30–33. JSTOR 30214290.

External links

- .999999... = 1? from cut-the-knot

- Why does 0.9999... = 1 ?

- Ask A Scientist: Repeating Decimals

- Proof of the equality based on arithmetic

- Repeating Nines

- Point nine recurring equals one

- David Tall's research on mathematics cognition

- What is so wrong with thinking of real numbers as infinite decimals?

- Theorem 0.999... on Metamath

- A Friendly Chat About Whether 0.999... = 1