Malaria

| Malaria | |

|---|---|

| Classification and external resources | |

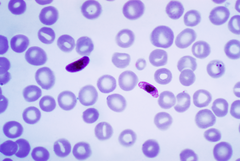

Plasmodium falciparum ring-forms and gametocytes in human blood. |

|

| ICD-10 | B50. |

| ICD-9 | 084 |

| OMIM | 248310 |

| DiseasesDB | 7728 |

| MedlinePlus | 000621 |

| eMedicine | med/1385 emerg/305 ped/1357 |

| MeSH | C03.752.250.552 |

Malaria is a mosquito-borne infectious disease caused by a eukaryotic protist of the genus Plasmodium. It is widespread in tropical and subtropical regions, including parts of the Americas (22 countries), Asia, and Africa. Each year, there are approximately 350–500 million cases of malaria,[1] killing between one and three million people, the majority of whom are young children in sub-Saharan Africa.[2] Ninety percent of malaria-related deaths occur in sub-Saharan Africa. Malaria is commonly associated with poverty, and can indeed be a cause of poverty[3] and a major hindrance to economic development.

Five species of the Plasmodium parasite can infect humans; the most serious forms of the disease are caused by Plasmodium falciparum. Plasmodium vivax, Plasmodium ovale and Plasmodium malariae cause milder disease in humans that is not generally fatal. A fifth species, Plasmodium knowlesi, is a zoonotic parasite that causes malaria in macaques but can also infect humans.[4][5]

Malaria is naturally transmitted by the bite of a female Anopheles mosquito. When a mosquito bites an infected person, it takes up a small amount of blood containing malaria parasites. These develop within the mosquito, and about one week later, when the mosquito takes its next blood meal, the parasites are injected with the mosquito's saliva into the person being bitten. After a period of between two weeks and several months (occasionally years) spent in the liver, the malaria parasites start to multiply within red blood cells, causing symptoms that include fever and headache. In severe cases the disease worsens, leading to hallucinations, coma, and death.

A wide variety of antimalarial drugs are available to treat malaria. In the last 5 years, treatment of P. falciparum infections in endemic countries has been transformed by the use of combinations of drugs containing an artemisinin derivative. Severe malaria is treated with intravenous or intramuscular quinine or, increasingly, the artemisinin derivative artesunate.[6] Several drugs are also available to prevent malaria in travellers to malaria-endemic countries (prophylaxis). Resistance has developed to several antimalarial drugs, most notably chloroquine.[7]

Malaria transmission can be reduced by preventing mosquito bites, through the distribution of inexpensive mosquito nets and insect repellents, or by mosquito-control measures such as spraying insecticides inside houses and draining standing water where mosquitoes lay their eggs.

Although many vaccines are under development, the challenge of producing a widely available vaccine that provides a high level of protection for a sustained period is still to be met.[8]


Signs and symptoms

Symptoms of malaria include fever, shivering, arthralgia (joint pain), vomiting, anemia (caused by hemolysis), hemoglobinuria, retinal damage,[10] and convulsions. The classic symptom of malaria is the cyclical occurrence of sudden coldness followed by rigor and then fever and sweating lasting four to six hours, occurring every two days in P. vivax and P. ovale infections and every three days in P. malariae infections.[11] P. falciparum can cause a recurrent fever every 36–48 hours or a less pronounced and almost continuous fever. For reasons that are poorly understood, but that may be related to high intracranial pressure, children with malaria frequently exhibit abnormal posturing, a sign indicating severe brain damage.[12] Malaria has been found to cause cognitive impairments, especially in children. It causes widespread anemia during a period of rapid brain development, as well as direct brain damage. This neurologic damage results from cerebral malaria, to which children are more vulnerable.[13][14] Cerebral malaria is associated with retinal whitening,[15] which may be a useful clinical sign in distinguishing malaria from other causes of fever.[16]

| Species | Periodicity | Persistent in liver? |
|---|---|---|
| Plasmodium vivax | tertian | yes |
| Plasmodium ovale | tertian | yes |
| Plasmodium falciparum | tertian | no |
| Plasmodium malariae | quartan | no |
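The periodicity terms in the table count days inclusively: a tertian fever recurs every 48 hours (paroxysms on days 1, 3, 5, ...) and a quartan fever every 72 hours (days 1, 4, 7, ...). The short Python sketch below illustrates this counting, assuming perfectly synchronous parasite cycles, which real infections (particularly P. falciparum) often lack; the function name `paroxysm_days` is introduced here for illustration only.

```python
# Cycle lengths in hours for the classical fever patterns.
# Assumption: perfectly synchronous parasite cycles; real infections vary.
CYCLE_HOURS = {"tertian": 48, "quartan": 72}


def paroxysm_days(pattern: str, n: int) -> list[int]:
    """Return the day numbers (day 1 = first paroxysm) of the first n paroxysms.

    Days are counted inclusively, which is why a 48-hour cycle is called
    "tertian" (every third day) and a 72-hour cycle "quartan" (every fourth day).
    """
    step_days = CYCLE_HOURS[pattern] // 24
    return [1 + i * step_days for i in range(n)]


if __name__ == "__main__":
    print(paroxysm_days("tertian", 4))  # [1, 3, 5, 7]
    print(paroxysm_days("quartan", 4))  # [1, 4, 7, 10]
```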

Severe malaria is almost exclusively caused by P. falciparum infection, and usually arises 6–14 days after infection.[17] Consequences of severe malaria include coma and death if untreated—young children and pregnant women are especially vulnerable. Splenomegaly (enlarged spleen), severe headache, cerebral ischemia, hepatomegaly (enlarged liver), hypoglycemia, and hemoglobinuria with renal failure may occur. Renal failure is a feature of blackwater fever, where hemoglobin from lysed red blood cells leaks into the urine. Severe malaria can progress extremely rapidly and cause death within hours or days.[17] In the most severe cases of the disease, fatality rates can exceed 20%, even with intensive care and treatment.[18] In endemic areas, treatment is often less satisfactory and the overall fatality rate for all cases of malaria can be as high as one in ten.[19] Over the longer term, developmental impairments have been documented in children who have suffered episodes of severe malaria.[20]

Chronic malaria is seen in both P. vivax and P. ovale, but not in P. falciparum. In these species, the disease can relapse months or years after exposure because of latent parasites in the liver. Describing a case of malaria as cured because parasites have disappeared from the bloodstream can, therefore, be deceptive. The longest incubation period reported for a P. vivax infection is 30 years.[17] Approximately one in five P. vivax malaria cases in temperate areas involves overwintering by hypnozoites (i.e., relapses begin the year after the mosquito bite).[21]

Causes

Malaria parasites

Malaria parasites are members of the genus Plasmodium (phylum Apicomplexa). In humans, malaria is caused by P. falciparum, P. malariae, P. ovale, P. vivax and P. knowlesi.[22][23] P. falciparum is the most common cause of infection, responsible for about 80% of all malaria cases and about 90% of malaria deaths.[24] Parasitic Plasmodium species also infect birds, reptiles, monkeys, chimpanzees and rodents.[25] There have been documented human infections with several simian species of malaria, namely P. knowlesi, P. inui, P. cynomolgi,[26] P. simiovale, P. brazilianum, P. schwetzi and P. simium; however, with the exception of P. knowlesi, these are mostly of limited public health importance.[27]

Malaria parasites contain apicoplasts, plastid organelles similar to the chloroplasts found in plants, complete with their own functioning genomes. Apicoplasts are thought to have originated through the endosymbiosis of algae[28] and play a crucial role in various aspects of parasite metabolism, such as fatty acid biosynthesis.[29] To date, 466 proteins have been found to be produced by apicoplasts,[30] and these are now being investigated as possible targets for novel antimalarial drugs.

Mosquito vectors and the Plasmodium life cycle

The parasite's primary (definitive) hosts and transmission vectors are female mosquitoes of the Anopheles genus, while humans and other vertebrates are secondary (intermediate) hosts. A mosquito becomes infected when it takes a blood meal from an infected human carrier. Once ingested, the parasite gametocytes taken up in the blood differentiate into male or female gametes, which then fuse in the mosquito gut. This produces an ookinete that penetrates the gut lining and produces an oocyst in the gut wall. When the oocyst ruptures, it releases sporozoites that migrate through the mosquito's body to the salivary glands, where they are ready to infect a new human host; infected Anopheles mosquitoes therefore carry Plasmodium sporozoites in their salivary glands. This type of transmission is occasionally referred to as anterior station transfer.[31] The sporozoites are injected into the skin, along with saliva, when the mosquito takes a subsequent blood meal.

Only female mosquitoes feed on blood; males therefore do not transmit the disease. Female Anopheles mosquitoes prefer to feed at night: they usually start searching for a meal at dusk and continue throughout the night until they have fed. Malaria parasites can also be transmitted by blood transfusions, although this is rare.[32]

Pathogenesis

Malaria in humans develops via two phases: an exoerythrocytic and an erythrocytic phase. The exoerythrocytic phase involves infection of the hepatic system, or liver, whereas the erythrocytic phase involves infection of the erythrocytes, or red blood cells. When an infected mosquito pierces a person's skin to take a blood meal, sporozoites in the mosquito's saliva enter the bloodstream and migrate to the liver. Within 30 minutes of being introduced into the human host, the sporozoites infect hepatocytes, multiplying asexually and asymptomatically for a period of 6–15 days. Once in the liver, these organisms differentiate to yield thousands of merozoites, which, following rupture of their host cells, escape into the blood and infect red blood cells, thus beginning the erythrocytic stage of the life cycle.[33] The parasite escapes from the liver undetected by wrapping itself in the cell membrane of the infected host liver cell.[34]

Within the red blood cells, the parasites multiply further, again asexually, periodically breaking out of their host cells to invade fresh red blood cells. Several such amplification cycles occur. The classical waves of fever therefore arise from simultaneous waves of merozoites escaping from and infecting red blood cells.

Some P. vivax and P. ovale sporozoites do not immediately develop into exoerythrocytic-phase merozoites, but instead produce hypnozoites that remain dormant for periods ranging from several months (6–12 months is typical) to as long as three years. After a period of dormancy, they reactivate and produce merozoites. Hypnozoites are responsible for the long incubation periods and late relapses seen in these two species.[35]

The parasite is relatively protected from attack by the body's immune system because for most of its human life cycle it resides within liver and blood cells and is relatively invisible to immune surveillance. However, circulating infected blood cells are destroyed in the spleen. To avoid this fate, the P. falciparum parasite displays adhesive proteins on the surface of the infected blood cells, causing the blood cells to stick to the walls of small blood vessels, thereby sequestering the parasite from passage through the general circulation and the spleen.[36] This "stickiness" is the main factor giving rise to hemorrhagic complications of malaria. The smallest blood vessels can be blocked by the attachment of masses of these infected red blood cells, and this blockage underlies syndromes such as placental and cerebral malaria. In cerebral malaria the sequestered red blood cells can breach the blood–brain barrier, possibly leading to coma.[37]

Although the red blood cell surface adhesive proteins (called PfEMP1, for Plasmodium falciparum erythrocyte membrane protein 1) are exposed to the immune system, they do not serve as good immune targets, because of their extreme diversity; there are at least 60 variations of the protein within a single parasite and effectively limitless versions within parasite populations.[36] The parasite switches between a broad repertoire of PfEMP1 surface proteins, thus staying one step ahead of the pursuing immune system.

Some merozoites turn into male and female gametocytes. If a mosquito pierces the skin of an infected person, it potentially picks up gametocytes within the blood. Fertilization and sexual recombination of the parasite occur in the mosquito's gut, thereby defining the mosquito as the definitive host of the disease. New sporozoites develop and travel to the mosquito's salivary glands, completing the cycle. Pregnant women are especially attractive to mosquitoes,[38] and malaria in pregnant women is an important cause of stillbirths, infant mortality and low birth weight,[39] particularly in P. falciparum infection but also in infections with other species, such as P. vivax.[40]

Diagnosis

Since Charles Laveran first visualised the malaria parasite in blood in 1880,[41] the mainstay of malaria diagnosis has been the microscopic examination of blood.

Fever and septic shock are commonly misdiagnosed as severe malaria in Africa, leading to a failure to treat other life-threatening illnesses. In malaria-endemic areas, the presence of parasitemia does not establish a diagnosis of severe malaria, because parasitemia can be incidental to a concurrent disease. Recent investigations suggest that malarial retinopathy is better (collective sensitivity of 95% and specificity of 90%) than any other clinical or laboratory feature at distinguishing malarial from non-malarial coma.[42]
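Sensitivity and specificity, as quoted for malarial retinopathy, are computed from a 2×2 table that compares a clinical sign against a reference diagnosis: sensitivity is true positives / (true positives + false negatives), and specificity is true negatives / (true negatives + false positives). The Python sketch below uses hypothetical counts, not data from the cited study, chosen only so that they reproduce the 95% and 90% figures; the `TwoByTwo` class is an illustrative name.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Counts of a diagnostic sign against a reference diagnosis."""
    true_pos: int   # sign present, malarial coma confirmed
    false_pos: int  # sign present, coma had another cause
    false_neg: int  # sign absent, malarial coma confirmed
    true_neg: int   # sign absent, coma had another cause

    def sensitivity(self) -> float:
        # Fraction of genuine cases in which the sign is present.
        return self.true_pos / (self.true_pos + self.false_neg)

    def specificity(self) -> float:
        # Fraction of non-cases in which the sign is absent.
        return self.true_neg / (self.true_neg + self.false_pos)


# Hypothetical counts for illustration only (not from the cited study).
table = TwoByTwo(true_pos=95, false_pos=10, false_neg=5, true_neg=90)
print(f"sensitivity = {table.sensitivity():.0%}")  # sensitivity = 95%
print(f"specificity = {table.specificity():.0%}")  # specificity = 90%
```

A high specificity matters here because, as noted above, incidental parasitemia makes false positives for severe malaria common in endemic areas.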

Although blood is the sample most frequently used to make a diagnosis, both saliva and urine have been investigated as alternative, less invasive specimens.[41]

Symptomatic diagnosis

Areas that cannot afford even simple laboratory diagnostic tests often use only a history of subjective fever as the indication to treat for malaria. One study of children in Malawi, using Giemsa-stained blood smears as the reference, showed that when clinical predictors (rectal temperature, nailbed pallor, and splenomegaly) were used as treatment indications, rather than only a history of subjective fever, the proportion of correct diagnoses increased from 21% to 41%, and unnecessary treatment for malaria was significantly decreased.[43]

Microscopic examination of blood films

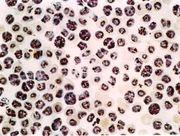

The most economical, preferred, and reliable diagnosis of malaria is microscopic examination of blood films, because each of the four major parasite species has distinguishing characteristics. Two sorts of blood film are traditionally used. Thin films are similar to usual blood films and allow species identification, because the parasite's appearance is best preserved in this preparation. Thick films allow the microscopist to screen a larger volume of blood and are about eleven times more sensitive than thin films, so low levels of infection are easier to detect; however, the parasite's appearance is much more distorted, so distinguishing between the different species can be much more difficult. Given these complementary strengths and weaknesses, both thick and thin smears should be examined when attempting to make a definitive diagnosis.[44]

From the thick film, an experienced microscopist can detect parasite levels (parasitemia) as low as about 0.0001% of red blood cells, roughly 5 parasites per microlitre of blood. Diagnosis of species can be difficult because the early trophozoites ("ring forms") of all four species look identical; it is never possible to diagnose species on the basis of a single ring form, and species identification is always based on several trophozoites.

P. malariae and P. knowlesi (which is the most common cause of malaria in South-east Asia) look very similar under the microscope. However, P. knowlesi parasitemia increases very fast and causes more severe disease than P. malariae, so it is important to identify and treat infections quickly. In this part of the world, modern methods that can distinguish between the two, such as PCR (see "Molecular methods" below) or monoclonal antibody panels, should therefore be used.[45]

Antigen tests

For areas where microscopy is not available, or where laboratory staff are not experienced at malaria diagnosis, there are commercial antigen detection tests that require only a drop of blood.[46] Immunochromatographic tests (also called Malaria Rapid Diagnostic Tests, Antigen-Capture Assays or "dipsticks") have been developed, distributed and field-tested. These tests use finger-stick or venous blood, the completed test takes a total of 15–20 minutes, and the results are read visually as the presence or absence of colored stripes on the dipstick, so they are suitable for use in the field. The threshold of detection of these rapid diagnostic tests is in the range of 100 parasites/µl of blood (commercial kits range from about 0.002% to 0.1% parasitemia), compared with about 5 parasites/µl for thick-film microscopy. One disadvantage is that dipstick tests are qualitative rather than quantitative: they can determine whether parasites are present in the blood, but not how many.
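Detection thresholds are quoted both as parasites per microlitre and as percent parasitemia, and the two are linked through the red blood cell count. The Python sketch below assumes about 5 million red blood cells per microlitre, a typical adult value that in reality varies with age, sex and anemia, and assumes each parasite sits in a separate red cell. Under those assumptions it reproduces the figure above (100 parasites/µl is about 0.002% parasitemia) and converts the thick-film figure of 5 parasites/µl. The function names are illustrative.

```python
# Assumed typical red blood cell count per microlitre; real values vary
# with age, sex and anemia.
RBC_PER_MICROLITRE = 5_000_000


def percent_parasitemia(parasites_per_ul: float,
                        rbc_per_ul: float = RBC_PER_MICROLITRE) -> float:
    """Convert a parasite density (parasites per microlitre) to percent of red cells.

    Assumes each parasite occupies a separate red cell (no multiple infections).
    """
    return 100.0 * parasites_per_ul / rbc_per_ul


def parasites_per_ul(percent: float,
                     rbc_per_ul: float = RBC_PER_MICROLITRE) -> float:
    """Inverse conversion: percent parasitemia back to parasites per microlitre."""
    return percent / 100.0 * rbc_per_ul


for label, density in [("rapid diagnostic test", 100), ("thick film", 5)]:
    print(f"{label}: {density} parasites per microlitre = "
          f"{percent_parasitemia(density):.4f}%")
# rapid diagnostic test: 100 parasites per microlitre = 0.0020%
# thick film: 5 parasites per microlitre = 0.0001%
```

Running the inverse function on the upper kit figure of 0.1% gives roughly 5,000 parasites per microlitre under the same assumed red cell count.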

The first rapid diagnostic tests used P. falciparum glutamate dehydrogenase (pGluDH) as the antigen.[47] pGluDH was soon replaced by P. falciparum lactate dehydrogenase (pLDH), a 33 kDa oxidoreductase [EC 1.1.1.27]. It is the last enzyme of the glycolytic pathway, essential for ATP generation, and one of the most abundant enzymes expressed by P. falciparum. pLDH does not persist in the blood but clears at about the same time as the parasites following successful treatment. The lack of antigen persistence after treatment makes the pLDH test useful in predicting treatment failure; in this respect, pLDH is similar to pGluDH. Depending on which monoclonal antibodies are used, this type of assay can distinguish between all five species of human malaria parasites, because of antigenic differences between their pLDH isoenzymes.

Molecular methods

Molecular methods are available in some clinical laboratories, and rapid real-time assays (for example, QT-NASBA, which is based on nucleic acid sequence-based amplification rather than the polymerase chain reaction)[48] are being developed in the hope of deploying them in endemic areas.

PCR and other molecular methods are more accurate than microscopy, but they are expensive and require a specialized laboratory. Moreover, levels of parasitemia in peripheral blood do not necessarily correlate with the progression of disease, particularly when the parasite is able to adhere to blood vessel walls and is therefore absent from the circulating blood. More sensitive, low-tech diagnostic tools therefore need to be developed to detect low levels of parasitemia in the field.[49]

Prevention

Methods used to prevent the spread of disease, or to protect individuals in areas where malaria is endemic, include prophylactic drugs, mosquito eradication, and the prevention of mosquito bites. The continued existence of malaria in an area requires a combination of high human population density, high mosquito population density, and high rates of transmission from humans to mosquitoes and from mosquitoes to humans. If any of these is lowered sufficiently, the parasite will sooner or later disappear from that area, as happened in North America, Europe and much of the Middle East. However, unless the parasite is eliminated from the whole world, it could become re-established if conditions revert to a combination that favors the parasite's reproduction. Many countries are seeing an increasing number of imported malaria cases due to extensive travel and migration.

Many researchers argue that prevention of malaria may be more cost-effective than treatment of the disease in the long run, but the capital costs required are out of reach of many of the world's poorest people. Economic adviser Jeffrey Sachs estimates that malaria can be controlled for US$3 billion in aid per year.[50]

The distribution of funding varies among countries. Countries with large populations do not receive the same amount of support. The 34 countries that received a per capita annual support of less than $1 included some of the poorest countries in Africa.

Brazil, Eritrea, India, and Vietnam have, unlike many other developing nations, successfully reduced the malaria burden. Common success factors included conducive country conditions, a targeted technical approach using a package of effective tools, data-driven decision-making, active leadership at all levels of government, involvement of communities, decentralized implementation and control of finances, skilled technical and managerial capacity at national and sub-national levels, hands-on technical and programmatic support from partner agencies, and sufficient and flexible financing.[51]

Vector control

Efforts to eradicate malaria by eliminating mosquitoes have been successful in some areas. Malaria was once common in the United States and southern Europe, but vector control programs, in conjunction with the monitoring and treatment of infected humans, eliminated it from those regions. In some areas, the draining of wetland breeding grounds and better sanitation were adequate. Malaria was eliminated from the northern parts of the USA in the early 20th century by such methods, and the use of the pesticide DDT eliminated it from the South by 1951.[52] In 2002, there were 1,059 cases of malaria reported in the US, including eight deaths, but in only five of those cases was the disease contracted in the United States.

Before DDT, malaria was also successfully eradicated or controlled in several tropical areas by removing or poisoning the breeding grounds of the mosquitoes or the aquatic habitats of their larval stages, for example by filling in or applying oil to places with standing water. These methods have seen little application in Africa for more than half a century.[53]

The sterile insect technique is emerging as a potential mosquito control method. Progress towards transgenic, or genetically modified, insects suggests that wild mosquito populations could be made malaria-resistant. Researchers at Imperial College London created the world's first transgenic malaria mosquito,[54] with the first Plasmodium-resistant mosquito strain announced by a team at Case Western Reserve University in Ohio in 2002.[55] Successful replacement of current populations with a new genetically modified population relies upon a drive mechanism, such as transposable elements, to allow for non-Mendelian inheritance of the gene of interest. However, this approach faces many difficulties and success is a distant prospect.[56] An even more futuristic method of vector control is the idea that lasers could be used to kill flying mosquitoes.[57]

Prophylactic drugs

Several drugs, most of which are also used for treatment of malaria, can be taken preventively. Modern drugs used include mefloquine (Lariam), doxycycline (available generically), and the combination of atovaquone and proguanil hydrochloride (Malarone). Doxycycline and the atovaquone-proguanil combination are the best tolerated; mefloquine is associated with higher rates of neurological and psychiatric symptoms.[58] The choice of drug depends on which drugs the parasites in the area are resistant to, as well as on side effects and other considerations. The prophylactic effect does not begin immediately after starting the drugs, so people temporarily visiting malaria-endemic areas usually begin taking them one to two weeks before arriving and must continue taking them for 4 weeks after leaving (the exception is atovaquone-proguanil, which need only be started 2 days before arrival and continued for 7 days after leaving). Generally, these drugs are taken daily or weekly, at a lower dose than would be used to treat a person who had actually contracted the disease. Use of prophylactic drugs is seldom practical for full-time residents of malaria-endemic areas, and their use is usually restricted to short-term visitors and travelers to malarial regions. This is because of the cost of the drugs, negative side effects from long-term use, and the difficulty of obtaining some effective antimalarial drugs outside wealthy nations.
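The timing rules in the paragraph above reduce to simple date arithmetic. The Python sketch below encodes only the intervals quoted there and takes the upper end (14 days) of the usual one-to-two-week lead time as an assumption; the regimen keys, including the label "standard", and the `prophylaxis_window` function are hypothetical names, and actual regimens differ by drug and by current guidance.

```python
from datetime import date, timedelta

# Start/stop offsets in days, taken from the intervals described in the text.
# "standard" is a hypothetical label; it assumes the upper end (14 days)
# of the one-to-two-week lead time.
REGIMENS = {
    "standard": {"start_before": 14, "continue_after": 28},
    "atovaquone-proguanil": {"start_before": 2, "continue_after": 7},
}


def prophylaxis_window(arrival: date, departure: date,
                       regimen: str) -> tuple[date, date]:
    """Return (first_dose_date, last_dose_date) for a trip to an endemic area."""
    if departure < arrival:
        raise ValueError("departure must not precede arrival")
    offsets = REGIMENS[regimen]
    first = arrival - timedelta(days=offsets["start_before"])
    last = departure + timedelta(days=offsets["continue_after"])
    return first, last


trip = (date(2009, 6, 1), date(2009, 6, 15))
for name in REGIMENS:
    first, last = prophylaxis_window(*trip, name)
    print(f"{name}: {first} to {last} ({(last - first).days + 1} days)")
# standard: 2009-05-18 to 2009-07-13 (57 days)
# atovaquone-proguanil: 2009-05-30 to 2009-06-22 (24 days)
```

For a two-week trip, the shorter lead and tail of atovaquone-proguanil cut the total course from 57 to 24 days under these assumptions.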

Quinine was used historically; however, the development of more effective alternatives such as quinacrine, chloroquine, and primaquine in the 20th century reduced its use. Today, quinine is not generally used for prophylaxis. The use of prophylactic drugs where malaria-bearing mosquitoes are present may encourage the development of partial immunity.[59]

Indoor residual spraying

Indoor residual spraying (IRS) is the practice of spraying insecticides on the interior walls of homes in malaria-affected areas. After feeding, many mosquito species rest on a nearby surface while digesting the blood meal, so if the walls of dwellings have been coated with insecticides, the resting mosquitoes will be killed before they can bite another person and transmit the malaria parasite.

The first pesticide used for IRS was DDT.[52] Although it was initially used exclusively to combat malaria, its use quickly spread to agriculture. In time, pest control, rather than disease control, came to dominate DDT use, and this large-scale agricultural use led to the evolution of resistant mosquitoes in many regions. DDT resistance in Anopheles mosquitoes is comparable to antibiotic resistance in bacteria: just as the overuse of antibacterial soaps and antibiotics led to resistant bacteria, overspraying of DDT on crops led to resistant mosquitoes. During the 1960s, awareness of the negative consequences of its indiscriminate use increased, ultimately leading to bans on agricultural applications of DDT in many countries in the 1970s. Because agricultural use of DDT has been limited or banned for some time, DDT may now be more effective as a method of disease control.

Although DDT has never been banned for use in malaria control and there are several other insecticides suitable for IRS, some advocates have claimed that bans are responsible for tens of millions of deaths in tropical countries where DDT had once been effective in controlling malaria. Furthermore, most of the problems associated with DDT use stem specifically from its industrial-scale application in agriculture, rather than its use in public health.[60]

The World Health Organization (WHO) currently advises the use of 12 different insecticides in IRS operations. These include DDT and a series of alternative insecticides (such as the pyrethroids permethrin and deltamethrin), to combat malaria in areas where mosquitoes are DDT-resistant and to slow the evolution of resistance.[61] This public health use of small amounts of DDT is permitted under the Stockholm Convention on Persistent Organic Pollutants (POPs), which prohibits the agricultural use of DDT.[62] However, because of its legacy, many developed countries discourage DDT use even in small quantities.[63][64]

One problem with all forms of indoor residual spraying is insecticide resistance arising through the evolution of mosquitoes. According to a study on mosquito behavior and vector control, the mosquito species affected by IRS are endophilic (they tend to rest and live indoors), and because of the irritation caused by spraying, their descendants are evolving towards exophilic behavior (resting and living outdoors). Such mosquitoes are less affected by IRS, if at all, which reduces its value as a defense.[65]

Mosquito nets and bedclothes

Mosquito nets help keep mosquitoes away from people and greatly reduce the infection and transmission of malaria. The nets are not a perfect barrier, so they are often treated with an insecticide designed to kill the mosquito before it has time to find a way past the net. Insecticide-treated nets (ITNs) are estimated to be twice as effective as untreated nets and offer greater than 70% protection compared with no net.[66] Although ITNs are proven to be very effective against malaria, fewer than 2% of children in urban areas of sub-Saharan Africa are protected by them. Since Anopheles mosquitoes feed at night, the preferred method is to hang a large "bed net" above the center of a bed so that it drapes down and covers the bed completely.
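Protection figures such as the "greater than 70%" quoted above are usually expressed as protective efficacy: one minus the ratio of the infection rate among people using the intervention to the rate among people without it. The Python sketch below uses made-up infection rates, not data from the cited estimate, chosen so that the treated net gives roughly twice the protection of the untreated one; `protective_efficacy` is a name introduced here for illustration.

```python
def protective_efficacy(rate_protected: float, rate_unprotected: float) -> float:
    """Protective efficacy = 1 - (incidence with intervention / incidence without).

    Rates can be in any consistent unit, e.g. infections per person-year.
    """
    if rate_unprotected <= 0:
        raise ValueError("unprotected incidence must be positive")
    return 1.0 - rate_protected / rate_unprotected


# Hypothetical incidences (infections per person-year), for illustration only.
no_net = 1.0
untreated_net = 0.65
treated_net = 0.28

for label, rate in [("untreated net", untreated_net), ("treated net", treated_net)]:
    print(f"{label}: {protective_efficacy(rate, no_net):.0%} protection")
# untreated net: 35% protection
# treated net: 72% protection
```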

The distribution of mosquito nets impregnated with insecticides such as permethrin or deltamethrin has been shown to be an extremely effective method of malaria prevention. These nets can often be obtained for around US$2.50 to US$3.50 (€2 to €3) from the United Nations, the World Health Organization (WHO), and others. ITNs have been shown to be the most cost-effective prevention method against malaria and are part of the United Nations Millennium Development Goals (MDGs).

While some experts argue that international organizations should distribute ITNs and LLINs to people for free in order to maximize coverage (since such a policy would reduce price barriers), others insist that cost-sharing between the international organization and recipients would lead to greater usage of the net (arguing that people will value a net more if they pay for it). Additionally, proponents of cost-sharing argue that such a policy ensures that nets are efficiently allocated to those people who most need them (or are most vulnerable to infection). Through a "selection effect", they argue, those people who most need the bed nets will choose to purchase them, while those less in need will opt out.

However, a randomized controlled trial of ITN uptake among pregnant women in Kenya, conducted by economists Pascaline Dupas and Jessica Cohen, found that cost-sharing does not necessarily increase the usage intensity of ITNs, nor does it induce uptake by those most vulnerable to infection, as compared with a policy of free distribution.[67] In some cases, cost-sharing can actually decrease demand for mosquito nets by erecting a price barrier. Dupas and Cohen’s findings support the argument that free distribution of ITNs can be more effective than cost-sharing in both increasing coverage and saving lives. In a cost-effectiveness analysis, Dupas and Cohen note that "cost-sharing is at best marginally more cost-effective than free distribution, but free distribution leads to many more lives saved."[67]

The researchers base their conclusions about the cost-effectiveness of free distribution on the proven spillover benefits of increased ITN usage.[68] When a large number of nets are distributed in one residential area, their chemical additives help reduce the number of mosquitoes in the environment. With fewer mosquitoes in the environment, the chances of malaria infection for recipients and non-recipients are significantly reduced. (In other words, when ITNs are highly concentrated in one residential cluster or community, the positive externality of the nets in reducing the local mosquito population becomes more important relative to their physical barrier effect.)

For maximum effectiveness, the nets should be re-impregnated with insecticide every six months. This process poses a significant logistical problem in rural areas. New technologies like Olyset or DawaPlus allow for production of long-lasting insecticidal mosquito nets (LLINs), which release insecticide for approximately 5 years,[69] and cost about US$5.50. ITNs protect people sleeping under the net and simultaneously kill mosquitoes that contact the net. Some protection is also provided to others by this method, including people sleeping in the same room but not under the net.

While distributing mosquito nets is a major component of malaria prevention, distribution campaigns are accompanied by community education about the dangers of malaria, to make sure that people who receive a net know how to use it. "Hang Up" campaigns, such as those conducted by volunteers of the International Red Cross and Red Crescent Movement, consist of visiting households that received a net at the end of the campaign or just before the rainy season, to ensure that the net is being used properly and that the people most vulnerable to malaria, such as young children and the elderly, sleep under it. A study conducted by the CDC in Sierra Leone showed a 22 percent increase in net utilization following a personal visit from a volunteer living in the same community promoting net usage. A study in Togo showed similar improvements.[70]

Mosquito nets are often unaffordable to people in developing countries, especially for those most at risk. Only 1 out of 20 people in Africa owns a bed net. Nets are also often distributed through vaccine campaigns using voucher subsidies, such as the measles campaign for children. A study among Afghan refugees in Pakistan found that treating top-sheets and chaddars (head coverings) with permethrin has similar effectiveness to using a treated net, but is much cheaper.[71] Another alternative approach uses spores of the fungus Beauveria bassiana, sprayed on walls and bed nets, to kill mosquitoes. While some mosquitoes have developed resistance to chemical insecticides, they have not been found to develop resistance to fungal infections.[72]

Vaccination

Immunity (or, more accurately, tolerance) does occur naturally, but only in response to repeated infection with multiple strains of malaria.[73]

Vaccines for malaria are under development, with no completely effective vaccine yet available. The first promising studies demonstrating the potential for a malaria vaccine were performed in 1967 by immunizing mice with live, radiation-attenuated sporozoites, providing protection to about 60% of the mice upon subsequent injection with normal, viable sporozoites.[74] Since the 1970s, there has been a considerable effort to develop similar vaccination strategies within humans. It was determined that an individual can be protected from a P. falciparum infection if they receive over 1,000 bites from infected, irradiated mosquitoes.[75]

It has generally been accepted that it is impractical to provide at-risk individuals with this vaccination strategy, but that view has recently been challenged by the work of Dr. Stephen Hoffman, one of the key researchers who originally sequenced the genome of Plasmodium falciparum. His recent work has focused on solving the logistical problem of isolating and preparing the parasites equivalent to 1,000 irradiated mosquitoes for mass storage and inoculation of human beings. His company has recently received several multi-million dollar grants from the Bill & Melinda Gates Foundation and the U.S. government to begin early clinical studies in 2007 and 2008.[76] The Seattle Biomedical Research Institute (SBRI), funded by the Malaria Vaccine Initiative, assures potential volunteers that "the [2009] clinical trials won't be a life-threatening experience. While many volunteers [in Seattle] will actually contract malaria, the cloned strain used in the experiments can be quickly cured, and does not cause a recurring form of the disease. Some participants will get experimental drugs or vaccines, while others will get placebo."[77]

Instead, much work has been performed to try to understand the immunological processes that provide protection after immunization with irradiated sporozoites. After the mouse vaccination study in 1967,[74] it was hypothesized that the injected sporozoites themselves were being recognized by the immune system, which was in turn creating antibodies against the parasite. It was determined that the immune system was creating antibodies against the circumsporozoite protein (CSP), which coated the sporozoite.[78] Moreover, antibodies against CSP prevented the sporozoite from invading hepatocytes.[79] CSP was therefore chosen as the most promising protein on which to develop a vaccine against the malaria sporozoite. It is for these historical reasons that vaccines based on CSP are the most numerous of all malaria vaccines.

A wide variety of vaccine candidates is currently under investigation. Pre-erythrocytic vaccines (vaccines that target the parasite before it reaches the blood), in particular vaccines based on CSP, make up the largest group of research for the malaria vaccine. There have been recent breakthroughs in vaccines that seek to avoid the more severe pathologies of malaria by preventing adherence of the parasite to blood venules and placenta, but financing is not yet in place for trials.[80] Other potential vaccines include those that seek to induce immunity to the blood stages of the infection, and transmission-blocking vaccines that would stop the development of the parasite in the mosquito right after the mosquito has taken a bloodmeal from an infected person.[81] It is hoped that knowledge of the P. falciparum genome, the sequencing of which was completed in 2002,[82] will provide targets for new drugs or vaccines.[83]

The first vaccine developed that has undergone field trials is SPf66, developed by Manuel Elkin Patarroyo in 1987. It presents a combination of antigens from the sporozoite (using CS repeats) and merozoite parasites. During phase I trials a 75% efficacy rate was demonstrated, and the vaccine appeared to be well tolerated by subjects and immunogenic. The phase IIb and III trials were less promising, with efficacy falling to between 38.8% and 60.2%. A trial carried out in Tanzania in 1993 found the efficacy to be 31% after a year's follow-up; however, the most recent (though controversial) study, in The Gambia, did not show any effect. Despite the relatively long trial periods and the number of studies carried out, it is still not known how the SPf66 vaccine confers immunity; it therefore remains an unlikely solution to malaria.

The CSP vaccine was the next to appear promising enough to undergo trials. It is also based on the circumsporozoite protein, but additionally has the recombinant [(Asn-Ala-Asn-Pro)15-Asn-Val-Asp-Pro]2-Leu-Arg (R32LR) protein covalently bound to a purified Pseudomonas aeruginosa toxin (A9). However, a complete lack of protective immunity was demonstrated at an early stage in those inoculated: the vaccinated study group in Kenya had an 82% incidence of parasitaemia, compared with 89% in the control group. The vaccine was intended to increase the T-lymphocyte response in those exposed, but this was also not observed.
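The Kenyan result above can be expressed with the usual vaccine-efficacy formula, efficacy = 1 − (incidence among vaccinated ÷ incidence among controls). The Python sketch below applies it to the two incidences quoted; it assumes the percentages are comparable attack rates in similar groups followed over the same period, and the function name `vaccine_efficacy` is a hypothetical helper, not something taken from the trial reports.

```python
def vaccine_efficacy(incidence_vaccinated: float, incidence_control: float) -> float:
    """Relative reduction in incidence: 1 - (vaccinated rate / control rate).

    Both arguments are proportions in [0, 1]. Hypothetical helper for
    illustration; real trials also report confidence intervals.
    """
    if not 0 < incidence_control <= 1:
        raise ValueError("control incidence must be in (0, 1]")
    if not 0 <= incidence_vaccinated <= 1:
        raise ValueError("vaccinated incidence must be in [0, 1]")
    return 1 - incidence_vaccinated / incidence_control


# Parasitaemia incidences quoted for the Kenyan CSP trial (82% vs 89%).
efficacy = vaccine_efficacy(0.82, 0.89)
print(f"Estimated efficacy: {efficacy:.1%}")
```

The estimate comes out under 8%, which illustrates why the trial was read as showing no protective immunity. Because the group sizes are not given here, this point estimate says nothing about statistical significance.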

The efficacy of Patarroyo's vaccine has been disputed, with some US scientists concluding in The Lancet (1997) that "the vaccine was not effective and should be dropped", while Patarroyo accused them of "arrogance", attributing their assertions to the fact that he came from a developing country.

The RTS,S/AS02A vaccine is the candidate furthest along in vaccine trials. It is being developed by a partnership between the PATH Malaria Vaccine Initiative (a grantee of the Gates Foundation), the pharmaceutical company GlaxoSmithKline, and the Walter Reed Army Institute of Research.[84] In the vaccine, a portion of CSP has been fused to the immunogenic "S antigen" of the hepatitis B virus; this recombinant protein is injected alongside the potent AS02A adjuvant.[81] In October 2004, the RTS,S/AS02A researchers announced results of a Phase IIb trial, indicating the vaccine reduced infection risk by approximately 30% and severity of infection by over 50%. The study looked at over 2,000 Mozambican children.[85] More recent testing of the RTS,S/AS02A vaccine has focused on the safety and efficacy of administering it earlier in infancy: in October 2007, the researchers announced results of a phase I/IIb trial conducted on 214 Mozambican infants between the ages of 10 and 18 months, in which the full three-dose course of the vaccine led to a 62% reduction of infection with no serious side-effects apart from some pain at the injection site.[86] Further research is expected to delay commercial release of this vaccine until around 2011.[87]

On 6 April 2010, Crucell, a Dutch biopharmaceutical company, signed a binding letter of agreement with GlaxoSmithKline Biologicals (GSK) to collaborate on developing a malaria vaccine candidate.

Other methods

Education in recognizing the symptoms of malaria has reduced the number of cases in some areas of the developing world by as much as 20%. Recognizing the disease in its early stages can also stop it from becoming fatal. Education can also encourage people to cover areas of stagnant water, such as water tanks, which are ideal breeding grounds for the parasite and mosquito, thus cutting down the risk of transmission between people. This is most often put into practice in urban areas, where large populations live in a confined space and transmission would be most likely.

The Malaria Control Project is currently using idle computing power donated by individual volunteers around the world (see Volunteer computing and BOINC) to simulate models of the health effects and transmission dynamics of malaria, in order to find the best method or combination of methods for malaria control. This modeling is extremely computationally intensive because it simulates large human populations with a vast range of parameters related to the biological and social factors that influence the spread of the disease. It is expected to take a few months using volunteered computing power, compared with the 40 years it would have taken with the resources available to the scientists who developed the program.[88]

An example of the importance of computer modeling in planning malaria eradication programs is the paper by Águas and others, which showed that eradication of malaria is crucially dependent on finding and treating the large number of people in endemic areas with asymptomatic malaria, who act as a reservoir for infection.[89] Because the malaria parasites do not affect animal species, eradication of the disease from the human population would be expected to be effective.

Other interventions for the control of malaria include mass drug administrations and intermittent preventive therapy.

As a further attempt to reduce transmission rates, a proposed alternative to mosquito nets is the mosquito laser, or photonic fence, which identifies female mosquitoes and shoots them using a medium-powered laser.[90] The device is currently undergoing commercial development, although instructions for a DIY version of the photonic fence have also been published.[91]

Another way of reducing the transmission of malaria from mosquitoes to humans has been developed at the University of Arizona, where researchers have engineered a mosquito to be resistant to malaria. The work was reported on 16 July 2010 in the journal PLoS Pathogens.[92] The ultimate aim is to release such GM mosquitoes into the environment; Gareth Lycett, a malaria researcher at the Liverpool School of Tropical Medicine, told the BBC that "It is another step on the journey towards potentially assisting malaria control through GM mosquito release."[93]

Treatment

The treatment of malaria depends on the severity of the disease. Uncomplicated malaria is treated with oral drugs. Whether patients who can take oral drugs have to be admitted depends on the assessment and experience of the clinician. Severe malaria requires the parenteral administration of antimalarial drugs. The traditional treatment for severe malaria has been quinine, but there is evidence that the artemisinins are superior for the treatment of severe malaria. A large clinical trial is currently under way to compare the efficacy of quinine and artesunate in the treatment of severe malaria in African children. Active malaria infection with P. falciparum is a medical emergency requiring hospitalization. Infection with P. vivax, P. ovale or P. malariae can often be treated on an outpatient basis. Treatment of malaria involves supportive measures as well as specific antimalarial drugs. Most antimalarial drugs are produced industrially and are sold at pharmacies. However, as the cost of such medicines is often too high for most people in the developing world, some herbal remedies (such as Artemisia annua tea[94]) have also been developed and have gained support from international organisations such as Médecins Sans Frontières. When properly treated, someone with malaria can expect a complete recovery.[95]

Counterfeit drugs

Sophisticated counterfeits have been found in several Asian countries, such as Cambodia,[96] China,[97] Indonesia, Laos, Thailand and Vietnam, and are an important cause of avoidable death in those countries.[98] The WHO has said that studies indicate that up to 40% of artesunate-based malaria medications are counterfeit, especially in the Greater Mekong region, and has established a rapid alert system to enable information about counterfeit drugs to be rapidly reported to the relevant authorities in participating countries.[99] There is no reliable way for doctors or lay people to detect counterfeit drugs without help from a laboratory. Companies are attempting to combat the persistence of counterfeit drugs by using new technology to provide security from source to distribution.

Epidemiology

Malaria causes about 250 million cases of fever and approximately one million deaths annually.[101] The vast majority of cases occur in children under 5 years old;[102] pregnant women are also especially vulnerable. Despite efforts to reduce transmission and increase treatment, there has been little change in which areas are at risk of this disease since 1992.[103] Indeed, if the prevalence of malaria stays on its present upwards course, the death rate could double in the next twenty years.[104] Precise statistics are unknown because many cases occur in rural areas where people do not have access to hospitals or the means to afford health care. As a consequence, the majority of cases are undocumented.[104]

Although co-infection with HIV and malaria does cause increased mortality, this is less of a problem than with HIV/tuberculosis co-infection, because the two diseases usually affect different age ranges: malaria is most common in the young and active tuberculosis most common in the old.[105] Although HIV/malaria co-infection produces less severe symptoms than the interaction between HIV and TB, HIV and malaria do contribute to each other's spread. This effect comes from malaria increasing viral load and HIV infection increasing a person's susceptibility to malaria infection.[106]

Malaria is presently endemic in a broad band around the equator, in areas of the Americas, many parts of Asia, and much of Africa; however, it is in sub-Saharan Africa where 85–90% of malaria fatalities occur.[107] The geographic distribution of malaria within large regions is complex, and malaria-afflicted and malaria-free areas are often found close to each other.[108] In drier areas, outbreaks of malaria can be predicted with reasonable accuracy by mapping rainfall.[109] Malaria is more common in rural areas than in cities; this is in contrast to dengue fever where urban areas present the greater risk.[110] For example, the cities of Vietnam, Laos and Cambodia are essentially malaria-free, but the disease is present in many rural regions.[111] By contrast, in Africa malaria is present in both rural and urban areas, though the risk is lower in the larger cities.[112] The global endemic levels of malaria have not been mapped since the 1960s. However, the Wellcome Trust, UK, has funded the Malaria Atlas Project[113] to rectify this, providing a more contemporary and robust means with which to assess current and future malaria disease burden.

History

Malaria has infected humans for over 50,000 years, and Plasmodium may have been a human pathogen for the entire history of the species.[114] Close relatives of the human malaria parasites remain common in chimpanzees.[115] References to the unique periodic fevers of malaria are found throughout recorded history, beginning in 2700 BC in China.[116] Malaria may have contributed to the decline of the Roman Empire,[117] and was so pervasive in Rome that it was known as the "Roman fever".[118] The term malaria originates from Medieval Italian: mala aria — "bad air"; the disease was formerly called ague or marsh fever due to its association with swamps and marshland.[119] Malaria was once common in most of Europe and North America,[120] where it is no longer endemic,[121] though imported cases do occur. Malaria was the most important health hazard encountered by U.S. troops in the South Pacific during World War II, where about 500,000 men were infected.[122] Sixty thousand American soldiers died of malaria during the North African and South Pacific campaigns.[123]

Scientific studies on malaria made their first significant advance in 1880, when Charles Louis Alphonse Laveran, a French army doctor working in the military hospital of Constantine in Algeria, observed parasites for the first time inside the red blood cells of people suffering from malaria. He therefore proposed that malaria is caused by this organism, the first time a protist was identified as causing disease.[124] For this and later discoveries, he was awarded the 1907 Nobel Prize for Physiology or Medicine. The malarial parasite was called Plasmodium by the Italian scientists Ettore Marchiafava and Angelo Celli.[125] In 1881, Carlos Finlay, a Cuban doctor treating patients with yellow fever in Havana, provided strong evidence that mosquitoes were transmitting disease to and from humans.[126] This work followed earlier suggestions by Josiah C. Nott,[127] and work by Patrick Manson on the transmission of filariasis.[128]

It was Britain's Sir Ronald Ross, working in the Presidency General Hospital in Calcutta, who finally proved in 1898 that malaria is transmitted by mosquitoes. He did this by showing that certain mosquito species transmit malaria to birds. He isolated malaria parasites from the salivary glands of mosquitoes that had fed on infected birds.[129] For this work, Ross received the 1902 Nobel Prize in Medicine. After resigning from the Indian Medical Service, Ross worked at the newly established Liverpool School of Tropical Medicine and directed malaria-control efforts in Egypt, Panama, Greece and Mauritius.[130] The findings of Finlay and Ross were later confirmed by a medical board headed by Walter Reed in 1900. Its recommendations were implemented by William C. Gorgas in the health measures undertaken during construction of the Panama Canal. This public-health work saved the lives of thousands of workers and helped develop the methods used in future public-health campaigns against the disease.

The first effective treatment for malaria came from the bark of the cinchona tree, which contains quinine. This tree grows on the slopes of the Andes, mainly in Peru. The indigenous peoples of Peru made a tincture of cinchona to control malaria. The Jesuits noted the efficacy of the practice and introduced the treatment to Europe during the 1640s, where it was rapidly accepted.[131] It was not until 1820 that the active ingredient, quinine, was extracted from the bark, isolated and named by the French chemists Pierre Joseph Pelletier and Joseph Bienaimé Caventou.[132]

In the early 20th century, before antibiotics became available, Julius Wagner-Jauregg discovered that patients with syphilis could be treated by intentionally infecting them with malaria; the resulting fever would kill the syphilis spirochetes, and quinine could be administered to control the malaria. Although some patients died from malaria, this was considered preferable to the almost-certain death from syphilis.[133]

The first successful continuous malaria culture was established in 1976 by William Trager and James B. Jensen, which facilitated research into the molecular biology of the parasite and the development of new drugs.[134][135]

Although the blood and mosquito stages of the malaria life cycle were identified in the 19th and early 20th centuries, it was not until the 1980s that the latent liver form of the parasite was observed.[136][137] The discovery of this latent form of the parasite explained why people could appear to be cured of malaria but suffer relapse years after the parasite had disappeared from their bloodstreams.

Genetic resistance to malaria

Malaria is thought to have been the greatest selective pressure on the human genome in recent history.[138] This is due to the high levels of mortality and morbidity caused by malaria, especially the P. falciparum species.

Sickle-cell disease

The most-studied influence of the malaria parasite upon the human genome is a hereditary blood disease, sickle-cell disease. The sickle-cell mutation causes disease, but even those only partially affected by it (carriers of a single copy, said to have sickle-cell trait) have substantial protection against malaria.

In sickle-cell disease, there is a mutation in the HBB gene, which encodes the beta-globin subunit of haemoglobin. The normal allele encodes a glutamate at position six of the beta-globin protein, whereas the sickle-cell allele encodes a valine. This change from a hydrophilic to a hydrophobic amino acid encourages binding between haemoglobin molecules, with polymerization of haemoglobin deforming red blood cells into a "sickle" shape. Such deformed cells are cleared rapidly from the blood, mainly in the spleen, for destruction and recycling.

In the merozoite stage of its life cycle, the malaria parasite lives inside red blood cells, and its metabolism changes the internal chemistry of the red blood cell. Infected cells normally survive until the parasite reproduces, but, if the red cell contains a mixture of sickle and normal haemoglobin, it is likely to become deformed and be destroyed before the daughter parasites emerge. Thus, individuals heterozygous for the mutated allele, known as sickle-cell trait, may have a low and usually unimportant level of anaemia, but also have a greatly reduced chance of serious malaria infection. This is a classic example of heterozygote advantage.

Individuals homozygous for the mutation have full sickle-cell disease and in traditional societies rarely live beyond adolescence. However, in populations where malaria is endemic, the frequency of sickle-cell genes is around 10%. The existence of four haplotypes of sickle-type hemoglobin suggests that this mutation has emerged independently at least four times in malaria-endemic areas, further demonstrating its evolutionary advantage in such affected regions. There are also other mutations of the HBB gene that produce haemoglobin molecules capable of conferring similar resistance to malaria infection. These mutations produce haemoglobin types HbE and HbC, which are common in Southeast Asia and Western Africa, respectively.
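The heterozygote advantage described above can be illustrated with a textbook one-locus selection model. In this Python sketch, which is an illustrative assumption rather than a model from the cited studies, normal homozygotes (AA) lose a fraction `s` of their fitness to malaria, sickle-cell homozygotes (SS) lose a fraction `t` to sickle-cell disease, and carriers (AS) keep full fitness; under random mating the sickle-allele frequency then settles at s/(s + t). The parameter values (t = 1.0 because homozygotes rarely reach adulthood, s = 0.111) are hypothetical, chosen so the equilibrium lands near the 10% figure in the text, and reading that figure as an allele frequency is itself an assumption.

```python
def next_sickle_freq(q: float, s: float, t: float) -> float:
    """One generation of viability selection under random mating.

    q: frequency of the sickle (S) allele
    s: fitness cost of the normal homozygote (AA), e.g. malaria deaths
    t: fitness cost of the sickle homozygote (SS), e.g. sickle-cell disease
    The heterozygote (AS) has fitness 1.
    """
    p = 1 - q
    w_aa, w_as, w_ss = 1 - s, 1.0, 1 - t
    mean_fitness = p * p * w_aa + 2 * p * q * w_as + q * q * w_ss
    # Share of S alleles among survivors: half of each AS carrier's alleles
    # plus both alleles of each SS individual, weighted by fitness.
    return (p * q * w_as + q * q * w_ss) / mean_fitness


def equilibrium_freq(s: float, t: float) -> float:
    """Stable equilibrium under heterozygote advantage: q* = s / (s + t)."""
    return s / (s + t)


if __name__ == "__main__":
    s, t = 0.111, 1.0  # hypothetical illustrative values
    q = 0.001          # start from a rare mutation
    for _ in range(500):
        q = next_sickle_freq(q, s, t)
    print(f"simulated q after 500 generations: {q:.4f}")
    print(f"analytic q*: {equilibrium_freq(s, t):.4f}")
```

The sketch is qualitative: even though selection removes essentially every SS individual, the S allele persists because carriers outperform non-carriers where malaria is endemic.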

Thalassaemias

Another well-documented set of malaria-associated mutations in the human genome are those that cause the blood disorders known as thalassaemias. Studies in Sardinia and Papua New Guinea have found that the gene frequency of β-thalassaemias is related to the level of malarial endemicity in a given population. A study of more than 500 children in Liberia found that those with β-thalassaemia had a 50% lower chance of getting clinical malaria. Similar studies have found links between gene frequency and malaria endemicity in the α+ form of α-thalassaemia. Presumably these genes have also been selected in the course of human evolution.

Duffy antigens

The Duffy antigens are expressed on red blood cells and other cells in the body, where they act as chemokine receptors. The expression of Duffy antigens on blood cells is encoded by Fy genes (Fya, Fyb, Fyc etc.). The Plasmodium vivax parasite uses the Duffy antigen to enter blood cells. However, it is possible to express no Duffy antigen on red blood cells (Fy-/Fy-). This genotype confers complete resistance to P. vivax infection. The genotype is very rare in European, Asian and American populations, but is found in almost all of the indigenous population of West and Central Africa.[140] This is thought to be due to very high exposure to P. vivax in Africa in the last few thousand years.

G6PD

Glucose-6-phosphate dehydrogenase (G6PD) is an enzyme that normally protects from the effects of oxidative stress in red blood cells. However, a genetic deficiency in this enzyme results in increased protection against severe malaria.

HLA and interleukin-4

HLA-B53 is associated with a low risk of severe malaria. This MHC class I molecule presents liver-stage and sporozoite antigens to T cells. Interleukin-4, encoded by IL4, is produced by activated T cells and promotes proliferation and differentiation of antibody-producing B cells. A study of the Fulani of Burkina Faso, who have both fewer malaria attacks and higher levels of antimalarial antibodies than do neighboring ethnic groups, found that the IL4-524 T allele was associated with elevated antibody levels against malaria antigens, which raises the possibility that this might be a factor in increased resistance to malaria.[141]

Resistance in South Asia

The lowest Himalayan Foothills and Inner Terai or Doon Valleys of Nepal and India are highly malarial due to a warm climate and marshes sustained during the dry season by groundwater percolating down from the higher hills. Malarial forests were intentionally maintained by the rulers of Nepal as a defensive measure. Humans attempting to live in this zone suffered much higher mortality than at higher elevations or below on the drier Gangetic Plain.

However, the Tharu people had lived in this zone long enough to evolve resistance via multiple genes. Medical studies among the Tharu and non-Tharu populations of the Terai yielded evidence that the prevalence of cases of residual malaria is nearly seven times lower among Tharus. The basis for their resistance to malaria is most likely genetic. Endogamy along caste and ethnic lines appears to have confined these genes to the Tharu community.[142] Otherwise, these genes would probably have become nearly universal in South Asia and beyond, because of their considerable survival value and the apparent lack of negative effects comparable to those of sickle-cell anaemia.

Society and culture

Malaria is not just a disease commonly associated with poverty but also a cause of poverty and a major hindrance to economic development. Tropical regions are affected most; however, malaria's furthest extent reaches into some temperate zones with extreme seasonal changes. The disease has been associated with major negative economic effects on regions where it is widespread. During the late 19th and early 20th centuries, it was a major factor in the slow economic development of the American southern states.[143] A comparison of average per capita GDP in 1995, adjusted for purchasing power parity, between countries with malaria and countries without malaria gives a fivefold difference ($1,526 USD versus $8,268 USD). Between 1965 and 1990, average per capita GDP in countries where malaria is common rose only 0.4% per year, compared with 2.4% per year in other countries.[144]
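The growth figures above compound over time. The Python sketch below checks the "fivefold" ratio and converts the two average annual rates into total growth over 1965–1990, assuming (as a simplification the source does not state) that each rate applied uniformly in every one of the 25 years. The constant and function names are illustrative.

```python
# Figures quoted in the text: 1995 per capita GDP (PPP-adjusted, USD)
GDP_WITH_MALARIA = 1_526
GDP_WITHOUT_MALARIA = 8_268

# Average annual per capita GDP growth, 1965-1990, as quoted in the text
RATE_WITH_MALARIA = 0.004
RATE_WITHOUT_MALARIA = 0.024
YEARS = 1990 - 1965  # 25 years


def cumulative_growth(annual_rate: float, years: int) -> float:
    """Total growth factor from compounding a constant annual rate."""
    return (1 + annual_rate) ** years


ratio = GDP_WITHOUT_MALARIA / GDP_WITH_MALARIA
growth_with = cumulative_growth(RATE_WITH_MALARIA, YEARS)
growth_without = cumulative_growth(RATE_WITHOUT_MALARIA, YEARS)

print(f"GDP ratio (without/with malaria): {ratio:.2f}")
print(f"Total growth over {YEARS} years, malarial countries: {growth_with - 1:.0%}")
print(f"Total growth over {YEARS} years, other countries: {growth_without - 1:.0%}")
```

Under that assumption the ratio is about 5.4, and total growth is roughly 10% for malarial countries against roughly 80% for the others, showing how a two-percentage-point gap in annual growth compounds into a wide divergence.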

Poverty is both cause and effect, however, since the poor do not have the financial capacity to prevent or treat the disease. In Malawi in 1994, the lowest income group spent 32% of its annual income on this disease, compared with 4% of household income for the low-to-high income groups.[145] In total, malaria has been estimated to cost Africa $12 billion USD every year. The economic impact includes costs of health care, working days lost due to sickness, days lost in education, decreased productivity due to brain damage from cerebral malaria, and loss of investment and tourism.[102] In some countries with a heavy malaria burden, the disease may account for as much as 40% of public health expenditure, 30–50% of inpatient admissions, and up to 50% of outpatient visits.[146]

Malaria and wars

Throughout history, the contraction of malaria (via natural outbreaks as well as via infliction of the disease as a biological warfare agent) has played a prominent role in the fortunes of government rulers, nation-states, military personnel, and military actions. "Malaria Site: History of Malaria During Wars" addresses the devastating impact of malaria in numerous well-known conflicts, beginning in June 323 B.C. That site's authors note: "Many great warriors succumbed to malaria after returning from the warfront and advance of armies into continents was prevented by malaria. In many conflicts, more troops were killed by malaria than in combat."[147] The Centers for Disease Control ("CDC") traces the history of malaria and its impacts farther back, to 2700 BCE.[148]

In 1910, Nobel Prize in Medicine winner Ronald Ross (himself a malaria survivor) published a book titled The Prevention of Malaria that included the chapter "The Prevention of Malaria in War." The chapter's author, Colonel C. H. Melville, Professor of Hygiene at the Royal Army Medical College in London, addressed the prominent role that malaria has historically played during wars and advised: "A specially selected medical officer should be placed in charge of these operations with executive and disciplinary powers [...]."

Significant financial investments have been made to procure existing anti-malarial agents and to create new ones. During World War I and World War II, the supplies of the natural anti-malarial drugs, cinchona bark and quinine, proved inadequate for military personnel, and substantial funding was funnelled into research and development of other drugs and vaccines. American military organizations conducting such research initiatives include the Navy Medical Research Center, Walter Reed Army Institute of Research, and the U.S. Army Medical Research Institute of Infectious Diseases of the US Armed Forces.[149]

Additionally, initiatives have been founded such as Malaria Control in War Areas (MCWA), established in 1942, and its successor, the Communicable Disease Center (now known as the Centers for Disease Control), established in 1946. According to the CDC, MCWA "was established to control malaria around military training bases in the southern United States and its territories, where malaria was still problematic" and, during these activities, to "train state and local health department officials in malaria control techniques and strategies." The CDC's Malaria Division continued that mission, successfully reducing malaria in the United States, after which the organization expanded its focus to include "prevention, surveillance, and technical support both domestically and internationally."[150]

A sampling of publications addressing malaria and wars includes:

- Cantlie, N. (1950, February). "Health Discipline". U.S. Armed Forces Medical Journal. Volume 1: 232-287.

- Fairley, N. H. (1946, January). "Researches on Paludrine (M. 4888)". In Malaria. Tr. Roy. Soc. Trop. Med. & Hyg. 40 (2), pp. 105-161.

- Hardenbergh, William A. (1955). "Control of Insects". In Medical Department, United States Army. Preventive Medicine in World War II. Volume II. Environmental Hygiene. Washington: U.S. Government Printing Office, pp. 179-232.

- Macculloch, John (1829). "Malaria; an Essay on the Production and Propagation of This Poison and on the Nature and Localities of the Places by Which It Is Produced: With an Enumeration of the Diseases caused by It, and of the Means of Preventing or Diminishing Them, Both at Home and in the Naval and Military Service". Philadelphia: Thomas Kite.

- MacDonald, A. G. (1928). "Prevention of Malaria." In History of the Great War Based on Official Documents. Medical Services, Hygiene of the War, edited by W. G. Macpherson, W. H. Horrocks, and W. W. O. Beveridge. London: His Majesty's Stationery Office, Vol. II, pp. 189-238.

- Medical Department, United States Army, Office Of Medical History (1963). "Communicable Diseases: Malaria". Preventive Medicine in World War II. Volume 5. Washington, DC: Office of the Surgeon General. URL: http://history.amedd.army.mil/booksdocs/wwii/Malaria/chapterI.htm.

- Melville, C. H. (1910). "The Prevention of Malaria in War". In The Prevention of Malaria by Ronald Ross. 2d edition. London: John Murray, pp. 577-599.

- Most, Harry (1963). "Clinical Trials of Antimalarial Drugs". In Medical Department, United States Army. Internal Medicine in World War II. Volume II. Infectious Diseases. Washington: U.S. Government Printing Office.

- Pringle, John (1764). Observations on the Diseases of the Army. 4th edition. London: A. Millar.

- Russell, P. P. (1946, January). "Lessons in Malariology from World War II". American Journal of Tropical Medicine. Volume 26: 5-13.

Malaria in popular culture

- Joseph Conrad, author of the novella Heart of Darkness (published in serial form in 1899 and in book form in 1902), contracted malaria during a four-month steamboat voyage along the Congo River.[151] In the book, the ivory trader Kurtz dies from the disease,[152] and the narrator, Charles Marlow, is weakened by it.

- During the making of the movie The African Queen (1951), most of the crew and the leading lady, Katharine Hepburn, suffered from malaria.[153][154][155]

- In the movie Apocalypse Now (1979), Captain Benjamin L. Willard, a special operations veteran stationed in Southeast Asia during the Vietnam War, is assigned a classified mission to assassinate a rogue officer, Colonel Walter E. Kurtz, who has gone insane and commands a legion of his own Montagnard troops in neutral Cambodia. On reaching Kurtz's compound, Willard narrates: "It smelled like slow death in there, malaria, and nightmares. This was the end of the river, all right."[156]

- In Barbara Kingsolver's novel The Poisonwood Bible (1998), Ruth May, the youngest daughter of an American Christian missionary who brings his family to live in the Belgian Congo of the 1960s, stops taking her quinine tablets and contracts malaria.[157]

See also

- Eradication of infectious diseases

- Globalization and disease

- Medicines for Malaria Venture (MMV)

- Roll Back Malaria (RBM) Partnership

- Save My Soul - Music to Prevent Malaria (album)

- The Global Fund to Fight AIDS, Tuberculosis and Malaria

- Tropical diseases

- J. Gordon Edwards (entomologist and mountaineer)

- Rachel Carson

- Buzz and Bite

References

- ↑ Malaria Facts. Centers for Disease Control and Prevention.

- ↑ Snow RW, Guerra CA, Noor AM, Myint HY, Hay SI (2005). "The global distribution of clinical episodes of Plasmodium falciparum malaria". Nature 434 (7030): 214–7. doi:10.1038/nature03342. PMID 15759000.

- ↑ "Malaria: Disease Impacts and Long-Run Income Differences" (PDF). Institute for the Study of Labor. http://ftp.iza.org/dp2997.pdf. Retrieved 2008-12-10.

- ↑ Fong YL, Cadigan FC, Coatney GR (1971). "A presumptive case of naturally occurring Plasmodium knowlesi malaria in man in Malaysia". Trans. R. Soc. Trop. Med. Hyg. 65 (6): 839–40. doi:10.1016/0035-9203(71)90103-9. PMID 5003320.

- ↑ Singh B, Kim Sung L, Matusop A, et al. (March 2004). "A large focus of naturally acquired Plasmodium knowlesi infections in human beings". Lancet 363 (9414): 1017–24. doi:10.1016/S0140-6736(04)15836-4. PMID 15051281. http://linkinghub.elsevier.com/retrieve/pii/S0140673604158364.

- ↑ Dondorp AM, Day NP (July 2007). "The treatment of severe malaria.". Trans. R. Soc. Trop. Med. Hyg. 101 (7): 633–4. doi:10.1016/j.trstmh.2007.03.011. PMID 17434195. http://linkinghub.elsevier.com/retrieve/pii/S0035-9203(07)00093-4.

- ↑ Wellems TE (October 2002). "Plasmodium chloroquine resistance and the search for a replacement antimalarial drug". Science 298 (5591): 124–6. doi:10.1126/science.1078167. PMID 12364789. http://www.sciencemag.org/cgi/pmidlookup?view=long&pmid=12364789.

- ↑ Kilama W, Ntoumi F (October 2009). "Malaria: a research agenda for the eradication era". Lancet 374 (9700): 1480–2. doi:10.1016/S0140-6736(09)61884-5. PMID 19880004. http://linkinghub.elsevier.com/retrieve/pii/S0140-6736(09)61884-5.

- ↑ WebMD. "Malaria symptoms". Last updated May 16, 2007.

- ↑ Beare NA, Taylor TE, Harding SP, Lewallen S, Molyneux ME (November 1, 2006). "Malarial retinopathy: a newly established diagnostic sign in severe malaria". Am. J. Trop. Med. Hyg. 75 (5): 790–7. PMID 17123967. PMC 2367432. http://www.ajtmh.org/cgi/pmidlookup?view=long&pmid=17123967.

- ↑ Malaria life cycle & pathogenesis. Malaria in Armenia. Accessed October 31, 2006.

- ↑ Idro, R; Otieno G, White S, Kahindi A, Fegan G, Ogutu B, Mithwani S, Maitland K, Neville BG, Newton CR. "Decorticate, decerebrate and opisthotonic posturing and seizures in Kenyan children with cerebral malaria". Malaria Journal 4 (57): 57. doi:10.1186/1475-2875-4-57. PMID 16336645.

- ↑ Boivin MJ (October 2002). "Effects of early cerebral malaria on cognitive ability in Senegalese children". J Dev Behav Pediatr 23 (5): 353–64. PMID 12394524. http://meta.wkhealth.com/pt/pt-core/template-journal/lwwgateway/media/landingpage.htm?issn=0196-206X&volume=23&issue=5&spage=353.

- ↑ Holding PA, Snow RW (2001). "Impact of Plasmodium falciparum malaria on performance and learning: review of the evidence". Am. J. Trop. Med. Hyg. 64 (1-2 Suppl): 68–75. PMID 11425179. http://www.ajtmh.org/cgi/content/abstract/64/1_suppl/68.

- ↑ Maude RJ, Hassan MU, Beare NAV (June 1, 2009). "Severe retinal whitening in an adult with cerebral malaria". Am J Trop Med Hyg 80 (6): 881. PMID 19478242. PMC 2843443. http://www.ajtmh.org/cgi/content/full/80/6/881.

- ↑ Beare NAV, Taylor TE, Harding SP, Lewallen S, Molyneux ME (2006). "Malarial retinopathy: a newly established diagnostic sign in severe malaria". Am J Trop Med Hyg 75 (5): 790–797. PMID 17123967.

- ↑ 17.0 17.1 17.2 Trampuz A, Jereb M, Muzlovic I, Prabhu R (2003). "Clinical review: Severe malaria". Crit Care 7 (4): 315–23. doi:10.1186/cc2183. PMID 12930555.

- ↑ Kain K, Harrington M, Tennyson S, Keystone J (1998). "Imported malaria: prospective analysis of problems in diagnosis and management". Clin Infect Dis 27 (1): 142–9. doi:10.1086/514616. PMID 9675468.

- ↑ Mockenhaupt F, Ehrhardt S, Burkhardt J, Bosomtwe S, Laryea S, Anemana S, Otchwemah R, Cramer J, Dietz E, Gellert S, Bienzle U (2004). "Manifestation and outcome of severe malaria in children in northern Ghana". Am J Trop Med Hyg 71 (2): 167–72. PMID 15306705.

- ↑ Carter JA, Ross AJ, Neville BG, Obiero E, Katana K, Mung'ala-Odera V, Lees JA, Newton CR (2005). "Developmental impairments following severe falciparum malaria in children". Trop Med Int Health 10 (1): 3–10. doi:10.1111/j.1365-3156.2004.01345.x. PMID 15655008.

- ↑ Adak T, Sharma V, Orlov V (1998). "Studies on the Plasmodium vivax relapse pattern in Delhi, India". Am J Trop Med Hyg 59 (1): 175–9. PMID 9684649.

- ↑ Mueller I, Zimmerman PA, Reeder JC (June 2007). "Plasmodium malariae and Plasmodium ovale--the "bashful" malaria parasites". Trends Parasitol. 23 (6): 278–83. doi:10.1016/j.pt.2007.04.009. PMID 17459775.

- ↑ Singh B, Kim Sung L, Matusop A, et al. (March 2004). "A large focus of naturally acquired Plasmodium knowlesi infections in human beings". Lancet 363 (9414): 1017–24. doi:10.1016/S0140-6736(04)15836-4. PMID 15051281.

- ↑ Mendis K, Sina B, Marchesini P, Carter R (2001). "The neglected burden of Plasmodium vivax malaria" (PDF). Am J Trop Med Hyg 64 (1-2 Suppl): 97–106. PMID 11425182. http://www.ajtmh.org/cgi/reprint/64/1_suppl/97.pdf.

- ↑ Escalante A, Ayala F (1994). "Phylogeny of the malarial genus Plasmodium, derived from rRNA gene sequences". Proc Natl Acad Sci USA 91 (24): 11373–7. doi:10.1073/pnas.91.24.11373. PMID 7972067.

- ↑ Garnham, PCC (1966). Malaria parasites and other haemosporidia. Oxford: Blackwell Scientific Publications. ISBN 186983500X.

- ↑ Collins, WE; Barnwell, JW (2009). "Plasmodium knowlesi: Finally being recognized". J Infect Dis 199 (8): 1107–1108. doi:10.1086/597415. PMID 19284287.

- ↑ Köhler, Sabine; Delwiche, CF; Denny, PW; Tilney, LG; Webster, P; Wilson, RJ; Palmer, JD; Roos, DS (March 1997). "A Plastid of Probable Green Algal Origin in Apicomplexan Parasites". Science 275 (5305): 1485–1489. doi:10.1126/science.275.5305.1485. PMID 9045615.

- ↑ Gardner, Malcolm; Tettelin, H; Carucci, DJ; Cummings, LM; Aravind, L; Koonin, EV; Shallom, S; Mason, T et al. (November 1998). "Chromosome 2 Sequence of the Human Malaria Parasite Plasmodium falciparum". Science 282 (5391): 1126–1132. doi:10.1126/science.282.5391.1126. PMID 9804551.

- ↑ Foth, Bernardo; Ralph, SA; Tonkin, CJ; Struck, NS; Fraunholz, M; Roos, DS; Cowman, AF; McFadden, GI (January 2003). "Dissecting Apicoplast Targeting in the Malaria Parasite Plasmodium falciparum". Science 299 (5607): 705–708. doi:10.1126/science.1078599. PMID 12560551. http://www.sciencemag.org/cgi/content/abstract/299/5607/705?maxtoshow=&HITS=10&hits=10&RESULTFORMAT=&fulltext=apicoplast&searchid=1&FIRSTINDEX=0&resourcetype=HWCIT.

- ↑ Talman A, Domarle O, McKenzie F, Ariey F, Robert V (2004). "Gametocytogenesis: the puberty of Plasmodium falciparum". Malar J 3: 24. doi:10.1186/1475-2875-3-24. PMID 15253774.

- ↑ Marcucci C, Madjdpour C, Spahn D (2004). "Allogeneic blood transfusions: benefit, risks and clinical indications in countries with a low or high human development index". Br Med Bull 70: 15–28. doi:10.1093/bmb/ldh023. PMID 15339855.

- ↑ Bledsoe GH (December 2005). "Malaria primer for clinicians in the United States". South. Med. J. 98 (12): 1197–204; quiz 1205, 1230. PMID 16440920. http://www.sma.org/pdfs/objecttypes/smj/91C48D32-BCD4-FF25-565C69314AF7EB48/1196.pdf.

- ↑ Sturm A, Amino R, van de Sand C, Regen T, Retzlaff S, Rennenberg A, Krueger A, Pollok JM, Menard R, Heussler VT (2006). "Manipulation of host hepatocytes by the malaria parasite for delivery into liver sinusoids". Science 313 (5791): 1287–90. doi:10.1126/science.1129720. PMID 16888102.

- ↑ Cogswell FB (January 1992). "The hypnozoite and relapse in primate malaria". Clin. Microbiol. Rev. 5 (1): 26–35. PMID 1735093. PMC 358221. http://cmr.asm.org/cgi/pmidlookup?view=long&pmid=1735093.

- ↑ 36.0 36.1 Chen Q, Schlichtherle M, Wahlgren M (July 2000). "Molecular aspects of severe malaria". Clin. Microbiol. Rev. 13 (3): 439–50. doi:10.1128/CMR.13.3.439-450.2000. PMID 10885986. PMC 88942. http://cmr.asm.org/cgi/pmidlookup?view=long&pmid=10885986.

- ↑ Adams S, Brown H, Turner G (2002). "Breaking down the blood-brain barrier: signaling a path to cerebral malaria?". Trends Parasitol 18 (8): 360–6. doi:10.1016/S1471-4922(02)02353-X. PMID 12377286.

- ↑ Lindsay S, Ansell J, Selman C, Cox V, Hamilton K, Walraven G (2000). "Effect of pregnancy on exposure to malaria mosquitoes". Lancet 355 (9219): 1972. doi:10.1016/S0140-6736(00)02334-5. PMID 10859048.

- ↑ van Geertruyden J, Thomas F, Erhart A, D'Alessandro U (August 1, 2004). "The contribution of malaria in pregnancy to perinatal mortality". Am J Trop Med Hyg 71 (2 Suppl): 35–40. PMID 15331817. http://www.ajtmh.org/cgi/content/full/71/2_suppl/35.

- ↑ Rodriguez-Morales AJ, Sanchez E, Vargas M, Piccolo C, Colina R, Arria M, Franco-Paredes C (2006). "Pregnancy outcomes associated with Plasmodium vivax malaria in northeastern Venezuela". Am J Trop Med Hyg 74 (5): 755–757. PMID 16687675.

- ↑ 41.0 41.1 Sutherland, CJ; Hallett, R (2009). "Detecting malaria parasites outside the blood". J Infect Dis 199 (11): 1561–1563. doi:10.1086/598857. PMID 19432543.

- ↑ Beare NA, Taylor TE, Harding SP, Lewallen S, Molyneux ME (November 2006). "Malarial retinopathy: a newly established diagnostic sign in severe malaria". Am. J. Trop. Med. Hyg. 75 (5): 790–7. PMID 17123967. PMC 2367432. http://www.ajtmh.org/cgi/pmidlookup?view=long&pmid=17123967.

- ↑ Redd S, Kazembe P, Luby S, Nwanyanwu O, Hightower A, Ziba C, Wirima J, Chitsulo L, Franco C, Olivar M (1996). "Clinical algorithm for treatment of Plasmodium falciparum malaria in children". Lancet 347 (8996): 80. doi:10.1016/S0140-6736(96)90404-3. PMID 8551881.

- ↑ Warhurst DC, Williams JE (1996). "Laboratory diagnosis of malaria". J Clin Pathol 49 (7): 533–38. doi:10.1136/jcp.49.7.533. PMID 8813948.

- ↑ McCutchan, Thomas F.; Robert C. Piper, and Michael T. Makler (November 2008). "Use of Malaria Rapid Diagnostic Test to Identify Plasmodium knowlesi Infection". Emerging Infectious Diseases (Centers for Disease Control) 14 (11): 1750–1752. doi:10.3201/eid1411.080840.

- ↑ Pattanasin S, Proux S, Chompasuk D, Luwiradaj K, Jacquier P, Looareesuwan S, Nosten F (2003). "Evaluation of a new Plasmodium lactate dehydrogenase assay (OptiMAL-IT) for the detection of malaria". Trans R Soc Trop Med Hyg 97 (6): 672–4. doi:10.1016/S0035-9203(03)80100-1. PMID 16117960.

- ↑ Ling IT, Cooksley S, Bates PA, Hempelmann E, Wilson RJM (1986). "Antibodies to the glutamate dehydrogenase of Plasmodium falciparum". Parasitology 92: 313–24. doi:10.1017/S0031182000064088. PMID 3086819.

- ↑ Mens, PF; Schoone, GJ; Kager, PA; Schallig, HD (2006). "Detection and identification of human Plasmodium species with real-time quantitative nucleic acid sequence-based amplification". Malaria Journal 5 (80): 80. doi:10.1186/1475-2875-5-80. PMID 17018138.

- ↑ Redd S, Kazembe P, Luby S, Nwanyanwu O, Hightower A, Ziba C, Wirima J, Chitsulo L, Franco C, Olivar M (1996). "Clinical algorithm for treatment of Plasmodium falciparum malaria in children". Lancet 347 (8996): 80. doi:10.1016/S0140-6736(96)90404-3. PMID 8551881.

- ↑ Medical News Today, 2007

- ↑ Barat L (2006). "Four malaria success stories: how malaria burden was successfully reduced in Brazil, Eritrea, India, and Vietnam". Am J Trop Med Hyg 74 (1): 12–6. PMID 16407339.

- ↑ 52.0 52.1 Centers for Disease Control (2004). "Eradication of Malaria in the United States (1947-1951)". http://www.cdc.gov/malaria/history/eradication_us.htm.

- ↑ Killeen G, Fillinger U, Kiche I, Gouagna L, Knols B (2002). "Eradication of Anopheles gambiae from Brazil: lessons for malaria control in Africa?". Lancet Infect Dis 2 (10): e192. doi:10.1016/S1473-3099(02)00397-3. PMID 12383612.

- ↑ Imperial College, London, "Scientists create first transgenic malaria mosquito", 2000-06-22.

- ↑ Ito J, Ghosh A, Moreira LA, Wimmer EA, Jacobs-Lorena M (2002). "Transgenic anopheline mosquitoes impaired in transmission of a malaria parasite". Nature 417 (6887): 452–5. doi:10.1038/417452a. PMID 12024215.

- ↑ Knols et al., 2007

- ↑ Robert Guth. "Rocket Scientists Shoot Down Mosquitoes With Lasers". WSJ.com. http://online.wsj.com/article/SB123680870885500701.html. Retrieved 8 July 2009.

- ↑ Jacquerioz FA, Croft AM (2009). "Drugs for preventing malaria in travellers". Cochrane Database Syst Rev (4): CD006491. doi:10.1002/14651858.CD006491.pub2. PMID 19821371.

- ↑ Roestenberg M, McCall M, Hopman J, et al. (July 2009). "Protection against a malaria challenge by sporozoite inoculation". N. Engl. J. Med. 361 (5): 468–77. doi:10.1056/NEJMoa0805832. PMID 19641203.