RGB color model

The RGB color model is an additive color model in which red, green, and blue light are added together in various ways to reproduce a broad array of colors. The name of the model comes from the initials of the three additive primary colors, red, green, and blue.

The main purpose of the RGB color model is for the sensing, representation, and display of images in electronic systems, such as televisions and computers, though it has also been used in conventional photography. Before the electronic age, the RGB color model already had a solid theory behind it, based in human perception of colors.

RGB is a device-dependent color space: different devices detect or reproduce a given RGB value differently, since the color elements (such as phosphors or dyes) and their response to the individual R, G, and B levels vary from manufacturer to manufacturer, or even in the same device over time. Thus an RGB value does not define the same color across devices without some kind of color management.

Typical RGB input devices are color TV and video cameras, image scanners, and digital cameras. Typical RGB output devices are TV sets of various technologies (CRT, LCD, plasma, etc.), computer and mobile phone displays, video projectors, multicolor LED displays, and large screens such as the JumboTron. Color printers, on the other hand, are usually not RGB devices but subtractive color devices (typically using the CMYK color model).

This article discusses concepts common to all the different color spaces that use the RGB color model, which are used in one implementation or another in color image-producing technology.

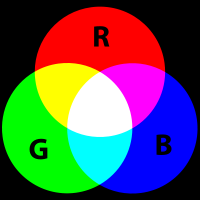

Additive primary colors

To form a color with RGB, three colored light beams (one red, one green, and one blue) must be superimposed (for example by emission from a black screen, or by reflection from a white screen). Each of the three beams is called a component of that color, and each of them can have an arbitrary intensity, from fully off to fully on, in the mixture.

The RGB color model is additive in the sense that the three light beams are added together, and their light spectra add, wavelength for wavelength, to make the final color's spectrum.[1][2]

Zero intensity for each component gives the darkest color (no light, considered black), and full intensity of each gives a white; the quality of this white depends on the nature of the primary light sources, but if they are properly balanced, the result is a neutral white matching the system's white point. When the intensities for all the components are the same, the result is a shade of gray, darker or lighter depending on the intensity. When the intensities are different, the result is a colorized hue, more or less saturated depending on the difference between the strongest and weakest of the intensities of the primary colors employed.

When one of the components has the strongest intensity, the color is a hue near this primary color (reddish, greenish, or bluish), and when two components have the same strongest intensity, then the color is a hue of a secondary color (a shade of cyan, magenta or yellow). A secondary color is formed by the sum of two primary colors of equal intensity: cyan is green+blue, magenta is red+blue, and yellow is red+green. Every secondary color is the complement of one primary color; when a primary and its complementary secondary color are added together, the result is white: cyan complements red, magenta complements green, and yellow complements blue.
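The mixing rules above can be sketched in a few lines of Python. This is only an illustration: it assumes each component is an integer from 0 to 255 that behaves like a linear light intensity, and the helper names `add_light` and `complement` are made up for this example.

```python
def add_light(*colors):
    """Add RGB triplets component by component, clipping at full intensity (255)."""
    return tuple(min(255, sum(c[i] for c in colors)) for i in range(3))

def complement(color):
    """Return the triplet that adds with `color` to give white."""
    return tuple(255 - v for v in color)

RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)

cyan = add_light(GREEN, BLUE)     # green + blue
magenta = add_light(RED, BLUE)    # red + blue
yellow = add_light(RED, GREEN)    # red + green
white = add_light(RED, cyan)      # a primary plus its complementary secondary
```

Under these assumptions, `complement(RED)` equals `cyan`, matching the statement that cyan complements red.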

The RGB color model itself does not define what is meant by red, green, and blue colorimetrically, and so the results of mixing them are not specified as absolute, but relative to the primary colors. When the exact chromaticities of the red, green, and blue primaries are defined, the color model then becomes an absolute color space, such as sRGB or Adobe RGB; see RGB color spaces for more details.

Physical principles for the choice of red, green, and blue

The choice of primary colors is related to the physiology of the human eye; good primaries are stimuli that maximize the difference between the responses of the cone cells of the human retina to light of different wavelengths, and that thereby make a large color triangle.[3]

The normal three kinds of light-sensitive photoreceptor cells in the human eye (cone cells) respond most to yellow (long wavelength or L), green (medium or M), and violet (short or S) light (peak wavelengths near 570 nm, 540 nm and 440 nm, respectively[3]). The difference in the signals received from the three kinds allows the brain to differentiate a wide gamut of different colors, while being most sensitive (overall) to yellowish-green light and to differences between hues in the green-to-orange region.

As an example, suppose that light in the orange range of wavelengths (approximately 577 nm to 597 nm) enters the eye and strikes the retina. Light of these wavelengths would activate both the medium and long wavelength cones of the retina, but not equally — the long-wavelength cells will respond more. The difference in the response can be detected by the brain and associated with the concept that the light is orange. In this sense, the orange appearance of objects is simply the result of light from the object entering our eye and stimulating the relevant kinds of cones simultaneously but to different degrees.

Use of the three primary colors is not sufficient to reproduce all colors; only colors within the color triangle defined by the chromaticities of the primaries can be reproduced by additive mixing of non-negative amounts of those colors of light.[3]
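The triangle condition can be checked numerically. The sketch below tests whether a chromaticity point lies inside the triangle spanned by three primaries by computing barycentric weights and requiring all of them to be non-negative. The sRGB primary chromaticities and the D65 white point are supplied here only as sample data (they are not given in this article), and the function names are hypothetical.

```python
def barycentric(p, a, b, c):
    """Barycentric weights of point p with respect to triangle (a, b, c) in the xy plane."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb

def in_gamut(p, primaries):
    """True if chromaticity p lies inside (or on the edge of) the primaries' triangle."""
    return all(w >= 0.0 for w in barycentric(p, *primaries))

# Sample data (assumption): CIE xy chromaticities of the sRGB primaries and D65 white.
SRGB_PRIMARIES = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))
D65_WHITE = (0.3127, 0.3290)

inside = in_gamut(D65_WHITE, SRGB_PRIMARIES)      # white point lies inside the triangle
outside = in_gamut((0.08, 0.83), SRGB_PRIMARIES)  # a very saturated green lies outside
```

A negative weight means the point could only be matched with a negative amount of one primary, which is not physically possible with additive light.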

History of RGB color model theory and usage

The RGB color model is based on the Young–Helmholtz theory of trichromatic color vision, developed by Thomas Young and Hermann Helmholtz, in the early to mid nineteenth century, and on James Clerk Maxwell's color triangle that elaborated that theory (circa 1860).

Photography

First experiments with RGB in early color photography were made in 1861 by Maxwell himself, and involved the process of three color-filtered separate takes.[4] To reproduce the color photograph, three matching projections over a screen in a dark room were necessary.

The additive RGB model and variants such as orange–green–violet were also used in the Autochrome Lumière color plates and other screen-plate technologies such as the Joly color screen and the Paget process in the early twentieth century. Color photography by taking three separate plates was used by other pioneers, such as the Russian Sergey Prokudin-Gorsky in the period 1909 through 1915.[5] Such methods lasted until about 1960, using the expensive and extremely complex tri-color carbro Autotype process.[6]

When employed, the reproduction of prints from three-plate photos was done by dyes or pigments using the complementary CMY model, by simply using the negative plates of the filtered takes: reverse red gives the cyan plate, and so on.

Television

In the pre-electronic era, patents on mechanically scanned color systems date back to 1889 in Russia. The color TV pioneer John Logie Baird demonstrated the world's first RGB color transmission in 1928, and also the world's first color broadcast in 1938, in London. In his experiments, scanning and displaying were done by mechanical means, using spinning colored wheels.[7][8]

The Columbia Broadcasting System (CBS) began experimenting with an RGB field-sequential color system in 1940. Images were electronically scanned, but the system still used a moving part: a transparent RGB color wheel rotating at above 1,200 rpm, synchronized in front of both the monochromatic camera and the cathode-ray tube (CRT) receiver counterpart.[9][10][11]

The modern RGB shadow mask technology for color CRT displays was patented by Werner Flechsig in Germany in 1938.[12]

Personal computers

Early personal computers of the late 1970s and early 1980s, such as those from Apple, Atari and Commodore, did not use RGB as their main method to manage colors, but rather composite video. IBM introduced a 16-color scheme (one bit each for RGB and Intensity) with the Color Graphics Adapter (CGA) for its first IBM PC (1981), later improved with the Enhanced Graphics Adapter (EGA) in 1984. The first manufacturer of a truecolor graphic card for PCs (the TARGA) was Truevision in 1987, but it was not until the arrival of the Video Graphics Array (VGA) in 1987 that RGB became popular, mainly due to the analog signals in the connection between the adapter and the monitor, which allowed a very wide range of RGB colors.

RGB devices

RGB and displays

One common application of the RGB color model is the display of colors on a cathode ray tube (CRT), liquid crystal display (LCD), plasma display, or LED display such as a television, a computer monitor, or a large-scale screen. Each pixel on the screen is built by driving three small and very close but still separated RGB light sources. At common viewing distance, the separate sources are indistinguishable, which tricks the eye into seeing a given solid color. All the pixels together, arranged on the rectangular screen surface, form the color image.

During digital image processing each pixel can be represented in the computer memory or interface hardware (for example, a graphics card) as binary values for the red, green, and blue color components. When properly managed, these values are converted into intensities or voltages via gamma correction to correct the inherent nonlinearity of some devices, such that the intended intensities are reproduced on the display.

Video electronics

RGB is also the term referring to a type of component video signal used in the video electronics industry. It consists of three signals—red, green, and blue—carried on three separate cables/pins. Extra cables are sometimes needed to carry synchronizing signals. RGB signal formats are often based on modified versions of the RS-170 and RS-343 standards for monochrome video. This type of video signal is widely used in Europe since it is the best quality signal that can be carried on the standard SCART connector. Outside Europe, RGB is not very popular as a video signal format; S-Video takes that spot in most non-European regions. However, almost all computer monitors around the world use RGB.

Video framebuffer

A framebuffer is a digital device for computers which stores the digital image to be displayed on the monitor in the so-called video memory (an array of Video RAM or similar chips). Driven by software, the CPU or other specialized chips write the appropriate bytes into the video memory to form the image, which an electronic video generator sends to the monitor. Modern systems encode pixel color values by devoting a group of bits to each of the separate RGB components. RGB information can be carried either by the pixel bits themselves or in a separate Color Look-Up Table (CLUT) if indexed color graphic modes are used.

By using an appropriate combination of red, green, and blue intensities, many colors can be displayed. Current typical display adapters use up to 24 bits of information for each pixel: 8 bits per component multiplied by three components (see the Digital representations section below). With this system, 16,777,216 (256³ or 2²⁴) discrete combinations of R, G, and B values are allowed, providing millions of different (though not necessarily distinguishable) hue, saturation, and lightness shades.

Nonlinearity

In classic cathode ray tube (CRT) devices, the brightness of a given point on the phosphorescent screen due to the impact of accelerated electrons is not proportional to the voltage applied to the electrons in their RGB electron guns, but to an expansive function of that voltage. The amount of this deviation is known as its gamma value (γ), the exponent of a power-law function that closely describes this behavior. A linear response is given by a gamma value of 1.0, but actual CRT nonlinearities have a gamma value of around 2.0 to 2.5.

Similarly, the intensity of the output on TV and computer display devices is not directly proportional to the R, G, and B applied electric signals (or the file data values which drive them through digital-to-analog converters, DACs). On a typical standard 2.2-gamma CRT display, an input intensity RGB value of (0.5, 0.5, 0.5) outputs only about 22% of the brightness of the full (1.0, 1.0, 1.0), instead of 50%.[13] To obtain the correct response, a gamma correction is used in encoding the image data, and possibly further corrections as part of the color calibration process of the device. Gamma affects black-and-white TV as well as color. In standard color TV, signals are already broadcast in a gamma-compensated fashion by TV stations.
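The 22% figure follows directly from the power law. The following sketch models the display as a pure power function with gamma 2.2; this is a simplification (real devices and standards may add offsets or linear segments), and the function names are illustrative.

```python
def display_output(signal, gamma=2.2):
    """Relative light output of an idealized display for a signal in the range 0.0-1.0."""
    return signal ** gamma

def gamma_encode(intensity, gamma=2.2):
    """Pre-compensate an intended intensity so the display reproduces it."""
    return intensity ** (1.0 / gamma)

uncorrected = display_output(0.5)               # about 0.218, i.e. roughly 22%
corrected = display_output(gamma_encode(0.5))   # back to the intended 0.5
```

Encoding with the inverse exponent before display is the essence of gamma correction: the two power functions cancel, so the intended intensity is reproduced.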

Display technologies other than CRT (such as LCD, plasma, LED, etc.) may behave nonlinearly in different ways. When they are intended to display standard TV and video shows, they are built in such a way that their gamma behavior resembles that of an older CRT TV monitor. In digital image processing, gamma correction can be applied either by the hardware or by the software packages used.

Other input/output RGB devices may also have nonlinear responses, depending on the technology employed. In any case, nonlinearity (whether gamma-related or not) is not part of the RGB color model in itself, although different standards that use RGB can also specify the gamma value and/or other nonlinear parameters involved.

RGB and cameras

In color television and video cameras manufactured before the 1990s, the incoming light was separated by prisms and filters into the three RGB primary colors feeding each color into a separate video camera tube (or pickup tube). These tubes are a type of cathode ray tube, not to be confused with that of CRT displays.

With the arrival of commercially viable charge-coupled device (CCD) technology in the 1980s, the pickup tubes were first replaced with this kind of sensor. Later, higher-scale integration electronics were applied (mainly by Sony), simplifying and even removing the intermediate optics, which reduced the size of domestic video cameras until they became compact, fully featured camcorders. Current webcams and mobile phones with cameras are the most miniaturized commercial forms of such technology.

Photographic digital cameras that use a CMOS or CCD image sensor often operate with some variation of the RGB model. In a Bayer filter arrangement, green is given twice as many detectors as red and blue (ratio 1:2:1) in order to achieve higher luminance resolution than chrominance resolution. The sensor has a grid of red, green, and blue detectors arranged so that the first row is RGRGRGRG, the next is GBGBGBGB, and that sequence is repeated in subsequent rows. For every channel, missing pixels are obtained by interpolation in the demosaicing process to build up the complete image. Other processing is also applied to map the camera RGB measurements into a standard RGB color space such as sRGB.
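The filter layout described above can be generated programmatically. This minimal sketch only reproduces the RGRG / GBGB arrangement and counts detectors per color; it does not attempt demosaicing, and the function names are illustrative.

```python
def bayer_color(row, col):
    """Filter color at (row, col) for the RGRG / GBGB layout described above."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

def bayer_rows(height, width):
    """Return the mosaic as a list of strings, one per sensor row."""
    return ["".join(bayer_color(r, c) for c in range(width)) for r in range(height)]

pattern = bayer_rows(4, 8)
# Count how many detectors each color gets in this 4x8 patch.
counts = {ch: sum(row.count(ch) for row in pattern) for ch in "RGB"}
```

For the 4×8 patch, `counts` gives 8 red, 16 green, and 8 blue detectors, which is the 1:2:1 ratio.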

RGB and scanners

In computing, an image scanner is a device that optically scans images (printed text, handwriting, or an object) and converts them to a digital image which is transferred to a computer. Among other formats, flat, drum, and film scanners exist, and most of them support RGB color. They can be considered the successors of early telephotography input devices, which were able to send consecutive scan lines as analog amplitude modulation signals through standard telephone lines to appropriate receivers; such systems were in use by the press from the 1920s to the mid-1990s. Color telephotographs were sent as three separate RGB-filtered images, one after another.

Currently available scanners typically use charge-coupled device (CCD) or contact image sensor (CIS) as the image sensor, whereas older drum scanners use a photomultiplier tube as the image sensor. Early color film scanners used a halogen lamp and a three-color filter wheel, so three exposures were needed to scan a single color image. Due to heating problems, the worst of them being the potential destruction of the scanned film, this technology was later replaced by non-heating light sources such as color LEDs.

Numeric representations

A color in the RGB color model is described by indicating how much of each of the red, green, and blue is included. The color is expressed as an RGB triplet (r,g,b), each component of which can vary from zero to a defined maximum value. If all the components are at zero the result is black; if all are at maximum, the result is the brightest representable white.

These ranges may be quantified in several different ways:

- From 0 to 1, with any fractional value in between. This representation is used in theoretical analyses, and in systems that use floating-point representations.

- Each color component value can also be written as a percentage, from 0% to 100%.

- In computing, the component values are often stored as integer numbers in the range 0 to 255, the range that a single 8-bit byte can offer (by encoding 256 distinct values).

- High-end digital image equipment can deal with the integer range 0 to 65,535 for each primary color, by employing 16-bit words instead of 8-bit bytes.

For example, full-intensity red is written in the different RGB notations as:

| Notation | RGB triplet |
|---|---|
| Arithmetic | (1.0, 0.0, 0.0) |
| Percentage | (100%, 0%, 0%) |
| Digital 8-bit per channel | (255, 0, 0) |
| Digital 16-bit per channel | (65535, 0, 0) |
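The notations listed above differ only in scale, so converting between them is a matter of multiplying and rounding. The sketch below starts from the arithmetic (0 to 1) form; the function name and the dictionary keys are chosen for this example only, and simple rounding to the nearest integer is an assumption.

```python
def to_notations(r, g, b):
    """Express an arithmetic (0.0-1.0) RGB triplet in the other notations."""
    unit = (r, g, b)
    return {
        "arithmetic": unit,
        "percentage": tuple(f"{round(v * 100)}%" for v in unit),
        "8-bit": tuple(round(v * 255) for v in unit),
        "16-bit": tuple(round(v * 65535) for v in unit),
    }

red = to_notations(1.0, 0.0, 0.0)
```

Here `red["8-bit"]` is `(255, 0, 0)` and `red["16-bit"]` is `(65535, 0, 0)`, the same values given for full-intensity red.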

In many environments, the component values within the ranges are not managed as linear (that is, the numbers are nonlinearly related to the intensities that they represent), as in digital cameras and TV broadcasting and receiving due to gamma correction, for example.[14] Linear and nonlinear transformations are often dealt with via digital image processing. Representations with only 8 bits per component are considered sufficient if gamma encoding is used,[15] but sometimes even 8-bit linear is used.[16]

Geometric representation

- See also RGB color space

Since colors are usually defined by three components, not only in the RGB model but also in other color models such as CIELAB and Y'UV, among others, a three-dimensional volume can be described by treating the component values as ordinary Cartesian coordinates in a Euclidean space. For the RGB model, this is represented by a cube using non-negative values within a 0–1 range, assigning black to the origin at the vertex (0, 0, 0), with increasing intensity values running along the three axes up to white at the vertex (1, 1, 1), diagonally opposite black.

An RGB triplet (r,g,b) represents the three-dimensional coordinate of the point of the given color within the cube or its faces or along its edges. This approach allows computations of the color similarity of two given RGB colors by simply calculating the distance between them: the shorter the distance, the higher the similarity. Out-of-gamut computations can be performed this way, too.
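As an illustration of the distance idea, the sketch below computes the Euclidean distance between two points of the RGB cube and uses it to pick the closest of several candidate colors. The function names are illustrative; note also that plain distance in RGB coordinates is only a rough proxy for perceived similarity.

```python
import math

def rgb_distance(c1, c2):
    """Euclidean distance between two RGB triplets treated as points in the cube."""
    return math.dist(c1, c2)

def nearest(color, candidates):
    """Return the candidate with the smallest distance to `color`."""
    return min(candidates, key=lambda c: rgb_distance(color, c))

diagonal = rgb_distance((0, 0, 0), (1, 1, 1))   # black to white: sqrt(3)
closest = nearest((0.9, 0.1, 0.1), [(1, 0, 0), (0, 1, 0), (0, 0, 1)])
```

The reddish sample is closest to the red vertex `(1, 0, 0)`, as expected.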

Digital representations


The RGB color model is the most common way to encode color in computing, and several different binary digital representations are in use. The main characteristic of all of them is the quantization of the possible values per component (technically a sample) by using only integer numbers within some range, usually from 0 to some power of two minus one (2ⁿ − 1), to fit them into some bit groupings.

As usual in computing, the values can be represented in either decimal or hexadecimal notation, as is the case in the HTML colors text-encoding convention.
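A short sketch of converting between decimal triplets and the six-digit hexadecimal form used for HTML colors, assuming 8 bits per component. The function names are illustrative.

```python
def rgb_to_hex(r, g, b):
    """Format an 8-bit RGB triplet as a #RRGGBB hexadecimal string."""
    return f"#{r:02X}{g:02X}{b:02X}"

def hex_to_rgb(code):
    """Parse a #RRGGBB string back into a tuple of three integers."""
    digits = code.lstrip("#")
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))

red_hex = rgb_to_hex(255, 0, 0)
triplet = hex_to_rgb("#336699")
```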

The 24-bit RGB representation

RGB values encoded in 24 bits per pixel (bpp) are specified using three 8-bit unsigned integers (0 through 255) representing the intensities of red, green, and blue. This representation is the current mainstream standard representation for the so-called truecolor and common color interchange in image file formats such as JPEG or TIFF. It allows more than 16 million different combinations (hence the term millions of colors some systems use for this mode), many of them indistinguishable to the human eye.

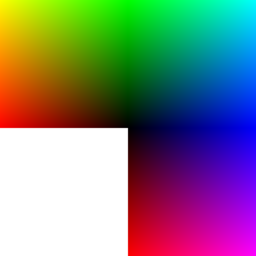

The following image shows the three "fully saturated" faces of a 24-bpp RGB cube, unfolded into a plane:

| yellow (255,255,0) | green (0,255,0) | cyan (0,255,255) |
|---|---|---|
| red (255,0,0) | | blue (0,0,255) |
| red (255,0,0) | magenta (255,0,255) | |

The above definition uses a convention known as full-range RGB. Color values are also often scaled from and to the range 0.0 through 1.0, especially when they are mapped from or to other color models and/or encodings.

The 256 levels of a primary usually do not represent equally spaced intensities, due to gamma correction.

This representation cannot offer the exact mid point 127.5, nor other non-integer values, as bytes do not hold fractional values, so these need to be rounded or truncated to a nearby integer value.[17] For example, Microsoft considers the color "medium gray"[18] to be the (128,128,128) RGB triplet in its default palette. The effect of such quantization (for every value, not only the midpoint) is usually not noticeable, but may build up in repeated editing operations or colorspace conversions.[19]

Typically, RGB for digital video is not full range. Instead, video RGB uses a convention with scaling and offsets such that (16, 16, 16) is black, (235, 235, 235) is white, etc. For example, these scalings and offsets are used for the digital RGB definition in the CCIR 601 standard.
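A minimal sketch of the scaling and offset involved, assuming a simple linear mapping with rounding between the full range (0 to 255) and the video range (16 to 235). The exact quantization rules of a given standard are not reproduced here, and the function names are illustrative.

```python
def full_to_video(value):
    """Map a full-range 8-bit component (0-255) to the video range (16-235)."""
    return 16 + round(value * 219 / 255)

def video_to_full(value):
    """Map a video-range component back to full range, clipping out-of-range codes."""
    scaled = round((value - 16) * 255 / 219)
    return max(0, min(255, scaled))

video_black = full_to_video(0)     # 16
video_white = full_to_video(255)   # 235
```

Codes below 16 or above 235 can occur in video signals; in this sketch they are simply clipped when converting back to full range.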

Beyond the 24-bit RGB

32-bit graphic mode

The so-called 32 bpp display graphic mode is identical in precision to the 24 bpp mode; there are still only eight bits per component, and the eight extra bits are often not used at all. The reason for the existence of the 32 bpp mode is the higher speed at which most modern 32-bit (and better) hardware can access data that is aligned to byte addresses evenly divisible by a power of two, compared to data not so aligned.

32-bit graphic mode with simple video overlay

Some graphics hardware allows the unused byte in the 32-bit mode to be used as an 8-bit paletted overlay. A certain palette entry (often 0 or 255) is designated as being transparent, i.e., where the overlay has this value the truecolor image is shown. Otherwise the overlay value is looked up in the palette and used. This allows GUI elements (such as menus or the mouse cursor) or information to be overlaid on a truecolor image without modifying it. When the overlay needs to be removed, it is simply cleared to the transparent value and the truecolor image is displayed again. This feature was often found on graphics hardware for Unix workstations in the 1990s and later on some PC graphics cards (most notably those by Matrox). However, PC graphics cards (and the systems they are used in) now have plentiful memory to use as a backing store, and this feature has mostly disappeared.

32-bit RGBA (RGB plus alpha channel)

With the need for compositing images came a variant of 24-bit RGB which includes an extra 8-bit channel for transparency, thus resulting also in a 32-bit format. The transparency channel is commonly known as the alpha channel, so the format is named RGBA. Note again that since it does not change anything in the RGB model, RGBA is not a distinct color model, it is only a representation that integrates transparency information along with the color information. This extra channel allows for alpha blending of the image over another, and is a feature of the PNG format.
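The sketch below shows one way four 8-bit channels can share a 32-bit word, and a basic alpha blend of a pixel over an opaque background. The byte order (red in the most significant byte) and the use of non-premultiplied alpha are assumptions made for the example; actual formats and APIs differ. The blend also operates directly on stored values and ignores gamma.

```python
def pack_rgba(r, g, b, a):
    """Pack four 8-bit channels into one 32-bit integer (assumed order: R, G, B, A)."""
    return (r << 24) | (g << 16) | (b << 8) | a

def unpack_rgba(pixel):
    """Split a packed 32-bit pixel back into its (r, g, b, a) channels."""
    return (pixel >> 24) & 0xFF, (pixel >> 16) & 0xFF, (pixel >> 8) & 0xFF, pixel & 0xFF

def blend_over(src_rgba, dst_rgb):
    """Blend a non-premultiplied RGBA pixel over an opaque RGB background."""
    r, g, b, a = src_rgba
    alpha = a / 255
    return tuple(round(s * alpha + d * (1 - alpha)) for s, d in zip((r, g, b), dst_rgb))

opaque_red = pack_rgba(255, 0, 0, 255)
half_red_on_blue = blend_over((255, 0, 0, 128), (0, 0, 255))
```

An alpha of 255 leaves only the source color visible, and an alpha of 0 leaves only the background.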

48-bit RGB

High-precision color management typically uses up to 16 bits per component, resulting in 48 bpp. This makes it possible to represent 65,536 tones of each color component instead of 256. It is primarily used in professional image editing software, such as Adobe Photoshop, to maintain greater precision when a sequence of more than one image filtering algorithm is applied to the image. With only 8 bits per component, rounding errors tend to accumulate with each filtering algorithm that is employed, distorting the end result. This mode is sometimes also called 16-bit mode because of the precision per component; it should not be confused with 16-bit Highcolor, which is a more limited representation (see below).

Limited representations below 24-bit RGB

16-bit RGB (Highcolor)

The 16-bit mode known as Highcolor uses either 5 bits per color, called 555 mode (32,768 total colors), or the same with an extra bit for green (because the green component contributes most to the brightness of a color in the human eye), called 565 mode (65,536 colors). (In general, a good RGB representation needs 1 bit more for red than blue and 1 more bit for green,[20] but this cannot be fully achieved within a 16-bit word.) This was the high end for some display adapters for personal computers during the 1990s, but today it is considered somewhat obsolete in favor of the 24 or 32 bpp graphic modes. It is still in use in many devices with color screens, such as cell phones, digital cameras, personal digital assistants (PDAs), and portable video game consoles.
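A sketch of the 565 layout: an 8-bit-per-component color is reduced by dropping low-order bits and packed into one 16-bit word, with red in the top 5 bits. The bit order and the bit-replication step used when expanding back to 8 bits are common conventions assumed here, not something specified in this article.

```python
def pack_565(r, g, b):
    """Pack 8-bit components into a 16-bit word: 5 bits red, 6 green, 5 blue."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def unpack_565(pixel):
    """Expand a 565 word back to 8-bit components."""
    r5, g6, b5 = (pixel >> 11) & 0x1F, (pixel >> 5) & 0x3F, pixel & 0x1F
    # Replicate the high bits into the low bits so full scale maps back to 255.
    return (r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2)

white_565 = pack_565(255, 255, 255)   # 0xFFFF
red_565 = pack_565(255, 0, 0)         # 0xF800
```

Because low-order bits are discarded, a round trip through `pack_565` and `unpack_565` generally returns a nearby color rather than the exact original.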

RGB arrangements for 8-bit indexed color

Display adapters and image file formats using indexed-color techniques limit the simultaneously available colors per image up to 256, 8 bits per pixel. The selected colors are arranged into a palette, and the actual image pixels values do not represent RGB triplets, but mere indices into the palette, which in turn stores the 24-bit RGB triplets for every color in the image, so colors are addressed indirectly.
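The indirection can be shown with a tiny example: the image stores only small indices, and the palette supplies the actual RGB triplets. The four-entry palette and the pixel data are made up for illustration.

```python
# Hypothetical 4-entry palette; a real 8-bit palette would hold up to 256 entries.
sample_palette = [(0, 0, 0), (255, 0, 0), (0, 255, 0), (255, 255, 255)]

# The image itself holds palette indices, not colors.
indexed_pixels = [
    [0, 1, 1, 0],
    [2, 3, 3, 2],
]

def decode_indexed(pixels, palette):
    """Replace every palette index with the RGB triplet it refers to."""
    return [[palette[i] for i in row] for row in pixels]

truecolor = decode_indexed(indexed_pixels, sample_palette)
```

Changing one palette entry changes the color of every pixel that refers to it, without touching the pixel data.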

Every image can have its own color selection (or adaptive palette) when indexed color is employed. But this scheme has the inconvenience that two or more indexed-color images with incompatible palettes cannot be properly displayed simultaneously where the 256-color limitation is imposed by the system's hardware.

One solution is to use an intermediate master palette comprising a full RGB selection with a limited number of levels for the red, green, and blue components, so that the whole selection fits within 256 color entries.

Usual limited RGB repertoires include 6×6×6 levels with 216 combinations (the Web colors case), 6×7×6 levels with 252 combinations, 6×8×5 levels with 240 combinations and 8×8×4 levels with the full 256 combinations (see RGB arrangements for samples).
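Such master palettes can be generated by taking evenly spaced levels per component and forming every combination. The sketch below assumes evenly spaced levels between 0 and 255 and a red-major entry order; both choices, and the function names, are illustrative assumptions.

```python
from itertools import product

def uniform_palette(r_levels, g_levels, b_levels):
    """Build a palette with the given number of evenly spaced levels per component."""
    def ramp(n):
        return [round(i * 255 / (n - 1)) for i in range(n)]
    return list(product(ramp(r_levels), ramp(g_levels), ramp(b_levels)))

def nearest_index(color, r_levels, g_levels, b_levels):
    """Index of the palette entry approximately closest to an 8-bit (r, g, b) color."""
    levels = (r_levels, g_levels, b_levels)
    ri, gi, bi = (round(v * (n - 1) / 255) for v, n in zip(color, levels))
    return (ri * g_levels + gi) * b_levels + bi

web_cube = uniform_palette(6, 6, 6)
sizes = [len(uniform_palette(*dims)) for dims in ((6, 6, 6), (6, 7, 6), (6, 8, 5), (8, 8, 4))]
snapped = web_cube[nearest_index((50, 100, 150), 6, 6, 6)]
```

`sizes` reproduces the 216, 252, 240, and 256 entry counts listed above, and `snapped` is the 6×6×6 entry `(51, 102, 153)`.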

3-bit RGB

The minimum RGB binary representation is 3-bit RGB, one bit per component. Typical for early color terminals in the 1970s, it is still used today with the Teletext TV retrieval service.

Colors in web-page design

Colors used in web-page design are commonly specified using RGB; see web colors for an explanation of how colors are used in HTML and related languages. Initially, the limited color depth of most video hardware led to a limited color palette of 216 RGB colors, defined by the Netscape Color Cube. However, with the predominance of 24-bit displays, the use of the full 16.7 million colors of the HTML RGB color code no longer poses problems for most viewers.

In short, the web-safe color palette consists of the 216 combinations of red, green, and blue where each color can take one of six values (in hexadecimal): #00, #33, #66, #99, #CC or #FF (based on the 0 to 255 range for each value discussed above), giving 6³ = 216 colors. These hexadecimal values correspond to 0, 51, 102, 153, 204, and 255 in decimal, or 0%, 20%, 40%, 60%, 80%, and 100% in terms of nominal intensity. This seems a reasonable way to split 216 colors into a cube of dimension 6. However, lacking gamma correction, the perceived intensity on a standard 2.5-gamma CRT or LCD is only 0%, 2%, 10%, 28%, 57%, and 100%. See the actual web-safe color palette for visual confirmation that the majority of the colors produced are very dark, or see the Xona.com Color List for a side-by-side comparison of proper colors next to their equivalents lacking proper gamma correction.

The RGB color model for HTML was formally adopted as an Internet standard in HTML 3.2, although it had been in use for some time before that.
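The percentages quoted above can be reproduced with a pure power-law model of the display. This sketch assumes a gamma of exactly 2.5 and rounds to whole percentages; the names are illustrative.

```python
WEB_SAFE_LEVELS = [0x00, 0x33, 0x66, 0x99, 0xCC, 0xFF]

def displayed_percent(level, gamma=2.5):
    """Approximate displayed intensity (in percent) of an 8-bit level without gamma correction."""
    return round(100 * (level / 255) ** gamma)

nominal = [round(100 * v / 255) for v in WEB_SAFE_LEVELS]
displayed = [displayed_percent(v) for v in WEB_SAFE_LEVELS]
total_colors = len(WEB_SAFE_LEVELS) ** 3
```

`nominal` comes out as 0, 20, 40, 60, 80, 100 and `displayed` as 0, 2, 10, 28, 57, 100, which shows how strongly the lower levels are compressed toward black.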

Color management

Proper reproduction of colors, especially in professional environments, requires color management of all the devices involved in the production process, many of them using RGB. Color management results in several transparent conversions between device-independent and device-dependent color spaces (RGB and others, such as CMYK for color printing) during a typical production cycle, in order to ensure color consistency throughout the process. Along with the creative processing, such interventions on digital images can damage the color accuracy and image detail, especially where the gamut is reduced. Professional digital devices and software tools allow 48 bpp (bits per pixel) images to be manipulated (16 bits per channel), to minimize any such damage.

ICC-compliant applications, such as Adobe Photoshop, use either the Lab color space or the CIE 1931 color space as a Profile Connection Space when translating between color spaces.[21]

RGB model and luminance–chrominance formats relationship

All luminance–chrominance formats used in the different TV and video standards, such as YIQ for NTSC, YUV for PAL, YDbDr for SECAM, and YPbPr for component video, use color difference signals, by which RGB color images can be encoded for broadcasting or recording and later decoded into RGB again to display them. These intermediate formats were needed for compatibility with pre-existing black-and-white TV formats. Also, those color difference signals need lower data bandwidth compared to full RGB signals.

Similarly, current high-efficiency digital color image data compression schemes such as JPEG and MPEG store RGB color internally in YCbCr format, a digital luminance–chrominance format based on YPbPr. The use of YCbCr also allows lossy subsampling of the chroma channels (typically to 4:2:2 or 4:1:1 ratios), which helps reduce the resulting file size.
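As an illustration of such a conversion, the sketch below uses the full-range 8-bit coefficients commonly associated with JPEG files (JFIF, derived from the CCIR 601 luma weights). Which variant a particular codec uses is an assumption here; video standards typically use different ranges and sometimes different weights.

```python
def _clip8(value):
    """Round and clip a value to the 0-255 range."""
    return max(0, min(255, round(value)))

def rgb_to_ycbcr(r, g, b):
    """Convert full-range 8-bit RGB to full-range YCbCr (JFIF-style coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return _clip8(y), _clip8(cb), _clip8(cr)

def ycbcr_to_rgb(y, cb, cr):
    """Inverse conversion back to full-range 8-bit RGB."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return _clip8(r), _clip8(g), _clip8(b)

gray = rgb_to_ycbcr(128, 128, 128)
```

Neutral colors carry no color difference: any gray maps to Cb = Cr = 128, so all of its information sits in the Y channel. This is why the chroma channels tolerate subsampling well.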

See also

- Color banding

- SCART connector

- Color theory

- List of palettes

References

1. Charles A. Poynton (2003). Digital Video and HDTV: Algorithms and Interfaces. Morgan Kaufmann. http://books.google.com/books?id=ra1lcAwgvq4C&pg=RA1-PA234&dq=wavelength+beams+additive&lr=&as_brr=3&ei=Pj_fR_uSFYbktAPs_rHxAQ&sig=1FcBF7Soo3r8ZHG1gS75fDDGjX8.

2. Nicholas Boughen (2003). Lightwave 3d 7.5 Lighting. Wordware Publishing, Inc. http://books.google.com/books?id=Xsq4JiSssMoC&pg=PA216&dq=additive-color&lr=&as_brr=0&ei=V7LeR8aGN6butAO5hMjwAQ&sig=2h6YHKM608DuFvvcsnLNPkWeMzU.

3. R. W. G. Hunt (2004). The Reproduction of Colour (6th ed.). Chichester UK: Wiley–IS&T Series in Imaging Science and Technology. ISBN 0-470-02425-9.

4. Robert Hirsch (2004). Exploring Colour Photography: A Complete Guide. Laurence King Publishing. ISBN 1856694208. http://books.google.com/books?id=4Gx2WItWGYoC&pg=PA28&dq=maxwell+additive+color+photograph+register&lr=&as_brr=0&ei=-K6BR-TrBYGmswP6jqHDCw&sig=KzP8phk345XPVijOyYR_KlffeXc#PPA28,M1.

5. Photographer to the Tsar: Sergei Mikhailovich Prokudin-Gorskii. Library of Congress.

6. The Evolution of Color Pigment Printing.

7. John Logie Baird, Television Apparatus and the Like, U.S. patent, filed in U.K. in 1928.

8. Baird Television: Crystal Palace Television Studios. Previous color television demonstrations in the U.K. and U.S. had been via closed circuit.

9. "Color Television Success in Test", NY Times (1940-08-30), p. 21. Retrieved on 2008-05-12.

10. "CBS Demonstrates Full Color Television", Wall Street Journal (1940-09-05), p. 1.

11. "Television Hearing Set", NY Times (1940-11-13), p. 26. Retrieved on 2008-05-12.

12. Morton, David L. (1999). "Television Broadcasting". A History of Electronic Entertainment Since 1945. IEEE. ISBN 0-7803-9936-6. http://www.ieee.org/portal/cms_docs_iportals/iportals/aboutus/history_center/publications/entertainment/Chapter2.pdf.

13. Steve Wright (2006). Digital Compositing for Film and Video. Focal Press. ISBN 024080760X. http://books.google.com/books?id=IpSRykrRamgC&pg=PA265&ots=psmPVWAJtK&dq=display+gamma+2.2+0.5&sig=eVscoBjp0EZoGsLSVtMNkZtuRyI#PPA265,M1.

14. Edwin Paul J. Tozer (2004). Broadcast Engineer's Reference Book. Elsevier. http://books.google.com/books?id=DL73f4vFeEwC&pg=PA179&dq=rgb++gamma+assumed&lr=&as_brr=3&ei=mwvQR50Hmb6zA-CI0KQF&sig=TgFR2jnLR5QhA7itDo2mHXdXfpQ#PPA180,M1.

15. Bernice Ellen Rogowitz and Thrasyvoulos N. Pappas (1998). Human Vision and Electronic Imaging III. SPIE. http://books.google.com/books?id=zPdRAAAAMAAJ&q=gamma+assumed+rgb+100%25&dq=gamma+assumed+rgb+100%25&lr=&as_brr=0&ei=UwzQR8GpI42msgO0_oylBQ&pgis=1.

16. "Gamma".

17. About roundoff errors in color conversion in Adobe tools.

18. About Microsoft Windows and palettes.

19. Władysław Skarbek (ed.) (2001). Computer Analysis of Images and Patterns: 9th International Conference, CAIP 2001. Springer. ISBN 3540425136. http://books.google.com/books?id=5bS4NTsx370C&pg=PA88&dq=rgb+255+round+error+accumulation&lr=&as_brr=0&ei=tDTER8eKC57-swP_ubWxCA&sig=XpZObbRVxJinweyAWcwinwcQya0#PPA88,M1.

20. Cowlishaw, M. F. (1985). "Fundamental requirements for picture presentation" (PDF). Proc. Society for Information Display 26 (2): 101–107. http://www.cary.demon.co.uk/buss/cowlishaw1985-fundamental.pdf.

21. ICC. "Why Color Management?". Retrieved on 2008-04-16. "The two PCS's in the ICC system are CIE-XYZ and CIELAB"
