Probability space

A probability space, in probability theory, is the conventional mathematical model of randomness. This mathematical object, sometimes also called a probability triple, formalizes three interrelated ideas by three mathematical notions. First, a sample point (also called an elementary event): something to be chosen at random (an outcome of an experiment, a state of nature, a possibility, etc.). Second, an event: something that will occur or not, depending on the chosen elementary event. Third, the probability of an event. The definition (see below) was introduced by Kolmogorov in the 1930s. For an algebraic alternative to Kolmogorov's approach, see algebra of random variables. Alternative models of randomness (finitely additive probability, non-additive probability) are sometimes advocated in connection with various probability interpretations.


Definition

A probability space is a measure space such that the measure of the whole space is equal to 1.

In other words, a probability space is a triple $(\Omega, \mathcal{F}, P)$ consisting of a set $\Omega$ (called the sample space), a σ-algebra (also called σ-field) $\mathcal{F}$ of subsets of $\Omega$ (these subsets are called events), and a measure $P$ on $(\Omega, \mathcal{F})$ such that $P(\Omega) = 1$ (called the probability measure).

Discrete case

Discrete probability theory needs only at most countable sample spaces  , which makes the foundations much less technical. Probabilities can be ascribed to points of

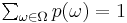

, which makes the foundations much less technical. Probabilities can be ascribed to points of  by a function

by a function ![\textstyle p�: \Omega \to [0,1]](/2009-wikipedia_en_wp1-0.7_2009-05/I/f3c0f1ee451370b490b0a9dfcd3bdef4.png) such that

such that  . All subsets of

. All subsets of  can be treated as events (thus,

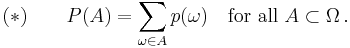

can be treated as events (thus,  is the power set). The probability measure takes the simple form

is the power set). The probability measure takes the simple form

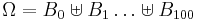
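
The sum formula for the discrete probability measure can be sketched in Python. This is only an illustration: the loaded four-sided die and the names `p` and `prob` are assumptions made for the example, not part of the definition.

```python
from fractions import Fraction

# Hypothetical example: a loaded four-sided die with point masses p(omega).
p = {1: Fraction(1, 2), 2: Fraction(1, 4), 3: Fraction(1, 8), 4: Fraction(1, 8)}

# The point masses must sum to 1 over the whole sample space.
assert sum(p.values()) == 1

def prob(event):
    """P(A): the sum of the point masses p(omega) over all omega in A."""
    return sum((p[omega] for omega in event), Fraction(0))

omega_space = set(p)
print(prob({1, 3}))        # probability of the event {1, 3}
print(prob(omega_space))   # probability of the whole sample space
print(prob(set()))         # probability of the empty event
```

Exact fractions are used instead of floats so that the normalization check is not disturbed by rounding.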

The greatest σ-algebra  describes the complete information. In general, a σ-algebra

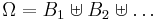

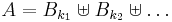

describes the complete information. In general, a σ-algebra  corresponds to a (finite or countable) partition

corresponds to a (finite or countable) partition  , the general form of an event

, the general form of an event  being

being  (Here

(Here  means the union of disjoint sets.) See also Examples.

means the union of disjoint sets.) See also Examples.

The case $p(\omega) = 0$ is permitted by the definition, but rarely used, since such $\omega$ can safely be excluded from the sample space.

General case

If $\Omega$ is uncountable, it may still happen that $p(\omega) \neq 0$ for some $\omega$; such $\omega$ are called atoms. They form an at most countable (possibly empty) set, whose probability is the sum of the probabilities of all atoms. If this sum is equal to 1, then all other points can safely be excluded from the sample space, returning us to the discrete case. Otherwise, if the sum of the probabilities of all atoms is less than 1 (possibly 0), then the probability space decomposes into a discrete (atomic) part (possibly empty) and a non-atomic part.

Non-atomic case

If $p(\omega) = 0$ for all $\omega \in \Omega$, then Equation (*) fails: the probability of a set is not the sum over its elements, which makes the theory much more technical. Initially the probabilities are ascribed to some 'elementary' sets (see Examples). Then a limiting procedure allows probabilities to be ascribed to sets that are limits of sequences of elementary sets, or limits of limits, and so on. All these sets form the σ-algebra $\mathcal{F}$. For technical details see Carathéodory's extension theorem. Sets belonging to $\mathcal{F}$ are called measurable. In general they are much more complicated than elementary sets, but much better behaved than non-measurable sets.

Examples

Discrete examples

Example 1

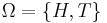

If the space concerns one flip of a fair coin, then the outcomes are heads and tails:  . The σ-algebra

. The σ-algebra  contains

contains  events, namely,

events, namely,  : heads,

: heads,  : tails,

: tails,  : neither heads nor tails, and

: neither heads nor tails, and  : heads or tails. So,

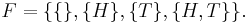

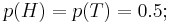

: heads or tails. So,  There is a fifty percent chance of tossing either heads or tail:

There is a fifty percent chance of tossing either heads or tail:  thus

thus  The chance of tossing neither is zero:

The chance of tossing neither is zero:  and the chance of tossing one or the other is one:

and the chance of tossing one or the other is one:
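
As a rough sketch, the four events of the single coin flip and their probabilities can be enumerated in Python. The helper name `power_set` and the dictionary `P` are illustrative choices, not standard notation.

```python
from fractions import Fraction
from itertools import chain, combinations

omega = ["H", "T"]
p = {"H": Fraction(1, 2), "T": Fraction(1, 2)}   # fair coin

def power_set(points):
    """All subsets of a finite collection, returned as frozensets."""
    subsets = chain.from_iterable(
        combinations(points, r) for r in range(len(points) + 1)
    )
    return [frozenset(s) for s in subsets]

# The sigma-algebra of complete information is the power set of omega.
events = power_set(omega)

# Probability of each event: the sum of the point masses it contains.
P = {A: sum((p[w] for w in A), Fraction(0)) for A in events}

for A in events:
    print(sorted(A), P[A])
```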

Example 2

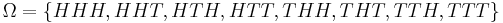

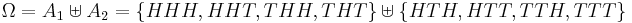

The fair coin is tossed 3 times. There are 8 possibilities:  . The complete information is described by the σ-algebra

. The complete information is described by the σ-algebra  of

of  events (just one of them:

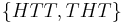

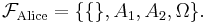

events (just one of them:  ). Alice knows the outcome of the second toss only. Her incomplete information is described by the partition

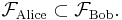

). Alice knows the outcome of the second toss only. Her incomplete information is described by the partition  and the corresponding σ-algebra

and the corresponding σ-algebra  Bob knows only the total number of heads. His partition

Bob knows only the total number of heads. His partition  contains 4 parts; accordingly, his σ-algebra

contains 4 parts; accordingly, his σ-algebra  contains

contains  events (just one of them:

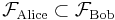

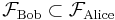

events (just one of them:  ). The two σ-algebras are incomparable (neither

). The two σ-algebras are incomparable (neither  nor

nor  ); both are sub-σ-algebras of

); both are sub-σ-algebras of
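
The correspondence between partitions and σ-algebras in this example can be checked by brute force. The following is a minimal sketch; the function names `partition_by` and `sigma_algebra` are made up for illustration, and the approach works only for finite sample spaces.

```python
from itertools import combinations, product

# Sample space for three tosses of a coin.
omega = frozenset("".join(t) for t in product("HT", repeat=3))

def partition_by(key):
    """Group outcomes into blocks on which `key` takes a constant value."""
    blocks = {}
    for w in omega:
        blocks.setdefault(key(w), set()).add(w)
    return [frozenset(b) for b in blocks.values()]

def sigma_algebra(blocks):
    """All unions of blocks of a finite partition (including the empty union)."""
    events = set()
    for r in range(len(blocks) + 1):
        for chosen in combinations(blocks, r):
            events.add(frozenset().union(*chosen))
    return events

alice = sigma_algebra(partition_by(lambda w: w[1]))          # second toss only
bob = sigma_algebra(partition_by(lambda w: w.count("H")))    # number of heads

print(len(alice), len(bob))          # sizes of the two sigma-algebras
print(alice <= bob, bob <= alice)    # inclusion tests in both directions
```

A partition with k blocks yields 2^k events, which is why the two counts differ.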

Example 3

If 100 voters are to be drawn randomly from among all voters in California and asked whom they will vote for governor, then the set of all sequences of 100 Californian voters would be the sample space $\Omega$. (Assuming that sampling without replacement is used, only sequences of 100 different voters are allowed. An ordered sample is considered here; otherwise, 100-element sets of voters should be considered instead of sequences.)

The set of all sequences of 100 Californian voters in which at least 60 will vote for Schwarzenegger is identified with the event that at least 60 of the 100 chosen voters will so vote.

Alice knows only whether this specific event occurs or not. Her incomplete information is described by the σ-algebra $\mathcal{F}_\text{Alice}$ that contains: (1) the set of all sequences of 100 where at least 60 vote for Schwarzenegger; (2) the set of all sequences of 100 where fewer than 60 vote for Schwarzenegger (the complement of (1)); (3) the whole sample space Ω as above; and (4) the empty set.

Bob knows the number of voters who will vote for Schwarzenegger in the sample of 100. His incomplete information is described by the corresponding partition  (assuming that all these sets are nonempty, which depends on Californian voters...) and the σ-algebra

(assuming that all these sets are nonempty, which depends on Californian voters...) and the σ-algebra  of

of  events.

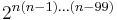

events.  The complete information is described by the much larger σ-algebra

The complete information is described by the much larger σ-algebra  of

of  events, where

events, where  is the number of all voters in California.

is the number of all voters in California.

Non-atomic examples

Example 4

A number between  and

and  is chosen at random, uniformly. Here

is chosen at random, uniformly. Here ![\textstyle \Omega = [0,1],](/2009-wikipedia_en_wp1-0.7_2009-05/I/2147ebada24ef51774114b137b9bd7e1.png)

is the Lebesgue measure on

is the Lebesgue measure on ![\textstyle [0,1],](/2009-wikipedia_en_wp1-0.7_2009-05/I/8848019ede0ebafa35c10ec94131bdd1.png) and

and  is the σ-algebra of all measurable subsets of

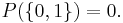

is the σ-algebra of all measurable subsets of ![\textstyle [0,1].](/2009-wikipedia_en_wp1-0.7_2009-05/I/17a24f85d600e9bde0824ae4c92ccef0.png) (The set

(The set  may be used equally well, since

may be used equally well, since  )

)
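
For the simplest measurable sets in this example, finite unions of intervals, the uniform probability is just the total length. A minimal sketch follows; the function name `measure_of_union` is hypothetical, and it does not handle general measurable sets.

```python
def measure_of_union(intervals):
    """Total length of a finite union of closed intervals [a, b], clipped to [0, 1]."""
    total = 0.0
    current_end = 0.0                      # right edge of what is already covered
    for a, b in sorted(intervals):
        a, b = max(a, 0.0), min(b, 1.0)    # clip to the sample space [0, 1]
        start = max(a, current_end)        # skip the part that is already counted
        if b > start:
            total += b - start
            current_end = b
    return total

# Overlapping intervals are counted once.
print(measure_of_union([(0.0, 0.25), (0.125, 0.5), (0.75, 1.0)]))
# The two endpoints alone have probability zero.
print(measure_of_union([(0.0, 0.0), (1.0, 1.0)]))
```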

Intervals (or their finite unions) may be used as elementary sets.

Example 5

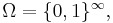

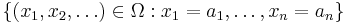

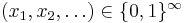

A fair coin is tossed endlessly. Here one can take  the set of all infinite sequences of numbers 0 and 1. Cylinder sets

the set of all infinite sequences of numbers 0 and 1. Cylinder sets  (or their finite unions) may be used as elementary sets.

(or their finite unions) may be used as elementary sets.

These two non-atomic examples are closely related: a sequence $(x_1, x_2, \dots) \in \{0,1\}^\infty$ leads to the number $\frac{x_1}{2^1} + \frac{x_2}{2^2} + \dots \in [0,1]$. This is not a one-to-one correspondence between $\{0,1\}^\infty$ and $[0,1]$; however, it is an isomorphism modulo zero, which allows for treating the two probability spaces as two forms of the same probability space. In fact, all non-pathological non-atomic probability spaces are the same (in this sense); see standard probability space.
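
The failure of one-to-one correspondence comes from numbers with two binary expansions, such as 1/2, which is the image of both 1000... and 0111.... A small sketch on finite prefixes illustrates this; the function name `to_number` and the prefix length `n` are arbitrary choices for the example.

```python
from fractions import Fraction

def to_number(bits):
    """Map a finite prefix (x_1, ..., x_n) to x_1/2 + x_2/4 + ... + x_n/2^n."""
    return sum(
        (Fraction(x, 2 ** k) for k, x in enumerate(bits, start=1)),
        Fraction(0),
    )

n = 20
a = [1] + [0] * (n - 1)   # prefix of the sequence 1000...
b = [0] + [1] * (n - 1)   # prefix of the sequence 0111...

# The two prefixes differ by exactly 2^-n, so the infinite sequences share a limit.
gap = to_number(a) - to_number(b)
print(gap == Fraction(1, 2 ** n))
```

Only countably many numbers (the dyadic rationals) have two expansions, so the exceptional set has probability zero.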

Related concepts

Probability distribution

Any probability distribution defines a probability measure.

Random variables

A random variable X is a measurable function from the sample space $\Omega$ to another measurable space called the state space.

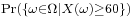

If X is a real-valued random variable, then the notation  is shorthand for

is shorthand for  , assuming that

, assuming that  is an event.

is an event.
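
To make the shorthand concrete, here is a sketch on the three-toss sample space, taking X to be the number of heads. The choice of X and of the threshold 2 is an assumption made for illustration.

```python
from fractions import Fraction
from itertools import product

omega = ["".join(t) for t in product("HT", repeat=3)]
p = {w: Fraction(1, 8) for w in omega}   # fair coin: all outcomes equally likely

def X(w):
    """Random variable: the number of heads in the outcome w."""
    return w.count("H")

def prob(event):
    """Probability of an event as the sum of its point masses."""
    return sum((p[w] for w in event), Fraction(0))

# "P(X >= 2)" unpacks to the probability of the set {w : X(w) >= 2}.
event = {w for w in omega if X(w) >= 2}
print(sorted(event), prob(event))
```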

Defining the events in terms of the sample space

If $\Omega$ is countable, we almost always define $\mathcal{F}$ as the power set of $\Omega$, i.e. $\mathcal{F} = 2^\Omega$, which is trivially a σ-algebra and the biggest one we can create using $\Omega$. We can therefore omit $\mathcal{F}$ and just write $(\Omega, P)$ to define the probability space.

On the other hand, if  is uncountable and we use

is uncountable and we use  we get into trouble defining our probability measure

we get into trouble defining our probability measure  because

because  is too 'huge', i.e. there will often be sets to which it will be impossible to assign a unique measure, giving rise to problems like the Banach–Tarski paradox. In this case, we have to use a smaller σ-algebra

is too 'huge', i.e. there will often be sets to which it will be impossible to assign a unique measure, giving rise to problems like the Banach–Tarski paradox. In this case, we have to use a smaller σ-algebra  (e.g. the Borel algebra of

(e.g. the Borel algebra of  , which is the smallest σ-algebra that makes all open sets measurable).

, which is the smallest σ-algebra that makes all open sets measurable).

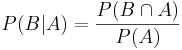

Conditional probability

Kolmogorov's definition of probability spaces gives rise to the natural concept of conditional probability. Every set $A$ with non-zero probability (that is, P(A) > 0) defines another probability measure

$$P(B \mid A) = \frac{P(B \cap A)}{P(A)}$$

on the space. This is usually read as the "probability of B given A".
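
A minimal sketch of conditional probability on the three-toss sample space follows. The events A and B and the helper names `prob` and `cond_prob` are chosen only for illustration.

```python
from fractions import Fraction
from itertools import product

omega = frozenset("".join(t) for t in product("HT", repeat=3))

def prob(event):
    """Uniform measure on the eight equally likely outcomes."""
    return Fraction(len(event), len(omega))

def cond_prob(b, a):
    """P(B | A) = P(B ∩ A) / P(A); only defined when P(A) > 0."""
    if prob(a) == 0:
        raise ValueError("conditioning event must have non-zero probability")
    return prob(b & a) / prob(a)

A = frozenset(w for w in omega if w[1] == "H")          # second toss is heads
B = frozenset(w for w in omega if w.count("H") >= 2)    # at least two heads

print(cond_prob(B, A))       # probability of B given A
print(cond_prob(omega, A))   # the conditional measure still gives the whole space 1
```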

Independence

Two events A and B are said to be independent if P(A∩B)=P(A)P(B).

Two random variables, X and Y, are said to be independent if any event defined in terms of X is independent of any event defined in terms of Y. Formally, they generate independent σ-algebras, where two σ-algebras G and H, which are subsets of F are said to be independent if any element of G is independent of any element of H.
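
The product rule for independent events can be tested directly on a finite space. The sketch below uses three tosses of a fair coin; the event names and the helper `independent` are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product

omega = frozenset("".join(t) for t in product("HT", repeat=3))

def prob(event):
    """Uniform measure on the eight equally likely outcomes."""
    return Fraction(len(event), len(omega))

def independent(a, b):
    """Check the defining identity P(A ∩ B) = P(A) P(B)."""
    return prob(a & b) == prob(a) * prob(b)

first_heads = frozenset(w for w in omega if w[0] == "H")
second_heads = frozenset(w for w in omega if w[1] == "H")
two_or_more = frozenset(w for w in omega if w.count("H") >= 2)

print(independent(first_heads, second_heads))   # separate tosses
print(independent(first_heads, two_or_more))    # the count depends on the first toss
```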

The concept of independence is where probability theory departs from measure theory. The definition above is purely formal, however: it does not by itself allow further examination, for example of causation, and applying it uncritically can lead to type I and type II errors. In that sense, the definition alone leads to a blind alley.

It might be useful to apply Bayesian calculus to independent events and to try to deduce the corresponding equations within that formalism.

Mutual exclusivity

Two events A and B are said to be mutually exclusive or disjoint if P(A∩B)=0. (This is weaker than A∩B=∅, which is the definition of disjoint for sets.)

If A and B are disjoint events, then P(A∪B)=P(A)+P(B). This extends to a (finite or countably infinite) sequence of events. However, the probability of the union of an uncountable set of events is in general not the sum of their probabilities. For example, if Z is a normally distributed random variable, then P(Z=x) is 0 for any x, but P(Z is real)=1.

The event A∩B is referred to as A AND B, and the event A∪B as A OR B.

Bibliography

- Pierre Simon de Laplace (1812) Analytical Theory of Probability. The first major treatise blending calculus with probability theory, originally in French: Théorie Analytique des Probabilités.

- Andrei Nikolajevich Kolmogorov (1950) Foundations of the Theory of Probability. The modern measure-theoretic foundation of probability theory; the original German version (Grundbegriffe der Wahrscheinlichkeitsrechnung) appeared in 1933.

- Harold Jeffreys (1939) The Theory of Probability. An empiricist, Bayesian approach to the foundations of probability theory.

- Edward Nelson (1987) Radically Elementary Probability Theory. Discrete foundations of probability theory, based on nonstandard analysis and internal set theory. Downloadable from http://www.math.princeton.edu/~nelson/books.html

- Patrick Billingsley (1979) Probability and Measure. John Wiley and Sons, New York, Toronto, London.

- Henk Tijms (2004) Understanding Probability. A lively introduction to probability theory for the beginner. Cambridge Univ. Press.

- David Williams (1991) Probability with Martingales. An undergraduate introduction to measure-theoretic probability. Cambridge Univ. Press.

- Allan Gut (2005) Probability: A Graduate Course. Springer. ISBN 0387228330.