Ohm

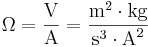

The ohm (symbol: Ω) is the SI unit of electrical impedance or, in the direct current case, electrical resistance, named after Georg Ohm.


Definition

The ohm is defined as the electric resistance between two points of a conductor when a constant potential difference of 1 volt, applied to these points, produces in the conductor a current of 1 ampere, the conductor not being the seat of any electromotive force.[1]
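Written as a unit equation, the definition above amounts to one volt per ampere. The expansion into SI base units shown here is not part of the text above; it is the standard SI decomposition, included only for orientation:

$$1\ \Omega = \frac{1\ \mathrm{V}}{1\ \mathrm{A}} = 1\ \mathrm{kg \cdot m^2 \cdot s^{-3} \cdot A^{-2}}$$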

In many cases the resistance of a conductor is approximately constant within a certain range of voltages, temperatures, and other parameters; such conductors are called linear resistors. In other cases the resistance varies with these parameters (e.g., in thermistors, whose resistance depends on temperature).

The most commonly used multiples and submultiples in electrical and electronic work are the milliohm (mΩ), kilohm (kΩ), and megohm (MΩ), alongside the ohm itself.
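As a rough illustration of how these multiples relate, the following sketch picks the largest of the four units that keeps the displayed number at or above 1. The function name `format_resistance` and the fallback to milliohms for very small values are assumptions made for this example, not an established convention.

```python
def format_resistance(ohms: float) -> str:
    """Express a resistance in megohms, kilohms, ohms, or milliohms."""
    # (size of the unit in ohms, unit name), largest first
    units = [
        (1e6, "megohm"),
        (1e3, "kilohm"),
        (1.0, "ohm"),
        (1e-3, "milliohm"),
    ]
    magnitude = abs(ohms)
    for factor, name in units:
        if magnitude >= factor:
            return f"{ohms / factor:g} {name}"
    # Assumption: anything below 1 milliohm (including zero) is shown in milliohms
    return f"{ohms / 1e-3:g} milliohm"


print(format_resistance(4700))    # 4.7 kilohm
print(format_resistance(2.2e6))   # 2.2 megohm
print(format_resistance(0.05))    # 50 milliohm
```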

Conversions

- The SI unit of electrical conductance is the siemens (symbol: S), formerly known as the mho (ohm spelled backwards); conductance is the reciprocal of resistance, so one siemens is the reciprocal of one ohm.
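The reciprocal relationship can be sketched in a few lines. The function names below are hypothetical, and rejecting a zero input with an error is a choice made for this example rather than a rule from the text.

```python
def ohms_to_siemens(resistance_ohms: float) -> float:
    """Convert a resistance in ohms to a conductance in siemens."""
    if resistance_ohms == 0:
        # Assumption: treat zero resistance as an error instead of returning infinity
        raise ValueError("zero resistance has no finite conductance")
    return 1.0 / resistance_ohms


def siemens_to_ohms(conductance_siemens: float) -> float:
    """Convert a conductance in siemens to a resistance in ohms."""
    if conductance_siemens == 0:
        raise ValueError("zero conductance has no finite resistance")
    return 1.0 / conductance_siemens


print(ohms_to_siemens(4.0))    # 0.25
print(siemens_to_ohms(0.25))   # 4.0
```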

Power as a function of resistance

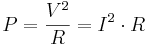

The power dissipated by a linear resistor may be calculated from its resistance and either the voltage across it or the current through it. The formula combines Ohm's law and Joule's law:

$$P = \frac{V^2}{R} = I^2 R$$

where P is the power in watts, R the resistance in ohms, V the voltage across the resistor in volts, and I the current through it in amperes.

This formula is not applicable to devices whose resistance varies with current.
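A minimal numerical sketch of this calculation, assuming the standard relations P = V²/R and P = I²R for a linear resistor. The function names are illustrative only, and the example values (12 V across 6 Ω, hence 2 A by Ohm's law) are made up.

```python
def power_from_voltage(voltage_volts: float, resistance_ohms: float) -> float:
    """Power in watts dissipated by a linear resistor, from P = V^2 / R."""
    return voltage_volts ** 2 / resistance_ohms


def power_from_current(current_amperes: float, resistance_ohms: float) -> float:
    """Power in watts dissipated by a linear resistor, from P = I^2 * R."""
    return current_amperes ** 2 * resistance_ohms


# Hypothetical example: 12 V across a 6 ohm resistor drives 2 A through it
print(power_from_voltage(12.0, 6.0))   # 24.0
print(power_from_current(2.0, 6.0))    # 24.0
```

Both forms give the same result because the voltage and current are linked by Ohm's law; for a device whose resistance changes with current, a single fixed value of R would not describe it and these functions would not apply.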