Norm (mathematics)

In linear algebra, functional analysis and related areas of mathematics, a norm is a function that assigns a strictly positive length or size to all vectors in a vector space, other than the zero vector. A seminorm (or pseudonorm), on the other hand, is allowed to assign zero length to some non-zero vectors.

A simple example is the two-dimensional Euclidean space ℝ² equipped with the Euclidean norm. Elements of this vector space (e.g., (3, 7)) are usually drawn as arrows in a two-dimensional Cartesian coordinate system starting at the origin (0, 0). The Euclidean norm assigns to each vector the length of its arrow. Because of this, the Euclidean norm is often known as the magnitude.

A vector space with a norm is called a normed vector space. Similarly, a vector space with a seminorm is called a seminormed vector space.


Definition

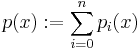

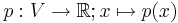

Given a vector space V over a subfield F of the complex numbers such as the complex numbers themselves or the real or rational numbers, a seminorm on V is a function  with the following properties:

with the following properties:

For all a in F and all u and v in V,

- p(a v) = |a| p(v) (positive homogeneity or positive scalability),

- p(u + v) ≤ p(u) + p(v) (triangle inequality or subadditivity).

A simple consequence of these two axioms, positive homogeneity and the triangle inequality, is p(0) = 0 and thus

- p(v) ≥ 0 (positivity).
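Both consequences follow in one line each from the two axioms:

$$p(0) = p(0 \cdot v) = |0|\,p(v) = 0, \qquad 0 = p(v + (-v)) \le p(v) + p(-v) = p(v) + |-1|\,p(v) = 2\,p(v).$$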

A norm is a seminorm with the additional property

- p(v) = 0 if and only if v is the zero vector (positive definiteness).

Every vector space admits a seminorm (e.g., the trivial seminorm in the Examples section below), but a given seminorm need not be a norm. A seminorm p on V induces a norm on the quotient space V/W, where W is the subspace of V consisting of all vectors v in V with p(v) = 0. The induced norm is well defined because p is constant on each coset of W: if v − v′ is in W, then |p(v) − p(v′)| ≤ p(v − v′) = 0. It is given by:

- p(W+v) = p(v).

A topological vector space is called normable (seminormable) if the topology of the space can be induced by a norm (seminorm).

Notation

The norm of a vector v is usually denoted ||v||, and sometimes |v|. However, the latter notation is generally discouraged, because it is also used to denote the absolute value of scalars and the determinant of matrices.

Examples

- All norms are seminorms.

- The trivial seminorm, with p(x) = 0 for all x in V.

- The absolute value is a norm on the real numbers.

- Every linear form f on a vector space defines a seminorm by x → |f(x)|.
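The last example shows how a seminorm can assign zero length to a non-zero vector. The sketch below assumes a particular linear form on ℝ², f(x1, x2) = x1 − x2, chosen only for illustration; the names `f` and `p` are hypothetical.

```python
# Minimal sketch: the seminorm x -> |f(x)| induced by a linear form f on R^2.
# The form f(x1, x2) = x1 - x2 is a hypothetical choice for illustration.

def f(x):
    """Linear form f(x1, x2) = x1 - x2."""
    return x[0] - x[1]

def p(x):
    """Seminorm induced by f."""
    return abs(f(x))

u = (1.0, 1.0)   # non-zero vector in the kernel of f
v = (2.0, -1.0)
a = -3.0

print(p(u))                                          # 0.0 although u != 0
print(p((a * v[0], a * v[1])) == abs(a) * p(v))      # positive homogeneity
print(p((u[0] + v[0], u[1] + v[1])) <= p(u) + p(v))  # triangle inequality
```

Because p vanishes on the whole line spanned by (1, 1), it is a seminorm but not a norm.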

Euclidean norm

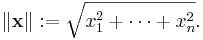

On Rn, the intuitive notion of length of the vector x = [x1, x2, ..., xn] is captured by the formula

This gives the ordinary distance from the origin to the point x, a consequence of the Pythagorean theorem. The Euclidean norm is by far the most commonly used norm on Rn, but there are other norms on this vector space as will be shown below.

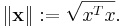

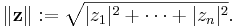

On Cn the most common norm is

, equivalent with the Euclidean norm on R2n.

, equivalent with the Euclidean norm on R2n.

In each case we can also express the norm as the square root of the inner product of the vector and itself. The Euclidean norm is also called the L2 distance or L2 norm; see Lp space.
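A minimal sketch of the statement above, using only the standard library: the Euclidean norm of a real or complex vector equals the square root of its inner product with itself, and a vector in ℂⁿ has the same norm as its image in ℝ²ⁿ. The helper names `euclidean_norm` and `inner` are hypothetical.

```python
import math

def euclidean_norm(x):
    """Euclidean norm of a real or complex vector: sqrt(sum |x_i|^2)."""
    return math.sqrt(sum(abs(xi) ** 2 for xi in x))

def inner(x, y):
    """Standard inner product <x, y> = sum x_i * conj(y_i)."""
    return sum(xi * complex(yi).conjugate() for xi, yi in zip(x, y))

x = [3.0, 4.0]
z = [1 + 2j, 3 - 1j]

print(euclidean_norm(x))              # 5.0
print(math.sqrt(inner(z, z).real))    # sqrt(15), same as euclidean_norm(z) up to rounding
# Identifying C^2 with R^4: (1+2j, 3-1j) -> (1, 2, 3, -1)
print(euclidean_norm([1, 2, 3, -1]))  # sqrt(15) again
```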

In ℝⁿ, the set of vectors whose Euclidean norm equals a given positive constant forms the surface of a ball, which is an (n − 1)-sphere.

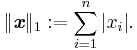

Taxicab norm or Manhattan norm

The name relates to the distance a taxi has to drive in a rectangular street grid to get from the origin to the point x.

The set of vectors whose 1-norm equals a given positive constant forms the surface of a cross-polytope, which in ℝⁿ has dimension n − 1.
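To make the taxi analogy concrete, the short sketch below compares the 1-norm of a vector with its Euclidean length. The function name `taxicab_norm` is hypothetical.

```python
import math

def taxicab_norm(x):
    """1-norm: sum of the absolute values of the coordinates."""
    return sum(abs(xi) for xi in x)

x = (3, -4)
print(taxicab_norm(x))   # 7: blocks driven along the grid
print(math.hypot(*x))    # 5.0: straight-line (Euclidean) distance
```

The grid distance is never shorter than the straight-line distance, which matches the inequality ‖x‖₂ ≤ ‖x‖₁.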

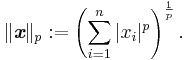

p-norm

Let p ≥ 1 be a real number.

Note that for p = 1 we get the taxicab norm and for p = 2 we get the Euclidean norm.

This formula is also valid for 0 < p < 1, but the resulting function does not define a norm,[1] because it violates the triangle inequality.
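A minimal sketch of the p-norm formula (the name `p_norm` is hypothetical). It recovers the taxicab and Euclidean norms for p = 1 and p = 2, and for p = 1/2 it exhibits the failure of the triangle inequality on the standard basis vectors of ℝ².

```python
def p_norm(x, p):
    """(sum |x_i|^p)^(1/p); this is a norm only for p >= 1."""
    if p <= 0:
        raise ValueError("p must be positive")
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)

print(p_norm((3, -4), 1), p_norm((3, -4), 2))   # 7.0 5.0

u, v = (1.0, 0.0), (0.0, 1.0)
w = (u[0] + v[0], u[1] + v[1])

# p = 1/2: ||u + v|| = (1 + 1)^2 = 4, but ||u|| + ||v|| = 2
print(p_norm(w, 0.5), p_norm(u, 0.5) + p_norm(v, 0.5))
# p = 3: the triangle inequality holds
print(p_norm(w, 3) <= p_norm(u, 3) + p_norm(v, 3))
```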

Taking the limit p → ∞ yields the uniform norm, and taking the limit p → 0 yields the so-called zero norm, which, despite the name, is not a norm.

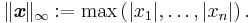

Infinity norm or maximum norm

The set of vectors whose infinity norm is a given constant, c, forms the surface of a hypercube with edge length 2c.
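The sketch below illustrates the limit p → ∞: for a fixed vector the p-norms decrease as p grows and approach the maximum norm. The small `p_norm` helper is repeated so the block runs on its own; both function names are hypothetical.

```python
def max_norm(x):
    """Infinity norm: largest absolute coordinate."""
    return max(abs(xi) for xi in x)

def p_norm(x, p):
    """(sum |x_i|^p)^(1/p) for p >= 1."""
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)

x = (1.0, -3.0, 2.0)
print(max_norm(x))  # 3.0
for p in (1, 2, 10, 100):
    print(p, p_norm(x, p))  # decreases toward 3.0 as p grows
```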

Zero norm

In the machine learning and optimization literature, one often finds reference to the zero norm. The zero norm of x is defined as

$$\|x\|_0 := \lim_{p \to 0} \|x\|_p^p,$$

where ‖x‖_p is the p-norm defined above. If we define 0⁰ := 0, then we can write the zero norm as

$$\|x\|_0 = \sum_{i=1}^{n} |x_i|^0.$$

It follows that the zero norm of x is simply the number of non-zero elements of x. Despite its name, the zero norm is not a true norm; in particular, it is not positively homogeneous. It can be defined over arbitrary fields, not only the real or complex numbers. In information theory, particularly over the two-element field GF(2), the zero norm of x is called the Hamming weight of x, and the zero norm of x − y is the Hamming distance between x and y.
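A minimal sketch of the zero norm as a count of non-zero entries (the name `zero_norm` is hypothetical). It also shows that scaling a vector leaves the count unchanged, so the function is not positively homogeneous, and that over GF(2), where subtraction is bitwise XOR, the zero norm of a difference counts the positions where two words differ.

```python
def zero_norm(x):
    """Number of non-zero entries of x (the 'zero norm'; not a true norm)."""
    return sum(1 for xi in x if xi != 0)

x = [0, 3, 0, -1.5]
print(zero_norm(x))                     # 2
print(zero_norm([2 * xi for xi in x]))  # still 2, not 2 * 2

# Over GF(2), a - b is computed with XOR; the result counts differing positions.
a = [1, 0, 1, 1, 0]
b = [0, 0, 1, 0, 1]
print(zero_norm([ai ^ bi for ai, bi in zip(a, b)]))  # 3
```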

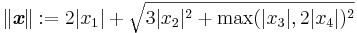

Other norms

Other norms on Rn can be constructed by combining the above; for example

is a norm on R4.

For any norm and any bijective linear transformation A we can define a new norm of x, equal to ‖Ax‖.

In 2D, with A a rotation by 45° and a suitable scaling, this changes the taxicab norm into the maximum norm. In 2D, each A applied to the taxicab norm, up to inversion and interchanging of axes, gives a different unit ball: a parallelogram of a particular shape, size and orientation. In 3D this is similar but different for the 1-norm (octahedrons) and the maximum norm (prisms with parallelogram base).
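The 2D claim can be checked directly. This sketch assumes A is the counterclockwise rotation by 45° scaled by 1/√2, that is A = (1/2)[[1, −1], [1, 1]]; then ‖Ax‖₁ = max(|x1|, |x2|), because |a − b| + |a + b| = 2 max(|a|, |b|). The helper names are hypothetical.

```python
def taxicab(x):
    """1-norm on R^2."""
    return abs(x[0]) + abs(x[1])

def apply(A, x):
    """Multiply the 2x2 matrix A by the vector x."""
    return (A[0][0] * x[0] + A[0][1] * x[1],
            A[1][0] * x[0] + A[1][1] * x[1])

# Rotation by 45 degrees scaled by 1/sqrt(2): (1/2) * [[1, -1], [1, 1]]
A = ((0.5, -0.5), (0.5, 0.5))

def transformed_norm(x):
    """New norm x -> ||A x||_1."""
    return taxicab(apply(A, x))

for x in [(3.0, -1.0), (0.25, 2.0), (-4.0, 4.0)]:
    print(transformed_norm(x), max(abs(x[0]), abs(x[1])))  # the two values agree
```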

All the above formulas also yield norms on ℂⁿ without modification.

Infinite dimensional case

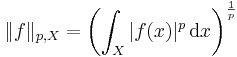

The generalization of the above norms to an infinite number of components leads to the Lp spaces, with norms

resp.

resp.

(for complex-valued sequences x resp. functions f defined on  ), which can be further generalized (see Haar measure).

), which can be further generalized (see Haar measure).
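For functions, the integral can be approximated numerically. The sketch below uses the midpoint rule, a quadrature method chosen here only for illustration, to estimate the L2 norm of f(x) = x on [0, 1], whose exact value is (∫₀¹ x² dx)^{1/2} = 1/√3. The function name and the number of subintervals are assumptions.

```python
def lp_norm_function(f, a, b, p, n=100_000):
    """Approximate (integral_a^b |f(x)|^p dx)^(1/p) with the midpoint rule on n subintervals."""
    h = (b - a) / n
    total = sum(abs(f(a + (k + 0.5) * h)) ** p for k in range(n)) * h
    return total ** (1.0 / p)

approx = lp_norm_function(lambda x: x, 0.0, 1.0, 2)
print(approx, 3 ** -0.5)  # both approximately 0.57735
```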

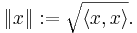

Any inner product induces in a natural way the norm

Other examples of infinite dimensional normed vector spaces can be found in the Banach space article.

Properties

The concept of unit circle (the set of all vectors of norm 1) differs from norm to norm: in ℝ², the unit circle of the 1-norm is a square with its vertices on the coordinate axes, the unit circle of the 2-norm (Euclidean norm) is the familiar round circle, and the unit circle of the infinity norm is a square with its sides parallel to the axes. For any p-norm it is a superellipse (with congruent axes). See the accompanying illustration. Because of the norm axioms, the unit ball (the set of all vectors of norm at most 1) is always convex and centrally symmetric; therefore, for example, the unit ball may be a rectangle but cannot be a triangle.

In terms of the vector space, the seminorm defines a topology on the space, and this is a Hausdorff topology precisely when the seminorm can distinguish between distinct vectors, which is again equivalent to the seminorm being a norm.

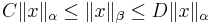

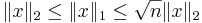

Two norms ||•||α and ||•||β on a vector space V are called equivalent if there exist positive real numbers C and D such that

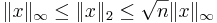

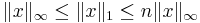

for all x in V. On a finite-dimensional vector space all norms are equivalent. For instance, the  ,

,  , and

, and  norms are all equivalent on

norms are all equivalent on  :

:
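The equivalence constants for the 1-, 2- and infinity norms on ℝⁿ can be spot-checked numerically. This sketch samples random vectors in ℝ⁵ (the dimension, sample count, seed and tolerance are arbitrary choices) and asserts all six inequalities, allowing a tiny slack for floating-point rounding.

```python
import math
import random

TOL = 1e-12  # slack for floating-point rounding

def norm1(x):
    return sum(abs(t) for t in x)

def norm2(x):
    return math.sqrt(sum(t * t for t in x))

def norm_inf(x):
    return max(abs(t) for t in x)

def le(a, b):
    """a <= b up to rounding."""
    return a <= b + TOL

random.seed(0)
n = 5
for _ in range(10_000):
    x = [random.uniform(-1.0, 1.0) for _ in range(n)]
    assert le(norm2(x), norm1(x)) and le(norm1(x), math.sqrt(n) * norm2(x))
    assert le(norm_inf(x), norm2(x)) and le(norm2(x), math.sqrt(n) * norm_inf(x))
    assert le(norm_inf(x), norm1(x)) and le(norm1(x), n * norm_inf(x))
print("all three pairs of inequalities held")
```

The constants are sharp: the all-ones vector attains the upper bounds, and a vector with a single non-zero entry attains the lower ones.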

Equivalent norms define the same notions of continuity and convergence and, for many purposes, do not need to be distinguished. More precisely, equivalent norms define the same uniform structure on the vector space: the identity map is a uniform isomorphism between the two normed spaces.

Every seminorm, and in particular every norm, is a sublinear function, which implies that it is a convex function. As a result, finding a global optimum of a norm-based objective function is often tractable.
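Convexity follows directly from the triangle inequality and positive homogeneity: for all x, y in V and 0 ≤ t ≤ 1,

$$p\big(tx + (1-t)y\big) \le p(tx) + p\big((1-t)y\big) = t\,p(x) + (1-t)\,p(y).$$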

Given a finite family of seminorms p1, ..., pn on a vector space, the sum

$$p(x) := \sum_{i=1}^{n} p_i(x)$$

is again a seminorm.

For any norm p on a vector space V, we have that for all u and v ∈ V:

- p(u ± v) ≥ | p(u) − p(v) |
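This reverse triangle inequality follows from the triangle inequality applied to u = (u − v) + v:

$$p(u) \le p(u - v) + p(v) \quad\Longrightarrow\quad p(u) - p(v) \le p(u - v).$$

Exchanging u and v and using p(v − u) = |−1| p(u − v) = p(u − v) gives |p(u) − p(v)| ≤ p(u − v); replacing v by −v, which satisfies p(−v) = p(v), gives the case with a plus sign.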

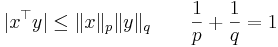

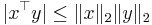

A special case of the above property is the Cauchy-Schwarz inequality:[2]
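Hölder's inequality for conjugate exponents p and q = p/(p − 1), with the Cauchy-Schwarz inequality as the case p = q = 2, can be spot-checked on random real vectors. This is a numerical illustration, not a proof; the exponents, seed and vector length are arbitrary choices, and the helper names are hypothetical.

```python
import random

def p_norm(x, p):
    """(sum |x_i|^p)^(1/p) for p >= 1."""
    return sum(abs(t) ** p for t in x) ** (1.0 / p)

def dot(x, y):
    """x^T y for real vectors."""
    return sum(a * b for a, b in zip(x, y))

random.seed(1)
worst = 0.0  # largest observed ratio |x^T y| / (||x||_p * ||y||_q)
for p in (1.5, 2.0, 3.0):
    q = p / (p - 1.0)  # conjugate exponent, so that 1/p + 1/q = 1
    for _ in range(1000):
        x = [random.gauss(0.0, 1.0) for _ in range(4)]
        y = [random.gauss(0.0, 1.0) for _ in range(4)]
        ratio = abs(dot(x, y)) / (p_norm(x, p) * p_norm(y, q))
        worst = max(worst, ratio)
print(worst)  # does not exceed 1, up to rounding
```

For p = q = 2, equality holds when y is a scalar multiple of x, for instance x = (1, 2) and y = (2, 4).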

Classification of seminorms: Absolutely convex absorbing sets

All seminorms on a vector space V can be classified in terms of absolutely convex absorbing sets in V. To each such set, A, corresponds a seminorm pA called the gauge of A, defined as

- pA(x) := inf{α : α > 0, x ∈ α A}

with the property that

- {x : pA(x) < 1} ⊆ A ⊆ {x : pA(x) ≤ 1}.
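The gauge can be approximated numerically when membership in A can be tested. This sketch assumes a membership oracle `in_A` and uses bisection on α; bisection is valid here because, for a convex balanced absorbing set, x lies in αA exactly when x/α lies in A, and this holds for all α above the gauge. The function names, the starting upper bound and the iteration count are hypothetical choices. For A the closed square [−1, 1]², the gauge should be the maximum norm.

```python
def gauge(in_A, x, hi=1e6, iters=100):
    """Approximate p_A(x) = inf{alpha > 0 : x in alpha * A} by bisection.

    Assumes A is absolutely convex and absorbing, that in_A tests membership
    in A, and that x lies in hi * A to begin with.
    """
    lo = 0.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if in_A([t / mid for t in x]):
            hi = mid  # x is in mid * A, so the infimum is at most mid
        else:
            lo = mid
    return hi

def in_square(y):
    """Membership test for the closed square [-1, 1]^2."""
    return max(abs(t) for t in y) <= 1.0

print(gauge(in_square, [3.0, -1.5]))  # approximately 3.0 = max(|3|, |-1.5|)
```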

Conversely, if a norm p is given and A is its open (or closed) unit ball, then A is an absolutely convex absorbing set, and p = pA.

Any locally convex topological vector space has a local basis consisting of absolutely convex absorbing sets. A common method to construct such a basis is to use a family of seminorms. Typically this family is infinite, and there are enough seminorms to distinguish between elements of the vector space, creating a Hausdorff space.

Notes

- [1] Except in ℝ¹, where it coincides with the Euclidean norm, and ℝ⁰, where it is trivial.

- [2] Golub, Gene; Van Loan, Charles F. (1996). Matrix Computations (3rd ed.). Baltimore: The Johns Hopkins University Press. p. 53. ISBN 0-8018-5413-X.

References

Bourbaki, N. (1987). Topological Vector Spaces, Chapters 1-5. Elements of Mathematics. Springer. ISBN 3-540-13627-4.

See also

- Asymmetric norm, a generalization of a norm for which ||x|| and ||−x|| are not necessarily equal.

- Matrix norm

- Mahalanobis distance

- Manhattan distance

- Relation of norms and metrics, a translation invariant and homogeneous metric can be used to define a norm.

- Normed vector space