Inner product space

In mathematics, an inner product space is a vector space with the additional structure of an inner product. This additional structure associates each pair of vectors in the space with a scalar quantity known as the inner product of the vectors. Inner products allow the rigorous introduction of intuitive geometrical notions such as the length of a vector or the angle between two vectors. They also provide the means of defining orthogonality between vectors (zero inner product). Inner product spaces generalize Euclidean spaces (in which the inner product is the dot product, also known as the scalar product) to vector spaces of any (possibly infinite) dimension, and are studied in functional analysis.

An inner product space is sometimes also called a pre-Hilbert space, since its completion with respect to the metric induced by its inner product is a Hilbert space.

Inner product spaces were referred to as unitary spaces in earlier work, although this terminology is now rarely used.


Definition

In the following article, the field of scalars denoted F is either the field of real numbers R or the field of complex numbers C. See below.

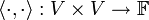

Formally, an inner product space is a vector space V over the field F together with a positive-definite sesquilinear form, called an inner product. For real vector spaces, this is actually a positive-definite symmetric bilinear form. Thus the inner product is a map

satisfying the following axioms for all  :

:

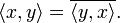

- Conjugate symmetry:

- This condition implies that

, because

, because  .

.

- (Conjugation is also often written with an asterisk, as in

, as is the conjugate transpose.)

, as is the conjugate transpose.)

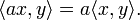

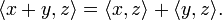

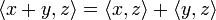

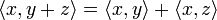

- Linearity in the first variable:

- By combining these with conjugate symmetry, we get:

- So

is actually a sesquilinear form.

is actually a sesquilinear form.

- Positivity:

-

for all

for all  .

.

- (This makes sense because

for all

for all  .)

.)

- Definiteness:

-

implies

implies  .

.

- Hence, the inner product is a positive-definite Hermitian form.

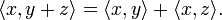

- The property of an inner product space

that

that

and

and  is known as additivity.

is known as additivity.

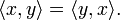

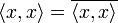

- Note that if F=R, then the conjugate symmetry property is simply symmetry of the inner product, i.e.,

- In this case, sesquilinearity becomes standard bilinearity.

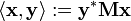

Remark: In the more abstract linear algebra literature, the conjugate-linear argument of the inner product is conventionally put in the second position (e.g., y in $\langle x, y \rangle$), as we have done above. This convention is reversed in both physics and matrix algebra; in those respective disciplines we would write the product $\langle x, y \rangle$ as $\langle y | x \rangle$ (the bra-ket notation of quantum mechanics) and $y^\dagger x$ (dot product as a case of the convention of forming the matrix product AB as the dot products of rows of A with columns of B). Here the kets and columns are identified with the vectors of V and the bras and rows with the dual vectors or linear functionals of the dual space V*, with conjugacy associated with duality. This reverse order is now occasionally followed in the more abstract literature, e.g., Emch [1972], taking $\langle x, y \rangle$ to be conjugate linear in x rather than y. A few instead find a middle ground by recognizing both $\langle \cdot, \cdot \rangle$ and $\langle \cdot | \cdot \rangle$ as distinct notations differing only in which argument is conjugate linear.
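The two conventions can be compared concretely on $\mathbb{C}^n$ with its standard inner product. The plain-Python sketch below is illustrative only; the function names `inner_math` and `braket` are hypothetical. It shows that both conventions compute the same number with the arguments swapped, and that each is linear in a different argument.

```python
def inner_math(x, y):
    """Convention used above: linear in x, conjugate-linear in y."""
    return sum(a * b.conjugate() for a, b in zip(x, y))


def braket(x, y):
    """Bra-ket convention <x|y>: conjugate-linear in x, linear in y."""
    return sum(a.conjugate() * b for a, b in zip(x, y))


x = [1 + 2j, 3j]
y = [2 - 1j, 1 + 1j]
c = 2j  # a scalar

# <x, y> in the convention above equals <y|x> in bra-ket notation.
print(inner_math(x, y), braket(y, x))  # (3+8j) (3+8j)

# Scaling the first argument: linear in one convention,
# conjugate-linear in the other.
cx = [c * a for a in x]
print(inner_math(cx, y) == c * inner_math(x, y))      # True
print(braket(cx, y) == c.conjugate() * braket(x, y))  # True
```

The sample vectors have small Gaussian-integer entries, so the complex arithmetic is exact and `==` comparisons are safe here.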

There are various technical reasons why it is necessary to restrict the base field to R and C in the definition. Briefly, the base field has to contain an ordered subfield (in order for non-negativity to make sense) and therefore has to have characteristic equal to 0. This immediately excludes finite fields. The base field also has to have additional structure, such as a distinguished automorphism (complex conjugation in the case of C). More generally, any quadratically closed subfield of R or C will suffice for this purpose, e.g., the algebraic numbers, but when it is a proper subfield (i.e., neither R nor C) even finite-dimensional inner product spaces will fail to be metrically complete. In contrast, all finite-dimensional inner product spaces over R or C, such as those used in quantum computation, are automatically metrically complete and hence Hilbert spaces.

In some cases we need to consider positive semi-definite sesquilinear forms. This means that $\langle x, x \rangle$ is only required to be non-negative, and the definiteness axiom is dropped. We show how to treat these below.

Examples

A trivial example is the real numbers with the standard multiplication as the inner product

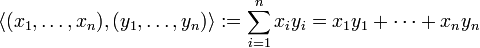

More generally any Euclidean space Rn with the dot product is an inner product space

The general form of an inner product on $\mathbb{C}^n$ is given by
$$\langle x, y \rangle = y^* M x,$$
with M any Hermitian positive-definite matrix, and $y^*$ the conjugate transpose of y. In the real case, M is symmetric positive-definite, and this corresponds to the dot product of the two vectors after each has been scaled by positive factors along mutually orthogonal directions (the eigenvectors of M). Apart from an orthogonal transformation, it is a weighted-sum version of the dot product, with positive weights.
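The formula can be checked numerically. The following plain-Python sketch assumes one particular 2 × 2 Hermitian positive-definite matrix M, chosen only for illustration, and a hypothetical helper `inner_M`. It tests conjugate symmetry, linearity in the first argument and positivity on sample vectors.

```python
# Illustrative matrix (an assumption, not from the text): Hermitian,
# since M[i][j] == conj(M[j][i]), and positive-definite, since its
# eigenvalues are 1 and 3.
M = [[2, 1j],
     [-1j, 2]]


def inner_M(x, y):
    """Return y* M x = sum over i, j of conj(y[i]) * M[i][j] * x[j]."""
    n = len(x)
    return sum(y[i].conjugate() * M[i][j] * x[j]
               for i in range(n) for j in range(n))


x = [1 + 1j, 2 - 1j]
y = [3j, 1]
a = 1 - 2j  # a scalar

# Conjugate symmetry: <x, y> == conj(<y, x>)
print(inner_M(x, y) == inner_M(y, x).conjugate())
# Linearity in the first argument: <a x, y> == a <x, y>
print(inner_M([a * t for t in x], y) == a * inner_M(x, y))
# Positivity: <x, x> is real and positive for this non-zero x
print(inner_M(x, x))
```

Here $\langle x, x \rangle = 20$, which lies between $1 \cdot 7$ and $3 \cdot 7$, where 7 is the squared Euclidean length of x and 1 and 3 are the eigenvalues of M. Replacing M with a complex symmetric but non-Hermitian matrix would break conjugate symmetry. That is why M must be Hermitian in the complex case.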

The article on Hilbert space has several examples of inner product spaces wherein the metric induced by the inner product yields a complete metric space. An example of an inner product which induces an incomplete metric occurs with the space C[a, b] of continuous complex-valued functions on the interval [a, b]. The inner product is
$$\langle f, g \rangle := \int_a^b f(t) \, \overline{g(t)} \, dt.$$

This space is not complete. Consider, for example, on the interval [−1, 1] the sequence of continuous "step" functions $\{f_k\}_k$ where

- $f_k(t)$ is 0 for t in the subinterval [−1, 0]

- $f_k(t)$ is 1 for t in the subinterval [1/k, 1]

- $f_k$ is affine in [0, 1/k]

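This sequence is a Cauchy sequence for the metric induced by the inner product: $f_k$ and $f_m$ differ only on $[0, \max(1/k, 1/m)]$, where $|f_k - f_m| \leq 1$, so $\int_{-1}^{1} |f_k(t) - f_m(t)|^2 \, dt \leq \max(1/k, 1/m)$. However, it does not converge to a continuous function. Its mean-square limit would be the discontinuous function equal to 0 on [−1, 0] and 1 on (0, 1].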
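The shrinking distances can be observed numerically. The sketch below approximates the integral with a midpoint rule; the quadrature rule, the sample count `n` and the helper names are choices made only for this illustration.

```python
import math


def f(k, t):
    """f_k(t): 0 on [-1, 0], k*t on [0, 1/k], 1 on [1/k, 1]."""
    if t <= 0:
        return 0.0
    return min(k * t, 1.0)


def l2_distance(k, m, n=100_000):
    """Approximate sqrt(integral over [-1, 1] of |f_k - f_m|^2) by the midpoint rule."""
    h = 2.0 / n
    total = 0.0
    for i in range(n):
        t = -1.0 + (i + 0.5) * h  # midpoint of the i-th subinterval
        d = f(k, t) - f(m, t)
        total += d * d * h
    return math.sqrt(total)


for k, m in [(10, 20), (100, 200), (1000, 2000)]:
    print(k, m, l2_distance(k, m))
```

For m = 2k, a direct computation gives $\int_{-1}^{1} |f_k - f_{2k}|^2 \, dt = 1/(12k)$, so the printed distances should shrink like $1/\sqrt{12k}$. The pointwise limit, by contrast, jumps at t = 0.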

Norms on inner product spaces

Inner product spaces have a naturally defined norm

This is well defined by the nonnegativity axiom of the definition of inner product space. The norm is thought of as the length of the vector x. Directly from the axioms, we can prove the following:

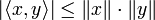

- Cauchy-Schwarz inequality: for x, y elements of V

- with equality if and only if x and y are linearly dependent. This is one of the most important inequalities in mathematics. It is also known in the Russian mathematical literature as the Cauchy-Bunyakowski-Schwarz inequality.

- Because of its importance, its short proof should be noted.

-

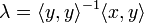

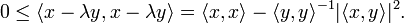

- It is trivial to prove the inequality true in the case y = 0. Thus we assume <y, y> is nonzero, giving us the following:

-

- The complete proof can be obtained by multiplying out this result.

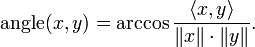

- Orthogonality: The geometric interpretation of the inner product in terms of angle and length, motivates much of the geometric terminology we use in regard to these spaces. Indeed, an immediate consequence of the Cauchy-Schwarz inequality is that it justifies defining the angle between two non-zero vectors x and y (at least in the case F = R) by the identity

- We assume the value of the angle is chosen to be in the interval [0, +π]. This is in analogy to the situation in two-dimensional Euclidean space. Correspondingly, we will say that non-zero vectors x, y of V are orthogonal if and only if their inner product is zero.

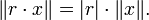

- Homogeneity: for x an element of V and r a scalar

- The homogeneity property is completely trivial to prove.

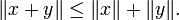

- Triangle inequality: for x, y elements of V

- The last two properties show the function defined is indeed a norm.

- Because of the triangle inequality and because of axiom 2, we see that ||·|| is a norm which turns V into a normed vector space and hence also into a metric space. The most important inner product spaces are the ones which are complete with respect to this metric; they are called Hilbert spaces. Every inner product V space is a dense subspace of some Hilbert space. This Hilbert space is essentially uniquely determined by V and is constructed by completing V.

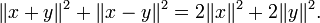

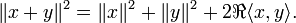

- Parallelogram law: for x, y elements of V,

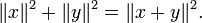

- Pythagorean theorem: Whenever x, y are in V and <x, y> = 0, then

- The proofs of both of these identities require only expressing the definition of norm in terms of the inner product and multiplying out, using the property of additivity of each component. Alternatively, both can be seen as consequences of the identity

- which is a form of the law of cosines, and is proved as before.

- The name Pythagorean theorem arises from the geometric interpretation of this result as an analogue of the theorem in synthetic geometry. Note that the proof of the Pythagorean theorem in synthetic geometry is considerably more elaborate because of the paucity of underlying structure. In this sense, the synthetic Pythagorean theorem, if correctly demonstrated, is deeper than the version given above.

- An easy induction on the Pythagorean theorem yields:

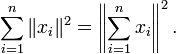

- If x1, ..., xn are orthogonal vectors, that is, <xj, xk> = 0 for distinct indices j, k, then

- In view of the Cauchy-Schwarz inequality, we also note that $\langle \cdot, \cdot \rangle$ is continuous from V × V to F. This allows us to extend Pythagoras' theorem to infinitely many summands:

- Parseval's identity: Suppose V is a complete inner product space. If $\{x_k\}$ are mutually orthogonal vectors in V, then
  $$\left\| \sum_{i=1}^{\infty} x_i \right\|^2 = \sum_{i=1}^{\infty} \|x_i\|^2,$$
  provided the infinite series on the right is convergent. Completeness of the space is needed to ensure that the sequence of partial sums
  $$S_k = \sum_{i=1}^{k} x_i,$$
  which is a Cauchy sequence because $\|S_m - S_k\|^2 = \sum_{i=k+1}^{m} \|x_i\|^2$ for $m > k$, is convergent.
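The inequalities and identities listed above can be spot-checked numerically. The following plain-Python sketch assumes the standard inner product on $\mathbb{C}^n$ and a few arbitrary sample vectors. The helper names `inner`, `norm` and `combine` are hypothetical. It illustrates the statements on examples rather than proving them.

```python
import math


def inner(x, y):
    """Standard inner product on C^n: linear in x, conjugate-linear in y."""
    return sum(a * b.conjugate() for a, b in zip(x, y))


def norm(x):
    """||x|| = sqrt(<x, x>); <x, x> is real and non-negative."""
    return math.sqrt(inner(x, x).real)


def combine(x, y, s=1):
    """Return x + s*y componentwise."""
    return [a + s * b for a, b in zip(x, y)]


x = [1 + 2j, -1j, 3]
y = [2 - 1j, 4, 1j]
tol = 1e-9

# Cauchy-Schwarz inequality
assert abs(inner(x, y)) <= norm(x) * norm(y) + tol
# Homogeneity with a complex scalar r
r = 2 - 3j
assert abs(norm([r * a for a in x]) - abs(r) * norm(x)) < tol
# Triangle inequality
assert norm(combine(x, y)) <= norm(x) + norm(y) + tol
# Parallelogram law
lhs = norm(combine(x, y)) ** 2 + norm(combine(x, y, -1)) ** 2
assert abs(lhs - (2 * norm(x) ** 2 + 2 * norm(y) ** 2)) < tol
# Law of cosines form: ||x + y||^2 = ||x||^2 + ||y||^2 + 2 Re<x, y>
cos_law = norm(x) ** 2 + norm(y) ** 2 + 2 * inner(x, y).real
assert abs(norm(combine(x, y)) ** 2 - cos_law) < tol
# Pythagorean theorem for an orthogonal pair
p, q = [1, 1j], [1j, 1]
assert inner(p, q) == 0
assert abs(norm(combine(p, q)) ** 2 - (norm(p) ** 2 + norm(q) ** 2)) < tol
# Angle between two non-zero real vectors, taken in [0, pi]
u, v = [1.0, 0.0], [1.0, 1.0]
angle = math.acos(inner(u, v) / (norm(u) * norm(v)))
print(angle, math.pi / 4)
```

All assertions pass for these samples. The last line prints the angle between u and v next to π/4, and the two values agree up to rounding.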

Orthonormal sequences

A sequence $\{e_k\}_k$ is orthonormal if and only if it is orthogonal and each $e_k$ has norm 1. An orthonormal basis for a finite-dimensional inner product space V is an orthonormal sequence whose algebraic span is V. This definition of orthonormal basis does not generalise conveniently to the case of infinite dimensions, where the concept (properly formulated) is of major importance. Using the norm associated to the inner product, one has the notion of a dense subset, and the appropriate definition of orthonormal basis is that the algebraic span (the subspace of finite linear combinations of basis vectors) should be dense.

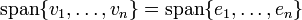

The Gram-Schmidt process is a canonical procedure that takes a linearly independent sequence {vk}k on an inner product space and produces an orthonormal sequence {ek}k such that for each n
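A minimal sketch of the procedure on $\mathbb{C}^n$ follows, using the standard inner product. It uses the so-called modified Gram-Schmidt ordering: projections are subtracted from the running remainder w instead of from the original v. This ordering is equivalent in exact arithmetic and usually better behaved in floating point. The function names, the tolerance `eps` and the sample vectors are illustrative assumptions.

```python
import math


def inner(x, y):
    """Standard inner product on C^n: linear in x, conjugate-linear in y."""
    return sum(a * b.conjugate() for a, b in zip(x, y))


def gram_schmidt(vs, eps=1e-12):
    """Orthonormalize linearly independent vectors vs (modified Gram-Schmidt).

    For every k, span{e_1, ..., e_k} equals span{v_1, ..., v_k}.
    """
    es = []
    for v in vs:
        w = list(v)
        for e in es:
            # Remove the component of w along the unit vector e.
            c = inner(w, e)
            w = [wi - c * ei for wi, ei in zip(w, e)]
        n = math.sqrt(inner(w, w).real)
        if n < eps:
            raise ValueError("input vectors are (numerically) linearly dependent")
        es.append([wi / n for wi in w])
    return es


vs = [[1, 1j, 0], [1, 0, 1], [0, 1, 1]]
es = gram_schmidt(vs)

# The Gram matrix <e_j, e_k> should be the identity (up to rounding).
for ej in es:
    print([round(abs(inner(ej, ek)), 12) for ek in es])
```

Because each $e_k$ is built from $v_k$ minus a combination of earlier $e_j$, the span condition above holds by construction. Feeding in linearly dependent vectors triggers the `ValueError` branch.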

By the Gram-Schmidt orthonormalization process, one shows:

Theorem. Any separable inner product space V has an orthonormal basis.

Parseval's identity leads immediately to the following theorem:

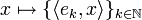

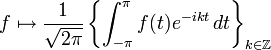

Theorem. Let V be a separable inner product space and {ek}k an orthonormal basis of V. Then the map

is an isometric linear map V → l2 with a dense image.

This theorem can be regarded as an abstract form of Fourier series, in which an arbitrary orthonormal basis plays the role of the sequence of trigonometric polynomials. Note that the underlying index set can be taken to be any countable set (and in fact any set whatsoever, provided $\ell^2$ is defined appropriately, as is explained in the article Hilbert space). In particular, we obtain the following result in the theory of Fourier series:

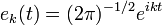

Theorem. Let V be the inner product space ![C[-\pi,\pi]](/2009-wikipedia_en_wp1-0.7_2009-05/I/8844ac48264a6497b40d9944f38b443b.png) . Then the sequence (indexed on set of all integers) of continuous functions

. Then the sequence (indexed on set of all integers) of continuous functions

is an orthonormal basis of the space ![C[-\pi,\pi]](/2009-wikipedia_en_wp1-0.7_2009-05/I/8844ac48264a6497b40d9944f38b443b.png) with the L2 inner product. The mapping

with the L2 inner product. The mapping

is an isometric linear map with dense image.

Orthogonality of the sequence $\{e_k\}_k$ follows immediately from the fact that if k ≠ j, then
$$\langle e_k, e_j \rangle = \frac{1}{2\pi} \int_{-\pi}^{\pi} e^{i (k - j) t} \, dt = 0.$$

Normality of the sequence is by design; that is, the coefficients are chosen so that the norm comes out to 1. Finally, the algebraic span of the sequence is dense in the inner product norm. This follows from the fact that the span is dense in the space of continuous periodic functions on $[-\pi, \pi]$ with the uniform norm, because $\|f\| \leq \sqrt{2\pi} \, \sup_t |f(t)|$. This is the content of the Weierstrass theorem on the uniform density of trigonometric polynomials.
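Orthonormality can also be checked numerically. The sketch below approximates $\langle f, g \rangle = \int_{-\pi}^{\pi} f(t) \overline{g(t)} \, dt$ with an N-point midpoint rule; N = 1000 is an arbitrary choice. On a full period this rule integrates $e^{int}$ exactly (up to rounding) whenever $|n| < N$, so the check is reliable for small indices. The helper names are hypothetical.

```python
import cmath
import math

N = 1000
h = 2 * math.pi / N
ts = [-math.pi + (m + 0.5) * h for m in range(N)]  # midpoints on [-pi, pi]


def e(k):
    """The function e_k(t) = exp(i k t) / sqrt(2 pi)."""
    return lambda t: cmath.exp(1j * k * t) / math.sqrt(2 * math.pi)


def inner(f, g):
    """Midpoint-rule approximation of the integral of f(t) * conj(g(t))."""
    return sum(f(t) * g(t).conjugate() for t in ts) * h


# Gram matrix of e_{-2}, ..., e_2; it should be the identity up to rounding.
for j in range(-2, 3):
    print([round(abs(inner(e(j), e(k))), 12) for k in range(-2, 3)])
```

The printed rows form the 5 × 5 identity matrix, matching the orthogonality computation and the choice of normalizing factor $1/\sqrt{2\pi}$.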

Operators on inner product spaces

Several types of linear maps A from an inner product space V to an inner product space W are of relevance:

- Continuous linear maps, i.e. A is linear and continuous with respect to the metric defined above, or equivalently, A is linear and the set of non-negative reals {||Ax||}, where x ranges over the closed unit ball of V, is bounded.

- Symmetric linear operators, i.e. W = V, A is linear, and <Ax, y> = <x, Ay> for all x, y in V.

- Isometries, i.e. A is linear and <Ax, Ay> = <x, y> for all x, y in V, or equivalently, A is linear and ||Ax|| = ||x|| for all x in V. All isometries are injective. Isometries are morphisms between inner product spaces, and morphisms of real inner product spaces are orthogonal transformations (compare with orthogonal matrix).

- Isometrical isomorphisms, i.e. A is an isometry which is surjective (and hence bijective). Isometrical isomorphisms are also known as unitary operators (compare with unitary matrix).
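Two of these operator types can be illustrated on $\mathbb{C}^2$ with the standard inner product. In the following sketch, the matrices U (unitary) and H (Hermitian, hence symmetric in the sense above) are example choices made only for this illustration, as are the helper names.

```python
import math


def inner(x, y):
    """Standard inner product on C^n: linear in x, conjugate-linear in y."""
    return sum(a * b.conjugate() for a, b in zip(x, y))


def apply(A, x):
    """Matrix-vector product A x."""
    return [sum(A[i][j] * x[j] for j in range(len(x))) for i in range(len(A))]


s = 1 / math.sqrt(2)
U = [[s, 1j * s],
     [1j * s, s]]      # unitary: U* U = I, so U is an isometrical isomorphism
H = [[2, 1 - 1j],
     [1 + 1j, 3]]      # Hermitian: H[i][j] == conj(H[j][i])

x = [1 + 2j, -3j]
y = [2, 1 - 1j]

# Isometry: <Ux, Uy> == <x, y> (up to rounding, since U has irrational entries)
print(abs(inner(apply(U, x), apply(U, y)) - inner(x, y)) < 1e-12)
# Symmetric operator: <Hx, y> == <x, Hy> (exact here: Gaussian-integer data)
print(inner(apply(H, x), y) == inner(x, apply(H, y)))
```

Both lines print `True`. Replacing H with a non-Hermitian matrix generally makes the second comparison fail.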

From the point of view of inner product space theory, there is no need to distinguish between two spaces which are isometrically isomorphic. The spectral theorem provides a canonical form for symmetric, unitary and more generally normal operators on finite dimensional inner product spaces. A generalization of the spectral theorem holds for continuous normal operators in Hilbert spaces.

Degenerate inner products

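If V is a vector space and $\langle \cdot, \cdot \rangle$ a positive semi-definite sesquilinear form, then the function $\|x\| = \langle x, x \rangle^{1/2}$ makes sense and satisfies all the properties of a norm except that $\|x\| = 0$ does not imply x = 0. (Such a functional is then called a semi-norm.) The Cauchy-Schwarz inequality remains valid in this setting, so $\|x\| = 0$ implies $\langle x, y \rangle = 0$ for all y. Hence $N = \{x : \|x\| = 0\}$ is a linear subspace. We can produce an inner product space by considering the quotient $W = V/N$. The sesquilinear form $\langle \cdot, \cdot \rangle$ factors through W.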

This construction is used in numerous contexts. The Gelfand-Naimark-Segal construction is a particularly important example of the use of this technique. Another example is the representation of semi-definite kernels on arbitrary sets.
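A minimal sketch of the quotient construction follows, assuming the illustrative positive semi-definite form $\langle x, y \rangle = x_0 y_0$ on $\mathbb{R}^2$ (not taken from the text). For this form, N is the line spanned by (0, 1), and W = V/N can be identified with R through $[x] \mapsto x_0$. The helper names are hypothetical.

```python
def form(x, y):
    """Positive semi-definite (not definite) form on R^2: <x, y> = x_0 * y_0."""
    return x[0] * y[0]


def seminorm(x):
    """||x|| = <x, x>^(1/2); vanishes on the whole line x_0 == 0."""
    return form(x, x) ** 0.5


x = (3.0, 5.0)
y = (2.0, -1.0)
n = (0.0, 7.0)  # a non-zero vector with seminorm 0, i.e. n lies in N

print(seminorm(n) == 0.0 and n != (0.0, 0.0))  # True: ||.|| is only a semi-norm

# The form depends only on the coset x + N, so it is well defined on W = V/N.
x_shifted = (x[0] + n[0], x[1] + n[1])
print(form(x_shifted, y) == form(x, y))        # True


def class_of(v):
    """Identify the coset v + N in W with the real number v_0."""
    return v[0]


# On W (identified with R), the induced form is ordinary multiplication,
# which is positive definite.
print(class_of(x) * class_of(y) == form(x, y))  # True
```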

See also

- Outer product

- Exterior algebra

- Bilinear form

- Dual space

- Dual pair

- Biorthogonal system

- Fubini-Study metric

- Energetic space

References

- Axler, Sheldon (1997), Linear Algebra Done Right (2nd ed.), Berlin, New York: Springer-Verlag, ISBN 978-0-387-98258-8

- Emch, Gerard G. (1972), Algebraic methods in statistical mechanics and quantum field theory, Wiley-Interscience, ISBN 978-0-471-23900-0

- Young, Nicholas (1988), An introduction to Hilbert space, Cambridge University Press, ISBN 978-0-521-33717-5
