Finite difference

A finite difference is a mathematical expression of the form f(x + b) − f(x + a). If a finite difference is divided by b − a, one gets a difference quotient. The approximation of derivatives by finite differences plays a central role in finite difference methods for the numerical solution of differential equations, especially boundary value problems.

In mathematical analysis, operators involving finite differences are studied. A difference operator is an operator which maps a function f to a function whose values are the corresponding finite differences.


Forward, backward, and central differences

Only three forms are commonly considered: forward, backward, and central differences.

A forward difference is an expression of the form

$$\Delta_h[f](x) = f(x + h) - f(x).$$

Depending on the application, the spacing h may be variable or held constant.

A backward difference uses the function values at x and x − h, instead of the values at x + h and x:

$$\nabla_h[f](x) = f(x) - f(x - h).$$

Finally, the central difference is given by

$$\delta_h[f](x) = f(x + \tfrac12 h) - f(x - \tfrac12 h).$$
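As a minimal sketch, the three differences can be written as plain Python functions. The function names are illustrative and not taken from any library; the central difference is taken over the half-steps x ± h/2.

```python
from typing import Callable


def forward_difference(f: Callable[[float], float], x: float, h: float) -> float:
    """Forward difference: f(x + h) - f(x)."""
    return f(x + h) - f(x)


def backward_difference(f: Callable[[float], float], x: float, h: float) -> float:
    """Backward difference: f(x) - f(x - h)."""
    return f(x) - f(x - h)


def central_difference(f: Callable[[float], float], x: float, h: float) -> float:
    """Central difference: f(x + h/2) - f(x - h/2)."""
    return f(x + h / 2) - f(x - h / 2)


def square(t: float) -> float:
    return t * t


# Example values for f(t) = t^2 at x = 1 with spacing h = 0.5.
print(forward_difference(square, 1.0, 0.5))
print(backward_difference(square, 1.0, 0.5))
print(central_difference(square, 1.0, 0.5))
```

None of these functions divides by h; dividing the result by h gives the corresponding difference quotient.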

Relation with derivatives

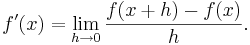

The derivative of a function f at a point x is defined by the limit

$$f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}.$$

If h has a fixed (non-zero) value, instead of approaching zero, then the right-hand side is

$$\frac{f(x + h) - f(x)}{h} = \frac{\Delta_h[f](x)}{h}.$$

Hence, the forward difference divided by h approximates the derivative when h is small. The error in this approximation can be derived from Taylor's theorem. Assuming that f is twice continuously differentiable, the error is

$$\frac{\Delta_h[f](x)}{h} - f'(x) = O(h) \quad (h \to 0).$$

The same formula holds for the backward difference:

$$\frac{\nabla_h[f](x)}{h} - f'(x) = O(h).$$

However, the central difference yields a more accurate approximation. Its error is proportional to the square of the spacing (if f is three times continuously differentiable):

$$\frac{\delta_h[f](x)}{h} - f'(x) = O(h^{2}).$$
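The error orders can be checked numerically. The sketch below estimates the observed order p in "error ≈ C·h^p" by comparing the error at step h with the error at step h/2. The helper names and the choice of sin as a test function are assumptions made for illustration.

```python
import math
from typing import Callable

Func = Callable[[float], float]


def forward_quotient(f: Func, x: float, h: float) -> float:
    """Forward difference divided by h."""
    return (f(x + h) - f(x)) / h


def backward_quotient(f: Func, x: float, h: float) -> float:
    """Backward difference divided by h."""
    return (f(x) - f(x - h)) / h


def central_quotient(f: Func, x: float, h: float) -> float:
    """Central difference (over x - h/2, x + h/2) divided by h."""
    return (f(x + h / 2) - f(x - h / 2)) / h


def observed_order(quotient, f: Func, exact: float, x: float, h: float) -> float:
    """Estimate p from the ratio of the errors at steps h and h/2."""
    error_h = abs(quotient(f, x, h) - exact)
    error_half = abs(quotient(f, x, h / 2) - exact)
    return math.log2(error_h / error_half)


# Test function sin with known derivative cos, evaluated at x = 1.
exact = math.cos(1.0)
for name, q in [("forward", forward_quotient),
                ("backward", backward_quotient),
                ("central", central_quotient)]:
    print(name, observed_order(q, math.sin, exact, 1.0, 1e-2))
```

For a smooth function and a moderate step such as h = 0.01, the printed orders should be close to 1 for the one-sided quotients and close to 2 for the central quotient. For very small h, rounding error dominates and the estimate becomes unreliable.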

Higher-order differences

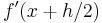

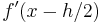

In an analogous way one can obtain finite difference approximations to higher-order derivatives and differential operators. For example, by using the above central difference formula for $f'(x + h/2)$ and $f'(x - h/2)$ and applying a central difference formula for the derivative of $f'$ at x, we obtain the central difference approximation of the second derivative of f:

$$f''(x) \approx \frac{\delta_h^2[f](x)}{h^2} = \frac{f(x+h) - 2 f(x) + f(x-h)}{h^{2}}.$$
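The intermediate steps of this construction can be written out as follows. This is a sketch of the argument, with $\delta_h$ denoting the central difference over the half-steps $x \pm h/2$:

```latex
\begin{aligned}
f''(x) &\approx \frac{f'(x + \tfrac12 h) - f'(x - \tfrac12 h)}{h} \\
       &\approx \frac{1}{h}\left( \frac{f(x+h) - f(x)}{h} - \frac{f(x) - f(x-h)}{h} \right) \\
       &= \frac{f(x+h) - 2 f(x) + f(x-h)}{h^{2}} .
\end{aligned}
```

In the second line, $f'(x + \tfrac12 h)$ is replaced by the central difference quotient centered at $x + \tfrac12 h$, and $f'(x - \tfrac12 h)$ by the one centered at $x - \tfrac12 h$.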

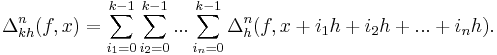

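More generally, the nth-order forward, backward, and central differences are respectively given by:

$$\Delta_h^n[f](x) = \sum_{i = 0}^{n} (-1)^i \binom{n}{i} f(x + (n - i) h),$$

$$\nabla_h^n[f](x) = \sum_{i = 0}^{n} (-1)^i \binom{n}{i} f(x - ih),$$

$$\delta_h^n[f](x) = \sum_{i = 0}^{n} (-1)^i \binom{n}{i} f\left(x + \left(\frac{n}{2} - i\right) h\right).$$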
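The binomial sums for the nth-order differences translate directly into code. The following sketch uses illustrative function names and the standard-library `math.comb` for the binomial coefficients.

```python
from math import comb


def forward_difference_n(f, x, h, n):
    """nth-order forward difference: sum of (-1)^i C(n,i) f(x + (n - i) h)."""
    return sum((-1) ** i * comb(n, i) * f(x + (n - i) * h) for i in range(n + 1))


def backward_difference_n(f, x, h, n):
    """nth-order backward difference: sum of (-1)^i C(n,i) f(x - i h)."""
    return sum((-1) ** i * comb(n, i) * f(x - i * h) for i in range(n + 1))


def central_difference_n(f, x, h, n):
    """nth-order central difference: sum of (-1)^i C(n,i) f(x + (n/2 - i) h)."""
    return sum((-1) ** i * comb(n, i) * f(x + (n / 2 - i) * h) for i in range(n + 1))


def cube(t):
    return t ** 3


# The third difference of t^3 with h = 1 is 3! = 6; the fourth vanishes.
print(forward_difference_n(cube, 0, 1, 3))
print(forward_difference_n(cube, 0, 1, 4))
```

For odd n the central variant evaluates f at half-integer multiples of h, because `n / 2` is then not an integer.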

Note that for odd $n$ the central difference has $h$ multiplied by non-integers. If this is a problem (usually it is), it may be remedied by taking the average of $\delta_h^n[f](x - h/2)$ and $\delta_h^n[f](x + h/2)$.

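The relationship of these higher-order differences with the respective derivatives is straightforward:

$$\frac{d^n f}{d x^n}(x) = \frac{\Delta_h^n[f](x)}{h^n} + O(h) = \frac{\nabla_h^n[f](x)}{h^n} + O(h) = \frac{\delta_h^n[f](x)}{h^n} + O(h^2).$$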

Higher-order differences can also be used to construct better approximations. As mentioned above, the first-order difference approximates the first-order derivative up to a term of order h. However, the combination

$$\frac{\Delta_h[f](x) - \frac12 \Delta_h^2[f](x)}{h} = - \frac{f(x+2h) - 4 f(x+h) + 3 f(x)}{2h}$$

approximates f'(x) up to a term of order $h^2$. This can be proven by expanding the above expression in Taylor series, or by using the calculus of finite differences, explained below.
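A sketch of the Taylor-series argument, assuming f is three times continuously differentiable near x:

```latex
\begin{aligned}
f(x+h)  &= f(x) + h f'(x) + \tfrac12 h^2 f''(x) + O(h^3), \\
f(x+2h) &= f(x) + 2h f'(x) + 2 h^2 f''(x) + O(h^3), \\
-f(x+2h) + 4 f(x+h) - 3 f(x) &= 2h f'(x) + O(h^3), \\
-\frac{f(x+2h) - 4 f(x+h) + 3 f(x)}{2h} &= f'(x) + O(h^2).
\end{aligned}
```

The terms in $f(x)$ and $f''(x)$ cancel in the third line, which is what raises the accuracy from first to second order.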

If necessary, the finite difference can be centered about any point by mixing forward, backward, and central differences.

Properties

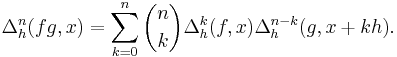

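- For all positive k and n:

$$\Delta_{kh}^n[f](x) = \sum_{i_1=0}^{k-1} \sum_{i_2=0}^{k-1} \cdots \sum_{i_n=0}^{k-1} \Delta_h^n[f]\left(x + i_1 h + i_2 h + \cdots + i_n h\right).$$

- Leibniz rule:

$$\Delta_h^n[fg](x) = \sum_{k=0}^{n} \binom{n}{k} \Delta_h^k[f](x) \, \Delta_h^{n-k}[g](x + kh).$$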
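The Leibniz rule for forward differences can be checked numerically. The sketch below assumes the rule in its standard form, in which the nth forward difference of fg at x equals the sum over k of C(n,k) · Δ^k f(x) · Δ^(n−k) g(x + kh). Function names are illustrative, and integer-valued polynomials are used so that the comparison is exact.

```python
from math import comb


def forward_difference_n(f, x, h, n):
    """nth-order forward difference of f at x with step h (n = 0 returns f(x))."""
    return sum((-1) ** i * comb(n, i) * f(x + (n - i) * h) for i in range(n + 1))


def leibniz_rhs(f, g, x, h, n):
    """Right-hand side of the assumed Leibniz rule for forward differences."""
    return sum(
        comb(n, k)
        * forward_difference_n(f, x, h, k)
        * forward_difference_n(g, x + k * h, h, n - k)
        for k in range(n + 1)
    )


def f(t):
    return t * t + 1


def g(t):
    return t ** 3 - t


def product(t):
    return f(t) * g(t)


# Compare both sides for several orders at x = 2 with step h = 1.
for n in range(5):
    print(n, forward_difference_n(product, 2, 1, n), leibniz_rhs(f, g, 2, 1, n))
```

Note the shifted argument x + kh in the factor involving g. Without the shift the identity does not hold, unlike the Leibniz rule for derivatives.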

Finite difference methods

An important application of finite differences is in numerical analysis, especially in the numerical solution of ordinary and partial differential equations. The idea is to replace the derivatives appearing in the differential equation by finite differences that approximate them. The resulting methods are called finite difference methods.

Common applications of the finite difference method are in computational science and engineering disciplines, such as thermal engineering, fluid mechanics, etc.
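As an illustration of the idea, the following sketch solves the boundary value problem −u″ = f on (0, 1) with u(0) = u(1) = 0 by replacing u″ with the central second difference. The model problem, the function name `solve_poisson_1d`, and the use of the Thomas algorithm for the resulting tridiagonal system are choices made for this example, not something prescribed by the text.

```python
import math


def solve_poisson_1d(f, n):
    """Approximate -u'' = f on (0, 1), u(0) = u(1) = 0, with n interior points.

    Discretization: (-u[i-1] + 2 u[i] - u[i+1]) / h^2 = f(x[i]).
    Returns the grid points and the approximate solution, boundaries included.
    """
    h = 1.0 / (n + 1)
    xs = [i * h for i in range(n + 2)]
    diag = [2.0] * n  # main diagonal; both off-diagonals are -1
    rhs = [h * h * f(xs[i + 1]) for i in range(n)]

    # Forward elimination (Thomas algorithm for a tridiagonal system).
    for i in range(1, n):
        m = -1.0 / diag[i - 1]   # lower entry divided by the previous pivot
        diag[i] -= m * (-1.0)    # subtract m times the upper entry
        rhs[i] -= m * rhs[i - 1]

    # Back substitution; the upper entry is -1, hence the plus sign.
    u = [0.0] * n
    u[-1] = rhs[-1] / diag[-1]
    for i in range(n - 2, -1, -1):
        u[i] = (rhs[i] + u[i + 1]) / diag[i]

    return xs, [0.0] + u + [0.0]


# Right-hand side chosen so that the exact solution is sin(pi x).
xs, us = solve_poisson_1d(lambda x: math.pi ** 2 * math.sin(math.pi * x), 99)
print(max(abs(u - math.sin(math.pi * x)) for x, u in zip(xs, us)))
```

Since the central second difference has error of order h², the maximum error should shrink by roughly a factor of four each time the grid spacing is halved.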

Calculus of finite differences

The forward difference can be considered as a difference operator, which maps the function f to $\Delta_h[f]$. This operator satisfies

$$\Delta_h = T_h - I,$$

where $T_h$ is the shift operator with step $h$, defined by $T_h[f](x) = f(x+h)$, and $I$ is the identity operator.

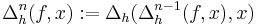

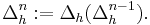

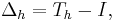

Finite differences of higher orders can be defined recursively as $\Delta_h^n[f](x) = \Delta_h\left[\Delta_h^{n-1}[f]\right](x)$ or, in operator notation, $\Delta_h^n = \Delta_h \Delta_h^{n-1}$. Another possible (and equivalent) definition is $\Delta^n_h = [T_h - I]^n$.
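The operator point of view can be mirrored in code by treating operators as functions that map functions to functions. In this sketch, `shift`, `forward` and `power` are hypothetical helper names: `forward(h)` builds the operator "shift by h minus identity", and `power(op, n)` applies an operator n times, matching the recursive definition.

```python
def shift(h):
    """Return the shift operator with step h: it maps f to x -> f(x + h)."""
    def apply_shift(f):
        return lambda x: f(x + h)
    return apply_shift


def forward(h):
    """Return the forward difference operator, built as shift minus identity."""
    shift_h = shift(h)

    def apply_delta(f):
        shifted = shift_h(f)
        return lambda x: shifted(x) - f(x)
    return apply_delta


def power(op, n):
    """Return the n-fold composition of the operator op (n = 0 is the identity)."""
    def apply_power(f):
        for _ in range(n):
            f = op(f)
        return f
    return apply_power


def cube(t):
    return t ** 3


third_difference = power(forward(1), 3)
print(third_difference(cube)(0))
```

Applying `forward(h)` three times gives the same value as the binomial-sum formula for the third-order forward difference, which is what the equivalence of the two definitions asserts.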

The difference operator $\Delta_h$ is linear and satisfies the Leibniz rule. Similar statements hold for the backward and central differences.

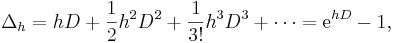

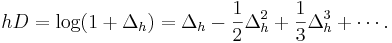

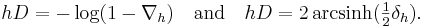

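Taylor's theorem can now be expressed by the formula

$$\Delta_h = hD + \frac{1}{2} h^2 D^2 + \frac{1}{3!} h^3 D^3 + \cdots = e^{hD} - I,$$

where D denotes the derivative operator, mapping f to its derivative f'. Formally inverting the exponential suggests that

$$hD = \log(I + \Delta_h) = \Delta_h - \frac{1}{2} \Delta_h^2 + \frac{1}{3} \Delta_h^3 - \cdots.$$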

This formula holds in the sense that both operators give the same result when applied to a polynomial. Even for analytic functions, the series on the right is not guaranteed to converge; it may be an asymptotic series. However, it can be used to obtain more accurate approximations for the derivative. For instance, retaining the first two terms of the series yields the second-order approximation to $f'(x)$ mentioned at the end of the section Higher-order differences.
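The claim that the series is exact on polynomials can be illustrated with exact rational arithmetic. The sketch below assumes the logarithmic series hD = Δ − Δ²/2 + Δ³/3 − … and truncates it after a given number of terms. For a polynomial of degree d, all differences of order above d vanish, so d terms suffice. Function names are illustrative.

```python
from fractions import Fraction
from math import comb


def forward_difference_n(f, x, h, n):
    """nth-order forward difference of f at x with step h."""
    return sum((-1) ** i * comb(n, i) * f(x + (n - i) * h) for i in range(n + 1))


def derivative_from_series(f, x, h, terms):
    """Truncation of (Delta - Delta^2/2 + Delta^3/3 - ...) / h after `terms` terms."""
    total = sum(
        Fraction((-1) ** (k + 1), k) * forward_difference_n(f, x, h, k)
        for k in range(1, terms + 1)
    )
    return total / h


def cube(t):
    return t ** 3


x, h = Fraction(2), Fraction(1, 2)
# The exact derivative of t^3 at t = 2 is 12.
print(derivative_from_series(cube, x, h, 2))  # two terms: second-order approximation
print(derivative_from_series(cube, x, h, 3))  # three terms: exact for a cubic
```

With two terms the result is the one-sided second-order approximation, which is close to but not equal to 12 for this step size. With three terms the series has already terminated for a cubic and the value is exact.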

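The analogous formulas for the backward and central difference operators are

$$hD = -\log(I - \nabla_h) \quad \text{and} \quad hD = 2 \, \operatorname{arsinh}\left(\tfrac12 \delta_h\right).$$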

The calculus of finite differences is related to the umbral calculus in combinatorics.

Generalizations

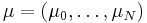

A generalized finite difference is usually defined as

$$\Delta_h^\mu[f](x) = \sum_{k=0}^N \mu_k f(x+kh),$$

where $\mu = (\mu_0, \ldots, \mu_N)$ is its coefficient vector. An infinite difference is a further generalization, where the finite sum above is replaced by an infinite series. Another way of generalization is making the coefficients $\mu_k$ depend on the point $x$: $\mu_k = \mu_k(x)$, thus considering a weighted finite difference. Also one may make the step $h$ depend on the point $x$: $h = h(x)$. Such generalizations are useful for constructing different moduli of continuity.
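A generalized finite difference with a fixed coefficient vector is a weighted sum of shifted function values, as in this minimal sketch. The function name and the example coefficient vectors are illustrative.

```python
def generalized_difference(f, x, h, mu):
    """Weighted sum of mu[k] * f(x + k h) over the coefficient vector mu."""
    return sum(m * f(x + k * h) for k, m in enumerate(mu))


def square(t):
    return t * t


# mu = (-1, 1) reproduces the ordinary forward difference.
print(generalized_difference(square, 1.0, 0.5, [-1, 1]))

# mu = (1, -2, 1) reproduces the second-order forward difference.
print(generalized_difference(square, 0, 1, [1, -2, 1]))

# mu = (-3/2, 2, -1/2), divided by h, is the one-sided second-order
# approximation of the first derivative.
print(generalized_difference(square, 1.0, 0.5, [-1.5, 2.0, -0.5]) / 0.5)
```

Coefficients or a step that depend on the point x could be modeled by passing callables instead of constants; that variant is not shown here.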

Finite difference in several variables

Finite differences can be considered in more than one variable. They are analogous to partial derivatives in several variables.
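As a sketch of the two-variable case, a partial difference varies one argument while holding the other fixed, and a mixed difference quotient approximates a mixed partial derivative. The function names and the particular centered formula are assumptions chosen for illustration. Unlike the half-step central difference used earlier, the offsets here are ±h and ±k.

```python
def partial_forward_x(f, x, y, h):
    """Forward difference in the first variable: f(x + h, y) - f(x, y)."""
    return f(x + h, y) - f(x, y)


def partial_forward_y(f, x, y, k):
    """Forward difference in the second variable: f(x, y + k) - f(x, y)."""
    return f(x, y + k) - f(x, y)


def mixed_central_quotient(f, x, y, h, k):
    """Centered approximation of the mixed partial derivative of f at (x, y)."""
    return (
        f(x + h, y + k) - f(x + h, y - k) - f(x - h, y + k) + f(x - h, y - k)
    ) / (4 * h * k)


def product(x, y):
    return x * y


# For f(x, y) = x y the mixed partial derivative is 1 everywhere.
print(mixed_central_quotient(product, 1.0, 2.0, 0.5, 0.25))
```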

See also

- Taylor series

- Numerical differentiation

- Five-point stencil

- Divided differences

- Modulus of continuity

- Time scale calculus

References

- William F. Ames, Numerical Methods for Partial Differential Equations, Section 1.6. Academic Press, New York, 1977. ISBN 0-12-056760-1.

- Francis B. Hildebrand, Finite-Difference Equations and Simulations, Section 2.2, Prentice-Hall, Englewood Cliffs, New Jersey, 1968.

- Boole, George, A Treatise On The Calculus of Finite Differences, 2nd ed., Macmillan and Company, 1872. [See also: Dover edition 1960].

- Robert D. Richtmyer and K. W. Morton, Difference Methods for Initial Value Problems, 2nd ed., Wiley, New York, 1967.
