Natural number

In mathematics, a natural number (also called counting number) can mean either an element of the set {1, 2, 3, ...} (the positive integers) or an element of the set {0, 1, 2, 3, ...} (the non-negative integers). The latter is especially preferred in mathematical logic, set theory, and computer science.

Natural numbers have two main purposes: they can be used for counting ("there are 3 apples on the table"), and they can be used for ordering ("this is the 3rd largest city in the country").

Properties of the natural numbers related to divisibility, such as the distribution of prime numbers, are studied in number theory. Problems concerning counting, such as Ramsey theory, are studied in combinatorics.


History of natural numbers and the status of zero

The natural numbers had their origins in the words used to count things, beginning with the number one.

The first major advance in abstraction was the use of numerals to represent numbers. This allowed systems to be developed for recording large numbers. For example, the Babylonians developed a powerful place-value system based essentially on the numerals for 1 and 10. The ancient Egyptians had a system of numerals with distinct hieroglyphs for 1, 10, and all the powers of 10 up to one million. A stone carving from Karnak, dating from around 1500 BC and now at the Louvre in Paris, depicts 276 as 2 hundreds, 7 tens, and 6 ones; and similarly for the number 4,622.

A much later advance in abstraction was the development of the idea of zero as a number with its own numeral. A zero digit had been used in place-value notation as early as 700 BC by the Babylonians, but they omitted it when it would have been the last symbol in the number.[1] The Olmec and Maya civilizations independently developed and used zero as a separate number as early as the 1st century BC, but this usage did not spread beyond Mesoamerica. The concept as used in modern times originated with the Indian mathematician Brahmagupta in 628. Nevertheless, medieval computists (calculators of Easter), beginning with Dionysius Exiguus in 525, used zero as a number without using a Roman numeral to write it; instead they employed nullus, the Latin word for "nothing". The first systematic study of numbers as abstractions (that is, as abstract entities) is usually credited to the Greek philosophers Pythagoras and Archimedes. However, independent studies also occurred at around the same time in India, China, and Mesoamerica.

In the nineteenth century, a set-theoretical definition of natural numbers was developed. With this definition, it was convenient to include zero (corresponding to the empty set) as a natural number. Including zero in the natural numbers is now the common convention among set theorists, logicians and computer scientists. Other mathematicians, such as number theorists, have kept the older tradition of taking 1 to be the first natural number. Sometimes the set of natural numbers with zero included is called the set of whole numbers.

Notation

Mathematicians use N, or N in blackboard bold (ℕ in Unicode), to refer to the set of all natural numbers. This set is countably infinite: it is infinite, and it is countable by definition, because a set is called countable when it can be put into one-to-one correspondence with a subset of ℕ. This is also expressed by saying that the cardinal number of the set is aleph-null (ℵ₀).

To make clear whether zero is included, a subscript "0" is sometimes added when it is, and a superscript "*" when it is not:

ℕ₀ = { 0, 1, 2, ... } ;  ℕ* = { 1, 2, ... }.

(Sometimes a subscript or superscript "+" is added to signify "positive". However, in other contexts, at least in European literature, "+" is often used for "nonnegative", as in ℝ+ = [0, ∞) and ℤ+ = { 0, 1, 2, ... }. The notation "*", by contrast, is standard for nonzero (or rather invertible) elements.)

Some authors who exclude zero from the naturals use the term whole numbers, with its own symbol, for the set of nonnegative integers; others introduce a dedicated symbol for the positive integers.

Set theorists often denote the set of all natural numbers by a lower-case Greek letter omega: ω. This stems from the identification of an ordinal number with the set of ordinals that are smaller. When this notation is used, zero is explicitly included as a natural number.

Algebraic properties

| | addition | multiplication |
|---|---|---|
| closure: | a + b is a natural number | a × b is a natural number |
| associativity: | a + (b + c) = (a + b) + c | a × (b × c) = (a × b) × c |
| commutativity: | a + b = b + a | a × b = b × a |
| existence of an identity element: | a + 0 = a | a × 1 = a |
| distributivity: | a × (b + c) = (a × b) + (a × c) | |
| no zero divisors: | if ab = 0, then either a = 0 or b = 0 (or both) | |

Properties

One can recursively define an addition on the natural numbers by setting a + 0 = a and a + S(b) = S(a + b) for all a, b, where S(b) denotes the successor of b (see the Peano axioms below). This turns the natural numbers (ℕ, +) into a commutative monoid with identity element 0, the so-called free monoid with one generator. This monoid satisfies the cancellation property and can therefore be embedded in a group; the smallest group containing the natural numbers is the integers.

If we define 1 := S(0), then b + 1 = b + S(0) = S(b + 0) = S(b). That is, b + 1 is simply the successor of b.
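
The recursive definition of addition can be sketched in Python. This is a minimal illustration under an assumed encoding, not a standard library: 0 is represented by the empty tuple `()` and S(n) by the one-element tuple `(n,)`, and the helper names `S`, `add`, `from_int` and `to_int` are hypothetical.

```python
# Minimal sketch (assumed encoding): 0 is the empty tuple () and S(n) is (n,).
ZERO = ()


def S(n):
    """Successor function: wrap n one level deeper."""
    return (n,)


def add(a, b):
    """Recursive addition: a + 0 = a and a + S(b) = S(a + b)."""
    if b == ZERO:
        return a
    (b_pred,) = b  # b = S(b_pred)
    return S(add(a, b_pred))


def from_int(k):
    """Build the numeral S(S(...S(0)...)) with k applications of S."""
    n = ZERO
    for _ in range(k):
        n = S(n)
    return n


def to_int(n):
    """Count how many times S was applied to reach n."""
    count = 0
    while n != ZERO:
        (n,) = n
        count += 1
    return count


ONE = S(ZERO)  # 1 := S(0)

# b + 1 is simply the successor of b.
three = from_int(3)
assert add(three, ONE) == S(three)
print(to_int(add(from_int(2), three)))  # 5
```

Because `add` only inspects whether its second argument is 0 or a successor, it follows the two defining equations line by line; its recursion depth grows with b, so it is only suitable for small numbers.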

Analogously, given that addition has been defined, a multiplication × can be defined via a × 0 = 0 and a × S(b) = (a × b) + a. This turns (N*, ×) into a free commutative monoid with identity element 1; a generator set for this monoid is the set of prime numbers. Addition and multiplication are compatible, which is expressed in the distribution law: a × (b + c) = (a × b) + (a × c). These properties of addition and multiplication make the natural numbers an instance of a commutative semiring. Semirings are an algebraic generalization of the natural numbers where multiplication is not necessarily commutative.

If the natural numbers are instead taken to exclude 0 and start at 1, the definitions of + and × are as above, except that the recursions start with a + 1 = S(a) and a × 1 = a.

For the remainder of the article, we write ab to indicate the product a × b, and we also assume the standard order of operations.

Furthermore, one defines a total order on the natural numbers by writing a ≤ b if and only if there exists a natural number c with a + c = b. This order is compatible with the arithmetical operations in the following sense: if a, b and c are natural numbers and a ≤ b, then a + c ≤ b + c and ac ≤ bc. An important property of the natural numbers is that they are well-ordered: every non-empty set of natural numbers has a least element. The rank among well-ordered sets is expressed by an ordinal number; for the natural numbers this is expressed as ω.

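The definition of ≤ and the well-ordering property can be illustrated with Python's built-in non-negative integers standing in for the natural numbers (an assumption of this sketch). The hypothetical function `leq` searches for the witness c directly, and the hypothetical `least_element` finds the least member of a set described by a predicate by counting upward from 0.

```python
def leq(a: int, b: int) -> bool:
    """a ≤ b iff some natural number c satisfies a + c = b.

    Any such c is at most b, so it suffices to search c = 0, 1, ..., b.
    """
    return any(a + c == b for c in range(b + 1))


def least_element(member) -> int:
    """Return the least natural number n with member(n) true.

    Scanning 0, 1, 2, ... terminates whenever the set {n : member(n)} is
    non-empty; well-ordering says such a least element always exists.
    """
    n = 0
    while not member(n):
        n += 1
    return n


# Compatibility with the operations: a ≤ b implies a + c ≤ b + c and ac ≤ bc.
for a in range(6):
    for b in range(6):
        for c in range(6):
            if leq(a, b):
                assert leq(a + c, b + c) and leq(a * c, b * c)

print(leq(3, 7), leq(7, 3))                 # True False
print(least_element(lambda n: n * n > 50))  # 8
```

Note that `least_element` loops forever if the predicate holds for no natural number; well-ordering only guarantees a least element for non-empty sets.
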
While it is in general not possible to divide one natural number by another and get a natural number as result, the procedure of division with remainder is available as a substitute: for any two natural numbers a and b with b ≠ 0 we can find natural numbers q and r such that

- a = bq + r and r < b.

The number q is called the quotient and r is called the remainder of the division of a by b. The numbers q and r are uniquely determined by a and b. This result, known as the division algorithm, is key to several other properties (such as divisibility), algorithms (such as the Euclidean algorithm), and ideas in number theory.
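
Division with remainder and the Euclidean algorithm can be sketched as follows, again using Python's non-negative integers as a stand-in for the natural numbers. The names `divide_with_remainder` and `gcd` are illustrative; the repeated-subtraction loop mirrors the statement a = bq + r, r < b, rather than aiming for efficiency (Python's built-in `divmod` computes the same pair for these inputs).

```python
def divide_with_remainder(a: int, b: int) -> tuple[int, int]:
    """Return (q, r) with a = b*q + r and r < b, for natural numbers a and b != 0.

    Repeatedly subtracting b is the most literal reading of the statement,
    not an efficient method.
    """
    if b == 0:
        raise ZeroDivisionError("b must be nonzero")
    q, r = 0, a
    while r >= b:
        r -= b
        q += 1
    return q, r


def gcd(a: int, b: int) -> int:
    """Euclidean algorithm: gcd(a, 0) = a, and gcd(a, b) = gcd(b, r)
    where r is the remainder of the division of a by b."""
    while b != 0:
        _, r = divide_with_remainder(a, b)
        a, b = b, r
    return a


print(divide_with_remainder(17, 5))  # (3, 2)
print(gcd(48, 18))                   # 6
```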

The natural numbers including zero form a commutative monoid under addition (with identity element zero), and under multiplication (with identity element one).

Generalizations

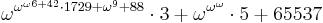

Two generalizations of natural numbers arise from the two uses:

- A natural number can be used to express the size of a finite set; more generally, a cardinal number is a measure of the size of a set that is also suitable for infinite sets. This refers to a concept of "size" such that if there is a bijection between two sets, they have the same size. The set of natural numbers itself, and any other countably infinite set, has cardinality aleph-null (ℵ₀).

- Ordinal numbers "first", "second", "third" can be assigned to the elements of a totally ordered finite set, and also to the elements of well-ordered countably infinite sets like the set of natural numbers itself. This can be generalized to ordinal numbers, which describe the position of an element in a well-ordered set in general. An ordinal number is also used to describe the "size" of a well-ordered set, in a sense different from cardinality: if there is an order isomorphism between two well-ordered sets, they have the same ordinal number. The first ordinal number that is not a natural number is expressed as ω; this is also the ordinal number of the set of natural numbers itself.

The numbers ℵ₀ and ω have to be distinguished because many well-ordered sets with cardinal number ℵ₀ have a higher ordinal number than ω, for example ω + 1 (the natural numbers followed by one further element); ω is the lowest possible value (the initial ordinal).

For finite well-ordered sets, there is a one-to-one correspondence between ordinal and cardinal numbers; therefore they can both be expressed by the same natural number, the number of elements of the set. This number can also be used to describe the position of an element in a larger finite, or an infinite, sequence.

Other generalizations are discussed in the article on numbers.

Formal definitions

Historically, the precise mathematical definition of the natural numbers developed with some difficulty. The Peano postulates state conditions that any successful definition must satisfy. Certain constructions show that, given set theory, models of the Peano postulates must exist.

Peano axioms

- There is a natural number 0.

- Every natural number a has a natural number successor, denoted by S(a).

- There is no natural number whose successor is 0.

- Distinct natural numbers have distinct successors: if a ≠ b, then S(a) ≠ S(b).

- If a property is possessed by 0 and also by the successor of every natural number which possesses it, then it is possessed by all natural numbers. (This postulate ensures that the proof technique of mathematical induction is valid.)
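
As an illustration, the Peano axioms can be checked against an inductive type in Lean 4. This is a sketch with a hypothetical type `MyNat` (Lean's own `Nat` is defined the same way): the constructors `zero` and `succ` supply the first two axioms, and the remaining three are proved from the way Lean treats inductive types.

```lean
-- Hypothetical model of the Peano axioms; `zero` and `succ` give axioms 1 and 2.
inductive MyNat where
  | zero : MyNat
  | succ : MyNat → MyNat

-- Axiom 3: there is no natural number whose successor is 0.
theorem succ_ne_zero (n : MyNat) : MyNat.succ n ≠ MyNat.zero := by
  intro h
  cases h

-- Axiom 4, in contrapositive form: equal successors come from equal numbers.
theorem succ_inj {a b : MyNat} (h : MyNat.succ a = MyNat.succ b) : a = b :=
  MyNat.succ.inj h

-- Axiom 5: induction.
theorem induction_principle (P : MyNat → Prop)
    (h0 : P MyNat.zero)
    (hs : ∀ n, P n → P (MyNat.succ n)) : ∀ n, P n := by
  intro n
  induction n with
  | zero => exact h0
  | succ k ih => exact hs k ih
```

The induction theorem merely restates the recursor that Lean generates for `MyNat`, which is why its proof needs no further work.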

The "0" in the above definition need not correspond to what we normally consider to be the number zero: "0" simply means some object that, combined with an appropriate successor function, satisfies the Peano axioms. All systems that satisfy these axioms are isomorphic; the name "0" is used here for the first element, which is the only element that is not a successor. For example, the natural numbers starting with one also satisfy the axioms.

Constructions based on set theory

A standard construction

A standard construction in set theory, a special case of the von Neumann ordinal construction, is to define the natural numbers as follows:

- We set 0 := { }, the empty set,

- and define S(a) = a ∪ {a} for every set a. S(a) is the successor of a, and S is called the successor function.

- If the axiom of infinity holds, then the set of all natural numbers exists and is the intersection of all sets containing 0 which are closed under this successor function.

- If the set of all natural numbers exists, then it satisfies the Peano axioms.

- Each natural number is then equal to the set of natural numbers less than it, so that

  - 0 = { }
  - 1 = {0} = {{ }}
  - 2 = {0, 1} = {0, {0}} = {{ }, {{ }}}
  - 3 = {0, 1, 2} = {0, {0}, {0, {0}}} = {{ }, {{ }}, {{ }, {{ }}}}
  - and in general n = {0, 1, 2, ..., n−2, n−1} = {0, 1, 2, ..., n−2} ∪ {n−1} = (n−1) ∪ {n−1}.

- When a natural number is used as a set, this is typically what is meant. Under this definition, there are exactly n elements (in the naïve sense) in the set n, and n ≤ m (in the naïve sense) if and only if n is a subset of m.

- Also, with this definition, different possible interpretations of notations like Rⁿ (n-tuples versus mappings of n into R) coincide.

- Even if the axiom of infinity fails and the set of all natural numbers does not exist, it is possible to define what it means to be one of these sets: a set n is a natural number if it is either 0 (empty) or a successor, and each of its elements is either 0 or the successor of another of its elements.
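
The construction can be mirrored with Python's immutable `frozenset`, under the assumption that finite sets are enough to illustrate it (the axiom of infinity has no finite counterpart). The helper names are hypothetical, and `is_natural` is a literal transcription of the criterion in the last item above, applied to finite sets.

```python
EMPTY = frozenset()


def successor(a: frozenset) -> frozenset:
    """S(a) = a ∪ {a}."""
    return a | frozenset([a])


def von_neumann(n: int) -> frozenset:
    """The natural number n as a set: 0 = { } and n + 1 = S(n)."""
    a = EMPTY
    for _ in range(n):
        a = successor(a)
    return a


def is_natural(x: frozenset) -> bool:
    """x is 0 or a successor, and each element of x is 0 or the successor
    of another element of x. (If x = S(z), then z is an element of x,
    so searching the elements of x for z suffices.)"""
    def zero_or_successor_of_element(y):
        return y == EMPTY or any(successor(z) == y for z in x)

    return zero_or_successor_of_element(x) and all(
        zero_or_successor_of_element(y) for y in x
    )


three = von_neumann(3)
print(len(three))                                            # 3
print(three == frozenset(von_neumann(k) for k in range(3)))  # True: 3 = {0, 1, 2}
print(von_neumann(2) <= von_neumann(5))                      # True: 2 ⊆ 5
print(is_natural(three), is_natural(frozenset([frozenset([EMPTY])])))  # True False
```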

Other constructions

Although the standard construction is useful, it is not the only possible construction. For example, one could define 0 = { } and S(a) = {a}, producing

- 0 = { }
- 1 = {0} = {{ }}
- 2 = {1} = {{{ }}}, etc.

Or one could even define 0 = {{ }} and S(a) = a ∪ {a}, producing

- 0 = {{ }}
- 1 = {{ }, 0} = {{ }, {{ }}}
- 2 = {{ }, 0, 1}, etc.
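
The two alternative encodings can be compared with the same frozenset-based approach (the first is commonly known as Zermelo's construction). In this sketch, with hypothetical names `build`, `zermelo` and `shifted`, the resulting sets differ, for instance in how many elements each number has, yet distinct numbers still receive distinct sets, which is what matters for satisfying the Peano axioms.

```python
EMPTY = frozenset()


def build(n, zero, succ):
    """Apply the successor function `succ` to `zero` n times."""
    a = zero
    for _ in range(n):
        a = succ(a)
    return a


def zermelo(n):
    """First alternative: 0 = { } and S(a) = {a}."""
    return build(n, EMPTY, lambda a: frozenset([a]))


def shifted(n):
    """Second alternative: 0 = {{ }} and S(a) = a ∪ {a}."""
    return build(n, frozenset([EMPTY]), lambda a: a | frozenset([a]))


# Both encodings give distinct sets for distinct numbers,
# even though the sets themselves look different.
for encode in (zermelo, shifted):
    values = [encode(k) for k in range(10)]
    assert len(set(values)) == len(values)

print(len(zermelo(5)), len(shifted(5)))  # 1 6
```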

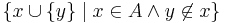

Arguably the oldest set-theoretic definition of the natural numbers is the definition commonly ascribed to Frege and Russell, under which each concrete natural number n is defined as the set of all sets with n elements. This may appear circular, but it can be made rigorous with care. Define 0 as {∅} (clearly the set of all sets with 0 elements) and define σ(A) (for any set A) as {x ∪ {y} | x ∈ A ∧ y ∉ x} (see set-builder notation). Then 0 will be the set of all sets with 0 elements, 1 = σ(0) will be the set of all sets with 1 element, 2 = σ(1) will be the set of all sets with 2 elements, and so forth. The set of all natural numbers can be defined as the intersection of all sets containing 0 as an element and closed under σ (that is, if the set contains an element n, it also contains σ(n)). This definition does not work in the usual systems of axiomatic set theory because the collections involved are too large (it will not work in any set theory with the axiom of separation); but it does work in New Foundations (and in related systems known to be consistent) and in some systems of type theory.

Notes


